This is the official repository of RoboFusion.

🔥 Our work has been accepted by IJCAI 2024!

🔥 Contributions:

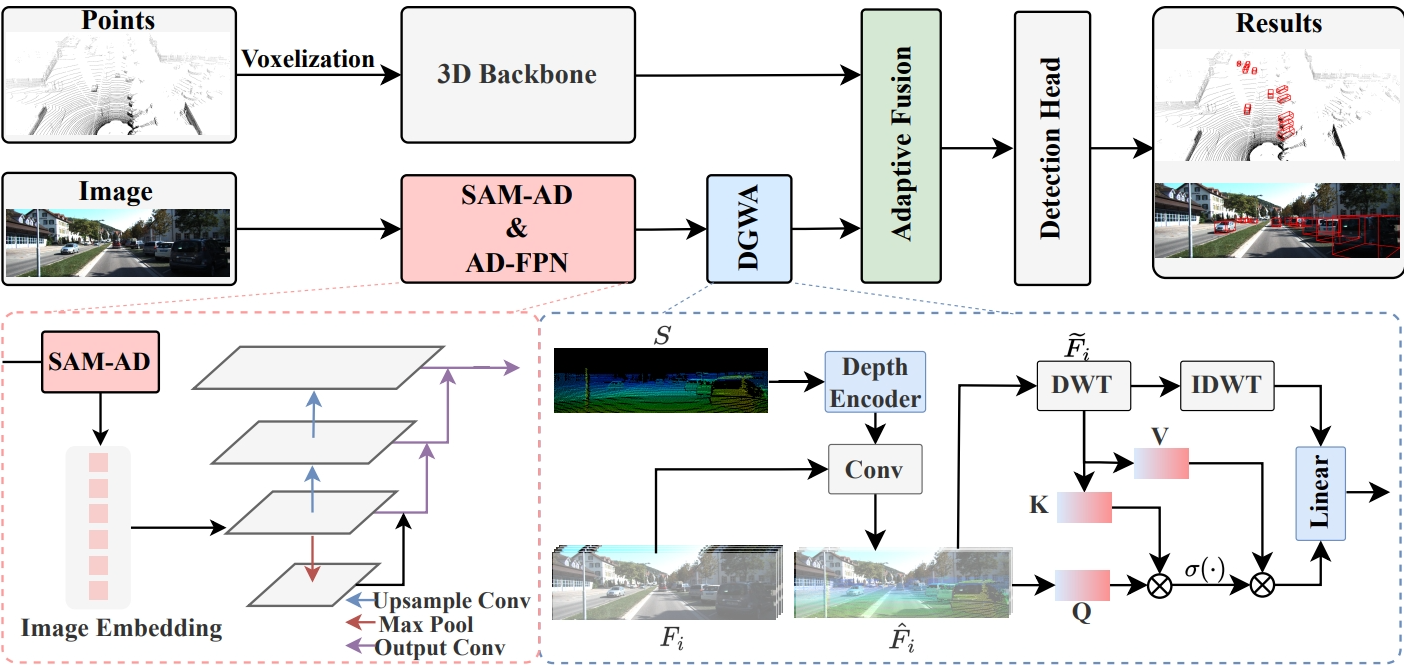

- We utilize features extracted from SAM rather than its inferred segmentation results.

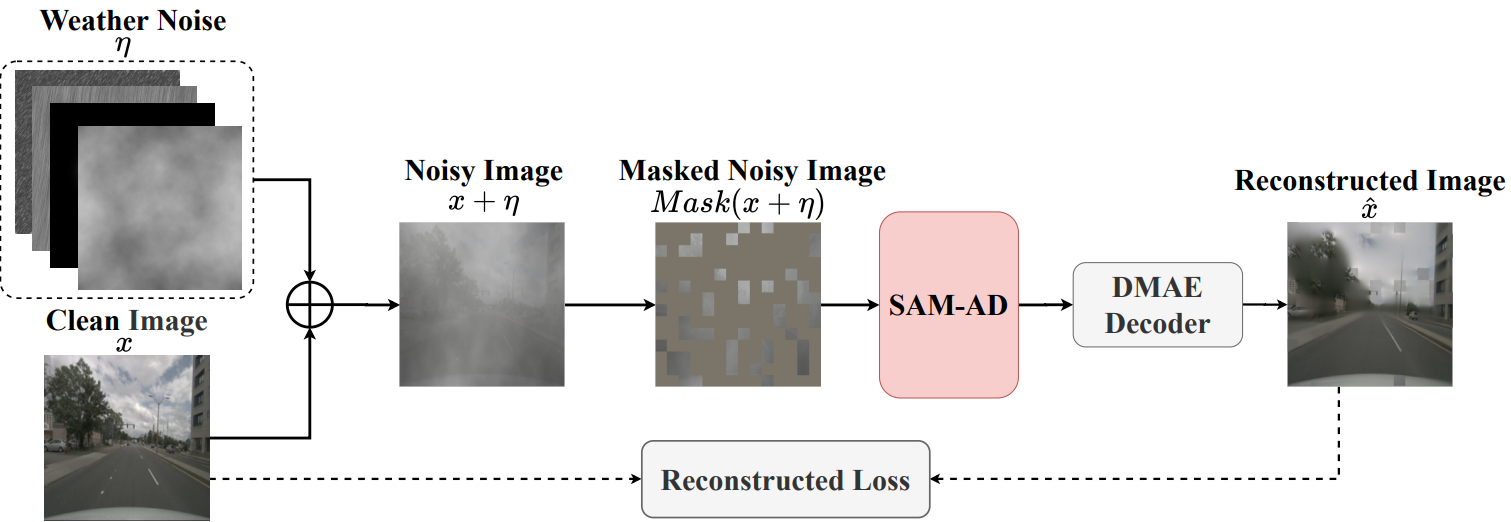

- We propose SAM-AD, a SAM pre-trained for autonomous driving (AD) scenarios.

- We introduce a novel AD-FPN to address feature upsampling when aligning VFMs with multi-modal 3D object detectors.

- To further reduce noise interference and retain essential signal features, we design a Depth-Guided Wavelet Attention (DGWA) module that effectively attenuates both high-frequency and low-frequency noise.

- After fusing point cloud features and image features, we propose **Adaptive Fusion**, which further enhances feature robustness and noise resistance by using self-attention to adaptively re-weight the fused features.
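The Adaptive Fusion idea in the last bullet can be illustrated with a minimal single-head self-attention sketch. This is a simplification under stated assumptions, not the RoboFusion implementation: the fused features are treated as a list of per-location tokens built by concatenating LiDAR and camera features, the tokens themselves serve as queries, keys, and values (no learned projections), and the attended result is added back as a residual. `concat_features` and `adaptive_fusion` are hypothetical names.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def concat_features(lidar: List[Vector], camera: List[Vector]) -> List[Vector]:
    """Hypothetical fusion step: concatenate LiDAR and camera features per location."""
    return [l + c for l, c in zip(lidar, camera)]


def adaptive_fusion(fused: List[Vector]) -> List[Vector]:
    """Re-weight fused tokens with scaled dot-product self-attention.

    Assumption: queries, keys and values are the fused tokens themselves
    (no learned projections); the attended output is added as a residual.
    """
    dim = len(fused[0])
    scale = 1.0 / math.sqrt(dim)
    out = []
    for q in fused:
        # Similarity of this token to every fused token.
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in fused]
        weights = softmax(scores)
        # Attention-weighted sum of all tokens (the "values").
        attended = [sum(w * v[d] for w, v in zip(weights, fused)) for d in range(dim)]
        # Residual connection keeps the original fused signal.
        out.append([x + y for x, y in zip(q, attended)])
    return out


fused = concat_features([[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [0.2, 0.8]])
reweighted = adaptive_fusion(fused)
```

In a real detector the re-weighting would run on BEV feature maps with learned query/key/value projections; the residual here keeps the fused signal intact while attention mixes in context from similar locations.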

Multi-modal 3D object detectors are dedicated to exploring secure and reliable perception systems for autonomous driving (AD). However, while they achieve state-of-the-art (SOTA) performance on clean benchmark datasets, they tend to overlook the complexity and harsh conditions of real-world environments. Meanwhile, the emergence of visual foundation models (VFMs) presents both opportunities and challenges for improving the robustness and generalization of multi-modal 3D object detection in autonomous driving. Therefore, we propose RoboFusion, a robust framework that leverages VFMs such as SAM to tackle out-of-distribution (OOD) noise scenarios. We first adapt the original SAM to autonomous driving scenarios, yielding SAM-AD. To align SAM or SAM-AD with multi-modal methods, we then introduce AD-FPN to upsample the image features extracted by SAM. We employ wavelet decomposition to denoise the depth-guided images, further reducing noise and weather interference. Lastly, we employ self-attention mechanisms to adaptively reweight the fused features, enhancing informative features while suppressing excess noise. In summary, RoboFusion gradually reduces noise by leveraging the generalization and robustness of VFMs, thereby enhancing the resilience of multi-modal 3D object detection. Consequently, RoboFusion achieves state-of-the-art performance in noisy scenarios, as demonstrated on the KITTI-C and nuScenes-C benchmarks.
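The wavelet denoising step can be sketched with a one-level 2D Haar transform: split an image into a low-frequency band (LL) and three high-frequency detail bands, scale each band, and reconstruct. This is only an illustration under assumptions: the Haar basis, the averaging normalization, and the fixed per-band gains (standing in for DGWA's learned, depth-guided attention weights) are choices made here, and `haar_decompose`, `haar_reconstruct`, and `wavelet_denoise` are hypothetical names.

```python
from typing import Dict, List

Image = List[List[float]]


def haar_decompose(img: Image) -> Dict[str, Image]:
    """One-level 2D Haar transform (averaging normalization), even sides only."""
    rows, cols = len(img), len(img[0])
    if rows % 2 or cols % 2:
        raise ValueError("image sides must be even")
    bands = {k: [[0.0] * (cols // 2) for _ in range(rows // 2)]
             for k in ("LL", "LH", "HL", "HH")}
    for i in range(0, rows, 2):
        for j in range(0, cols, 2):
            a, b = img[i][j], img[i][j + 1]
            c, d = img[i + 1][j], img[i + 1][j + 1]
            bands["LL"][i // 2][j // 2] = (a + b + c + d) / 4  # local average
            bands["LH"][i // 2][j // 2] = (a - b + c - d) / 4  # left-right difference
            bands["HL"][i // 2][j // 2] = (a + b - c - d) / 4  # top-bottom difference
            bands["HH"][i // 2][j // 2] = (a - b - c + d) / 4  # diagonal difference
    return bands


def haar_reconstruct(bands: Dict[str, Image]) -> Image:
    """Exact inverse of haar_decompose."""
    rows, cols = len(bands["LL"]), len(bands["LL"][0])
    img = [[0.0] * (2 * cols) for _ in range(2 * rows)]
    for i in range(rows):
        for j in range(cols):
            ll, lh = bands["LL"][i][j], bands["LH"][i][j]
            hl, hh = bands["HL"][i][j], bands["HH"][i][j]
            img[2 * i][2 * j] = ll + lh + hl + hh
            img[2 * i][2 * j + 1] = ll - lh + hl - hh
            img[2 * i + 1][2 * j] = ll + lh - hl - hh
            img[2 * i + 1][2 * j + 1] = ll - lh - hl + hh
    return img


def wavelet_denoise(img: Image, gains: Dict[str, float]) -> Image:
    """Scale each sub-band by a gain (default 1.0), then reconstruct.

    Hypothetical stand-in for DGWA: fixed gains replace learned attention;
    a gain below 1 attenuates that band (including LL for low-frequency noise).
    """
    bands = haar_decompose(img)
    scaled = {k: [[gains.get(k, 1.0) * x for x in row] for row in band]
              for k, band in bands.items()}
    return haar_reconstruct(scaled)
```

For example, a checkerboard pattern `[[5.0, 3.0], [3.0, 5.0]]` lives entirely in the LL and HH bands, so `wavelet_denoise(..., {"HH": 0.0})` flattens it to its local average of 4.0.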

Our RoboFusion framework. The LiDAR branch largely follows the baselines (FocalsConv, TransFusion) to generate LiDAR features. In the camera branch, we first extract robust image features using a highly optimized SAM-AD and obtain multi-scale features using AD-FPN. Secondly, the sparse depth map

Illustration of the pre-training framework. We corrupt the clean image

For the clean datasets (KITTI and nuScenes), please refer to OpenPCDet and MMDetection3D.

Please refer to the noise dataset.

Please refer to DMAE for how to pre-train the original SAM.
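DMAE (Denoising Masked AutoEncoders) corrupts each training image by adding Gaussian noise to every pixel and randomly masking patches, then trains an encoder-decoder to reconstruct the original clean image. The sketch below shows only that corruption step, assuming the DMAE recipe; the exact corruptions used to pre-train SAM-AD are not specified here. `corrupt_image` is a hypothetical name, and zero-filling masked pixels is an illustrative simplification (MAE-style encoders usually drop masked tokens instead).

```python
import random
from typing import List, Set, Tuple

Image = List[List[float]]


def corrupt_image(img: Image, patch: int, sigma: float, mask_ratio: float,
                  seed: int = 0) -> Tuple[Image, Set[Tuple[int, int]]]:
    """DMAE-style corruption: additive Gaussian noise plus random patch masking.

    Returns the corrupted image and the set of masked (row, col) patch indices.
    """
    rng = random.Random(seed)
    rows, cols = len(img), len(img[0])
    if rows % patch or cols % patch:
        raise ValueError("image sides must be multiples of the patch size")
    # 1) Add zero-mean Gaussian noise to every pixel.
    noisy = [[x + rng.gauss(0.0, sigma) for x in row] for row in img]
    # 2) Choose a fraction of non-overlapping patches to mask.
    patches = [(r, c) for r in range(rows // patch) for c in range(cols // patch)]
    masked = set(rng.sample(patches, round(mask_ratio * len(patches))))
    # Zero-fill masked patches (illustrative; real encoders drop these tokens).
    for pr, pc in masked:
        for i in range(pr * patch, (pr + 1) * patch):
            for j in range(pc * patch, (pc + 1) * patch):
                noisy[i][j] = 0.0
    return noisy, masked
```

During pre-training, the model would receive the corrupted image and be trained to reconstruct the clean `img`, e.g. with a mean-squared-error loss.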

Please refer to TransFusion+SAM.md for how to add SAM to TransFusion.

Please refer to FocalsConv+SAM.md for how to add SAM to FocalsConv.

Many thanks to these excellent open-source projects:

Please cite our work as follows:

@article{song2024robofusion,
  title={Robofusion: Towards robust multi-modal 3d object detection via sam},
  author={Song, Ziying and Zhang, Guoxing and Liu, Lin and Yang, Lei and Xu, Shaoqing and Jia, Caiyan and Jia, Feiyang and Wang, Li},
  journal={arXiv preprint arXiv:2401.03907},
  year={2024}
}