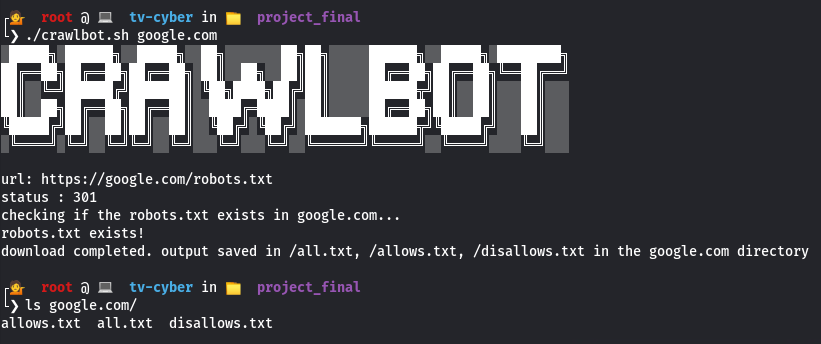

CrawlBot is a simple shell script that crawls the 'robots.txt' of a given domain. On success, the results are written to three text files (allows.txt, disallows.txt, all.txt) in a directory created for that domain.
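The splitting step can be pictured with a small sketch. This is not the code from crawlbot.sh: the function names `extract_paths`, `split_robots` and `crawl_domain` are hypothetical, and it assumes that all.txt simply holds the allowed paths followed by the disallowed ones, that the output directory is named after the domain, and that robots.txt is served over HTTPS.

```shell
#!/usr/bin/env bash
# Illustrative sketch only; names and file layout are assumptions,
# not taken from crawlbot.sh.

# Print the path of every rule whose directive matches $1
# ("allow" or "disallow"), read from the robots.txt file in $2.
extract_paths() {
  awk -v want="$1" '
    {
      sub(/\r$/, "")            # tolerate CRLF line endings
      sub(/[ \t]*#.*$/, "")     # drop trailing comments
      colon = index($0, ":")
      if (colon == 0) next      # not a "name: value" line
      name = tolower(substr($0, 1, colon - 1))
      gsub(/[ \t]/, "", name)
      value = substr($0, colon + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", value)
      if (name == want && value != "") print value
    }' "$2"
}

# Write allows.txt, disallows.txt and all.txt into directory $2.
split_robots() {
  local robots_file="$1" out_dir="$2"
  mkdir -p "$out_dir"
  extract_paths allow "$robots_file" > "$out_dir/allows.txt"
  extract_paths disallow "$robots_file" > "$out_dir/disallows.txt"
  # Assumption: all.txt is the two lists concatenated.
  cat "$out_dir/allows.txt" "$out_dir/disallows.txt" > "$out_dir/all.txt"
}

# Fetch robots.txt for domain $1 and split it into a directory
# named after the domain (assumed layout).
crawl_domain() {
  local domain="$1" tmp
  tmp="$(mktemp)"
  if curl -fsSL "https://$domain/robots.txt" -o "$tmp"; then
    split_robots "$tmp" "$domain"
    rm -f "$tmp"
  else
    echo "could not fetch robots.txt for $domain" >&2
    rm -f "$tmp"
    return 1
  fi
}
```

With these functions loaded, `crawl_domain google.com` would leave the three files in a `google.com` directory, one path per line.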

user@example:~$ git clone https://github.com/adhithyanmv/crawlbot.git

user@example:~$ cd crawlbot

user@example:~$ chmod +x *

user@example:~$ ./crawlbot.sh domain_name

user@example:~$ ./crawlbot.sh google.com

- Adhithyan

- Nithin M

- Angel Mariya

- Ranjith

- Ryan Roy Joseph

- Mithin Kuttan

- Athul George

- Shyam S

- Muhammed Shareef M T

- Adharsh K K

- Sivaprasad S

- Meenu Cleetus

- Vinita Umesh

Current version is 1.0