For this project I've tried to build a batch pipeline to process real estate data of the state of Connecticut from (https://catalog.data.gov/,An official website of the GSA's Technology Transformation Services). The potential question I try to answer is, How can real estate professionals and investors leverage market data to identify trends and make data-driven decisions to optimize property portfolio performance?

Real Estate dataset direct link

- Amazon Web Services (AWS):

- VM Instance to run project on it.

- Cloud Storage to store data.

- Terraform to create cloud infrastructure.

- Docker for containerization (docker-compose)

- Python main programming language

- Airflow to run data pipelines as DAGs.

- Snowflake data warehouse for the project.

- Google data studio to visualize data.

Refer here for AWS setup details

Download Terraform!

- Download here: https://www.terraform.io/downloads

Initializing Terraform

- Create a new directory with

main.tf, and initialize your config file. Details- OPTIONAL Create

variables.tffiles to store your variables

- OPTIONAL Create

terraform initterraform planterraform apply

The dataset data download, process and upload to cloud storage, transfer to data warehouse is done via these Airflow DAGs:

Local to AWS Dag

- Downloads the dataset file in the csv format. This task runs by a bash script, which downloads the data.

- This file is then uploaded to project Cloud Storage(Data Lake).

- run the

local_to_aws_dagin the airflow UI after setting up airflow.

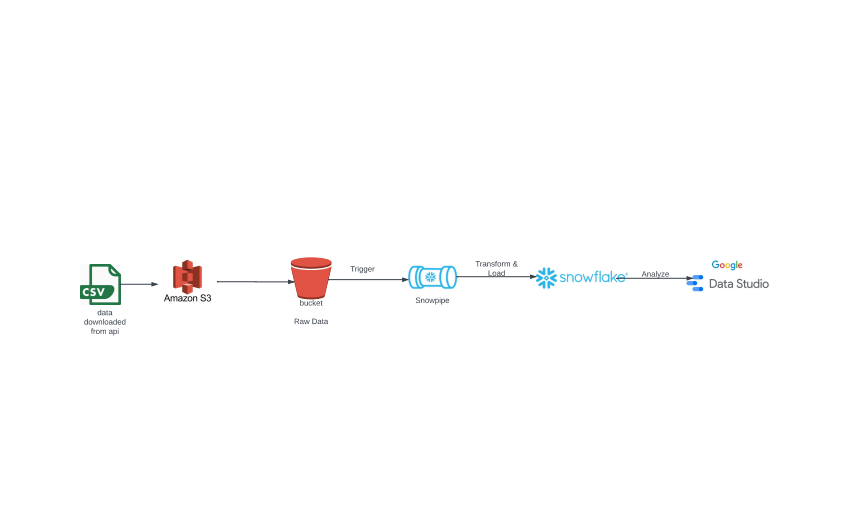

The snowflake environment is setup up using the files here. A snowpipe is configured in snowflake that is triggered by the event notification setup in the project s3 bucket(send notification as soon as a new file is uploaded in the project s3 bucket). This snowpipe transfers the data from the s3 bucket into an external stage in a metadata base in snowflake in the form of table. This table is then used to load the transformed data in the main data warehouse for the project.

- Go to Google Data Studio

- Click

Create>Data Source - Select

BigQuery> Your Project ID > Dataset > Table - Click on

Connecton the top-right and your data should now be imported to use for your dashboard!

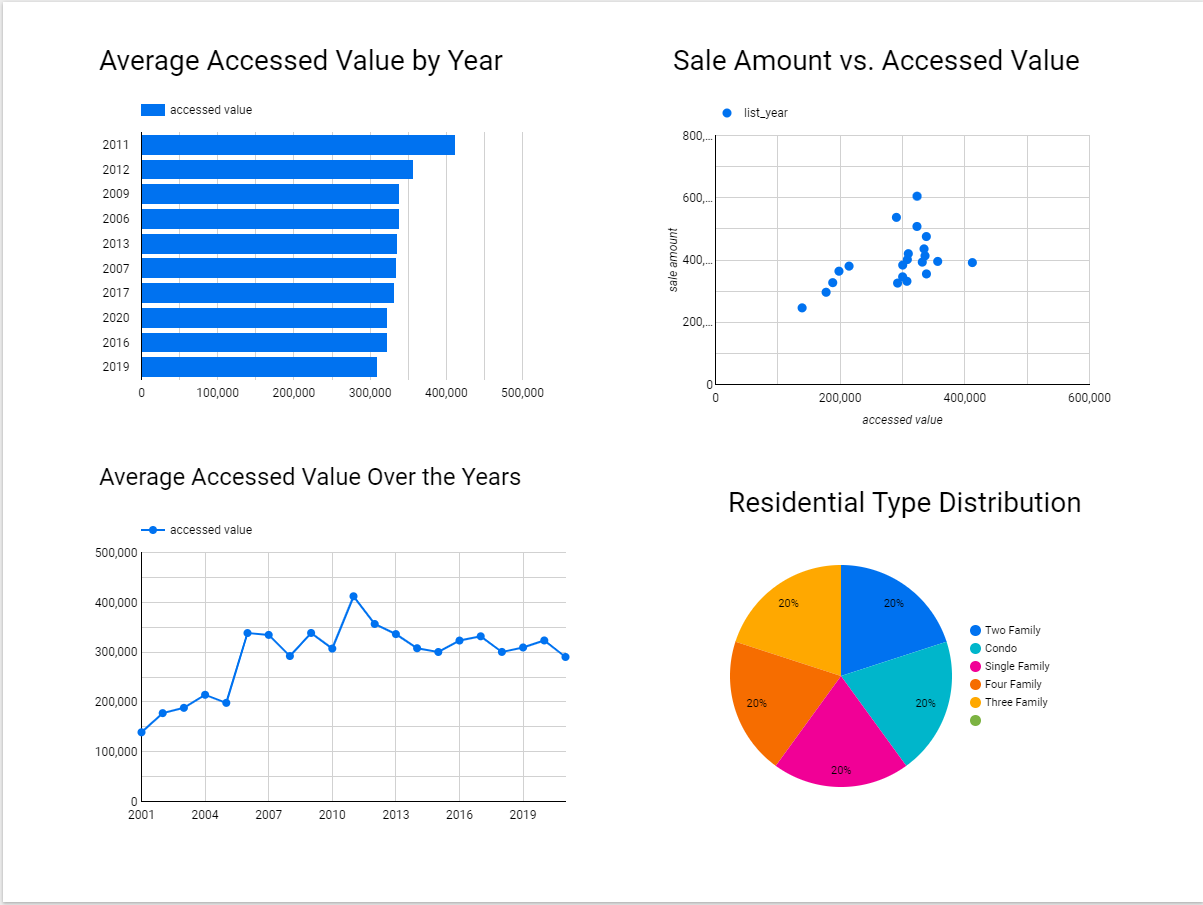

Simple dashboard at Google Data studio with few graphs.

- Average Accessed Value by Year.

- Sale Amount vs. Accessed Value.

- Average Accessed Value Over the Years.

- Residential Type Distribution.

Below is a screenshot of my dashboard.