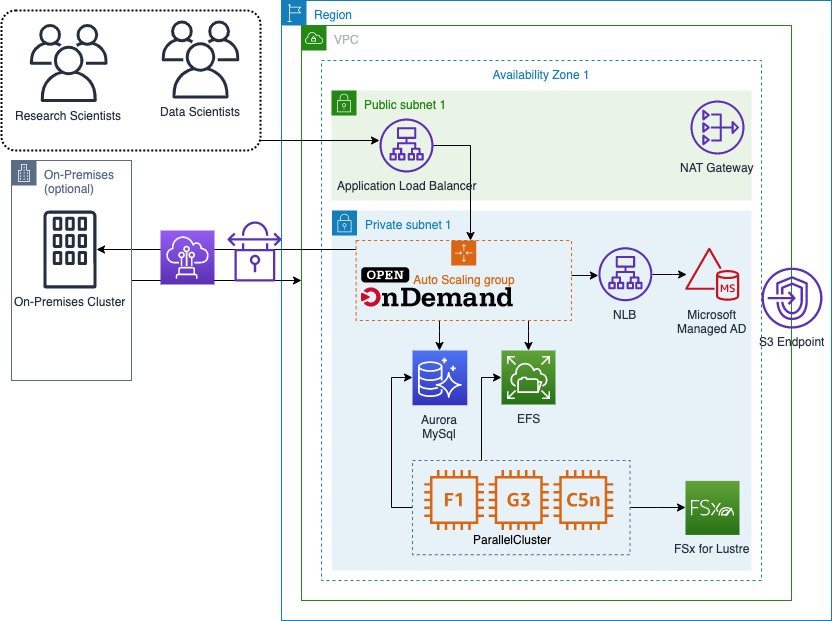

This reference architecture provides a set of templates for deploying Open OnDemand (OOD) with AWS CloudFormation, along with integration points for AWS ParallelCluster.

The main branch targets Open OnDemand v3.0.0.

The primary components of the solution are:

- An Application Load Balancer (ALB) as the entry point to your OOD portal

- An Auto Scaling group for the OOD portal

- An AWS Managed Microsoft AD directory

- A Network Load Balancer (NLB) to provide a single point of connectivity to the Managed Microsoft AD directory

- An Amazon Elastic File System (EFS) share for user home directories

- An Aurora MySQL database to store Slurm accounting data

- Automation via Amazon EventBridge that registers and deregisters ParallelCluster HPC clusters with OOD

This solution was tested with PCluster version 3.7.2.

Download the cloudformation/openondemand.yml template and use it to create a CloudFormation stack in your AWS account, in the Region where you want the portal to run.

Once the stack is deployed, you should be able to navigate to the URL you set as a CloudFormation parameter and log in to your Open OnDemand portal. Use the username Admin and retrieve the default password from Secrets Manager. The ARN of the secret is listed in the outputs of the Open OnDemand CloudFormation stack under the key ADAdministratorSecretArn.
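
The lookup described above can be scripted with the AWS CLI. The following is a minimal sketch, not part of the solution itself: the function names and the stack name `my-ood-stack` are hypothetical, and it assumes the AWS CLI is installed with credentials and a default Region configured. The format of the returned secret string (plain text or JSON) is not specified here, so inspect the output before relying on it.

```shell
#!/usr/bin/env bash
# Sketch: fetch the default Admin password for the OOD portal.
# Function names are illustrative; they are not defined by the solution.

# Build a JMESPath query that selects one stack output value by its key.
output_query() {
  printf "Stacks[0].Outputs[?OutputKey=='%s'].OutputValue" "$1"
}

# Print the value of output key $2 from CloudFormation stack $1.
stack_output() {
  aws cloudformation describe-stacks \
    --stack-name "$1" \
    --query "$(output_query "$2")" \
    --output text
}

# Print the secret string referenced by the ADAdministratorSecretArn output.
admin_secret() {
  local arn
  arn="$(stack_output "$1" ADAdministratorSecretArn)" || return 1
  aws secretsmanager get-secret-value \
    --secret-id "$arn" \
    --query SecretString \
    --output text
}

# Usage (hypothetical stack name):
#   admin_secret my-ood-stack
```

The same `stack_output` helper can be reused for the other outputs referenced in this document, such as ClusterConfigBucket.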

The OOD solution is built so that a ParallelCluster HPC cluster can be created and automatically registered with the portal.

In your ParallelCluster config, you must set the following values:

- HeadNode:
  - SubnetId: PrivateSubnet1 from the OOD stack outputs
  - AdditionalSecurityGroups: HeadNodeSecurityGroup from the CloudFormation outputs
  - AdditionalIamPolicies: HeadNodeIAMPolicyArn from the CloudFormation outputs
  - OnNodeConfigured:
    - Script: built from the ClusterConfigBucket CloudFormation output, in the format s3://$ClusterConfigBucket/pcluster_head_node.sh
    - Args: Open OnDemand CloudFormation stack name
- SlurmQueues:
  - SubnetId: PrivateSubnet1 from the OOD stack outputs
  - AdditionalSecurityGroups: ComputeNodeSecurityGroup from the CloudFormation outputs
  - AdditionalIamPolicies: ComputeNodeIAMPolicyArn from the CloudFormation outputs
  - OnNodeConfigured:
    - Script: built from the ClusterConfigBucket CloudFormation output, in the format s3://$ClusterConfigBucket/pcluster_worker_node.sh
    - Args: Open OnDemand CloudFormation stack name
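
To show where these values sit in a cluster config, here is a hedged sketch of a shell function that prints the OOD-specific portion as YAML. The function name, argument order, and the queue name `queue1` are hypothetical. The nesting (Networking, Iam, CustomActions, and SubnetIds as a list under each queue) follows the ParallelCluster 3 configuration schema as I understand it, so check it against the documentation for your version. The fragment is incomplete on its own: Region, Image, instance types, and ComputeResources still have to be supplied.

```shell
#!/usr/bin/env bash
# Sketch: render the OOD-specific parts of a ParallelCluster 3 config.
# Arguments (values come from the OOD stack outputs):
#   $1 OOD stack name            $2 PrivateSubnet1 subnet ID
#   $3 ClusterConfigBucket       $4 HeadNodeSecurityGroup
#   $5 HeadNodeIAMPolicyArn      $6 ComputeNodeSecurityGroup
#   $7 ComputeNodeIAMPolicyArn
render_ood_fragment() {
  local stack="$1" subnet="$2" bucket="$3"
  local head_sg="$4" head_policy="$5" compute_sg="$6" compute_policy="$7"
  cat <<EOF
HeadNode:
  Networking:
    SubnetId: ${subnet}
    AdditionalSecurityGroups:
      - ${head_sg}
  Iam:
    AdditionalIamPolicies:
      - Policy: ${head_policy}
  CustomActions:
    OnNodeConfigured:
      Script: s3://${bucket}/pcluster_head_node.sh
      Args:
        - ${stack}
Scheduling:
  Scheduler: slurm
  SlurmQueues:
    - Name: queue1  # hypothetical queue name
      Networking:
        SubnetIds:
          - ${subnet}
        AdditionalSecurityGroups:
          - ${compute_sg}
      Iam:
        AdditionalIamPolicies:
          - Policy: ${compute_policy}
      CustomActions:
        OnNodeConfigured:
          Script: s3://${bucket}/pcluster_worker_node.sh
          Args:
            - ${stack}
EOF
}
```

Redirect the output to a file and merge it with the rest of your cluster configuration before creating the cluster.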

The pam_slurm_adopt module can be enabled on the compute nodes within ParallelCluster to prevent users from using SSH to log in to nodes on which they have no running job.

In your ParallelCluster config, you must set the following values:

1/ Set PrologFlags to "contain" so that it can be determined whether a user has any jobs allocated on a node. This can be set within the Scheduling section.

    SlurmSettings:
      CustomSlurmSettings:
        - PrologFlags: "contain"

2/ Set ExclusiveUser to YES so that nodes are allocated exclusively to one user at a time. This can be set within the SlurmQueues section.

    CustomSlurmSettings:
      ExclusiveUser: "YES"

3/ Add the script scripts/configure_pam_slurm_adopt.sh to the OnNodeConfigured configuration within the SlurmQueues section, using Sequence to run it after the worker node script.

    CustomActions:
      OnNodeConfigured:
        Sequence:
          - Script: s3://$ClusterConfigBucket/pcluster_worker_node.sh
            Args:
              - Open OnDemand CloudFormation stack name
          - Script: s3://$ClusterConfigBucket/configure_pam_slurm_adopt.sh

If ParallelCluster login nodes are being used, the configuration script configure_login_nodes.sh can be used to configure the login node and enable it in Open OnDemand.

Usage: replace the following values:

- <OOD_STACK_NAME>: name of the Open OnDemand stack found in CloudFormation

- <ClusterConfigBucket>: the ClusterConfigBucket output found in the Open OnDemand stack

Run the following commands on the login node:

    S3_CONFIG_BUCKET=<ClusterConfigBucket>
    aws s3 cp s3://$S3_CONFIG_BUCKET/configure_login_nodes.sh .
    chmod +x configure_login_nodes.sh
    ./configure_login_nodes.sh <OOD_STACK_NAME>

You can enable interactive clusters on the Portal server by following the directions here.

In addition to the above steps, you must update /etc/resolv.conf on the Portal instance to include the ParallelCluster domain (ClusterName.pcluster) in the search configuration. The file will look similar to the example below, in which the cluster name is democluster.

Example resolv.conf:

    # Generated by NetworkManager
    search ec2.internal democluster.pcluster
    nameserver 10.0.0.2
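
One way to make the resolv.conf change repeatable is a small helper that adds the domain only if it is missing. This is a sketch under assumptions: the function name is hypothetical, it needs root privileges to edit /etc/resolv.conf, and it edits only the first search line it finds. Because the example file is generated by NetworkManager, a manual edit may be overwritten when the network configuration is refreshed.

```shell
#!/usr/bin/env bash
# Sketch: add a domain to the "search" line of a resolv.conf-style file.
#   $1 = domain to add, for example democluster.pcluster
#   $2 = file to edit (defaults to /etc/resolv.conf)
add_search_domain() {
  local domain="$1" file="${2:-/etc/resolv.conf}" tmp rc

  # Return early if the domain is already listed on a search line.
  if awk -v d="$domain" '
       $1 == "search" { for (i = 2; i <= NF; i++) if ($i == d) found = 1 }
       END { exit !found }' "$file"; then
    return 0
  fi

  tmp="$(mktemp)" || return 1
  # Append the domain to the first search line, or add a search line
  # at the end of the file if none exists.
  awk -v d="$domain" '
    $1 == "search" && !added { print $0 " " d; added = 1; next }
    { print }
    END { if (!added) print "search " d }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Usage (as root, for a cluster named democluster):
#   add_search_domain democluster.pcluster
```

Writing the result back with `cat` rather than `mv` keeps the original file's ownership and permissions, and also works when the file is a symlink.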

Interactive desktop sessions also require a compute queue that uses pcluster_worker_node_desktop.sh as its OnNodeConfigured script.

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.