This repository contains code related to the paper *Using convolutional networks and satellite imagery to identify patterns in urban environments at a large scale*. A slightly modified version of the paper appears in the proceedings of the ACM KDD 2017 conference.

The code is a Python implementation of the data processing, model training, and analysis presented in the paper, organized as follows:

- code to construct training and evaluation datasets for land use classification of urban environments is in the dataset-collection folder
- Keras implementations of the convolutional neural network classifiers used for this paper are in the classifier folder, along with Keras utilities for data ingestion and multi-GPU training (with a TensorFlow backend)
- code to train and validate the models, and to produce the analysis and figures in the paper, is in the notebooks in the land-use-classification folder

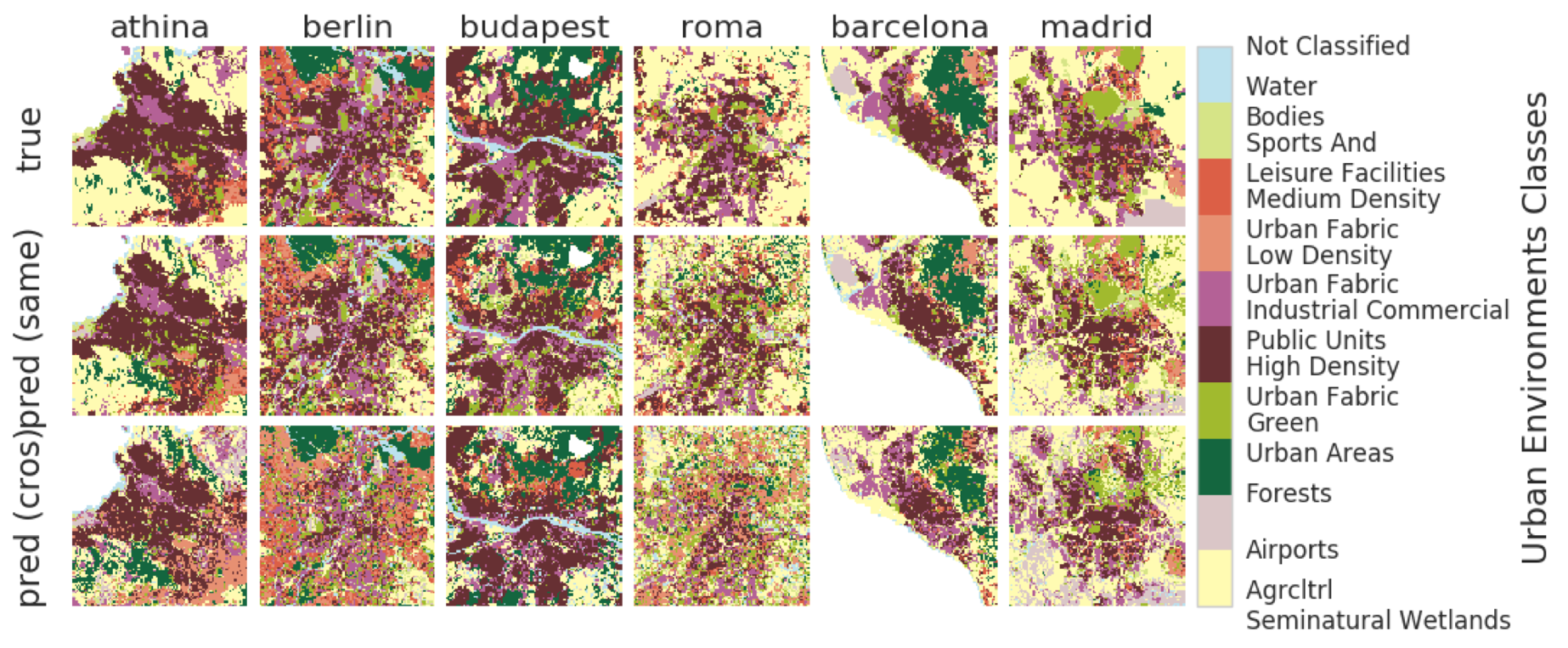

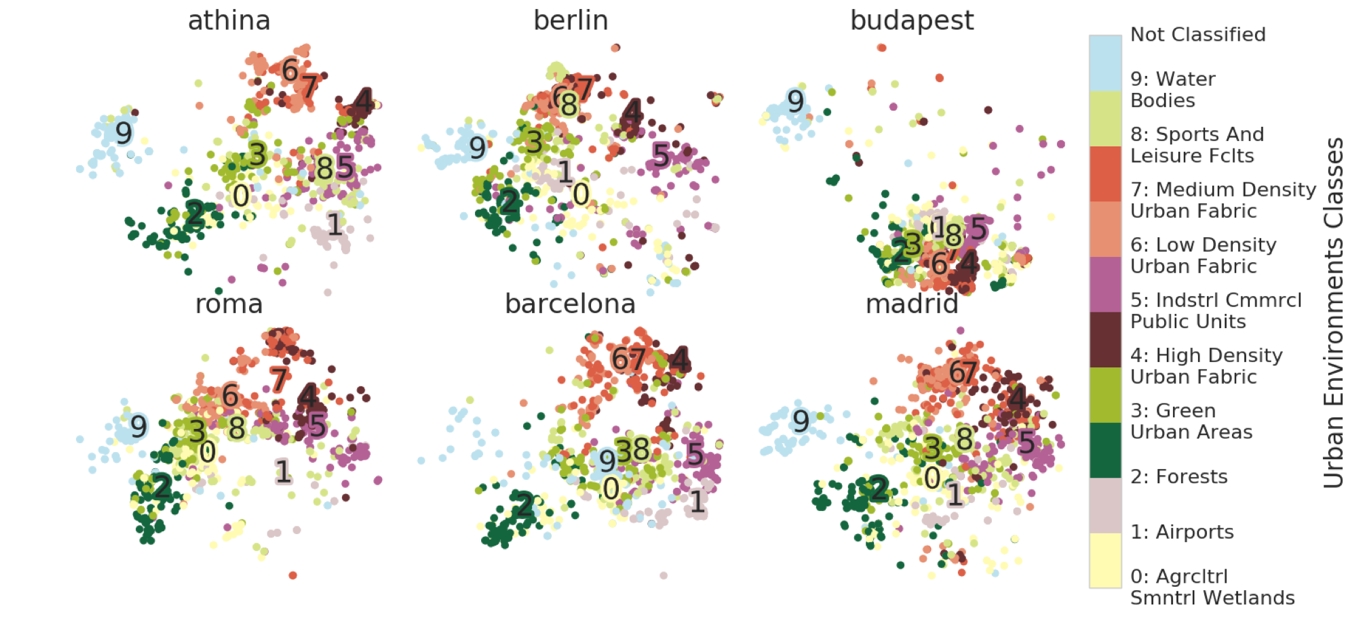

After a convolutional network classifier is trained on satellite data in a supervised way, it can be used to compare city blocks (urban environments) across many cities by studying the features extracted for each satellite image.
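As a rough illustration of the comparison step described above, the sketch below ranks city blocks by the similarity of their feature vectors. It assumes the features have already been extracted into a NumPy array with one row per satellite image. The function names, the random placeholder features, and the choice of cosine similarity are assumptions made for this example; they are not taken from the code in this repository or from the paper.

```python
import numpy as np


def cosine_similarity_matrix(features: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of `features`."""
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    # Guard against division by zero for all-zero feature vectors.
    unit = features / np.clip(norms, 1e-12, None)
    return unit @ unit.T


def nearest_blocks(features: np.ndarray, query_index: int, k: int = 3) -> np.ndarray:
    """Indices of the k rows most similar to the row at `query_index`."""
    sims = cosine_similarity_matrix(features)[query_index].copy()
    sims[query_index] = -np.inf  # exclude the query image itself
    return np.argsort(-sims)[:k]


# Placeholder data: 6 images with 128-dimensional features (hypothetical sizes).
rng = np.random.default_rng(0)
features = rng.normal(size=(6, 128))
# Make image 4 a near-duplicate of image 1 so the ranking is easy to check.
features[4] = features[1] + 0.01 * rng.normal(size=128)

print(nearest_blocks(features, query_index=1, k=2))
```

In practice the rows of `features` would come from a trained classifier (for example, the activations of a late layer) rather than from a random generator, and a different distance measure may be more appropriate for a given analysis.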

If you use the code, data, or analysis results in this paper, we kindly ask that you cite the paper above as:

Using convolutional networks and satellite imagery to identify patterns in urban environments at a large scale. A. Toni Albert, J. Kaur, M.C. Gonzalez, 2017. In Proceedings of the ACM SIGKDD 2017 Conference, Halifax, Nova Scotia, Canada.