Torch implementation of CVPR'17 - Local Binary Convolutional Neural Networks http://xujuefei.com/lbcnn.html

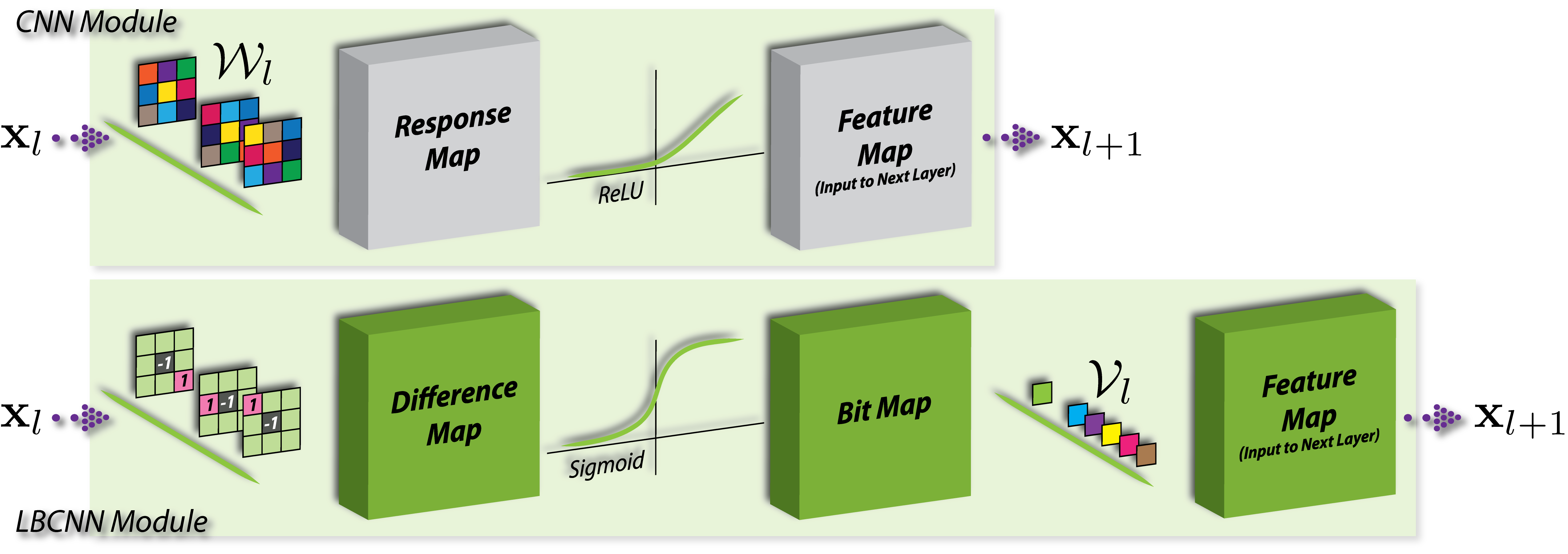

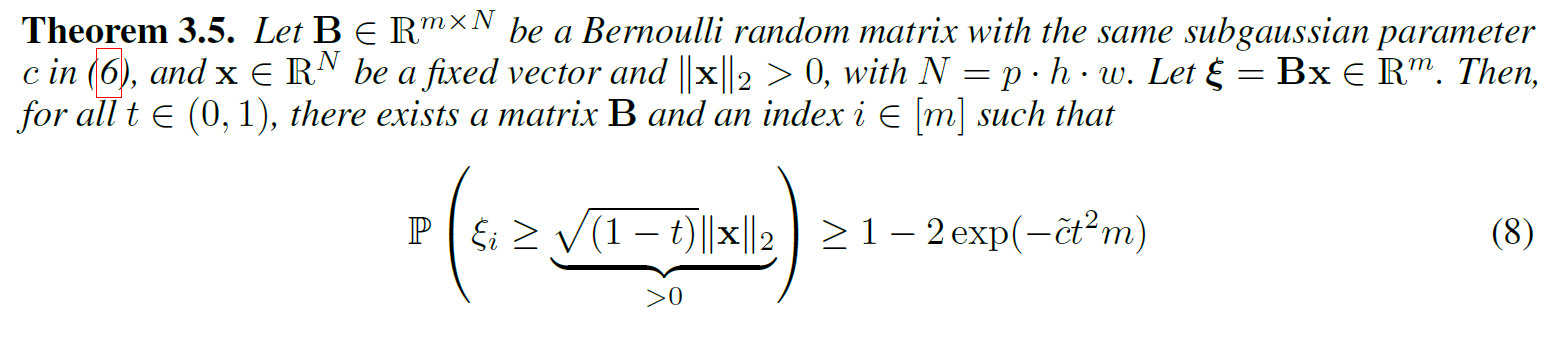

We propose local binary convolution (LBC), an efficient alternative to convolutional layers in standard convolutional neural networks (CNN). The design principles of LBC are motivated by local binary patterns (LBP). The LBC layer comprises a set of fixed, sparse, pre-defined binary convolutional filters that are not updated during training, a non-linear activation function, and a set of learnable linear weights. The linear weights combine the activated filter responses to approximate the corresponding activated filter responses of a standard convolutional layer. The LBC layer affords significant parameter savings: 9x to 169x fewer learnable parameters than a standard convolutional layer. Furthermore, the sparse and binary nature of the weights also yields up to 9x to 169x savings in model size compared to a standard convolutional layer. We demonstrate both theoretically and experimentally that our local binary convolution layer is a good approximation of a standard convolutional layer. Empirically, CNNs with LBC layers, called local binary convolutional neural networks (LBCNN), achieve performance parity with regular CNNs on a range of visual datasets (MNIST, SVHN, CIFAR-10, and ImageNet) while enjoying significant computational savings.
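The 9x to 169x range quoted above can be reproduced with a short back-of-the-envelope count. The sketch below is illustrative only and rests on two assumptions that are not stated in this README: the learnable linear weights act as a 1x1 combination of `m` fixed-filter responses, and the number of fixed filters `m` equals the number of input channels `p`. The function names are hypothetical.

```python
def conv_params(p, q, h, w):
    """Learnable weights of a standard conv layer with p input channels,
    q output channels and h x w kernels (bias terms ignored)."""
    return p * h * w * q


def lbc_params(m, q):
    """Learnable weights of an LBC layer under the assumption above:
    only the linear weights mapping m fixed-filter responses to q outputs."""
    return m * q


def savings(p, q, h, w, m):
    """Ratio of learnable parameters: standard conv over LBC."""
    return conv_params(p, q, h, w) / lbc_params(m, q)


if __name__ == "__main__":
    # Assumption: m == p, so the ratio reduces to h * w.
    for k in (3, 5, 7, 9, 11, 13):
        print(f"{k}x{k} kernel: {savings(p=64, q=64, h=k, w=k, m=64):.0f}x")
```

Under these assumptions the ratio depends only on the kernel area, so a 3x3 kernel gives 9x and a 13x13 kernel gives 169x.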

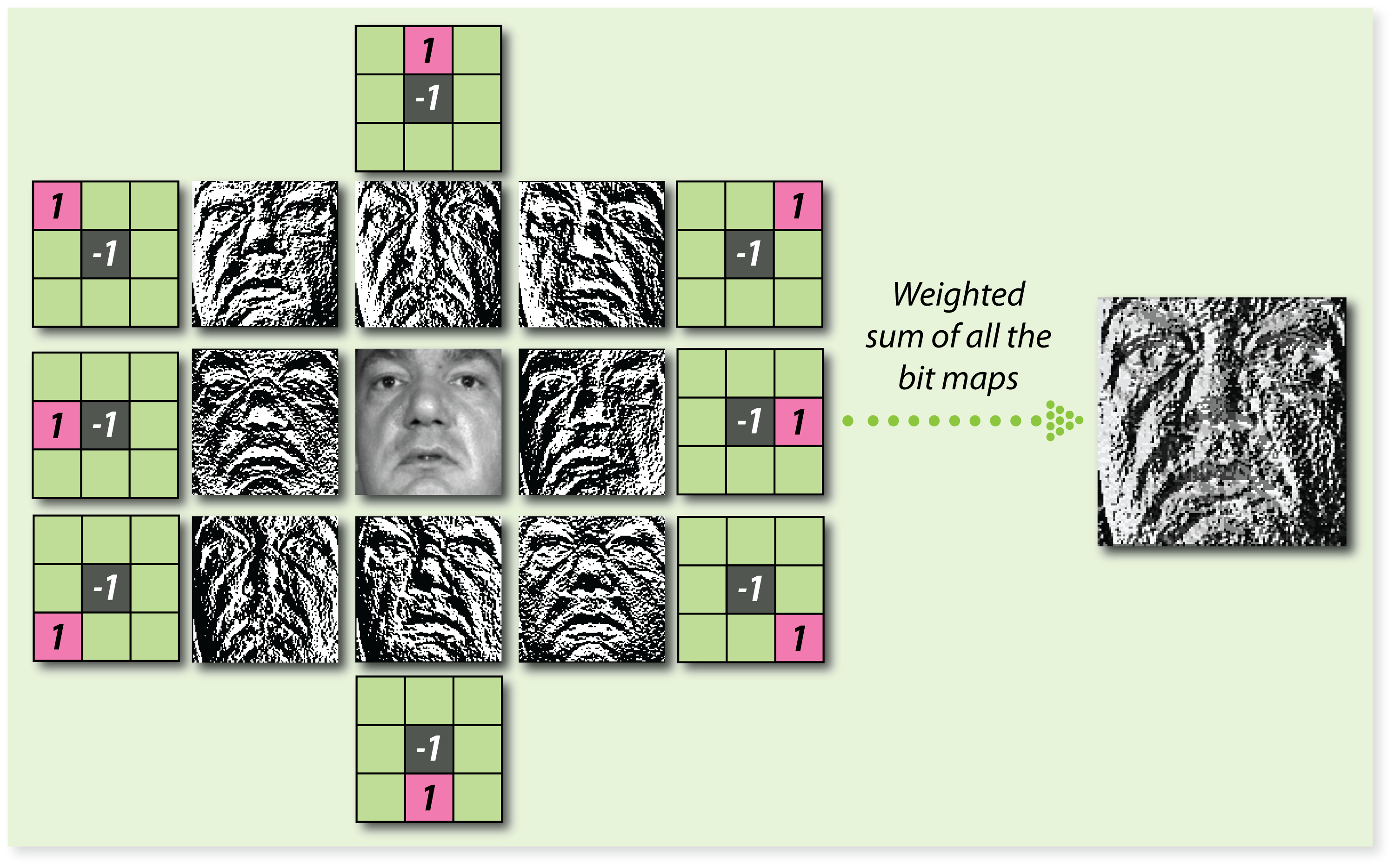

We draw inspiration from local binary patterns that have been very successfully used for facial analysis.

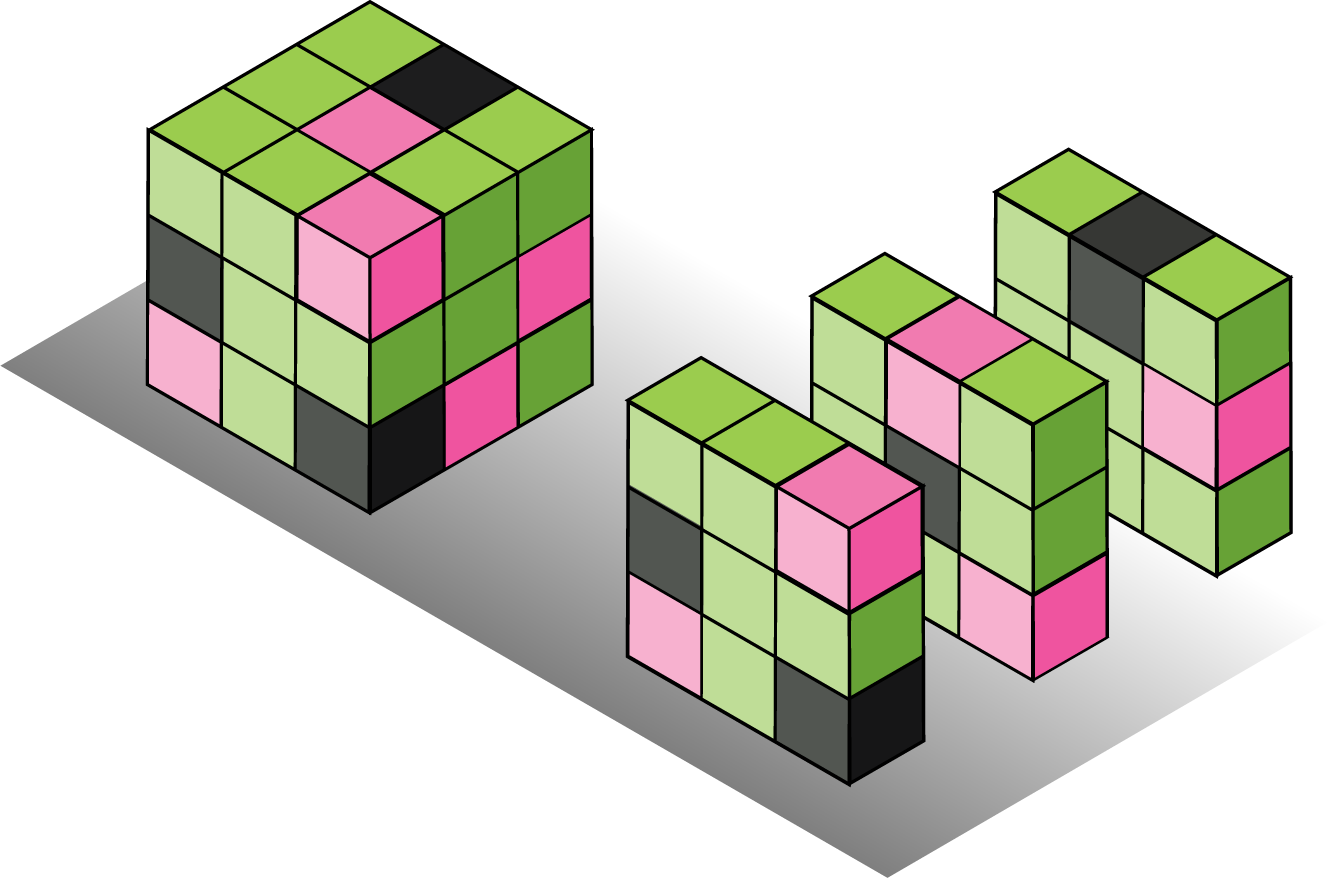

Our LBCNN module is designed to approximate a fully learnable dense CNN module.
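To make the structure of the module concrete, here is a minimal pure-Python sketch of one LBC forward pass: fixed binary filters, then a non-linearity, then a learnable linear combination. It is a simplification, not the repository's Torch code: it handles a single input channel and a single output channel, uses ReLU as the non-linearity (an assumption), and all function names are hypothetical.

```python
def conv2d_valid(image, kernel):
    """2-D cross-correlation without padding on a single-channel image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(fmap):
    """Element-wise ReLU on a 2-D feature map."""
    return [[max(0.0, v) for v in row] for row in fmap]


def lbc_forward(image, fixed_kernels, weights):
    """Fixed binary filters -> ReLU -> learnable linear combination.

    fixed_kernels: binary kernels that are never updated in training.
    weights: one learnable scalar per fixed kernel.
    """
    activated = [relu(conv2d_valid(image, k)) for k in fixed_kernels]
    out_h, out_w = len(activated[0]), len(activated[0][0])
    return [[sum(w * fmap[i][j] for w, fmap in zip(weights, activated))
             for j in range(out_w)]
            for i in range(out_h)]


if __name__ == "__main__":
    image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    fixed_kernels = [[[1, 0], [0, -1]], [[-1, 0], [0, 1]]]
    weights = [0.5, 0.25]  # the only trainable values in this sketch
    print(lbc_forward(image, fixed_kernels, weights))
```

In training, only `weights` would receive gradient updates; `fixed_kernels` stay as initialized.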

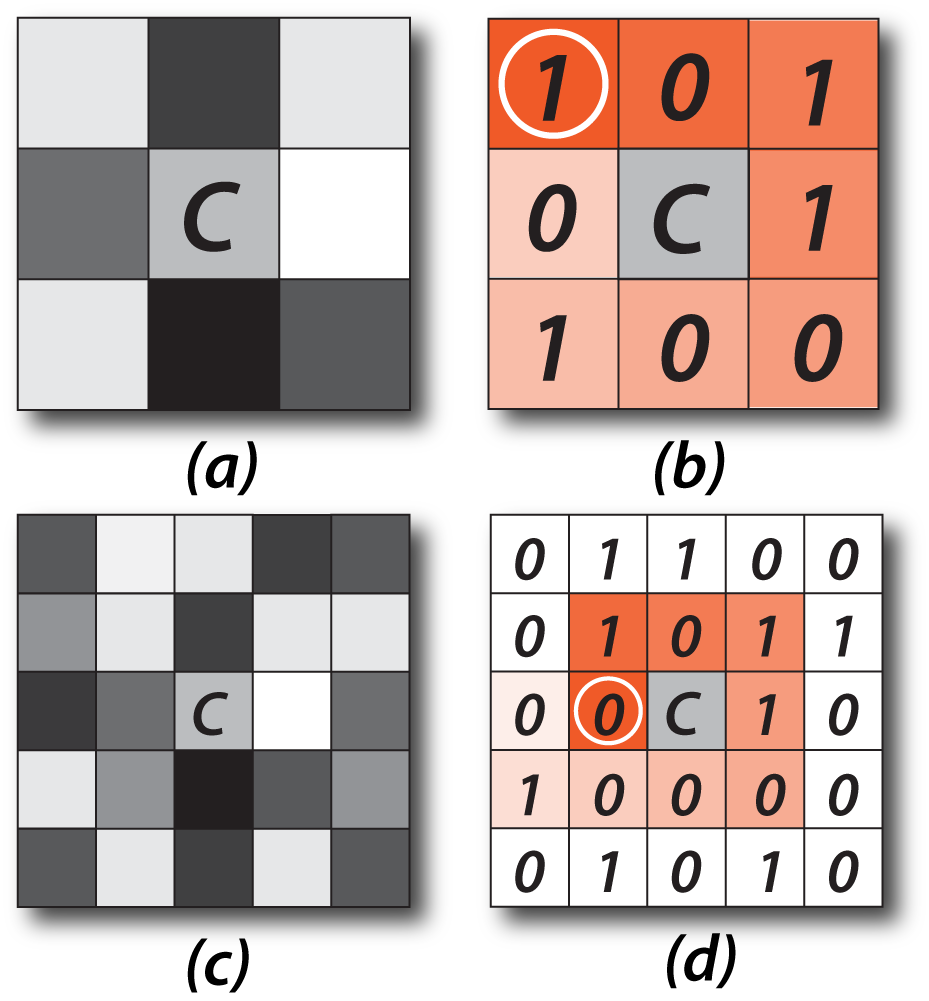

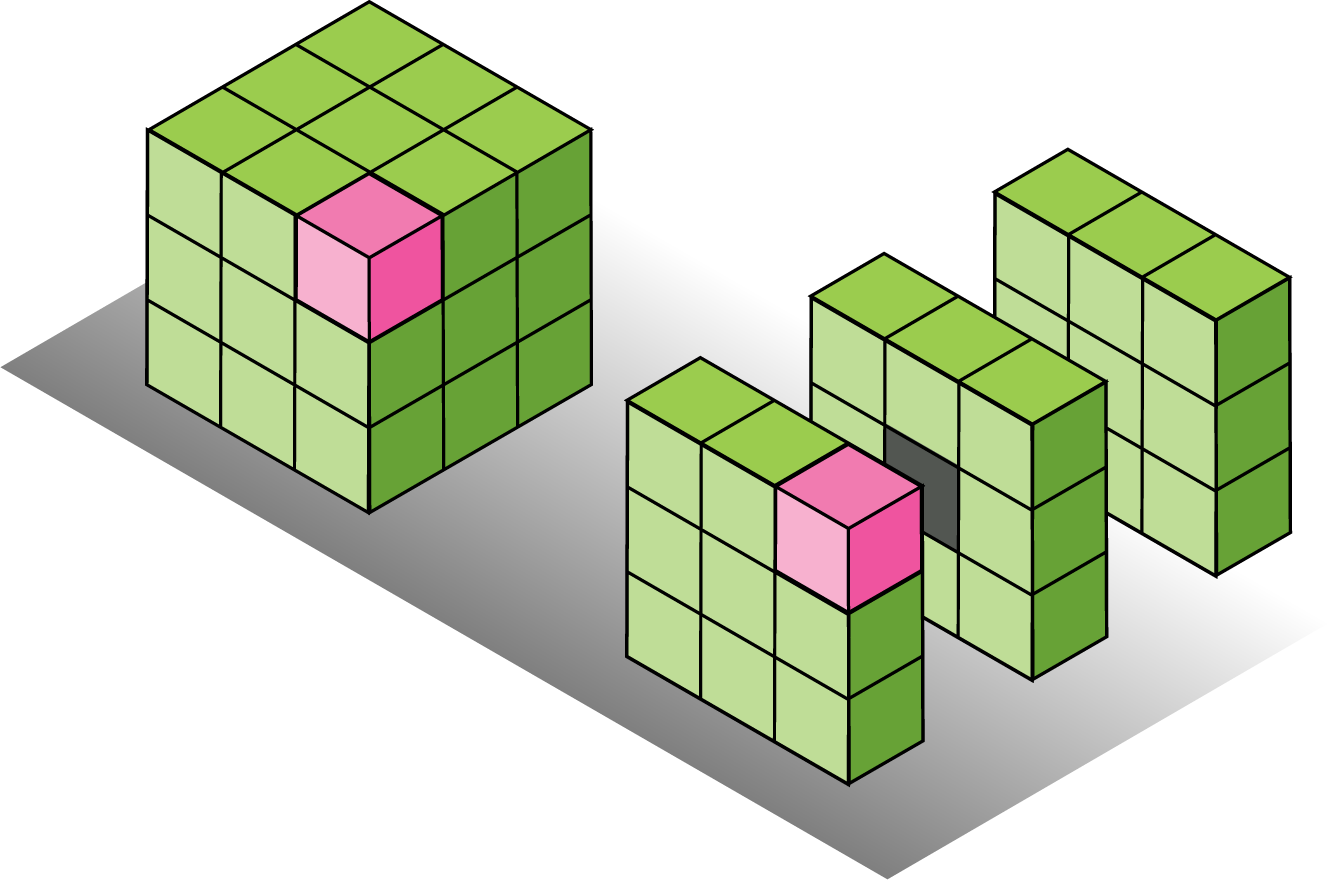

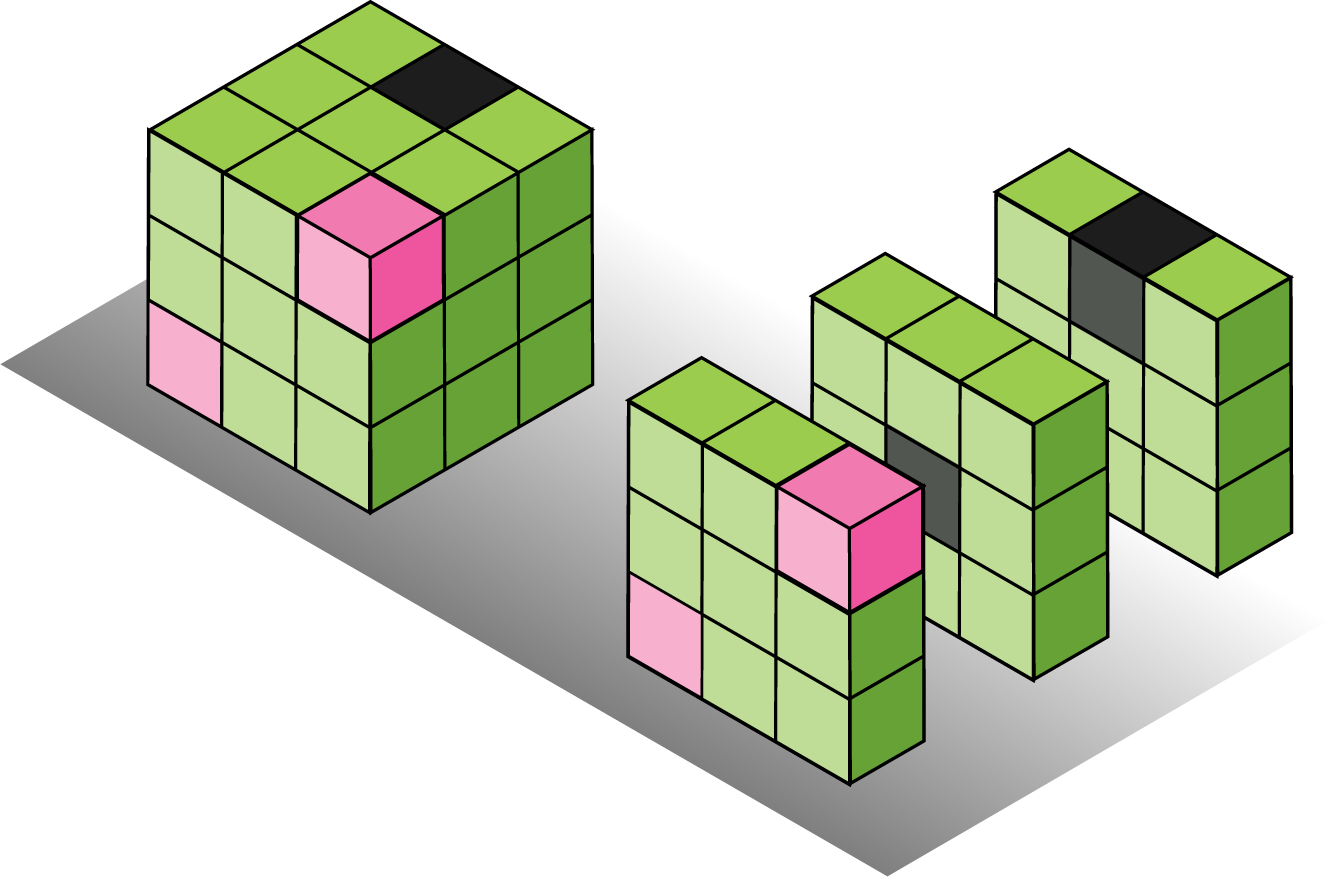

Binary convolutional kernels with different sparsity levels.
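One way such kernels could be generated is sketched below. It assumes that the sparsity level is the probability that an entry is non-zero, and that non-zero entries are +1 or -1 with equal probability; this README does not define the term, so the repository's `-sparsity` flag may use a different convention. The helper name is hypothetical.

```python
import random


def random_binary_kernel(size, sparsity, rng):
    """Return a size x size kernel with entries in {-1, 0, +1}.

    Assumption: each entry is non-zero with probability `sparsity`,
    and a non-zero entry is +1 or -1 with equal probability.
    """
    return [[rng.choice((-1, 1)) if rng.random() < sparsity else 0
             for _ in range(size)]
            for _ in range(size)]


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so the kernels are reproducible
    for level in (0.1, 0.5, 0.9):
        print(f"sparsity {level}:")
        for row in random_binary_kernel(3, level, rng):
            print("  ", row)
```

Because the kernels are fixed after generation, only the random seed and the sparsity level would need to be stored to recreate them.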

- Convolutional kernels inspired by local binary patterns.

- Convolutional neural network architecture with non-mutable randomized sparse binary convolutional kernels.

- Lightweight CNN with massive computational and memory savings.

- Felix Juefei-Xu, Vishnu Naresh Boddeti, and Marios Savvides, Local Binary Convolutional Neural Networks, to appear in IEEE Computer Vision and Pattern Recognition (CVPR), 2017. (Spotlight Oral Presentation)

    @inproceedings{juefei-xu2017lbcnn,
      title={{Local Binary Convolutional Neural Networks}},
      author={Felix Juefei-Xu and Vishnu Naresh Boddeti and Marios Savvides},
      booktitle={IEEE Computer Vision and Pattern Recognition (CVPR)},
      month={July},
      year={2017}
    }

The code base is built upon fb.resnet.torch.

See the installation instructions for a step-by-step guide.

- Install Torch on a machine with a CUDA GPU

- Install cuDNN v4 or v5 and the Torch cuDNN bindings

- Download the ImageNet dataset and move validation images to labeled subfolders

If you already have Torch installed, update nn, cunn, and cudnn.

The numChannels parameter corresponds to the output channels in the paper.

- MNIST

CNN

th main.lua -netType resnet-dense-felix -dataset mnist -data '/media/Freya/juefeix/LBCNN' -save '/media/Freya/juefeix/LBCNN-Weights' -numChannels 16 -batchSize 10 -depth 75 -full 128

LBCNN (~99.5% after 80 epochs)

th main.lua -netType resnet-binary-felix -dataset mnist -data '/media/Freya/juefeix/LBCNN' -save '/media/Freya/juefeix/LBCNN-Weights' -numChannels 16 -batchSize 10 -depth 75 -full 128 -sparsity 0.5

- SVHN

CNN

th main.lua -netType resnet-dense-felix -dataset svhn -data '/media/Freya/juefeix/LBCNN' -save '/media/Freya/juefeix/LBCNN-Weights' -numChannels 16 -batchSize 10 -depth 40 -full 512

LBCNN (~94.5% after 80 epochs)

th main.lua -netType resnet-binary-felix -dataset svhn -data '/media/Freya/juefeix/LBCNN' -save '/media/Freya/juefeix/LBCNN-Weights' -numChannels 16 -batchSize 10 -depth 40 -full 512 -sparsity 0.9

- CIFAR-10

CNN

th main.lua -netType resnet-dense-felix -dataset cifar10 -data '/media/Caesar/juefeix/LBCNN' -save '/media/Caesar/juefeix/LBCNN-Weights' -numChannels 384 -numWeights 704 -batchSize 5 -depth 50 -full 512

LBCNN (~93% after 80 epochs)

th main.lua -netType resnet-binary-felix -dataset cifar10 -data '/media/Caesar/juefeix/LBCNN' -save '/media/Caesar/juefeix/LBCNN-Weights' -numChannels 384 -numWeights 704 -batchSize 5 -depth 50 -full 512 -sparsity 0.001