uLinux (µLinux) is a micro (µ)Linux Cloud Native OS designed for high performance, minimal overhead and a small footprint.

uLinux is container-ready and small enough that it could be used as a sort of "UniKernel" for your applications.

If you care about performance, footprint, security and minimalism like I do, you'll want to use µLinux for your workloads.

NOTE: µLinux is NOT a full-blown desktop OS, nor is it ever likely to be. It is also unlikely ever to build your favorite GCC/GLIBC/Clang software, as it ships only with a very small C compiler (tcc) and libc (musl). Consider Alpine, or any other "heavier" / "full-featured" distro, for a more feature-rich system.

Supported platforms:

- VirtualBox

- QEMU/KVM

- Proxmox VE

- Vultr

- DigitalOcean

- need more? file an issue or submit a PR!

Features:

- Recent Linux Kernel

- Very Lightweight

- Full Container Support

- Busybox Userland

- Dropbear SSH Daemon

- Hybrid Live ISO

- Installer

- CloudInit

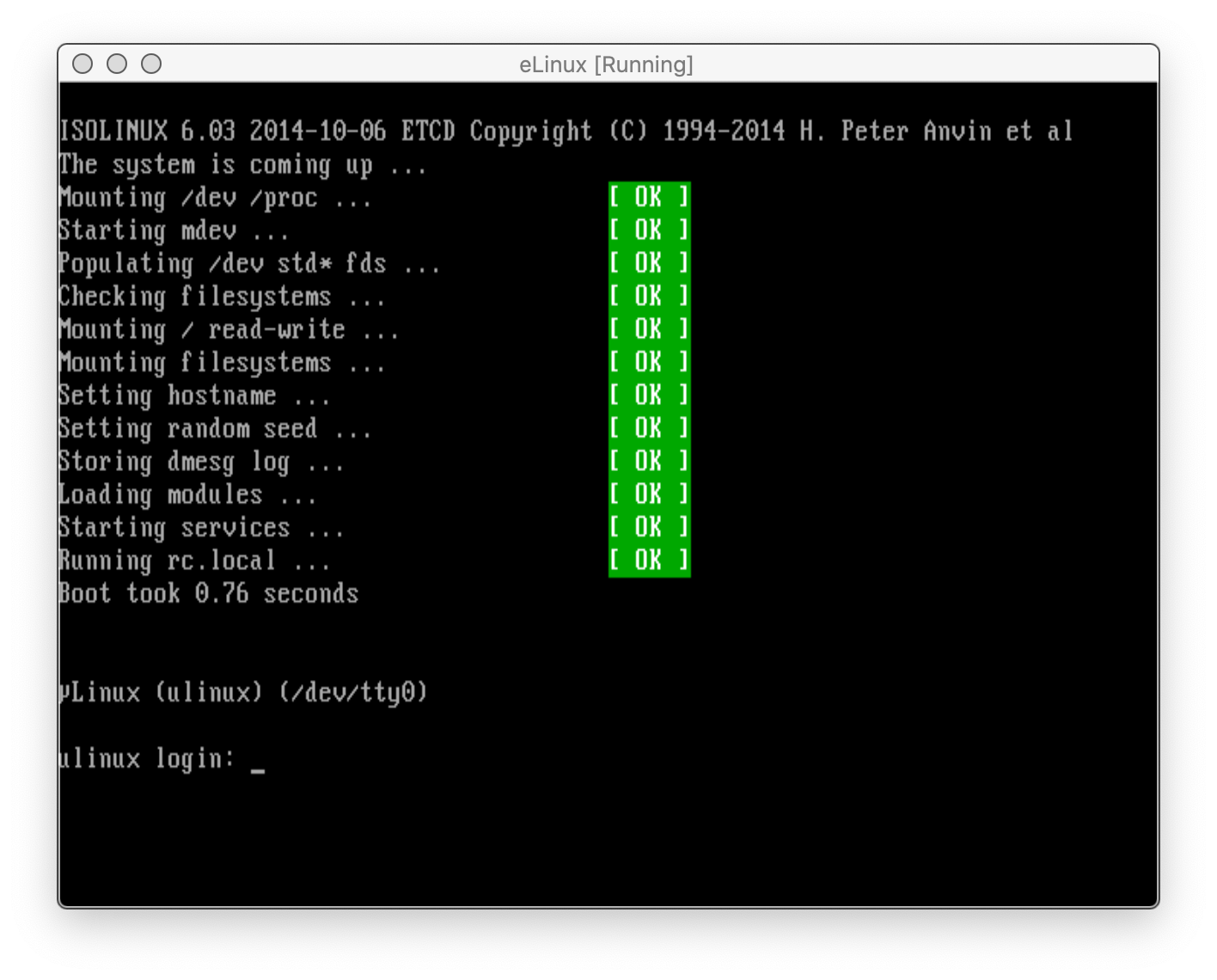

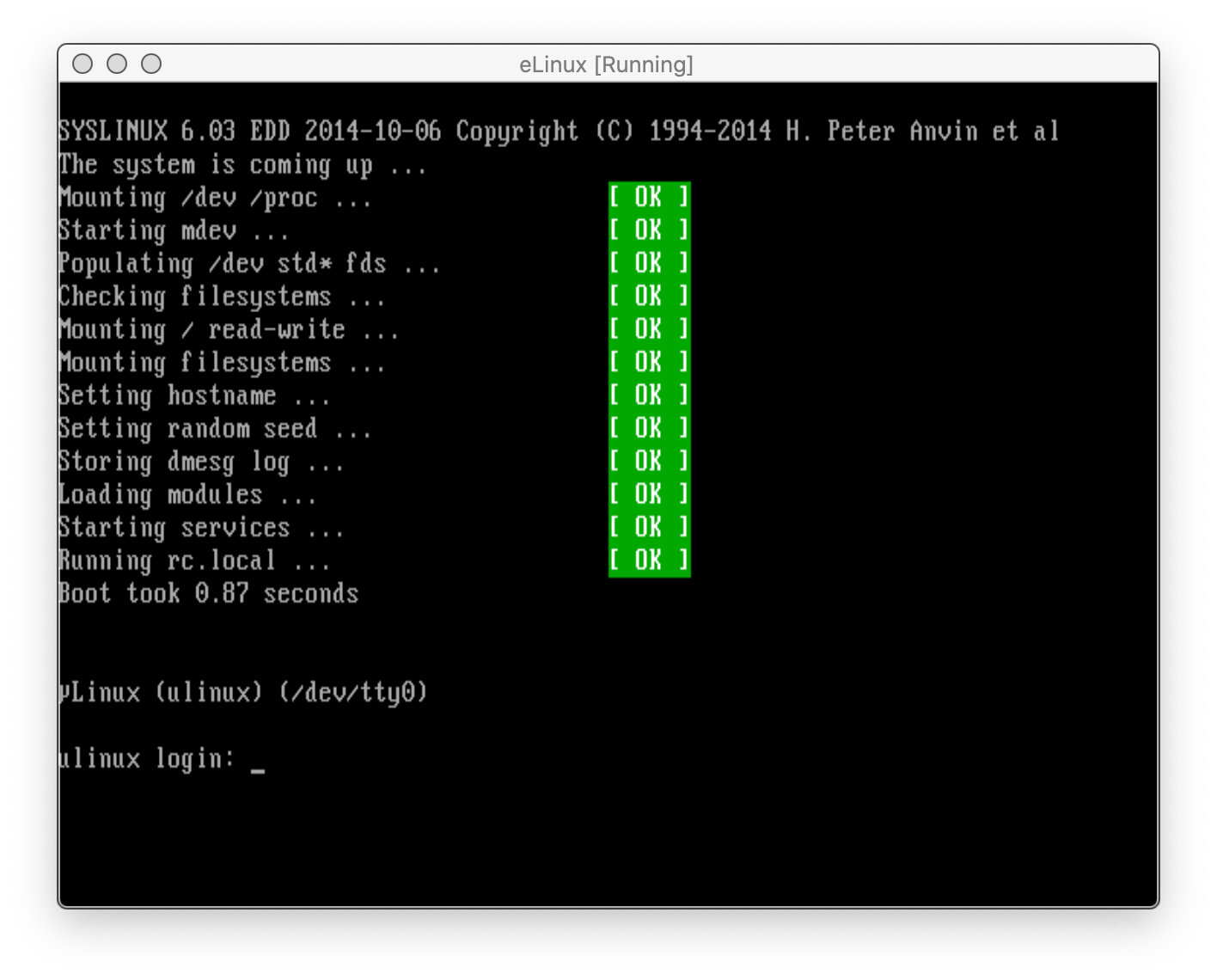

uLinux is designed for high performance and a small footprint, and is expected to boot within ~1s on a modern system (see the screenshots).

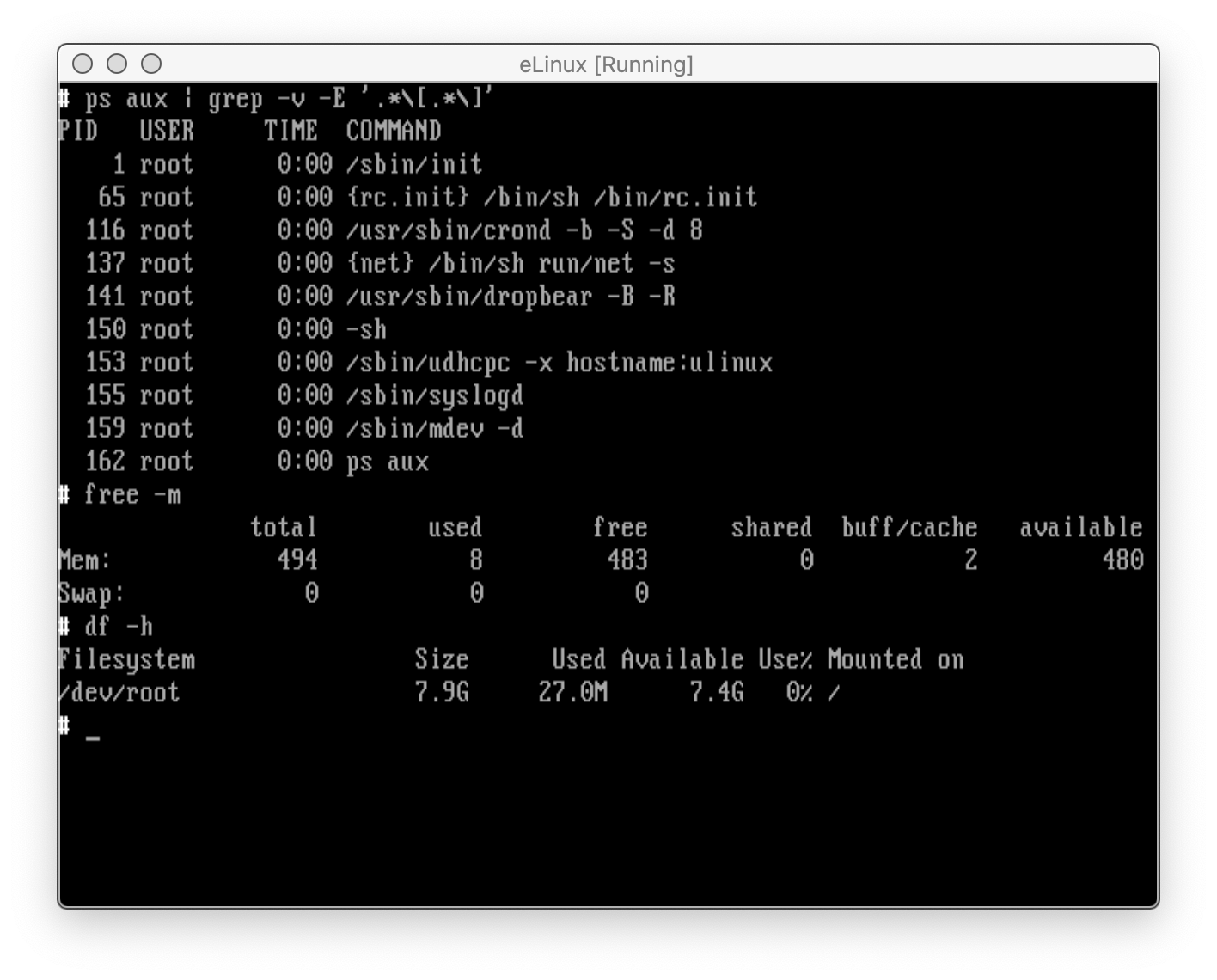

uLinux will boot on as little as 64MB of RAM! Yes, that's right!

Other notable attributes:

- ~3MB Kernel

- ~6MB Root FS (compressed)

- ~10MB Hybrid ISO

- ~20MB On-Disk Install

The goal is to stick to these attributes as far as we can! The only way to grow your uLinux system once installed is through ports and packages.

uLinux supports full containerization and ships with a very small, lightweight container / sandbox tool called `box` (described below).

Currently supported Container Engines:

- box

- Docker (installed via ports)

uLinux currently supports building C and Assembly, and ships with a minimal toolchain built around the tcc C compiler and the musl C library.

There is no support for GCC/GLIBC.

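To use Docker, you must first install it using ports: update the ports collection, build and add the cgroups-mount and docker packages, then restart the Docker daemon and check that it responds: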

ports -u

cd /usr/ports/cgroups-mount

pkg build

pkg add

cd /usr/ports/docker

pkg build

pkg add

svc -r dockerd

docker ps

uLinux fully supports configuration via CloudInit/CloudConfig (see below).

This repo contains prebuilt images to get you started quickly without having to build anything yourself.

Please see the Release for the latest published versions of:

- `ulinux.iso` -- Hybrid Live ISO with Installer.

- `kernel.gz` -- The Kernel Image (Linux).

- `rootfs.gz` -- The RootFS Image (INITRD).

TODO:

- Add prebuilt Cloud Disk Images.

Once booted with either the Hybrid Live ISO or Kernel + RootFS you can install uLinux to disk by typing in a shell:

# setup /dev/sda

This assumes /dev/sda is the device path of the disk you want to install to.

WARNING: This will automatically partition, format and install uLinux with no questions asked. Please be sure you understand what you are doing.

To build uLinux, first make sure Docker is installed on your system; the build uses it extensively to produce the OS reliably and consistently across all platforms.

NOTE: Docker is not strictly required in order to build uLinux. You may also run the `./main.sh` build script yourself, as long as you have installed all required build dependencies. See Dockerfile.builder for the dependencies that must be available for a successful build.

Also make sure QEMU is installed, as it is used for testing and for booting the OS in a guest VM.

Then just run:

$ make

You can customize the build by overriding the customize_kernel() and customize_rootfs() functions in customize.sh:

- `customize_kernel()` -- This is passed no arguments, and the current directory is the location of the kernel sources being built. You can use `config <value> <config>` to enable/disable kernel options, e.g. `config m MULTIUSER`.

- `customize_rootfs()` -- This is passed the path to the rootfs as its first argument. You may run any series of commands that add things to or remove things from the rootfs.

If any of the customize_* functions fail, the build will fail.
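A minimal customize.sh might look like the sketch below. It assumes the `config` helper is supplied by the uLinux build scripts when customize_kernel() runs (it is not defined here), reuses the `config m MULTIUSER` example from above, and the motd and /etc/issue paths are purely hypothetical choices. Each step returns non-zero on failure, so a broken customization fails the build.

```sh
# customize.sh -- a minimal sketch.
# Assumption: `config` is provided by the uLinux build scripts, not by this file.

customize_kernel() {
  # Runs with the current directory set to the kernel sources being built.
  config m MULTIUSER
}

customize_rootfs() {
  rootfs="$1"   # path to the rootfs being assembled

  # Hypothetical addition: ship a custom message of the day.
  mkdir -p "$rootfs/etc" || return 1
  printf 'Welcome to my custom uLinux build\n' > "$rootfs/etc/motd" || return 1

  # Hypothetical removal: drop a file this build does not need.
  rm -f "$rootfs/etc/issue"
}
```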

uLinux is fully cloud-init capable and supports the following cloud-init platforms:

- Amazon EC2

- OpenStack

- Proxmox VE

- CloudDrive

- need more? file an issue or submit a PR!

uLinux's cloud-init supports a subset of the cloud-config YAML spec, including:

- Disabling the root account

- Setting a user account password

- Adding ssh keys to a user account

- Managing the hosts file

In addition, there is full support for running an arbitrary shell script as the `user-data`.

There is a sample CloudDrive config in the clouddrive directory at the top level of the repo, which can be customized by editing the user-data script and running `make clouddrive`.

uLinux comes with its own set of service management tools called svc and service (which is just a wrapper for svc). Services are stored in /bin/svc.d, which has the following structure:

# cd /bin/svc.d

# find .

.

./bare.sh

./avail

./avail/syslogd

./avail/cloudinit

./avail/ntpd

./avail/mdev

./avail/dnsd

./avail/tfptd

./avail/crond

./avail/rngd

./avail/net

./avail/hwclock

./avail/ftpd

./avail/telnetd

./avail/httpd

./avail/dropbear

./avail/udhcpd

./run

./run/syslogd

./run/cloudinit

./run/mdev

./run/crond

./run/net

./run/hwclock

./run/dropbear

#

Each file listed in /bin/svc.d/avail can be either:

- A shell script or binary that responds to `-k` (kill) or `-s` (start).

- An empty file, or "bare" service, denoting no special requirements; it will be managed by the `bare.sh` wrapper. This is useful for daemons that need no special requirements and only basic configuration.
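The non-bare case can be sketched as a small POSIX shell script. This is a hypothetical service (mydaemon is a placeholder name), and it assumes svc simply executes the file with `-s` or `-k`; the DAEMON, ARGS and PIDFILE variables are illustrative, not a documented uLinux interface.

```sh
#!/bin/sh
# Hypothetical /bin/svc.d/avail/mydaemon -- a minimal sketch of a non-bare service.
# Assumption: svc runs this file with -s to start it and -k to kill it.
# DAEMON, ARGS and PIDFILE are illustrative names, not part of uLinux.

DAEMON="${DAEMON:-/usr/sbin/mydaemon}"
ARGS="${ARGS:-}"
PIDFILE="${PIDFILE:-/var/run/mydaemon.pid}"

start() {
  # $DAEMON and $ARGS are left unquoted on purpose so they split into words.
  $DAEMON $ARGS &
  echo $! > "$PIDFILE"
}

stop() {
  [ -f "$PIDFILE" ] || return 0   # not running: nothing to kill
  kill "$(cat "$PIDFILE")" 2>/dev/null
  rm -f "$PIDFILE"
}

main() {
  case "$1" in
    -s) start ;;
    -k) stop ;;
    *) echo "usage: $0 -s|-k" >&2; return 1 ;;
  esac
}

# Do nothing when run without a flag; otherwise dispatch on it.
[ $# -eq 0 ] || main "$@"
```

Placed in /bin/svc.d/avail, a file like this would then be activated and started with `svc -a mydaemon` and `svc -s mydaemon`.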

Configuration for a service is expected to be stored in /etc/default/<service>, a file in shell format that is sourced. The bare.sh wrapper looks here by default for the configuration of "bare" services. Other services may also store and look for their configuration here, but this is not mandatory.
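Because these files are plain shell, "loading" one just means sourcing it so that its variables are set. The sketch below shows one way a service script might do that; the load_defaults name and its optional directory argument are hypothetical, and bare.sh's actual logic may differ.

```sh
# A minimal sketch: load a service's configuration from /etc/default/<service>.
# load_defaults and its optional second argument are hypothetical helpers.
load_defaults() {
  name="$1"
  dir="${2:-/etc/default}"
  conf="$dir/$name"
  # The file is shell, so sourcing it simply sets its variables in this shell.
  if [ -r "$conf" ]; then
    . "$conf"
  fi
}
```

A service script would typically call `load_defaults mydaemon` early, so that variables such as ARGS come from /etc/default/mydaemon when that file exists.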

svc is the primary tool for managing services:

- `svc -a` -- List available services that can be started or enabled.

- `svc -a <service>` -- Activates the given `<service>`.

- `svc -c` -- Creates the directory structure for /bin/svc.d (done by default).

- `svc -d <service>` -- Deactivates the given `<service>`.

- `svc -k <service>` -- Kills the given `<service>`.

- `svc -r <service>` -- Restarts the given `<service>`.

- `svc -s <service>` -- Starts the given `<service>`.

service is simply a wrapper for svc and supports the following three commands:

- `service start <service>`

- `service stop <service>`

- `service restart <service>`

If you are at all familiar with CRUX or ArchLinux, then the packages, ports and tools provided by uLinux will be somewhat familiar to you.

Packages in uLinux are basic tarballs of a prebuilt piece of software, with one notable exception: they also include the state of the package database, which is used to update the uLinux package database on your system (each package stores its installed state in a separate directory in /var/db/pkg).
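Since each package keeps its installed state in its own directory under /var/db/pkg, the database can be inspected with ordinary shell tools. The sketch below (list_installed is a hypothetical name) assumes each directory is named after its package; `pkg l` remains the supported way to list packages.

```sh
# A minimal sketch: print one installed package name per line.
# Assumption: each directory in the package database is named after its package.
list_installed() {
  db="${1:-/var/db/pkg}"
  for dir in "$db"/*/; do
    [ -d "$dir" ] || continue   # glob matched nothing: empty database
    basename "$dir"
  done
}
```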

Ports in uLinux are very similar to the Pkgfile format of CRUX, with one notable exception: they only support POSIX shell, as that is the only type of shell that ships with uLinux. Therefore you cannot use an array for source=; it must be a space- or newline-delimited string.
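A hypothetical port illustrating the difference: the package name, URLs and patch are placeholders, and the `$SRC`/`$PKG` variables and `# Description:` header are assumed to follow CRUX's Pkgfile conventions rather than taken from uLinux documentation.

```sh
# Description: Hypothetical example port (illustration only)
# URL:         https://example.com/hello
# Maintainer:  Your Name, you at example dot com

name=hello
version=1.0
release=1

# POSIX shell has no arrays, so sources are a space- or newline-delimited
# string instead of CRUX's source=(...) array.
source="https://example.com/releases/$name-$version.tar.gz
        hello-fix-build.patch"

build() {
  # Assumption: as in CRUX, $SRC is the unpack directory and $PKG the staging root.
  cd "$SRC/$name-$version" || return 1
  patch -p1 < "$SRC/hello-fix-build.patch" || return 1
  ./configure --prefix=/usr || return 1
  make || return 1
  make DESTDIR="$PKG" install
}
```

Tools that consume the port can then iterate with `for src in $source; do ...; done`, relying on ordinary shell word splitting.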

Configuration for pkg and ports is stored in /etc/pkg.conf, where you can customize the behavior of the packaging and ports tools. The most notable configuration options currently are:

- `URL` -- The base URL of a web resource from which to synchronize a collection of ports.

- `PKG_PORTSDIR` -- The path to the primary ports collection on disk.

NOTE: ports does not currently support multiple collections.
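A sketch of /etc/pkg.conf, assuming it is a shell-format file like the rest of uLinux's configuration. The URL value is a placeholder, not the real ports mirror; /usr/ports matches the ports paths used earlier in this README.

```sh
# /etc/pkg.conf -- a sketch, assuming the file is sourced as POSIX shell.

# Hypothetical mirror: replace with the real ports collection URL.
URL="https://ports.example.com/ulinux"

# Primary (and currently only) ports collection on disk.
PKG_PORTSDIR="/usr/ports"
```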

What follows is a brief description of the tools available and their usage.

pkg is the primary tool for managing packages installed on a uLinux system. See its help output for usage details:

# pkg

=> pkg [a]dd pkg.tar.gz

=> pkg [b]uild

=> pkg [c]hecksum

=> pkg [d]el pkg

=> pkg [l]ist [pkg]

ports updates and synchronizes the ports collection on disk, as configured by PKG_PORTSDIR, with a remote collection specified by URL. The remote collection must contain the ports themselves as well as the index file generated by repogen, and each file must be retrievable by wget.

There are a number of other utilities that (currently) live outside of pkg and ports:

- `repogen` -- Creates the `index` for the current directory of ports, suitable for hosting by a basic web server. This `index` is the first resource that `ports` looks for in order to synchronize ports.

- `prtcreate` -- Creates a blank basic skeleton of a port and its `Pkgfile` in /usr/ports.

uLinux ships with a simple container / sandbox tool called `box`. It offers a familiar user experience if you are used to Docker (though of course it is nowhere near as feature-rich!).

Simply run box to get a shell in an environment that is fully isolated from your system's mounts, namespaces, PIDs, devices, etc. The only part shared with the host is the host's networking.

# box

KhJpKC / # ps aux

PID USER TIME COMMAND

1 root 0:00 /bin/sh

6 root 0:00 ps aux

Or run box <command> like so:

# box echo 'I am pid $$'

I am pid 1

enter-chroot is mostly useful as a way to debug and enter the environment of an installed system when uLinux is used as a "rescue" system:

$ enter-chroot /path/to/rootfs

NOTE: You must have already mounted the root file system at the desired path before running enter-chroot /path/to/rootfs.

To test uLinux in a guest VM, just run:

$ make test

This uses QEMU. On macOS we use the NanoVMs Homebrew formula of QEMU, which supports hardware acceleration:

brew tap nanovms/homebrew-qemu

brew install nanovms/homebrew-qemu/qemu

The OS comes pre-shipped with Dropbear (an SSH daemon) as well as a Busybox userland. The test script (test.sh) also uses QEMU and sets up a user-mode network which forwards host port 2222 to the guest VM for SSH access.

You can access the guest with:

$ ssh -p 2222 root@localhost

uLinux is licensed under the terms of the MIT License.