1704 🤖 Machine Learning, Data Science & Python Interview Questions (ANSWERED) To Land Your Next Six-Figure Job Offer from MLStack.Cafe

MLStack.Cafe is the biggest hand-picked collection of top Machine Learning, Data Science, Python and Coding interview questions for Junior and Experienced data analyst, machine learning engineers/developers and data scientists with more that 1704 ML & DS interview questions and answers. Prepare for your next ML, DS & Python interview and land 6-figure job offer in no time.

🔴 Get All 1704 Answers + PDFs + Latex Math on MLStack.Cafe - Kill Your ML, DS & Python Interview

👨💻 Hiring Data Analysts, Machine Learning Engineers or Developers? Post your Job on MLStack.Cafe and reach thousands of motivated engineers who is looking for a ML Job right now!

- Anomaly Detection

- Autoencoders

- Bias & Variance

- Big Data

- Big-O Notation

- Classification

- Clustering

- Cost Function

- Data Structures

- Databases

- Datasets

- Decision Trees

- Deep Learning

- Dimensionality Reduction

- Ensemble Learning

- Genetic Algorithms

- Gradient Descent

- K-Means Clustering

- K-Nearest Neighbors

- Linear Algebra

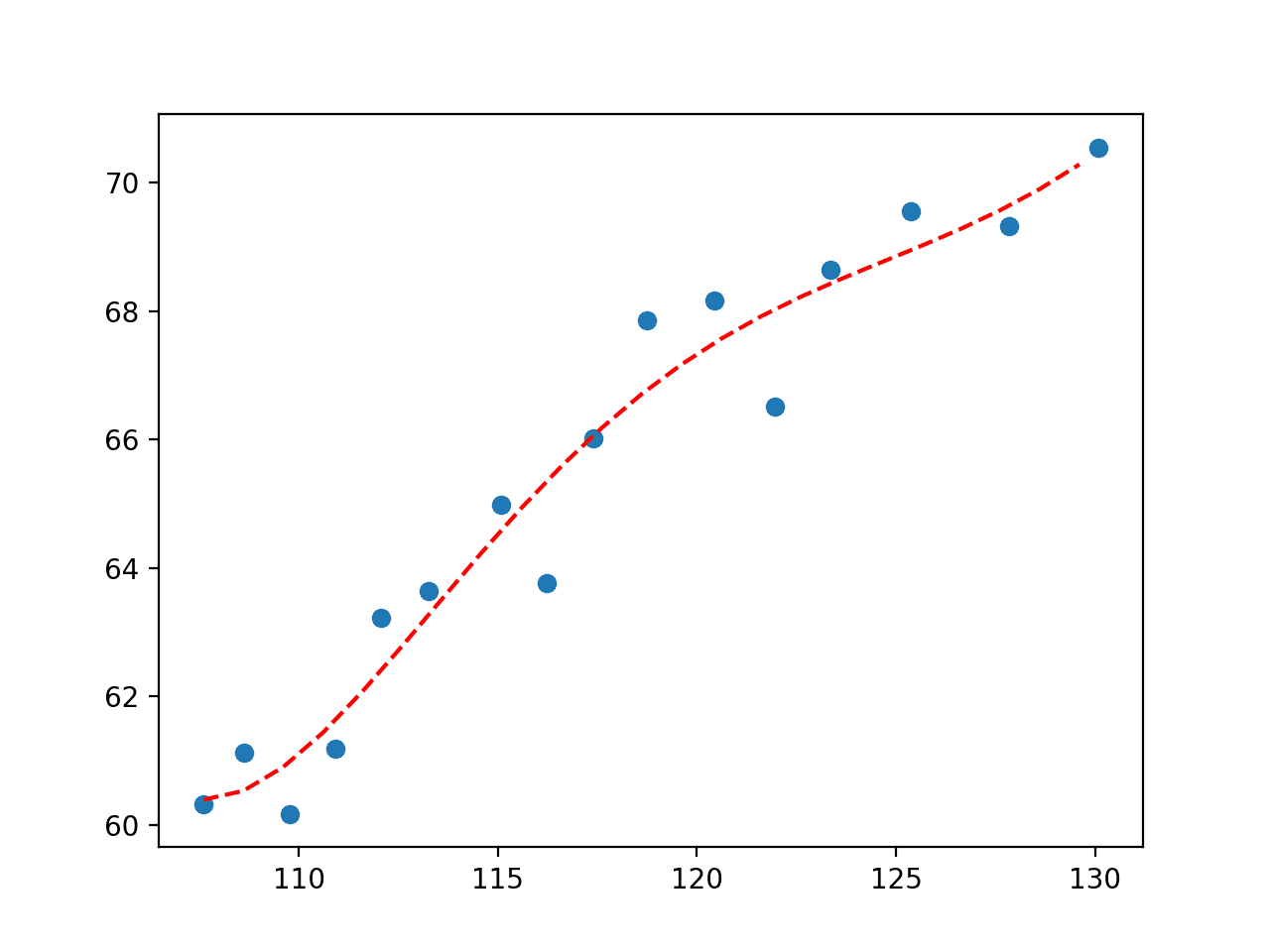

- Linear Regression

- Logistic Regression

- Machine Learning

- Model Evaluation

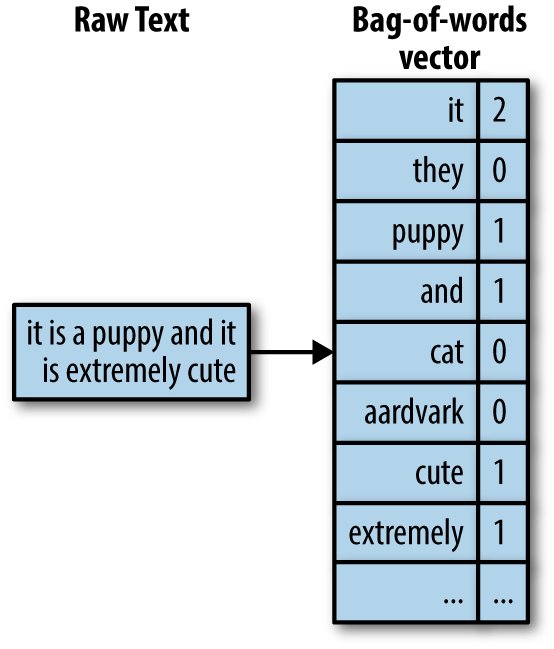

- Natural Language Processing

- Naïve Bayes

- Neural Networks

- NumPy

- Optimization

- Pandas

- Probability

- Python

- Random Forests

- SQL

- SVM

- Scikit-Learn

- Searching

- Sorting

- Statistics

- Supervised Learning

- TensorFlow

- Unsupervised Learning

[⬆] Anomaly Detection Interview Questions

Anomaly detection (or outlier detection) is the identification of rare items, events or observations which raise suspicions by differing significantly from the majority of the data.

Source: towardsdatascience.com

- The goal of anomaly detection is to identify cases that are unusual within data that is seemingly comparable hence anomaly detection can be used effectively as a tool for risk mitigation and fraud detection.

- When preparing datasets for machine learning models, it is really important to detect all the outliers and either get rid of them or analyze them to know why you had them there in the first place.

Source: towardsdatascience.com

Normalization rescales the values into a range of [0,1]. This might be useful in some cases where all parameters need to have the same positive scale. However, the outliers from the data set are lost.

Standardization rescales data to have a mean (

For most applications standardization is recommended.

Source: stats.stackexchange.com

The Median is the most suitable measure of central tendency for skewed distributions or distributions with outliers. For example, the median is often used as a measure of central tendency for income distributions, which are generally highly skewed.

Because the median only uses one or two values, it’s unaffected by extreme outliers or non-symmetric distributions of scores. In contrast, the mean and mode can vary in skewed distributions.

Source: en.wikipedia.org

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

[⬆] Autoencoders Interview Questions

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q5: What are some differences between the Undercomplete Autoencoder and the Sparse Autoencoder? ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

[⬆] Bias & Variance Interview Questions

In supervised machine learning an algorithm learns a model from training data.

The goal of any supervised machine learning algorithm is to best estimate the mapping function (f) for the output variable (Y) given the input data (X). The mapping function is often called the target function because it is the function that a given supervised machine learning algorithm aims to approximate.

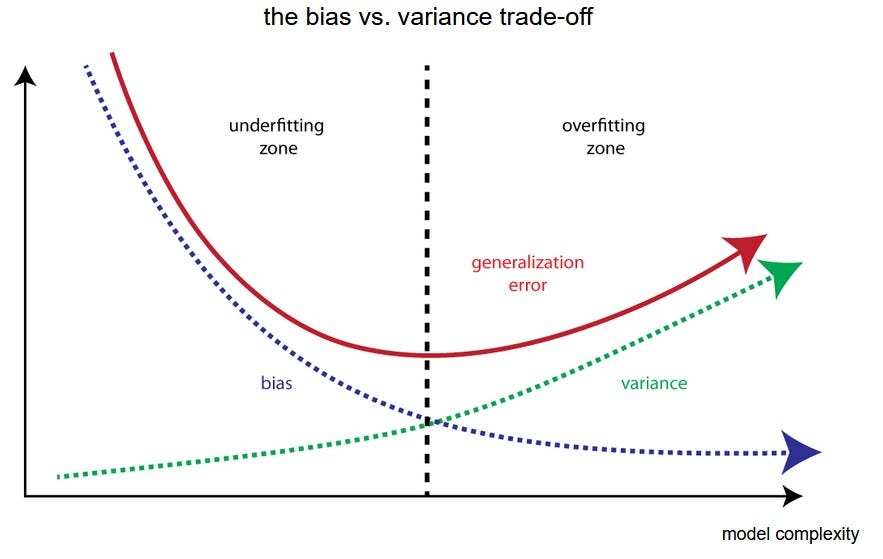

Bias are the simplifying assumptions made by a model to make the target function easier to learn.

Generally, linear algorithms have a high bias making them fast to learn and easier to understand but generally less flexible.

-

Examples of low-bias machine learning algorithms include: Decision Trees, k-Nearest Neighbors and Support Vector Machines.

-

Examples of high-bias machine learning algorithms include: Linear Regression, Linear Discriminant Analysis and Logistic Regression.

Source: machinelearningmastery.com

-

High Bias can cause an algorithm to miss the relevant relations between features and target outputs (underfitting).

-

High Variance may result from an algorithm modeling random noise in the training data (overfitting).

-

The Bias-Variance tradeoff is a central problem in supervised learning. Ideally, a model should be able to accurately capture the regularities in its training data, but also generalize well to unseen data.

-

It is called a tradeoff because it is typically impossible to do both simultaneously:

- Algorithms with high variance will be prone to overfitting the dataset, but

- Algorithms with high bias will underfit the dataset.

Source: en.wikipedia.org

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

[⬆] Big-O Notation Interview Questions

Big-O notation (also called "asymptotic growth" notation) is a relative representation of the complexity of an algorithm. It shows how an algorithm scales based on input size. We use it to talk about how thing scale. Big O complexity can be visualized with this graph:

Source: stackoverflow.com

Say we have an array of n elements:

int array[n];If we wanted to access the first (or any) element of the array this would be O(1) since it doesn't matter how big the array is, it always takes the same constant time to get the first item:

x = array[0];Source: stackoverflow.com

Big-O is often used to make statements about functions that measure the worst case behavior of an algorithm. Worst case analysis gives the maximum number of basic operations that have to be performed during execution of the algorithm. It assumes that the input is in the worst possible state and maximum work has to be done to put things right.

Source: stackoverflow.com

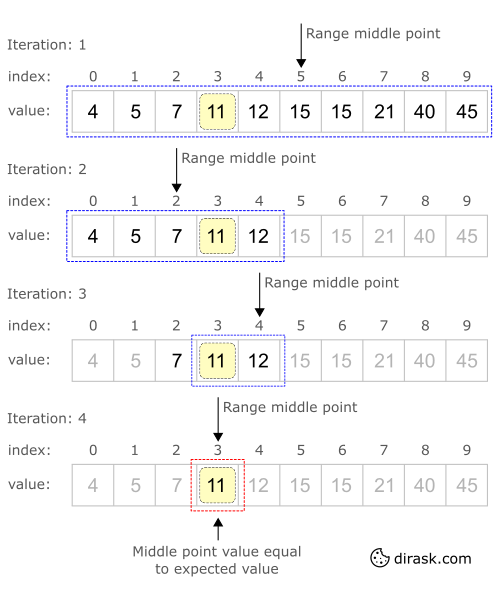

O(log n) means for every element, you're doing something that only needs to look at log N of the elements. This is usually because you know something about the elements that let you make an efficient choice (for example to reduce a search space).

The most common attributes of logarithmic running-time function are that:

- the choice of the next element on which to perform some action is one of several possibilities, and

- only one will need to be chosen

or

- the elements on which the action is performed are digits of

n

Most efficient sorts are an example of this, such as merge sort. It is O(log n) when we do divide and conquer type of algorithms e.g binary search. Another example is quick sort where each time we divide the array into two parts and each time it takes O(N) time to find a pivot element. Hence it N O(log N)

Plotting log(n) on a plain piece of paper, will result in a graph where the rise of the curve decelerates as n increases:

Source: stackoverflow.com

The fact is it's difficult to determine the exact runtime of an algorithm. It depends on the speed of the computer processor. So instead of talking about the runtime directly, we use Big O Notation to talk about how quickly the runtime grows depending on input size.

With Big O Notation, we use the size of the input, which we call n. So we can say things like the runtime grows “on the order of the size of the input” (O(n)) or “on the order of the square of the size of the input” (O(n2)). Our algorithm may have steps that seem expensive when n is small but are eclipsed eventually by other steps as n gets larger. For Big O Notation analysis, we care more about the stuff that grows fastest as the input grows, because everything else is quickly eclipsed as n gets very large.

Source: medium.com

O(n2) means for every element, you're doing something with every other element, such as comparing them. Bubble sort is an example of this.

Source: stackoverflow.com

Let's say we wanted to find a number in the list:

for (int i = 0; i < n; i++){

if(array[i] == numToFind){ return i; }

}What will be the time complexity (Big O) of that code snippet?

This would be O(n) since at most we would have to look through the entire list to find our number. The Big-O is still O(n) even though we might find our number the first try and run through the loop once because Big-O describes the upper bound for an algorithm.

Source: stackoverflow.com

O(1). Note, you don't have to insert at the end of the list. If you insert at the front of a (singly-linked) list, they are both O(1).

Stack contains 1,2,3:

[1]->[2]->[3]Push 5:

[5]->[1]->[2]->[3]Pop:

[1]->[2]->[3] // returning 5Source: stackoverflow.com

Let's consider a traversal algorithm for traversing a list.

O(1)denotes constant space use: the algorithm allocates the same number of pointers irrespective to the list size. That will happen if we move (reuse) our pointer along the list.- In contrast,

O(n)denotes linear space use: the algorithm space use grows together with respect to the input sizen. That will happen if let's say for some reason the algorithm needs to allocate 'N' pointers (or other variables) when traversing a list.

Source: stackoverflow.com

Consider:

f(x) = log n + 3nWhat is the big O notation of this function?

It is simply O(n).

When you have a composite of multiple parts in Big O notation which are added, you have to choose the biggest one. In this case it is O(3n), but there is no need to include constants inside parentheses, so we are left with O(n).

Source: stackoverflow.com

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q14: What is meant by "Constant Amortized Time" when talking about time complexity of an algorithm? ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q22: What are some algorithms which we use daily that has O(1), O(n log n) and O(log n) complexities? ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

[⬆] Classification Interview Questions

We call it naive because its assumptions (it assumes that all of the features in the dataset are equally important and independent) are really optimistic and rarely true in most real-world applications:

- we consider that these predictors are independent

- we consider that all the predictors have an equal effect on the outcome (like the day being windy does not have more importance in deciding to play golf or not)

Source: towardsdatascience.com

- A Perceptron is a fundamental unit of a Neural Network that is also a single-layer Neural Network.

- Perceptron is a linear classifier. Since it uses already labeled data points, it is a supervised learning algorithm.

- The activation function applies a step rule (convert the numerical output into +1 or -1) to check if the output of the weighting function is greater than zero or not.

A Perceptron is shown in the figure below:

Source: towardsdatascience.com

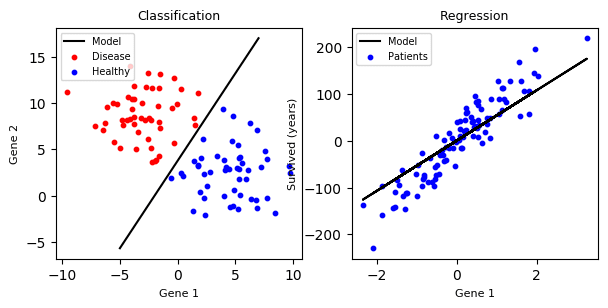

A decision boundary is a line or a hyperplane that separates the classes. This is what we expect to obtain from logistic regression, as with any other classifier. With this, we can figure out some way to split the data to allow for an accurate prediction of a given observation’s class using the available information.

In the case of a generic two-dimensional example, the split might look something like this:

Source: medium.com

-

Logistic regression: ideally used for classification of binary variables. Implements the sigmoid function to calculate the probability that a data point belongs to a certain class.

-

K-Nearest Neighbours (kNN): calculate the distance of one data point from every other data point and then takes a majority vote from k-nearest neighbors of each data points to classify the output.

-

Decision trees: use multiple if-else statements in the form of a tree structure that includes nodes and leaves. The nodes breaking down the one major structure into smaller structures and eventually providing the final outcome.

-

Random Forest: uses multiple decision trees to predict the outcome of the target variable. Each decision tree provides its own outcome and then it takes the majority vote to classify the final outcome.

-

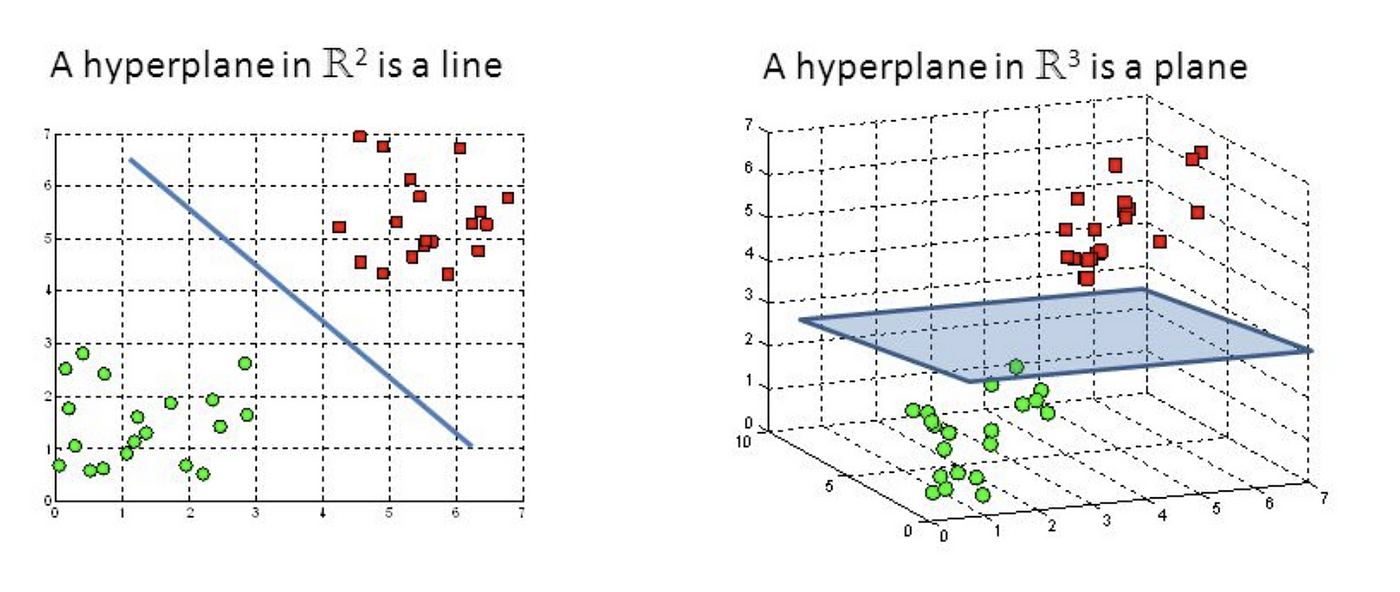

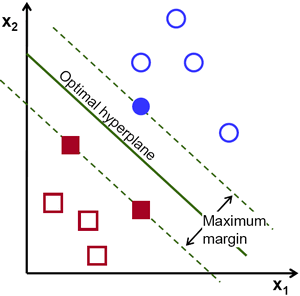

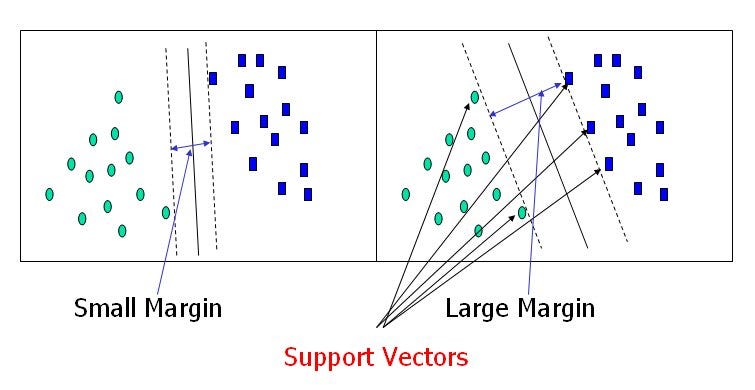

Support Vector Machines: it creates an n-dimensional space for the n number of features in the dataset and then tries to create the hyperplanes such that it divides and classifies the data points with the maximum margin possible.

Source: www.upgrad.com

-

K-nearest neighbors or KNN is a supervised classification algorithm. This means that we need labeled data to classify an unlabeled data point. It attempts to classify a data point based on its proximity to other

K-data points in the feature space. -

K-means Clustering is an unsupervised classification algorithm. It requires only a set of unlabeled points and a threshold

K, so it gathers and groups data intoKnumber of clusters.

Source: www.quora.com

There is not a rule of thumb to choose a standard optimal k. This value depends and varies from dataset to dataset, but as a general rule, the main goal is to keep it:

- small enough to exclude the samples of the other classes but

- large enough to minimize any noise in the data.

A way to looking for this optimal parameter, commonly called the Elbow method, consist in creating a for loop that trains various KNN models with different k values, keeping track of the error for each of these models, and use the model with the k value that achieves the best accuracy.

Source: medium.com

In Logistic regression models, we are modeling the probability that an input (X) belongs to the default class (Y=1), that is to say:

where the P(X) values are given by the logistic function,

The β0 and β1 values are estimated during the training stage using maximum-likelihood estimation or gradient descent. Once we have it, we can make predictions by simply putting numbers into the logistic regression equation and calculating a result.

For example, let's consider that we have a model that can predict whether a person is male or female based on their height, such as if P(X) ≥ 0.5 the person is male, and if P(X) < 0.5 then is female.

During the training stage we obtain β0 = -100 and β1 = 0.6, and we want to evaluate what's the probability that a person with a height of 150cm is male, so with that intention we compute:

Given that logistic regression solves a classification task, we can use directly this value to predict that the person is a female.

Source: machinelearningmastery.com

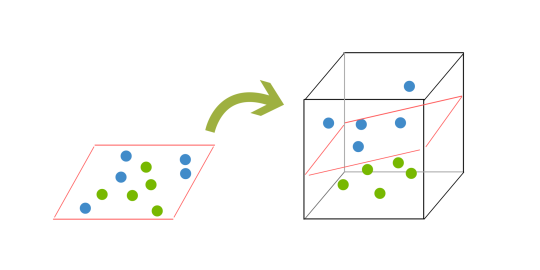

When it comes to classification problems, the goal is to establish a decision boundary that maximizes the margin between the classes. However, in the real world, this task can become difficult when we have to treat with non-linearly separable data. One approach to solve this problem is to perform a data transformation process, in which we map all the data points to a higher dimension find the boundary and make the classification.

That sounds alright, however, when there are more and more dimensions, computations within that space become more and more expensive. In such cases, the kernel trick allows us to operate in the original feature space without computing the coordinates of the data in a higher-dimensional space and therefore offers a more efficient and less expensive way to transform data into higher dimensions.

There exist different kernel functions, such as:

- linear,

- nonlinear,

- polynomial,

- radial basis function (RBF), and

- sigmoid.

Each one of them can be suitable for a particular problem depending on the data.

Source: medium.com

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q11: What is the difference between a Weak Learner vs a Strong Learner and why they could be usefu? ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q17: How would you use Naive Bayes classifier for categorical features? What if some features are numerical? ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Q18: What's the difference between Generative Classifiers and Discriminative Classifiers? Name some examples of each one ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q25: What are some advantages and disadvantages of using AUC to measure the performance of the model? ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q28: Name some advantages of using Support Vector Machines vs Logistic Regression for classification ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q31: What's the difference between Random Oversampling and Random Undersampling and when they can be used? ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q34: What are the trade-offs between the different types of Classification Algorithms? How would do you choose the best one? ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

[⬆] Clustering Interview Questions

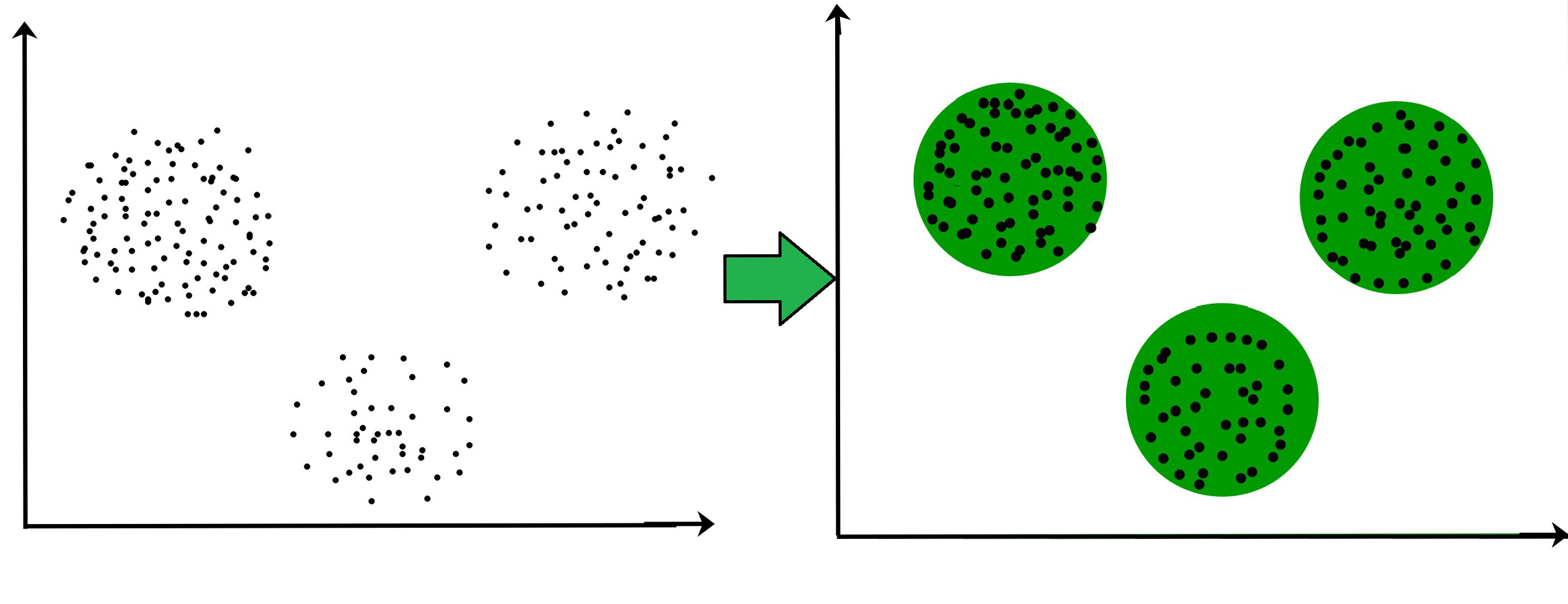

- Cluster analysis is also called clustering.

- It is the task of grouping a set of objects in such a way that objects in the same cluster are more similar to each other than to those in other clusters.

- Cluster analysis itself is not one specific algorithm, but the general task to be solved. It can be achieved by various algorithms that differ significantly in their understanding of what constitutes a cluster and how to efficiently find them.

Source: Handbook of Cluster Analysis from Chapman and Hall/CRC

- Clustering, when the data are similar pairs of points is called similarity-based clustering.

- A typical example of similarity-based clustering is community detection in social networks, where the observations are individual links between people, which may be due to friendship, shared interests, and work relationships. The strength of a link can be the frequency of interactions, for example, communications by e-mail, phone, or other social media, co-authorships, or citations.

- In this clustering paradigm, the points to be clustered are not assumed to be part of a vector space. Their attributes (or features) are incorporated into a single dimension, the link strength, or similarity, which takes a numerical value

$$S_{ij}$$ for each pair of pointsi,j. Hence, the natural representation for this problem is by means of the similarity matrix given below: $$ S=[S_{ij}]{i,j=1}^n $$ The similarities are symmetric $$S{ij} = S_{ji}$$ and nonnegative$$S_{ij} \geq 0$$ .

Source: Handbook of Cluster Analysis from Chapman and Hall/CRC

- Identifying cancerous data: Initially we take known samples of a cancerous and non-cancerous dataset, and label both the samples dataset. Then both the samples are mixed and different clustering algorithms are applied to the mixed samples dataset. It has been found through experiments that a cancerous dataset gives the best results with unsupervised non-linear clustering algorithms.

- Search engines: Search engines try to group similar objects in one cluster and the dissimilar objects far from each other. It provides results for the searched data according to the nearest similar object which is clustered around the data to be searched.

- Wireless sensor network's based application: Clustering algorithm can be used effectively in Wireless Sensor Network's based application. One application where it can be used is in Landmine detection. The clustering algorithm plays the role of finding the Cluster heads (or cluster center) which collects all the data in its respective cluster.

Source: sites.google.com

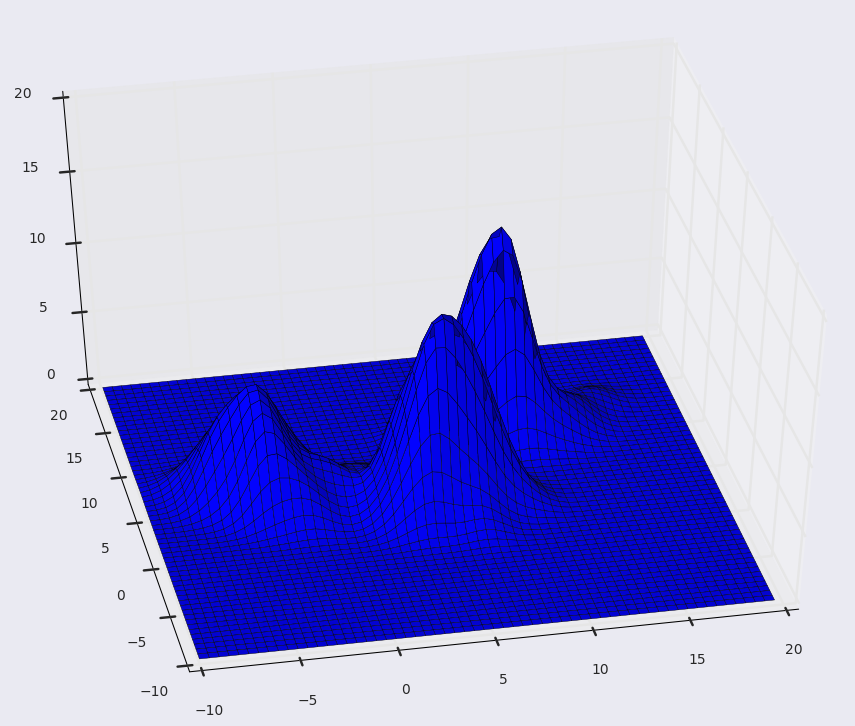

- Mean Shift is a non-parametric feature-space analysis technique for locating the maxima of a density function. What we're trying to achieve here is, to keep shifting the window to a region of higher density.

- We can understand this algorithm by thinking of our data points to be represented as a probability density function. Naturally, in a probability function, higher density regions will correspond to the regions with more points, and lower density regions will correspond to the regions with less points. In clustering, we need to find clusters of points, i.e the regions with a lot of points together. More points together mean higher density. Hence, we observe that clusters of points are more like the higher density regions in our probability density function.

So, we must iteratively go from lower density to higher density regions, in order to find our clusters.

-

The mean shift method is an iterative method, and we start with an initial estimate

x. Let a kernel function$$K(x_i - x)$$ be given. This function determines the weight of nearby points for re-estimation of the mean. Typically a Gaussian kernel on the distance to the current estimate is used, $$ K(x_i-x)= e^{-c|x_i-x|^2} $$ The weighted mean of the density in the window determined byKis $$ m(x) = \frac{\sum_{x_i \in N(x)} K(x_i - x) x_i}{\sum_{x_i \in N(x) K(x_i - x)}} $$ whereN(x)is the neighborhood ofx, a set of points for which$$K(x_i) \neq 0$$ . -

The difference

m(x) - xis called mean shift. The mean-shift algorithm now sets$$m(x) \to x$$ , and repeats the estimation untilm(x)converges. It means, after a sufficient number of steps, the position of the centroid of all the points, and the current location of the window will coincide. This is when we reach convergence, as no new points are added to our window in this step.

Source: en.wikipedia.org

- Self-Organizing Maps (SOMs) are a class of self-organizing clustering techniques.

- It is an unsupervised form of artificial neural networks. A self-organizing map consists of a set of neurons that are arranged in a rectangular or hexagonal grid. Each neuronal unit in the grid is associated with a numerical vector of fixed dimensionality. The learning process of a self-organizing map involves the adjustment of these vectors to provide a suitable representation of the input data.

- Self-organizing maps can be used for clustering numerical data in vector format.

Source: medium.com

- Significance testing addresses an important aspect of cluster validation. Many cluster analysis methods will deliver clusterings even for homogeneous data. They assume implicitly that clustering has to be found, regardless of whether this is meaningful or not.

A critical and challenging question in cluster analysis is whether the identified clusters represent important underlying structure or are artifacts of natural sampling variation.

- Significance testing is performed to distinguish between a clustering that reflects meaningful heterogeneity in the data and an artificial clustering of homogeneous data.

- Significance testing is also used for more specific tasks in cluster analysis, such as; estimating the number of clusters, and for interpreting some or all of the individual clusters, to show the significance of the individual clusters.

Source: www.ncbi.nlm.nih.gov

Multiclass classification means a classification task with more than two classes; e.g., classify a set of images of fruits which may be oranges, apples, or pears. Multiclass classification makes the assumption that each sample is assigned to one and only one label: a fruit can be either an apple or a pear but not both at the same time.

Multilabel classification assigns to each sample a set of target labels. This can be thought of as predicting properties of a data-point that are not mutually exclusive, such as topics that are relevant for a document. A text might be about any of religion, politics, finance or education at the same time or none of these.

Source: stats.stackexchange.com

The Jaccard index, also known as the Jaccard similarity coefficient, is a statistic used for gauging the similarity and diversity of sample sets. The Jaccard coefficient measures similarity between finite sample sets, and is defined as the size of the intersection divided by the size of the union of the sample sets:

Source: en.wikipedia.org

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q11: What are some different types of Clustering Structures that are used in Clustering Algorithms? ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q25: When using various Clustering Algorithms, why is Euclidean Distance not a good metric in High Dimensions? ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q27: How would you choose the number of Clusters when designing a K-Medoid Clustering Algorithm? ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q31: How to tell if data is clustered enough for clustering algorithms to produce meaningful results? ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

[⬆] Cost Function Interview Questions

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

[⬆] Data Structures Interview Questions

A Stack is a container of objects that are inserted and removed according to the last-in first-out (LIFO) principle. In the pushdown stacks only two operations are allowed: push the item into the stack, and pop the item out of the stack.

There are basically three operations that can be performed on stacks. They are:

- inserting an item into a stack (push).

- deleting an item from the stack (pop).

- displaying the contents of the stack (peek or top).

A stack is a limited access data structure - elements can be added and removed from the stack only at the top. push adds an item to the top of the stack, pop removes the item from the top. A helpful analogy is to think of a stack of books; you can remove only the top book, also you can add a new book on the top.

Source: www.cs.cmu.edu

A stack is a recursive data structure, so it's:

- a stack is either empty or

- it consists of a top and the rest which is a stack by itself;

Source: www.cs.cmu.edu

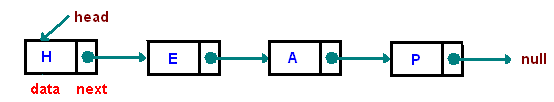

A linked list is a linear data structure where each element is a separate object. Each element (we will call it a node) of a list is comprising of two items - the data and a reference (pointer) to the next node. The last node has a reference to null. The entry point into a linked list is called the head of the list. It should be noted that head is not a separate node, but the reference to the first node. If the list is empty then the head is a null reference.

Source: www.cs.cmu.edu

Arrays are:

- Finite (fixed-size) - An array is finite because it contains only limited number of elements.

- Order -All the elements are stored one by one , in contiguous location of computer memory in a linear order and fashion

- Homogenous - All the elements of an array are of same data types only and hence it is termed as collection of homogenous

Source: codelack.com

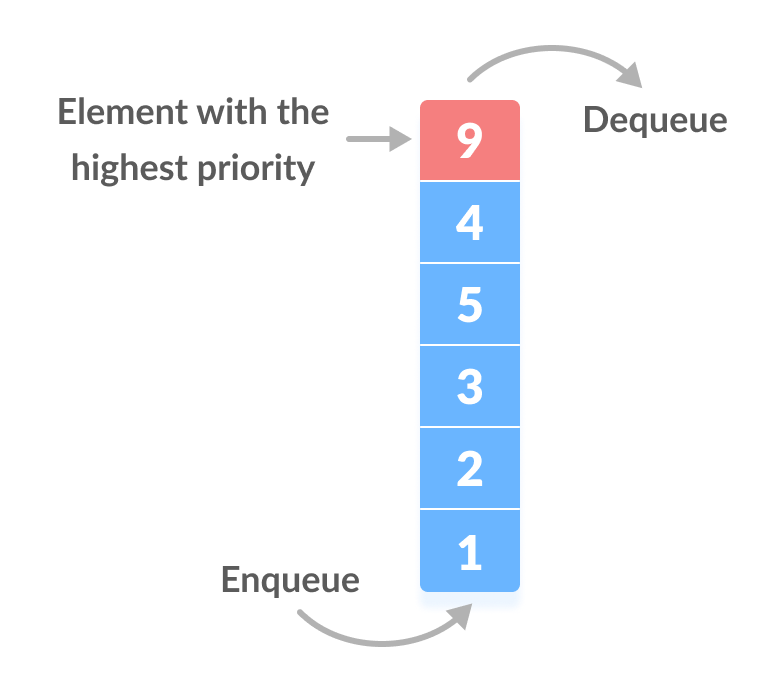

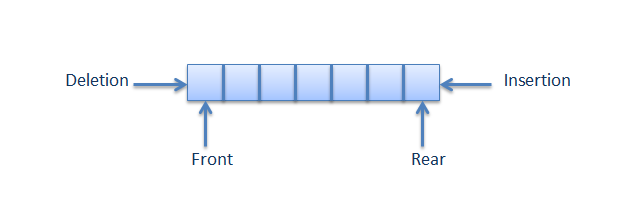

A queue is a container of objects (a linear collection) that are inserted and removed according to the first-in first-out (FIFO) principle. The process to add an element into queue is called Enqueue and the process of removal of an element from queue is called Dequeue.

Source: www.cs.cmu.edu

A Heap is a special Tree-based data structure which is an almost complete tree that satisfies the heap property:

- in a max heap, for any given node C, if P is a parent node of C, then the key (the value) of P is greater than or equal to the key of C.

- In a min heap, the key of P is less than or equal to the key of C. The node at the "top" of the heap (with no parents) is called the root node.

A common implementation of a heap is the binary heap, in which the tree is a binary tree.

Source: www.geeksforgeeks.org

Time Complexity: None Space Complexity: None

A hash table (hash map) is a data structure that implements an associative array abstract data type, a structure that can map keys to values. Hash tables implement an associative array, which is indexed by arbitrary objects (keys). A hash table uses a hash function to compute an index, also called a hash value, into an array of buckets or slots, from which the desired value can be found.

Source: en.wikipedia.org

A priority queue is a data structure that stores priorities (comparable values) and perhaps associated information. A priority queue is different from a "normal" queue, because instead of being a "first-in-first-out" data structure, values come out in order by priority. Think of a priority queue as a kind of bag that holds priorities. You can put one in, and you can take out the current highest priority.

Source: pages.cs.wisc.edu

Time Complexity: None Space Complexity: None

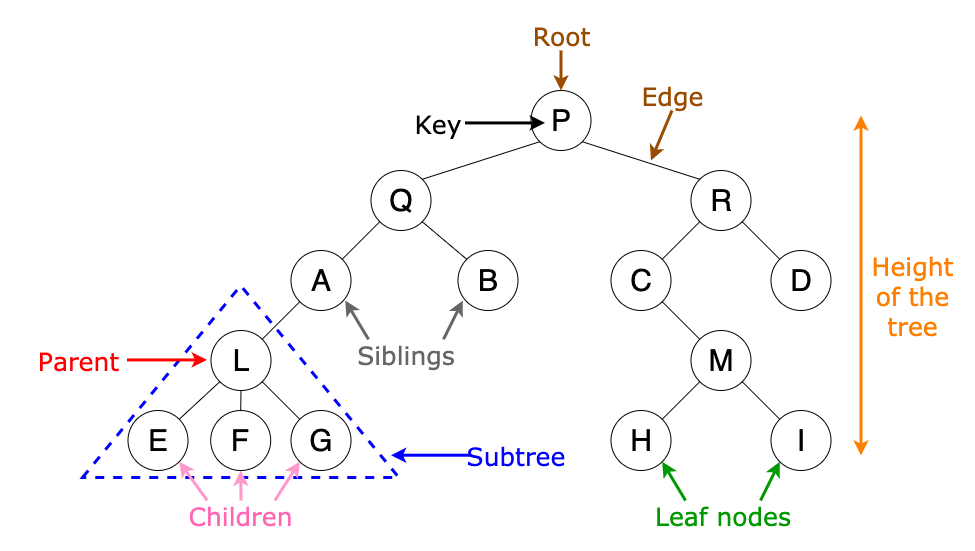

Trees are well-known as a non-linear data structure. They don’t store data in a linear way. They organize data hierarchically.

A tree is a collection of entities called nodes. Nodes are connected by edges. Each node contains a value or data or key, and it may or may not have a child node. The first node of the tree is called the root. Leaves are the last nodes on a tree. They are nodes without children.

Source: www.freecodecamp.org

Time Complexity: None Space Complexity: None

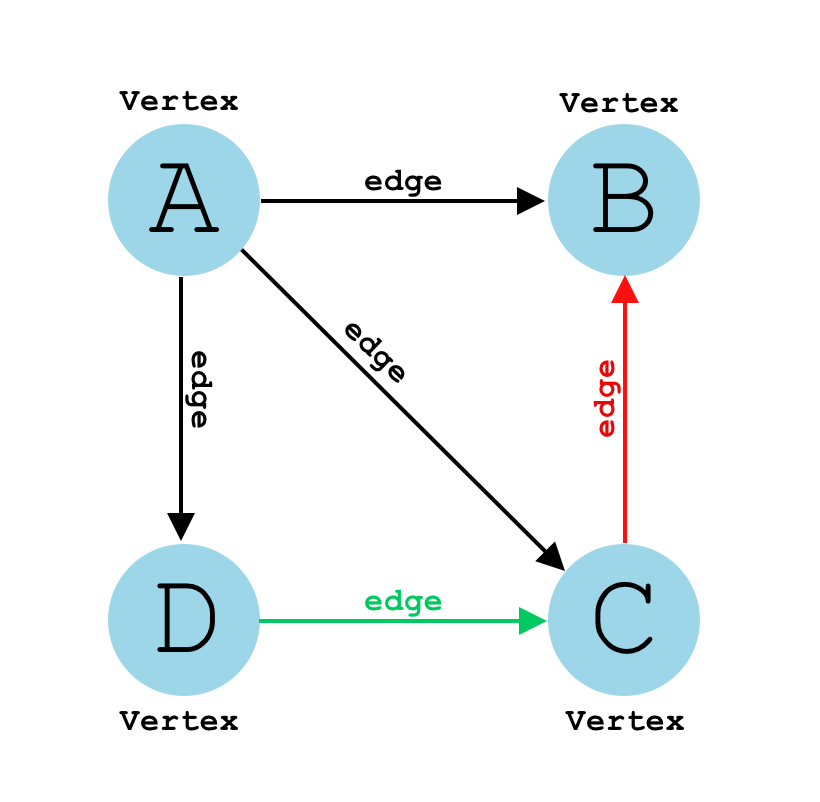

A graph is a common data structure that consists of a finite set of nodes (or vertices) and a set of edges connecting them. A pair (x,y) is referred to as an edge, which communicates that the x vertex connects to the y vertex.

Graphs are used to solve real-life problems that involve representation of the problem space as a network. Examples of networks include telephone networks, circuit networks, social networks (like LinkedIn, Facebook etc.).

Source: www.educative.io

Time Complexity: None Space Complexity: None

A string is generally considered as a data type and is often implemented as an array data structure of bytes (or words) that stores a sequence of elements, typically characters, using some character encoding. String may also denote more general arrays or other sequence (or list) data types and structures.

Source: dev.to

Time Complexity: None Space Complexity: None

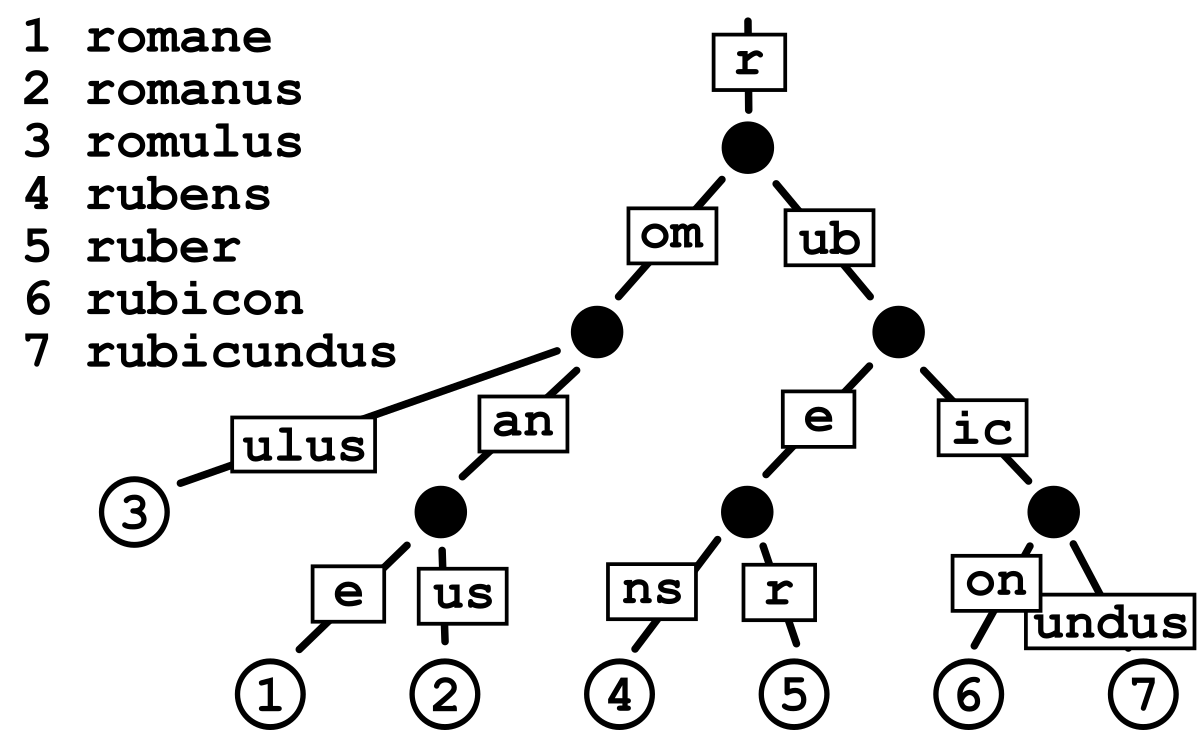

Trie (also called **digital tree **or prefix tree) is a tree-based data structure, which is used for efficient retrieval of a key in a large data-set of strings. Unlike a binary search tree, no node in the tree stores the key associated with that node; instead, its position in the tree defines the key with which it is associated; i.e., the value of the key is distributed across the structure. All the descendants of a node have a common prefix of the string associated with that node, and the root is associated with the empty string. Each complete English word has an arbitrary integer value associated with it (see image).

Source: medium.com

Time Complexity: None Space Complexity: None

A normal tree has no restrictions on the number of children each node can have. A binary tree is made of nodes, where each node contains a "left" pointer, a "right" pointer, and a data element.

There are three different types of binary trees:

- Full binary tree: Every node other than leaf nodes has 2 child nodes.

- Complete binary tree: All levels are filled except possibly the last one, and all nodes are filled in as far left as possible.

- Perfect binary tree: All nodes have two children and all leaves are at the same level.

Source: study.com

Time Complexity: None Space Complexity: None

Because they help manage your data in more a particular way than arrays and lists. It means that when you're debugging a problem, you won't have to wonder if someone randomly inserted an element into the middle of your list, messing up some invariants.

Arrays and lists are random access. They are very flexible and also easily corruptible. If you want to manage your data as FIFO or LIFO it's best to use those, already implemented, collections.

More practically you should:

- Use a queue when you want to get things out in the order that you put them in (FIFO)

- Use a stack when you want to get things out in the reverse order than you put them in (LIFO)

- Use a list when you want to get anything out, regardless of when you put them in (and when you don't want them to automatically be removed).

Source: stackoverflow.com

- Queues offer random access to their contents by shifting the first element off the front of the queue. You have to do this repeatedly to access an arbitrary element somewhere in the queue. Therefore, access is

O(n). - Searching for a given value in the queue requires iterating until you find it. So search is

O(n). - Inserting into a queue, by definition, can only happen at the back of the queue, similar to someone getting in line for a delicious Double-Double burger at In 'n Out. Assuming an efficient queue implementation, queue insertion is

O(1). - Deleting from a queue happens at the front of the queue. Assuming an efficient queue implementation, queue deletion is `

O(1).

Source: github.com

Queue can be classified into following types:

- Simple Queue - is a linear data structure in which removal of elements is done in the same order they were inserted i.e., the element will be removed first which is inserted first.

- Circular Queue - is a linear data structure in which the operations are performed based on FIFO (First In First Out) principle and the last position is connected back to the first position to make a circle. It is also called Ring Buffer. Circular queue avoids the wastage of space in a regular queue implementation using arrays.

- Priority Queue - is a type of queue where each element has a priority value and the deletion of the elements is depended upon the priority value

- In case of max-priority queue, the element will be deleted first which has the largest priority value

- In case of min-priority queue the element will be deleted first which has the minimum priority value.

- De-queue (Double ended queue) - allows insertion and deletion from both the ends i.e. elements can be added or removed from rear as well as front end.

- Input restricted deque - In input restricted double ended queue, the insertion operation is performed at only one end and deletion operation is performed at both the ends.

- Output restricted deque - In output restricted double ended queue, the deletion operation is performed at only one end and insertion operation is performed at both the ends.

Source: www.ques10.com

- A singly linked list

- A doubly linked list is a list that has two references, one to the next node and another to previous node.

- A multiply linked list - each node contains two or more link fields, each field being used to connect the same set of data records in a different order of same set(e.g., by name, by department, by date of birth, etc.).

- A circular linked list - where last node of the list points back to the first node (or the head) of the list.

Source: www.cs.cmu.edu

A dynamic array is an array with a big improvement: automatic resizing.

One limitation of arrays is that they're fixed size, meaning you need to specify the number of elements your array will hold ahead of time. A dynamic array expands as you add more elements. So you don't need to determine the size ahead of time.

Source: www.interviewcake.com

The easiest solution that comes to mind here is iteration:

function fib(n){

let arr = [0, 1];

for (let i = 2; i < n + 1; i++){

arr.push(arr[i - 2] + arr[i -1])

}

return arr[n]

}And output:

fib(4)

=> 3

Notice that two first numbers can not really be effectively generated by a for loop, because our loop will involve adding two numbers together, so instead of creating an empty array we assign our arr variable to [0, 1] that we know for a fact will always be there. After that we create a loop that starts iterating from i = 2 and adds numbers to the array until the length of the array is equal to n + 1. Finally, we return the number at n index of array.

Source: medium.com

Time Complexity: O(n) Space Complexity: O(n)

An algorithm in our iterative solution takes linear time to complete the task. Basically we iterate through the loop n-2 times, so Big O (notation used to describe our worst case scenario) would be simply equal to O(n) in this case. The space complexity is O(n).

function fib(n){

let arr = [0, 1]

for (let i = 2; i < n + 1; i++){

arr.push(arr[i - 2] + arr[i -1])

}

return arr[n]

}double fibbonaci(int n){

double prev=0d, next=1d, result=0d;

for (int i = 0; i < n; i++) {

result=prev+next;

prev=next;

next=result;

}

return result;

}def fib_iterative(n):

a, b = 0, 1

while n > 0:

a, b = b, a + b

n -= 1

return aFew disadvantages of linked lists are :

- They use more memory than arrays because of the storage used by their pointers.

- Difficulties arise in linked lists when it comes to reverse traversing. For instance, singly linked lists are cumbersome to navigate backwards and while doubly linked lists are somewhat easier to read, memory is wasted in allocating space for a back-pointer.

- Nodes in a linked list must be read in order from the beginning as linked lists are inherently sequential access.

- Random access has linear time.

- Nodes are stored incontiguously (no or poor cache locality), greatly increasing the time required to access individual elements within the list, especially with a CPU cache.

- If the link to list's node is accidentally destroyed then the chances of data loss after the destruction point is huge. Data recovery is not possible.

- Search is linear versus logarithmic for sorted arrays and binary search trees.

- Different amount of time is required to access each element.

- Not easy to sort the elements stored in the linear linked list.

Source: www.quora.com

Recursive solution looks pretty simple (see code).

Let’s look at the diagram that will help you understand what’s going on here with the rest of our code. Function fib is called with argument 5:

Basically our fib function will continue to recursively call itself creating more and more branches of the tree until it hits the base case, from which it will start summing up each branch’s return values bottom up, until it finally sums them all up and returns an integer equal to 5.

Source: medium.com

Time Complexity: O(2^n)

In case of recursion the solution take exponential time, that can be explained by the fact that the size of the tree exponentially grows when n increases. So for every additional element in the Fibonacci sequence we get an increase in function calls. Big O in this case is equal to O(2n). Exponential Time complexity denotes an algorithm whose growth doubles with each addition to the input data set.

function fib(n) {

if (n < 2){

return n

}

return fib(n - 1) + fib (n - 2)

}public int fibonacci(int n) {

if (n < 2) return n;

return fibonacci(n - 1) + fibonacci(n - 2);

}def F(n):

if n == 0: return 0

elif n == 1: return 1

else: return F(n-1)+F(n-2)The space complexity of a datastructure indicates how much space it occupies in relation to the amount of elements it holds. For example a space complexity of O(1) would mean that the datastructure alway consumes constant space no matter how many elements you put in there. O(n) would mean that the space consumption grows linearly with the amount of elements in it.

A hashtable typically has a space complexity of O(n).

Source: stackoverflow.com

A Binary Heap is a Binary Tree with following properties:

- It’s a complete tree (all levels are completely filled except possibly the last level and the last level has all keys as left as possible). This property of Binary Heap makes them suitable to be stored in an array.

- A Binary Heap is either Min Heap or Max Heap. In a Min Binary Heap, the key at root must be minimum among all keys present in Binary Heap. The same property must be recursively true for all nodes in Binary Tree. Max Binary Heap is similar to MinHeap.

10 10

/ \ / \

20 100 15 30

/ / \ / \

30 40 50 100 40Source: www.geeksforgeeks.org

Time Complexity: None Space Complexity: None

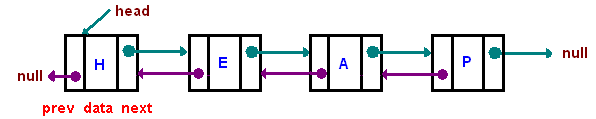

Binary search tree is a data structure that quickly allows to maintain a sorted list of numbers.

- It is called a binary tree because each tree node has maximum of two children.

- It is called a search tree because it can be used to search for the presence of a number in

O(log n)time.

The properties that separates a binary search tree from a regular binary tree are:

- All nodes of left subtree are less than root node

- All nodes of right subtree are more than root node

- Both subtrees of each node are also BSTs i.e. they have the above two properties

Source: www.programiz.com

Time Complexity: None Space Complexity: None

Char arrays:

- Static-sized

- Fast access

- Few built-in methods to manipulate strings

- A char array doesn’t define a data type

Strings:

- Slower access

- Define a data type

- Dynamic allocation

- More built-in functions to support string manipulations

Source: dev.to

Time Complexity: None Space Complexity: None

That is a basic (generic) tree structure that can be used for String or any other object:

Source: stackoverflow.com

Time Complexity: None Space Complexity: None

public class Tree<T> {

private Node<T> root;

public Tree(T rootData) {

root = new Node<T>();

root.data = rootData;

root.children = new ArrayList<Node<T>>();

}

public static class Node<T> {

private T data;

private Node<T> parent;

private List<Node<T>> children;

}

}Generic Tree:

class Tree(object):

"Generic tree node."

def __init__(self, name='root', children=None):

self.name = name

self.children = []

if children is not None:

for child in children:

self.add_child(child)

def __repr__(self):

return self.name

def add_child(self, node):

assert isinstance(node, Tree)

self.children.append(node)

# *

# /|\

# 1 2 +

# / \

# 3 4

t = Tree('*', [Tree('1'),

Tree('2'),

Tree('+', [Tree('3'),

Tree('4')])])Binary tree:

class Tree:

def __init__(self):

self.left = None

self.right = None

self.data = NoneTo convert a singly linked list to a circular linked list, we will set the next pointer of the tail node to the head pointer.

- Create a copy of the head pointer, let's say

temp. - Using a loop, traverse linked list till tail node (last node) using temp pointer.

- Now set the next pointer of the tail node to head node.

temp->next = head

Source: www.techcrashcourse.com

def convertTocircular(head):

# declare a node variable

# start and assign head

# node into start node.

start = head

# check that

while head.next

# not equal to null then head

# points to next node.

while(head.next is not None):

head = head.next

#

if head.next points to null

# then start assign to the

# head.next node.

head.next = start

return startGraph:

- Consists of a set of vertices (or nodes) and a set of edges connecting some or all of them

- Any edge can connect any two vertices that aren't already connected by an identical edge (in the same direction, in the case of a directed graph)

- Doesn't have to be connected (the edges don't have to connect all vertices together): a single graph can consist of a few disconnected sets of vertices

- Could be directed or undirected (which would apply to all edges in the graph)

Tree:

- A type of graph (fit with in the category of Directed Acyclic Graphs (or a DAG))

- Vertices are more commonly called "nodes"

- Edges are directed and represent an "is child of" (or "is parent of") relationship

- Each node (except the root node) has exactly one parent (and zero or more children)

- Has exactly one "root" node (if the tree has at least one node), which is a node without a parent

- Has to be connected

- Is acyclic, meaning it has no cycles: "a cycle is a path [AKA sequence] of edges and vertices wherein a vertex is reachable from itself"

- Trees aren't a recursive data structure

Source: stackoverflow.com

Time Complexity: None Space Complexity: None

Linked lists are very useful when you need :

- to do a lot of insertions and removals, but not too much searching, on a list of arbitrary (unknown at compile-time) length.

- splitting and joining (bidirectionally-linked) lists is very efficient.

- You can also combine linked lists - e.g. tree structures can be implemented as "vertical" linked lists (parent/child relationships) connecting together horizontal linked lists (siblings).

Using an array based list for these purposes has severe limitations:

- Adding a new item means the array must be reallocated (or you must allocate more space than you need to allow for future growth and reduce the number of reallocations)

- Removing items leaves wasted space or requires a reallocation

- inserting items anywhere except the end involves (possibly reallocating and) copying lots of the data up one position

Source: stackoverflow.com

For traversing a (non-empty) binary tree in pre-order fashion, we must do these three things for every node N starting from root node of the tree:

- (N) Process

Nitself. - (L) Recursively traverse its left subtree. When this step is finished we are back at N again.

- (R) Recursively traverse its right subtree. When this step is finished we are back at N again.

Source: github.com

Time Complexity: O(n) Space Complexity: O(n)

// Recursive function to perform pre-order traversal of the tree

public static void preorder(TreeNode root)

{

// return if the current node is empty

if (root == null) {

return;

}

// Display the data part of the root (or current node)

System.out.print(root.data + " ");

// Traverse the left subtree

preorder(root.left);

// Traverse the right subtree

preorder(root.right);

}# Recursive function to perform pre-order traversal of the tree

def preorder(root):

# return if the current node is empty

if root is None:

return

# Display the data part of the root (or current node)

print(root.data, end=' ')

# Traverse the left subtree

preorder(root.left)

# Traverse the right subtree

preorder(root.right)Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Normalization is basically to design a database schema such that duplicate and redundant data is avoided. If the same information is repeated in multiple places in the database, there is the risk that it is updated in one place but not the other, leading to data corruption.

There is a number of normalization levels from 1. normal form through 5. normal form. Each normal form describes how to get rid of some specific problem.

By having a database with normalization errors, you open the risk of getting invalid or corrupt data into the database. Since data "lives forever" it is very hard to get rid of corrupt data when first it has entered the database.

Source: stackoverflow.com

Q2: What is the difference between Data Definition Language (DDL) and Data Manipulation Language (DML)? ⭐⭐

-

Data definition language (DDL) commands are the commands which are used to define the database. CREATE, ALTER, DROP and TRUNCATE are some common DDL commands.

-

Data manipulation language (DML) commands are commands which are used for manipulation or modification of data. INSERT, UPDATE and DELETE are some common DML commands.

Source: en.wikibooks.org

NoSQL is better than RDBMS because of the following reasons/properities of NoSQL:

- It supports semi-structured data and volatile data

- It does not have schema

- Read/Write throughput is very high

- Horizontal scalability can be achieved easily

- Will support Bigdata in volumes of Terra Bytes & Peta Bytes

- Provides good support for Analytic tools on top of Bigdata

- Can be hosted in cheaper hardware machines

- In-memory caching option is available to increase the performance of queries

- Faster development life cycles for developers

Still, RDBMS is better than NoSQL for the following reasons/properties of RDBMS:

- Transactions with ACID properties - Atomicity, Consistency, Isolation & Durability

- Adherence to Strong Schema of data being written/read

- Real time query management ( in case of data size < 10 Tera bytes )

- Execution of complex queries involving join & group by clauses

Source: stackoverflow.com

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

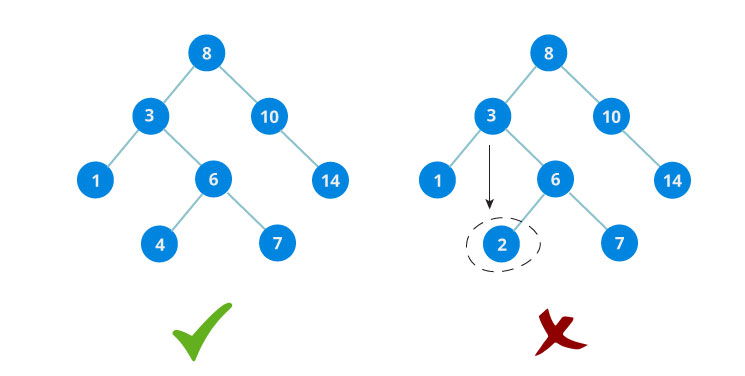

- Covariance measures whether a variation in one variable results in a variation in another variable, and deals with the linear relationship of only

2variables in the dataset. Its value can take range from-∞to+∞. Simply speaking Covariance indicates the direction of the linear relationship between variables.

- Correlation measures how strongly two or more variables are related to each other. Its values are between

-1to1. Correlation measures both the strength and direction of the linear relationship between two variables. Correlation is a function of the covariance.

Source: careerfoundry.com

It's not recommended to perform K-NN on large datasets, given that the computational and memory cost can increase. To understand the reason why we should remember how the K-NN algorithm works:

- Starts by calculating the distances to all vectors in a training set and store them.

- Then, it sorts the calculated distances.

- Then, we store the K nearest vectors.

- And finally, calculate the most frequent class displayed by K nearest vectors.

So implement K-NN on a large dataset it is not only a bad decision to store a large amount of data but it is also computationally costly to keep calculating and sorting all the values. For that reason, K-NN is not recommended and another classification algorithm like Naive Bayes or SVM is preferred in such cases.

Source: towardsdatascience.com

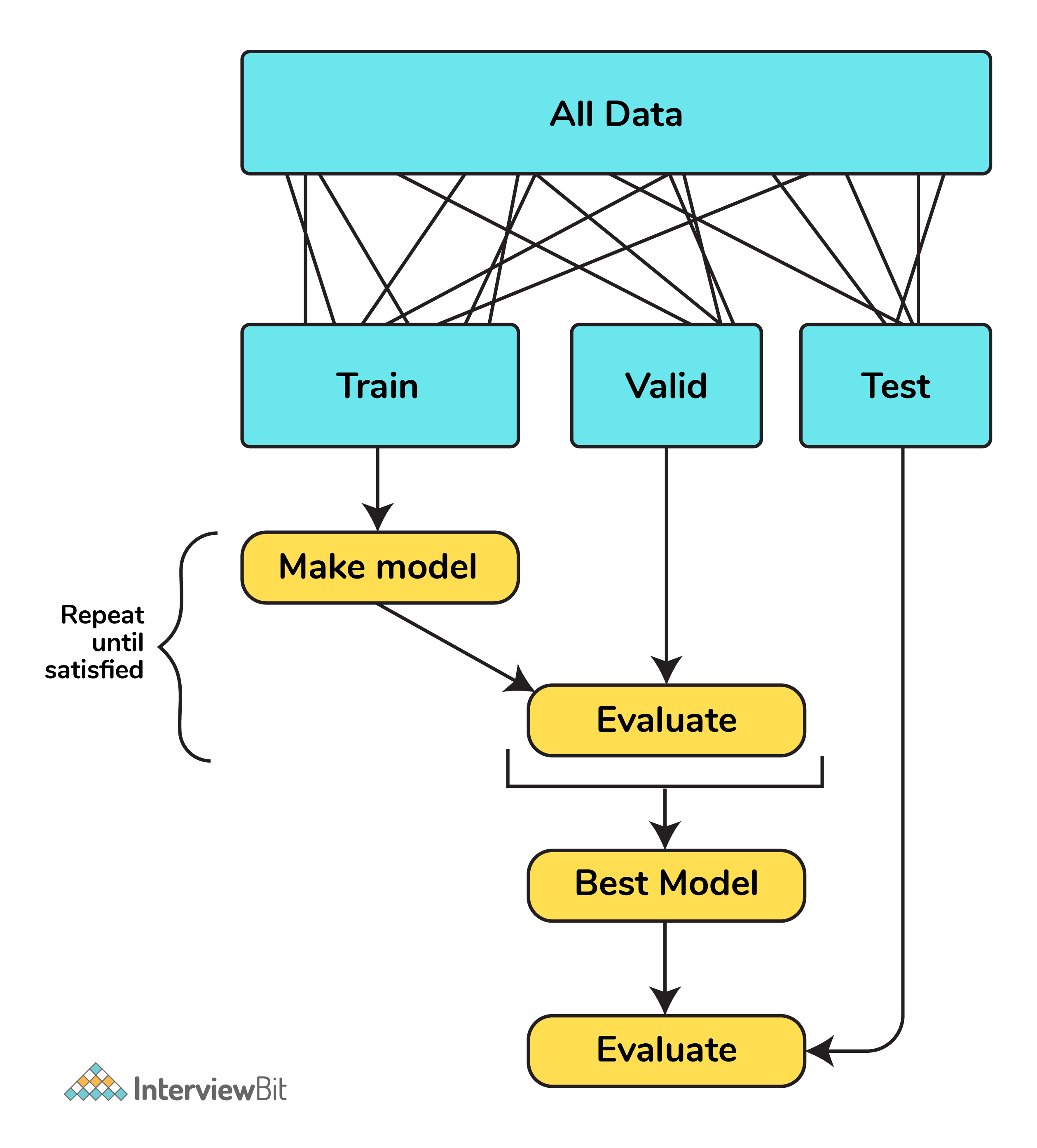

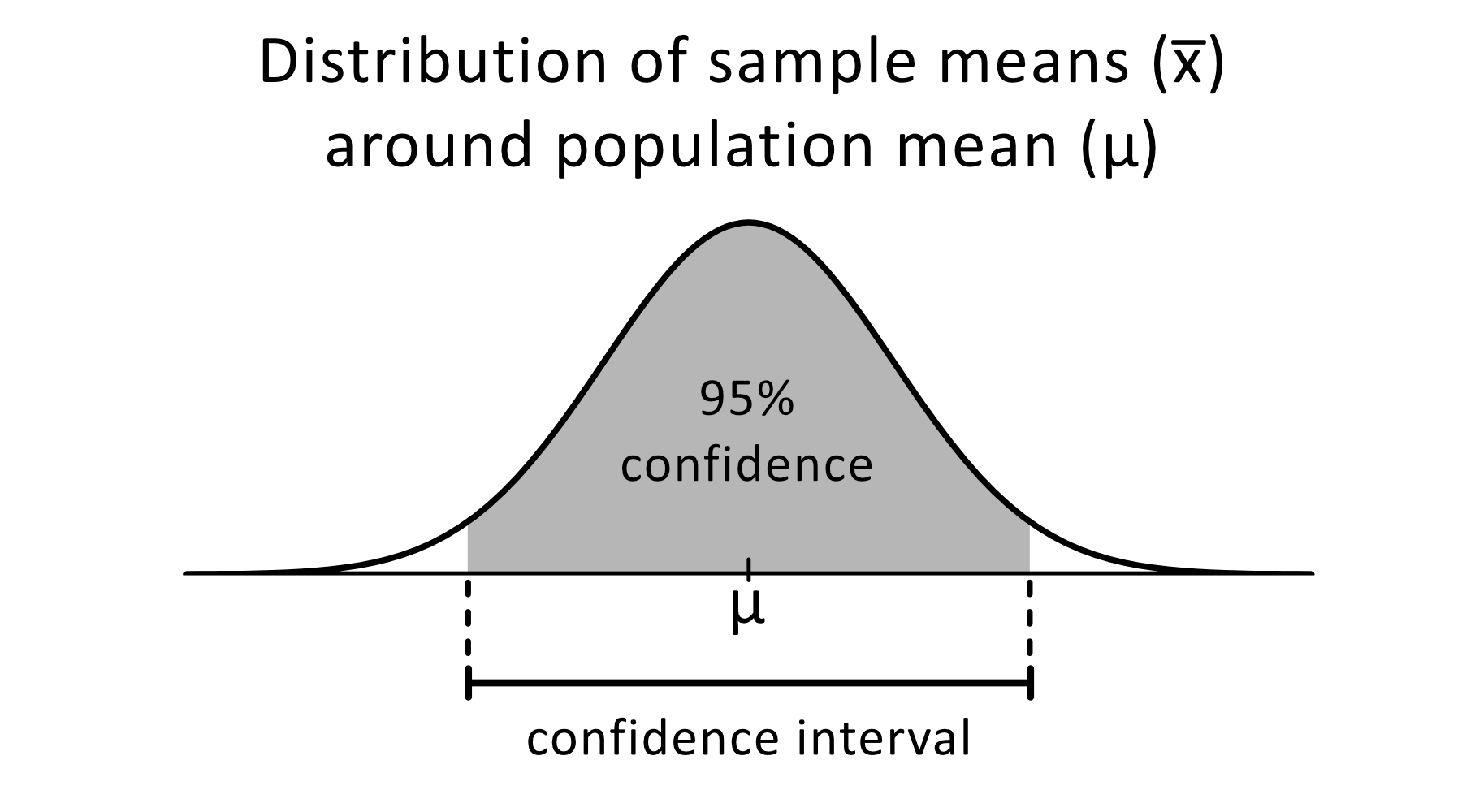

-

Cross-validation is a method of assessing how the results of a statistical analysis will generalize on an independent dataset,

-

It can be used in machine learning tasks to evaluate the predictive capability of the model,

-

It also helps us to avoid overfitting and underfitting,

-

A common way to cross-validate is to divide the dataset into training, validation, and testing where:

- Training dataset is a dataset of known data on which the training is run.

- Validation dataset is the dataset that is unknown against which the model is tested. The validation dataset is used after each epoch of learning to gauge the improvement of the model.

- Testing dataset is also an unknown dataset that is used to test the model. The testing dataset is used to measure the performance of the model after it has finished learning.

Source: en.wikipedia.org

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q20: What's the difference between Random Oversampling and Random Undersampling and when they can be used? ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

[⬆] Decision Trees Interview Questions

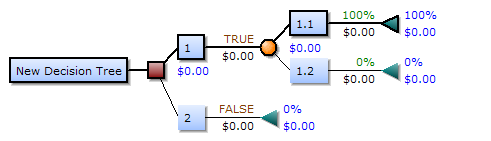

- Decision trees is a tool that uses a tree-like model of decisions and their possible consequences. If an algorithm only contains conditional control statements, decision trees can model that algorithm really well.

- Decision trees are a non-parametric, supervised learning method.

- Decision trees are used for classification and regression tasks.

- The diagram below shows an example of a decision tree (the dataset used is the Titanic dataset to predict whether a passenger survived or not):

Source: towardsdatascience.com

A decision tree is a flowchart-like structure in which:

- Each internal node represents the test on an attribute (e.g. outcome of a coin flip).

- Each branch represents the outcome of the test.

- Each leaf node represents a class label.

- The paths from the root to leaf represent the classification rules.

Source: en.wikipedia.org

A decision tree consists of three types of nodes:

- Decision nodes: Represented by squares. It is a node where a flow branches into several optional branches.

- Chance nodes: Represented by circles. It represents the probability of certain results.

- End nodes: Represented by triangles. It shows the final outcome of the decision path.

Source: en.wikipedia.org

- It is simple to understand and interpret. It can be visualized easily.

- It does not require as much data preprocessing as other methods.

- It can handle both numerical and categorical data.

- It can handle multiple output problems.

Source: scikit-learn.org

- If the Gini Index of the data is

0then it means that all the elements belong to a specific class. When this happens it is said to be pure. - When all of the data belongs to a single class (pure) then the leaf node is reached in the tree.

- The leaf node represents the class label in the tree (which means that it gives the final output).

Source: medium.com

- Random forest is an ensemble learning method that works by constructing a multitude of decision trees. A random forest can be constructed for both classification and regression tasks.

- Random forest outperforms decision trees, and it also does not have the habit of overfitting the data as decision trees do.

- A decision tree trained on a specific dataset will become very deep and cause overfitting. To create a random forest, decision trees can be trained on different subsets of the training dataset, and then the different decision trees can be averaged with the goal of decreasing the variance.

Source: en.wikipedia.org

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

[⬆] Deep Learning Interview Questions

- Machine Learning depends on humans to learn. Humans determine the hierarchy of features to determine the difference between the data input. It usually requires more structured data to learn.

- Deep Learning automates much of the feature extraction piece of the process. It eliminates the manual human intervention required.

- Machine Learning is less dependent on the amount of data as compared to deep learning.

- Deep Learning requires a lot of data to give high accuracy. It would take thousands or millions of data points which are trained for days or weeks to give an acceptable accurate model.

Source: ibm.com

- Deep Learning gives a better performance compared to machine learning if the dataset is large enough.

- Deep Learning does not need the person designing the model to have a lot of domain understanding for feature introspection. Deep learning outshines other methods if there is no feature engineering done.

- Deep Learning really shines when it comes to complex problems such as image classification, natural language processing, and speech recognition.

Source: towardsdatascience.com

- One of the best benefits of Deep Learning is its ability to perform automatic feature extraction from raw data.

- When the number of data fed into the learning algorithm increases, there will be more edge cases taken into consideration and hence the algorithm will learn to make the right decisions in those edge cases.

Source: machinelearningmastery.com

- When researchers started to create large artificial neural networks, they started to use the word deep to refer to them.

- As the term deep learning started to be used, it is generally understood that it stands for artificial neural networks which are deep as opposed to shallow artificial neural networks.

- Deep Artificial Neural Networks and Deep Learning are generally the same thing and mostly used interchangeably.

Source: machinelearningmastery.com

- Early stopping in deep learning is a type of regularization where the training is stopped after a few iterations.

- When training a large network, there will be a point during training when the model will stop generalizing and start learning the statistical noise in the training dataset. This makes the networks unable to predict new data.

- Defining early stopping in a neural network will prevent the network from overfitting.

- One way of defining early stopping is to start training the model and if the performance of the model starts to degrade, then stopping the training process.

Source: Neural Networks and Deep Learning: A Textbook by Charu C. Aggarwal

- Ensemble methods are used to increase the generalization power of a model. These methods are applicable to both deep learning as well as machine learning algorithms.

- Some ensemble methods introduced in neural networks are Dropout and Dropconnect. The improvement in the model depends on the type of data and the nature of neural architecture.

Source: Neural Networks and Deep Learning: A Textbook by Charu C. Aggarwal

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q11: What does the hidden layer in a Neural Network compute? ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q13: What is the difference between Linear Activation Function and Non-linear Activation Function? ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q18: How can you optimise the architecture of a Deep Learning classifier using Genetic Algorithms? ⭐⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

[⬆] Dimensionality Reduction Interview Questions

- As the amount of data required to train a model increases, it becomes harder and harder for machine learning algorithms to handle. As more features are added to the machine learning process, the more difficult the training becomes.

- In very high-dimensional space, supervised algorithms learn to separate points and build function approximations to make good predictions.

When the number of features increases, this search becomes expensive, both from a time and compute perspective. It might become impossible to find a good solution fast enough. This is the curse of dimensionality.

- Using dimensionality reduction of unsupervised learning, the most salient features can be discovered in the original feature set. Then the dimension of this feature set can be reduced to a more manageable number while losing very little information in the process. This will help supervised learning find the optimum function to approximate the dataset.

Source: www.amazon.com

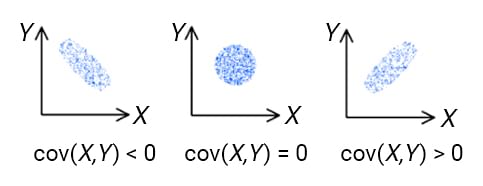

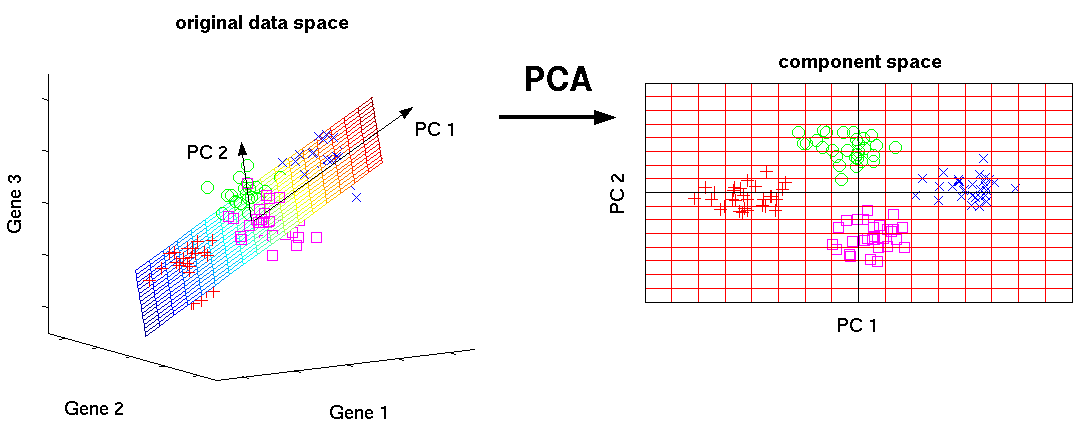

- The Principal Component Analysis (PCA) is the process of computing principal components and using them to perform a change of basis on the data.

- The Principal Component of a collection of points in a real coordinate space are a sequence of

punit vectors, where thei-th vector is the direction of a line that best fits the data while being orthogonal to thei - 1vectors. The best-fitting line is defined as the line that minimizes the average squared distance from the points to the line. - PCA is commonly used in dimensionality reduction by projecting each data point onto only the first few principal components to obtain lower-dimensional data while preserving as much of the data's variation as possible.

Source: en.wikipedia.org

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q16: What are the rules for generating a random matrix when Gaussian Random Projection is used? ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

[⬆] Ensemble Learning Interview Questions

Ensemble learning is a machine learning paradigm where multiple models (often called “weak learners”) are trained to solve the same problem and combined to get better results. The main hypothesis is that when weak models are correctly combined we can obtain more accurate and/or robust models.

Source: towardsdatascience.com

- Random Forests is a type of ensemble learning method for classification, regression, and other tasks.

- Random Forests works by constructing many decision trees at a training time. The way that this works is by averaging several decision trees at different parts of the same training set.

Source: en.wikipedia.org

In ensemble learning theory, we call weak learners (or base models) models that can be used as building blocks for designing more complex models by combining several of them. Most of the time, these basics models perform not so well by themselves either because they have a high bias (low degree of freedom models, for example) or because they have too much variance to be robust (high degree of freedom models, for example).

Source: towardsdatascience.com

- Ensemble methods is a machine learning technique that combines several base models in order to produce one optimal predictive model.

- Random Forest is a type of ensemble method.

- The number of component classifier in an ensemble has a great impact on the accuracy of the prediction, although there is a law of diminishing results in ensemble construction.

Source: towardsdatascience.com

- Random forest is an ensemble learning method that works by constructing a multitude of decision trees. A random forest can be constructed for both classification and regression tasks.

- Random forest outperforms decision trees, and it also does not have the habit of overfitting the data as decision trees do.

- A decision tree trained on a specific dataset will become very deep and cause overfitting. To create a random forest, decision trees can be trained on different subsets of the training dataset, and then the different decision trees can be averaged with the goal of decreasing the variance.

Source: en.wikipedia.org

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q9: What is the difference between a Weak Learner vs a Strong Learner and why they could be usefu? ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q17: What are the trade-offs between the different types of Classification Algorithms? How would do you choose the best one? ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

[⬆] Genetic Algorithms Interview Questions

- Each individual is represented by a chromosome represented by a collection of genes.

- A chromosome is represented by a string of bits, where each bit represents a single gene.

- A population is shown as a group of binary strings where each string represents an individual.

A Genetic Algorithm (GA) is a heuristic search algorithm used to solve search and optimization problems. This algorithm is a subset of evolutionary algorithms, which are used in the computation. Genetic algorithms employ the concept of genetics and natural selection to provide solutions to problems.

These algorithms have better intelligence than random search algorithms because they use historical data to take the search to the best performing region within the solution space.

GAs are also based on the behavior of chromosomes and their genetic structure. Every chromosome plays the role of providing a possible solution. The fitness function helps in providing the characteristics of all individuals within the population. The greater the function, the better the solution.

Source: www.section.io

There are some of the basic terminologies related to genetic algorithms:

- Population: This is a subset of all the probable solutions that can solve the given problem.

- Chromosomes: A chromosome is one of the solutions in the population.

- Gene: This is an element in a chromosome.

- Allele: This is the value given to a gene in a specific chromosome.

- Fitness function: This is a function that uses a specific input to produce an improved output. The solution is used as the input while the output is in the form of solution suitability.

- Genetic operators: In genetic algorithms, the best individuals mate to reproduce an offspring that is better than the parents. Genetic operators are used for changing the genetic composition of this next generation.

Source: www.section.io

- A fitness function is a function that maps the chromosome representation into a scalar value.

- At each iteration of the algorithm, each individual is evaluated using a fitness function.

- The individuals with a better fitness score are more likely to be chosen for reproduction and be represented in the next generation.

- The fitness function seeks to optimize the problem that is being solved.

Source: ai.stackexchange.com

- Mutation introduces new patterns in the chromosomes, and it helps to find solutions in uncharted areas.

- Mutations are implemented as random changes in the chromosomes. It may be programmed, for example, as a bit flip where a single bit of the chromosome changes.

- The purpose of mutation is to periodically refresh the population.

Source: www.amazon.com

- It has the capability to globally optimize the problem instead of just finding the local minima or maxima.

- It can handle problems with complex mathematical representation.

- It can handle problems that lack mathematical representation.

- It is resilient to noise.

- It can support parallel and distributed processing.

- It is suitable for continuous learning.

- It provides answers that improve over time.

- A genetic algorithm does not need derivative information.

Source: www.amazon.com

- While the average fitness of the genetic algorithm increases as the generations go by, the best individuals from the current generations will be lost due to selection, crossover, and mutation operators. This problem is solved by the process known as Elitism.

- Elitism guarantees that the best individuals always make it to the next generation.

npredefined number of individuals are duplicated into the next generation. These individuals selected for duplication are also eligible to be parents of the new individuals.

Source: en.wikipedia.org

The crossover and mutation operations are applied separately for each dimension of the array that forms the real-coded chromosome.

For example, if [1.23, 9.81, 6.34] and [-30.23, 12.67, -42.69] are selected for the crossover operation, the crossover will be done for between; 1.23 and -30.23 (first dimension), 9.81 and 12.67 (second dimension), and 6.34 and -42.69 (third dimension).

Source: www.amazon.com

Read answer on 👉 MLStack.Cafe

Q10: What are the differences between Genetic Algorithms and Traditional Search and Optimization Algorithms? ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q24: Can you explain Elitism in a context of Genetic Algorithms and it's impact on GA performance? ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q26: What are the advantages of using Floating-Point number to represent chromosomes instead of Binary numbers? ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q38: What are Constraint Satisfaction Problems and why is Genetic Algorithms suited to solve them? ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q42: How can you optimise the architecture of a Deep Learning classifier using Genetic Algorithms? ⭐⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

[⬆] Gradient Descent Interview Questions

- A Gradient Descent is a type of optimization algorithm used to find the local minimum of a differentiable function.

- The main idea behind the gradient descent is to take steps in the negative direction of the gradient. This will lead to the steepest descent and eventually it will lead to the minimum point.

- It is shown as an equation by:

Where:

- a is the point.

-

$$\gamma$$ is the step size. - F(x) is the multi-variable function.

Source: en.wikipedia.org

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q4: Compare the Mini-batch Gradient Descent, Stochastic Gradient Descent, and Batch Gradient Descent ⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Read answer on 👉 MLStack.Cafe

Q12: How is the Adam Optimization Algorithm different when compared to Stochastic Gradient Descent? ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

Q13: Name some advantages of using Gradient descent vs Ordinary Least Squares for Linear Regression ⭐⭐⭐⭐

Read answer on 👉 MLStack.Cafe

[⬆] K-Means Clustering Interview Questions

-

K-nearest neighbors or KNN is a supervised classification algorithm. This means that we need labeled data to classify an unlabeled data point. It attempts to classify a data point based on its proximity to other

K-data points in the feature space. -