This repository contains the files that are part of the thesis:

Automating the data acquisition of Businesses and their actions regarding environmental sustainability: The Energy Industry in Greece

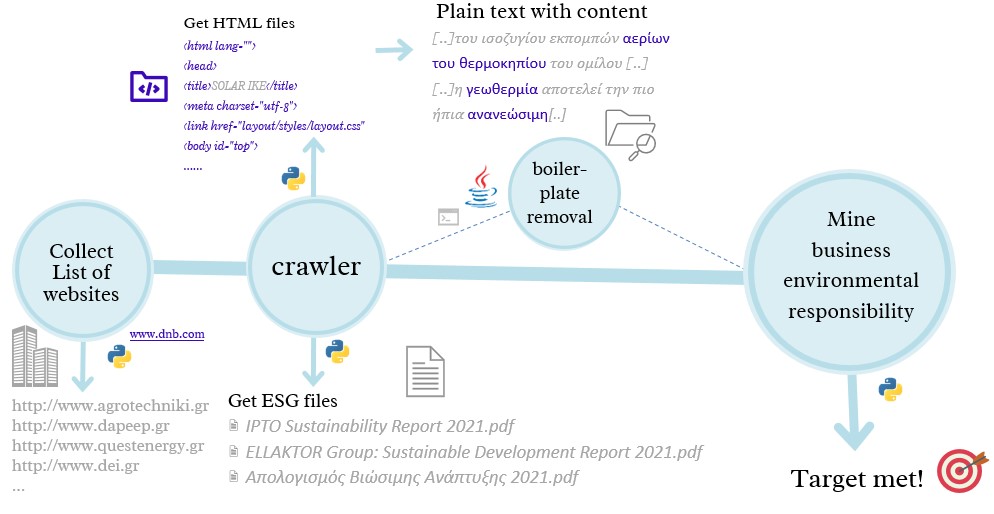

The image above shows the pipeline of the procedure described in the thesis. The code for the individual steps can be found in this repository.

The steps are the following:

- Extract a list of the domains of all Greek businesses that belong to the energy sector (result: data_from_dnb, source: www.dnb.com)

- Use a crawler that navigates to the URLs in our file, follows the links they lead to, and extracts their content (HTML files). It also extracts document files (.pdf) referring to ESG factors, using a custom dictionary, the ESG Dictionary (related script → crawler_pdfs.py)

- Use a boilerplate removal algorithm that removes the HTML syntax and keeps only the text (not publicly available in the repository)

- Evaluate the web-scraping process:

  - Calculate the percentage of successfully downloaded webpages

  - Calculate the number of tokens found

- Extract useful information:

  - Find innovative products of businesses (using trademarks)

  - Filter the content of interest in order to distill the actions of Greek businesses regarding environmental responsibility

  Export the results to a CSV file (related script → meta_cleaning_2.py)

- A version of the crawler that reads local HTML files (instead of URLs, as described in step 2) in order to extract ESG PDF files is also available (related script → Crawler_pdfs_from_html_files.py)
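The two evaluation metrics from the steps above can be illustrated with a minimal sketch. The function names and the input shapes (a mapping of URL to download status, and a list of cleaned texts) are assumptions made for illustration, not the thesis implementation, and tokens are approximated here by whitespace splitting.

```python
def success_percentage(results):
    """Percentage of pages that were downloaded successfully.

    `results` is a hypothetical mapping of URL -> True/False.
    """
    if not results:
        return 0.0
    return 100.0 * sum(results.values()) / len(results)


def count_tokens(texts):
    """Total number of whitespace-separated tokens over all cleaned texts."""
    return sum(len(text.split()) for text in texts)


if __name__ == "__main__":
    status = {"a.gr": True, "b.gr": False, "c.gr": True, "d.gr": True}
    print(success_percentage(status))
    print(count_tokens(["solar energy park", "wind"]))
```

A real evaluation would probably use a proper tokenizer; whitespace splitting only keeps the sketch dependency-free.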


Crawler_pdfs_from_html_files.py

To run the script, the user must supply two parameters:

- The local input path, which contains one folder per website holding all of that website's HTML files

  (Input parameter: --inpath)

  (e.g. --inpath C:\Users\userXXX\inp)

  (Sample input data can be found here)

- The local output path where the user wants the results (ESG/Sustainability PDF files) to be stored per website

  (Input parameter: --out_dir)

  (e.g. --out_dir C:\Users\userXXX\out)

  (Example of input & output data can be found here)

Thus, the terminal command will be similar to:

C:\Users\userXXX\...\python.exe C:\Users\userXXX\...\Crawler_pdfs_from_html_files.py --inpath C:\Users\userXXX\D..\inp --out_dir C:\Users\userXXX\..\out

Optional feature: After the first run, a (default) dictionary of words of interest (ESG Dictionary) is generated in the input folder so that the user can maintain it.
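As an illustration of the behaviour described above, the sketch below creates a default dictionary in the input folder on the first run and then uses its terms to keep only the PDF links that look ESG-related. The file name `esg_dictionary.txt`, the default terms, and all function names are hypothetical; the actual script may store and match its dictionary differently.

```python
from html.parser import HTMLParser
from pathlib import Path

# Hypothetical defaults and file name; the real ESG Dictionary is not reproduced here.
DEFAULT_TERMS = ["esg", "sustainability", "environment"]
DICT_NAME = "esg_dictionary.txt"


def load_or_create_dictionary(inpath):
    """Return the terms stored in the input folder, writing defaults on first run."""
    path = Path(inpath) / DICT_NAME
    if not path.exists():
        path.write_text("\n".join(DEFAULT_TERMS) + "\n", encoding="utf-8")
    lines = path.read_text(encoding="utf-8").splitlines()
    return [line.strip().lower() for line in lines if line.strip()]


class PdfLinkParser(HTMLParser):
    """Collect the href of every <a> tag that points to a .pdf file."""

    def __init__(self):
        super().__init__()
        self.pdf_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        # Ignore any query string when checking the extension.
        if href.lower().split("?")[0].endswith(".pdf"):
            self.pdf_links.append(href)


def esg_pdf_links(html, terms):
    """Return the PDF links whose URL contains at least one dictionary term."""
    parser = PdfLinkParser()
    parser.feed(html)
    return [link for link in parser.pdf_links
            if any(term in link.lower() for term in terms)]
```

Because the dictionary lives in a plain text file, a user can edit it between runs without touching the code, which is the maintenance benefit the optional feature describes.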


Crawler_pdfs.py

Similarly, to run the script, the user must supply two parameters:

- The local input path of the JSON file that contains the websites to crawl

  (Input parameter: --inpath)

  (e.g. --inpath C:\Users\userXXX\inp\data_from_dnb.json)

- The local output path where the user wants the results (ESG/Sustainability PDF files) to be stored per website

  (Input parameter: --out_dir)

  (e.g. --out_dir C:\Users\userXXX\out)

Thus, the terminal command will be similar to:

C:\Users\userXXX\...\python.exe C:\Users\userXXX\...\Crawler_pdfs.py --inpath C:\Users\userXXX\...\inp\data_from_dnb.json --out_dir C:\Users\userXXX\...\out
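All three scripts are documented with the same two parameters, `--inpath` and `--out_dir`. A minimal sketch of how such a command line could be parsed with Python's standard `argparse` module is shown below; whether the scripts actually use `argparse`, and the help texts, are assumptions.

```python
import argparse


def build_parser():
    """Build a parser for the two documented parameters."""
    parser = argparse.ArgumentParser(description="ESG PDF crawler (sketch)")
    # Help texts are illustrative wording, not taken from the scripts.
    parser.add_argument("--inpath", required=True,
                        help="local input path (folder or JSON file)")
    parser.add_argument("--out_dir", required=True,
                        help="local output path for the results")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    print(args.inpath, args.out_dir)
```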


meta_cleaning_2.py

Similarly, to run the script, the user must supply two parameters:

- The local input path, which contains one folder per website holding HTML files with the extracted plain text (after boilerplate removal)

  (Input parameter: --inpath)

  (e.g. --inpath C:\Users\userXXX\inp)

  (Sample data to use as input: HTML files with plain text in the file system)

- The local output path where the user wants the results (CSV file) to be stored

  (Input parameter: --out_dir)

  (e.g. --out_dir C:\Users\userXXX\out)

Thus, the terminal command will be similar to:

C:\Users\userXXX\...\python.exe C:\Users\userXXX\...\meta_cleaning_2.py --inpath C:\Users\userXXX\D..\inp --out_dir C:\Users\userXXX\..\out
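To make the filter-and-export step concrete, the following sketch walks one folder per website, keeps the text lines that contain a keyword, and writes them to a CSV file. The keyword list, the column names, and the output file name `results.csv` are hypothetical, and the filtering in meta_cleaning_2.py may be considerably more elaborate.

```python
import csv
from pathlib import Path

# Hypothetical keyword stems; the thesis's actual filtering terms are not reproduced here.
KEYWORDS = ("sustainab", "renewable", "emission")


def collect_rows(inpath, keywords=KEYWORDS):
    """Yield (website, file name, line) for every text line containing a keyword."""
    for page in sorted(Path(inpath).glob("*/*.html")):
        text = page.read_text(encoding="utf-8", errors="ignore")
        for line in text.splitlines():
            if any(keyword in line.lower() for keyword in keywords):
                yield page.parent.name, page.name, line.strip()


def export_csv(inpath, out_dir, filename="results.csv", keywords=KEYWORDS):
    """Write the filtered lines to <out_dir>/<filename> and return that path."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    target = out_dir / filename
    with target.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["website", "file", "text"])
        writer.writerows(collect_rows(inpath, keywords))
    return target
```

Matching on word stems such as `sustainab` is a simple way to catch both "sustainable" and "sustainability" without a stemmer.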

To install the required Python packages, run the command:

pip install -r requirements.txt