Vectorwise Interpretable Attentions for Multimodal Tabular Data

This repository is the official implementation of the model that won first place in the Dacon competition. You can see the final result in this post. If you want to reproduce the competition score, please check out this documentation, which the competition hosts used for verification.

SiD is a vectorwise interpretable attention model for multimodal tabular data. It is designed to handle data as vectors, so that multimodal data (e.g. text, image and audio) can be encoded into vectors and used together with the tabular data.

The requirements of this project are as follows:

- numpy
- omegaconf
- pandas
- pytorch_lightning
- scikit_learn
- torch==1.10.1
- transformers
- wandb

Alternatively, you can install all the libraries at once:

$ pip install -r requirements.txt

| Model Architecture | Residual Block |
|---|---|

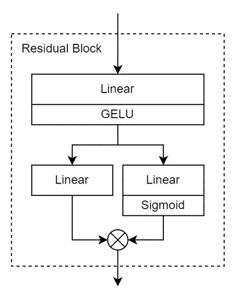

As mentioned above, SiD is designed to extend TabNet with a vectorwise approach. Because many kinds of multimodal data (e.g. text, image and audio) are encoded into vectors, it is important to merge the tabular data with these vectors. However, the attention mechanism of TabNet (the attentive transformer) does not consider vectorized features. Therefore, we propose a vectorwise interpretable attention model.

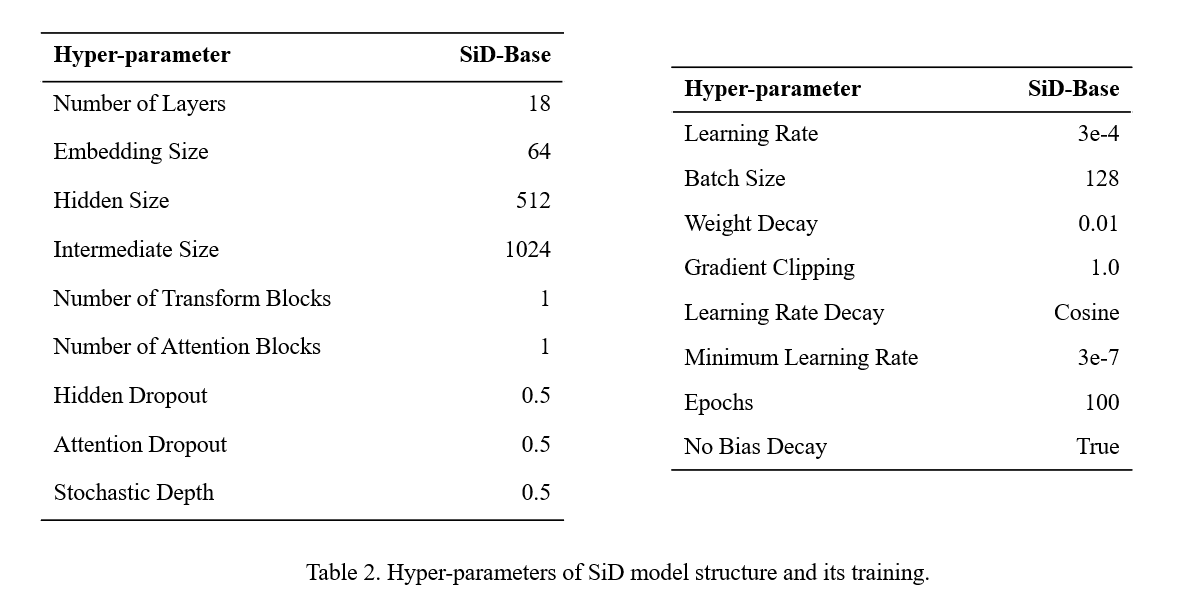
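To make the vectorwise idea concrete, here is a minimal NumPy sketch. It is not the code in this repository. It assumes that every feature (a tabular column or an encoded text, image or audio input) is already a vector of the same size, and that the attention assigns one scalar weight per feature vector rather than per scalar value. The function name `vectorwise_attention`, the scoring vector `score_weights`, and the use of softmax (TabNet itself uses sparsemax) are all assumptions made for illustration.

```python
import numpy as np


def vectorwise_attention(features, score_weights):
    """Weight each feature vector by a single scalar importance.

    features:      (batch, num_features, dim) array, one vector per feature.
    score_weights: (dim,) hypothetical scoring vector (an assumption of this sketch).
    Returns (masked_features, mask), where mask has shape (batch, num_features).
    """
    # One scalar score per feature vector, not per vector element.
    scores = features @ score_weights  # (batch, num_features)
    # Numerically stable softmax over the feature axis.
    # Softmax is a stand-in here; TabNet's attentive transformer uses sparsemax.
    scores = scores - scores.max(axis=1, keepdims=True)
    exp_scores = np.exp(scores)
    mask = exp_scores / exp_scores.sum(axis=1, keepdims=True)
    # Broadcast each scalar weight across the dimensions of its vector.
    masked = features * mask[:, :, None]
    return masked, mask


rng = np.random.default_rng(0)
# Hypothetical batch: 2 rows, 3 tabular columns embedded to 4 dims, plus 1 text embedding.
tabular = rng.normal(size=(2, 3, 4))
text = rng.normal(size=(2, 1, 4))
features = np.concatenate([tabular, text], axis=1)  # (2, 4, 4)
masked, mask = vectorwise_attention(features, rng.normal(size=4))
```

In this sketch, each row of `mask` sums to 1 and can be read as a per-feature importance, which is the sense in which a vectorwise mask stays interpretable even when some features are multimodal embeddings.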

| Hyperparameter Settings |  |
|---|---|

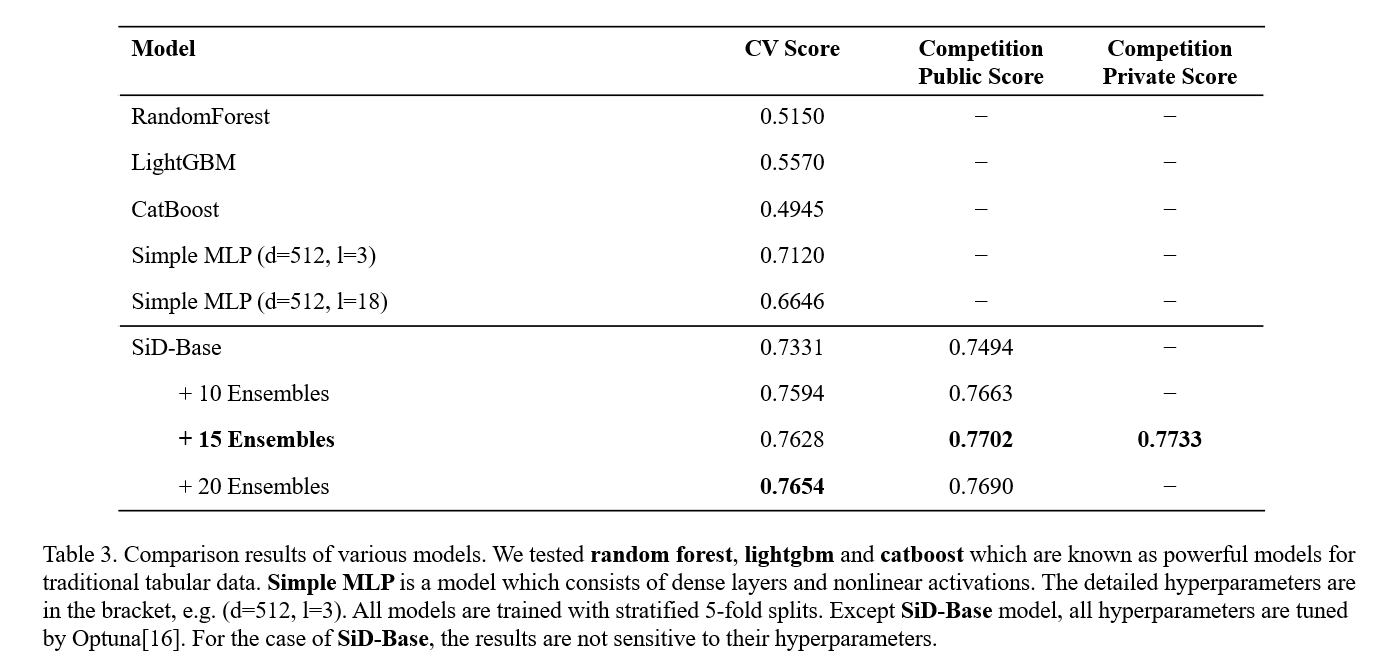

| Experimental Results |  |
|---|---|

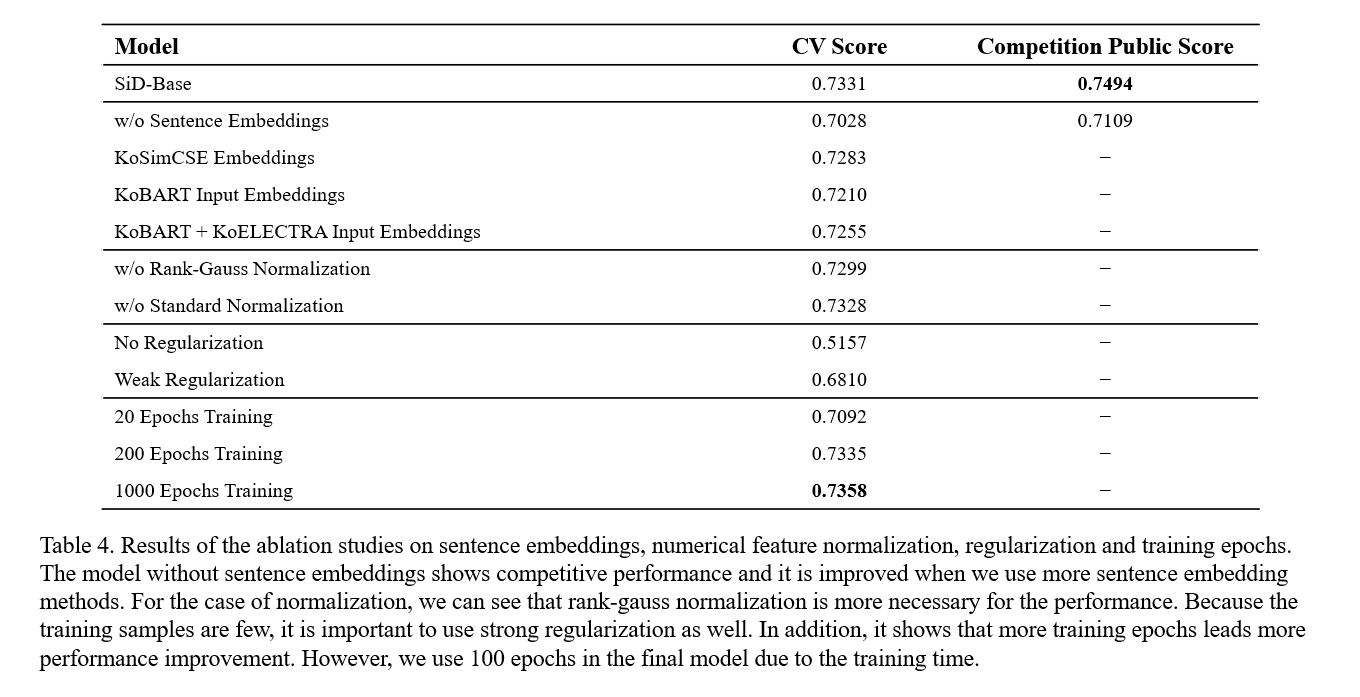

| Ablation Studies |  |
|---|---|

| 2017 |  |
|---|---|

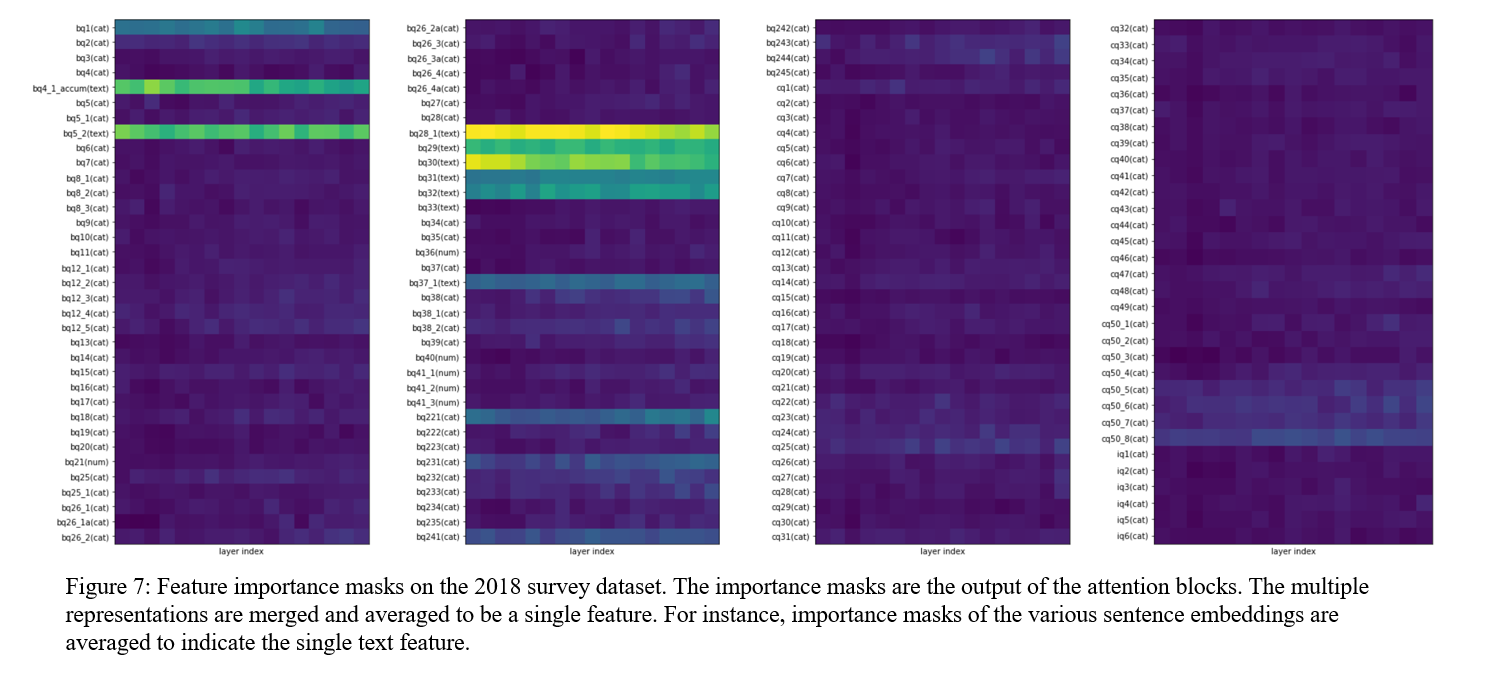

| 2018 |  |
|---|---|

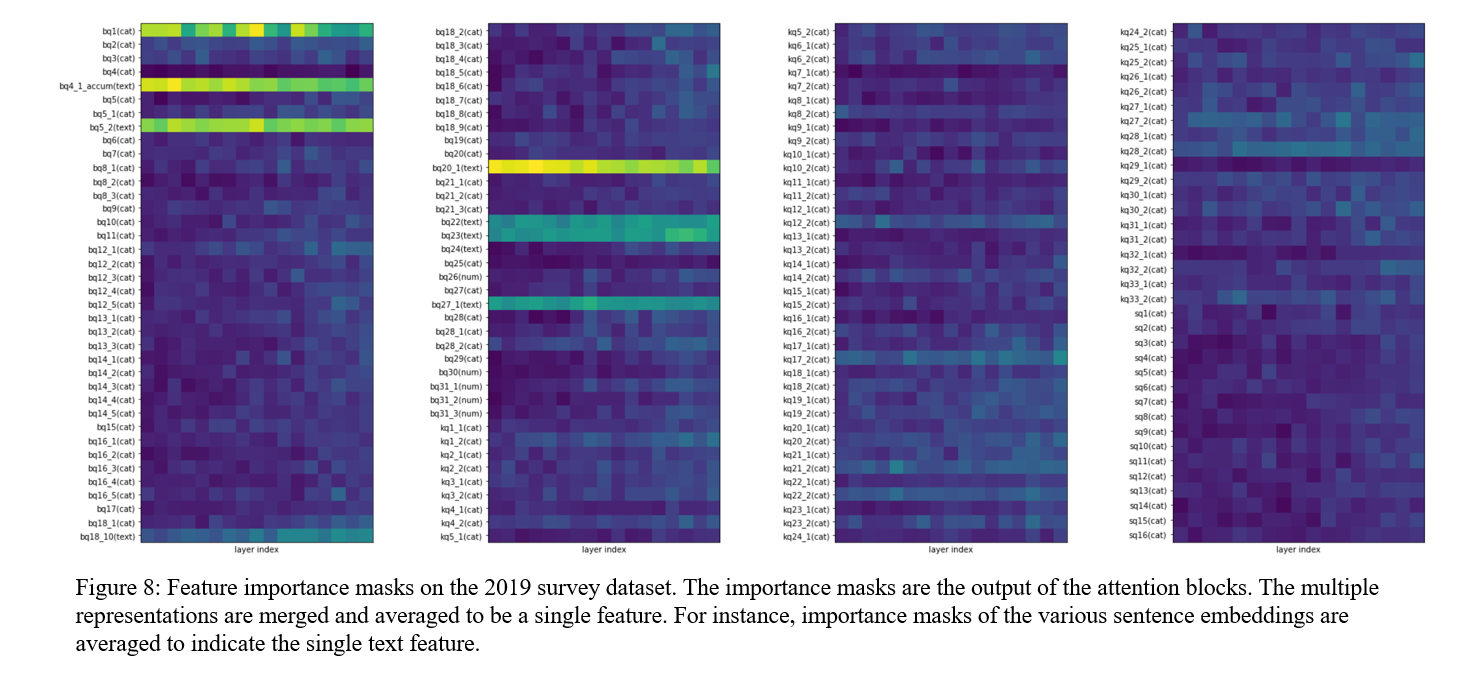

| 2019 |  |
|---|---|

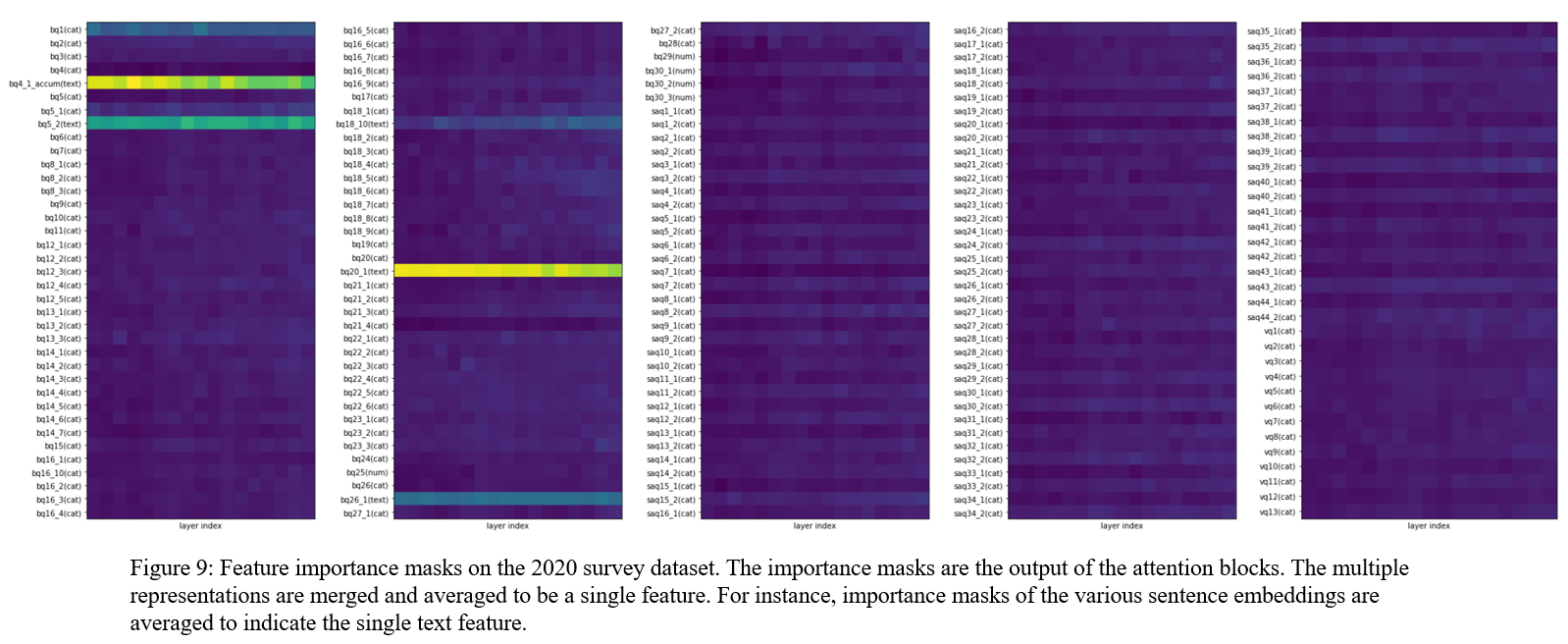

| 2020 |  |
|---|---|

| Importance Mask |  |
|---|---|

| Question Dialogs |  |
|---|---|

This repository is released under the Apache License 2.0. The license can be found in the LICENSE file.