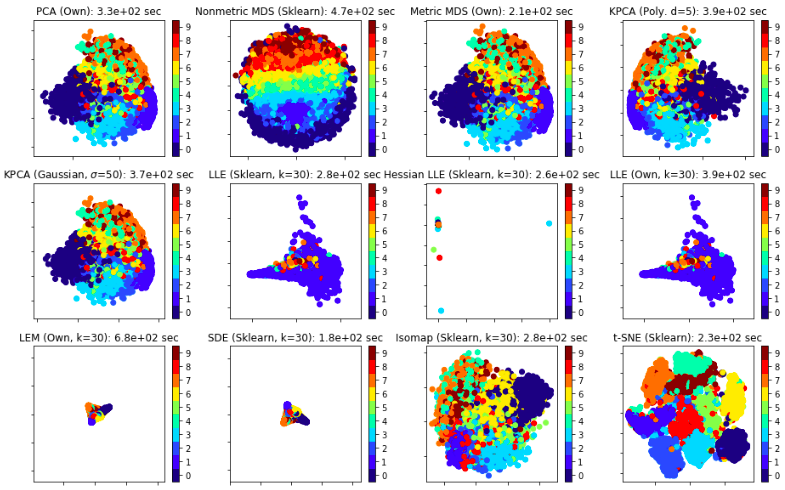

This repository contains our project for the ENSAE course "Advanced Statistics in ML", taught by Stéphan Clémençon (Spring 2019). The project covers nonlinear dimensionality reduction (NLDR) techniques. We focus on Principal Component Analysis (PCA), Kernel PCA, Laplacian Eigenmaps, and Locally Linear Embedding, and give a brief overview of some other successful NLDR methods.

First, we provide a theoretical overview of the main techniques along with some simple numerical experiments on synthetic manifolds. Then, we apply these techniques to the MNIST data set following the

All experiments are in the notebook file (notebook.ipynb). The Data folder contains the MNIST data set along with the grid search results. For a more convenient view of the notebook, see https://nbviewer.jupyter.org/github/afiliot/nonlinear-dimensionality-reduction/blob/master/notebook.ipynb#Neural%20Network.
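
To give a rough idea of what an experiment on a synthetic manifold can look like, here is a minimal sketch that embeds a Swiss roll in two dimensions with the four methods listed above. It assumes scikit-learn is installed. The choice of data set, the hyperparameters (`gamma`, `n_neighbors`) and the use of scikit-learn estimators are illustrative assumptions, not necessarily what the notebook does.

```python
from sklearn.datasets import make_swiss_roll
from sklearn.decomposition import PCA, KernelPCA
from sklearn.manifold import LocallyLinearEmbedding, SpectralEmbedding

# Synthetic 3-D manifold; `color` encodes the position along the roll
# and is handy for colouring scatter plots of the embeddings.
X, color = make_swiss_roll(n_samples=500, noise=0.05, random_state=0)

# Hyperparameters below are illustrative guesses, not tuned values.
methods = {
    "PCA": PCA(n_components=2),
    "Kernel PCA": KernelPCA(n_components=2, kernel="rbf", gamma=0.01),
    # SpectralEmbedding is scikit-learn's implementation of Laplacian Eigenmaps.
    "Laplacian Eigenmaps": SpectralEmbedding(
        n_components=2, n_neighbors=10, random_state=0
    ),
    "LLE": LocallyLinearEmbedding(
        n_components=2, n_neighbors=10, random_state=0
    ),
}

# Fit each method and collect its 2-D embedding.
embeddings = {name: model.fit_transform(X) for name, model in methods.items()}

for name, Y in embeddings.items():
    print(f"{name}: embedding of shape {Y.shape}")
```

Each entry of `embeddings` is an array with one 2-D point per sample, so it can be passed directly to a scatter plot coloured by `color` to compare how well each method unrolls the manifold.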