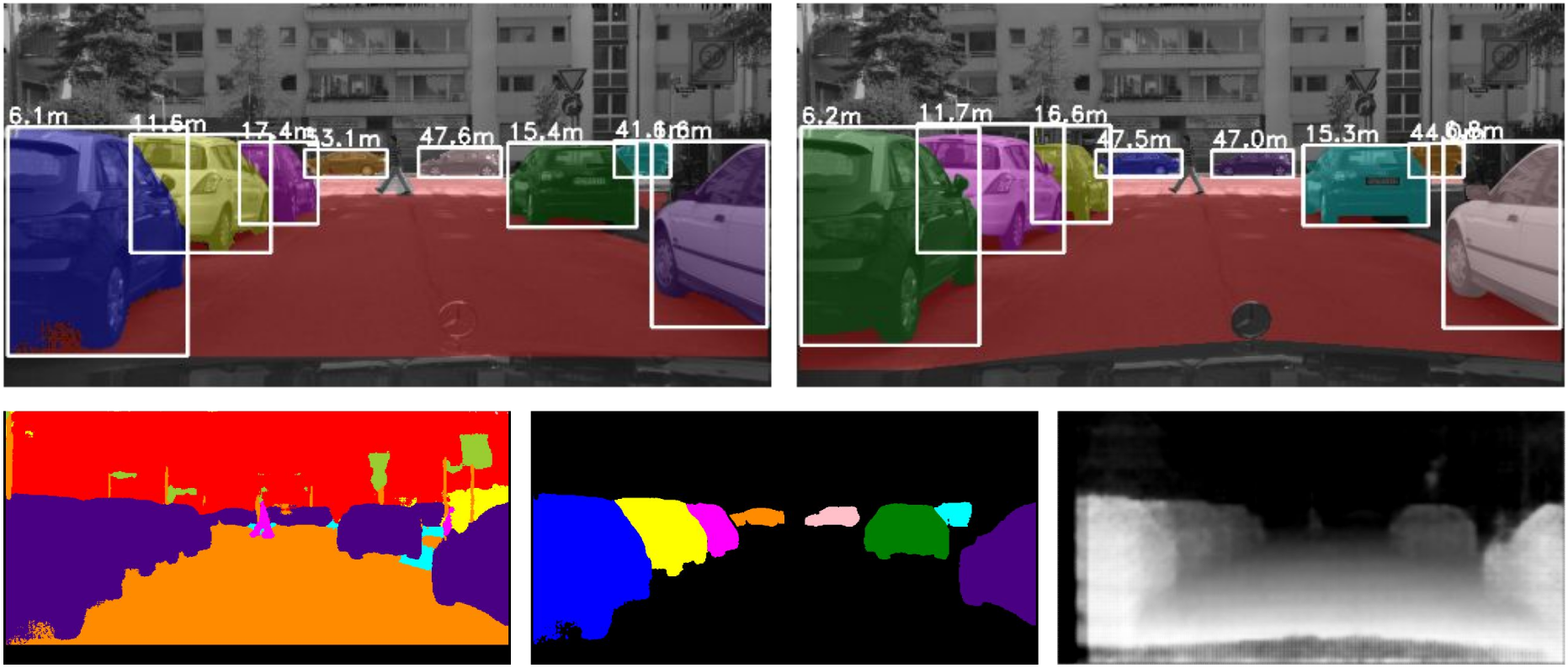

Torch implementation for simultaneous semantic segmentation, instance segmentation and single-image depth estimation. Two videos can be found here and here.

Example:

If you use this code for your research, please cite our papers:

Fast Scene Understanding for Autonomous Driving

Davy Neven, Bert De Brabandere, Stamatios Georgoulis, Marc Proesmans and Luc Van Gool

Published at the "Deep Learning for Vehicle Perception" workshop at the IEEE Intelligent Vehicles Symposium 2017

and

Semantic Instance Segmentation with a Discriminative Loss Function

Bert De Brabandere, Davy Neven and Luc Van Gool

Published at the "Deep Learning for Robotic Vision" workshop at CVPR 2017

Torch dependencies:

Data dependencies:

Download Cityscapes and run the scripts createTrainIdLabelImgs.py and createTrainIdInstanceImgs.py (from cityscapesScripts) to create annotation images based on the training label IDs. Make sure that the folder is named cityscapes.
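Before generating the file lists, a quick check can catch a misnamed folder or a skipped conversion step. The sketch below is a hypothetical helper (`check_cityscapes_layout` is not part of this repository). It assumes the standard Cityscapes layout with `leftImg8bit`, `gtFine` and `disparity` subfolders, and only verifies that at least one `*_labelTrainIds.png` and one `*_instanceTrainIds.png` file exists for each split.

```shell
# Hypothetical pre-flight check (not part of this repository).
# Usage: check_cityscapes_layout /path/to/cityscapes
check_cityscapes_layout() {
    dir="$1"
    # The dataset folder itself must be named "cityscapes".
    if [ "$(basename "$dir")" != "cityscapes" ]; then
        echo "error: folder must be named 'cityscapes', got '$(basename "$dir")'" >&2
        return 1
    fi
    # Subfolders that the txt file lists are built from.
    for sub in leftImg8bit gtFine disparity; do
        if [ ! -d "$dir/$sub" ]; then
            echo "error: missing $dir/$sub" >&2
            return 1
        fi
    done
    # The two preparation scripts must have produced trainId images for both splits.
    for split in train val; do
        for kind in labelTrainIds instanceTrainIds; do
            if ! find "$dir/gtFine/$split" -name "*_${kind}.png" | grep -q .; then
                echo "error: no *_${kind}.png files in $dir/gtFine/$split" >&2
                return 1
            fi
        done
    done
    echo "ok: $dir looks ready"
}
```

Calling it on the dataset folder prints a single `ok:` line, or an error naming the first missing piece.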

Afterwards, create the following txt files:

cd CITYSCAPES_FOLDER

ls leftImg8bit/train/*/*.png > trainImages.txt

ls leftImg8bit/val/*/*.png > valImages.txt

ls gtFine/train/*/*labelTrainIds.png > trainLabels.txt

ls gtFine/val/*/*labelTrainIds.png > valLabels.txt

ls gtFine/train/*/*instanceTrainIds.png > trainInstances.txt

ls gtFine/val/*/*instanceTrainIds.png > valInstances.txt

ls disparity/train/*/*.png > trainDepth.txt

ls disparity/val/*/*.png > valDepth.txt
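The eight `ls` commands can also be written as one loop, followed by a check that line *i* of every list refers to the same frame. This is a sketch assuming the lists are read line by line in parallel, so an image is matched to its label, instance and depth maps only by position; a missing or extra file would then silently misalign everything after it. `make_cityscapes_lists`, `frame_ids` and `check_cityscapes_lists` are hypothetical helper names, and the frame ID is taken to be the `city_sequence_frame` prefix that Cityscapes uses in its file names. `LC_ALL=C` is an addition that forces byte-wise sorting so all lists share one order.

```shell
# Hypothetical helpers (not part of this repository).
# Run them from inside CITYSCAPES_FOLDER, like the ls commands above.

# Write the eight file lists; LC_ALL=C makes ls sort byte-wise,
# so every list uses the same ordering regardless of the user's locale.
make_cityscapes_lists() {
    for split in train val; do
        LC_ALL=C ls leftImg8bit/$split/*/*.png            > "${split}Images.txt"
        LC_ALL=C ls gtFine/$split/*/*labelTrainIds.png    > "${split}Labels.txt"
        LC_ALL=C ls gtFine/$split/*/*instanceTrainIds.png > "${split}Instances.txt"
        LC_ALL=C ls disparity/$split/*/*.png              > "${split}Depth.txt"
    done
}

# Reduce each path in a list to its frame ID (city_sequence_frame), e.g.
#   gtFine/train/aachen/aachen_000000_000019_gtFine_labelTrainIds.png -> aachen_000000_000019
frame_ids() {
    sed -E 's#.*/##; s#_(leftImg8bit|gtFine|disparity).*$##' "$1"
}

# Fail if any list is empty or if line i of a list names a different
# frame than line i of the matching image list.
check_cityscapes_lists() {
    for split in train val; do
        ref=$(frame_ids "${split}Images.txt")
        if [ -z "$ref" ]; then
            echo "error: ${split}Images.txt is empty or missing" >&2
            return 1
        fi
        for kind in Labels Instances Depth; do
            if [ "$(frame_ids "${split}${kind}.txt")" != "$ref" ]; then
                echo "error: ${split}${kind}.txt does not line up with ${split}Images.txt" >&2
                return 1
            fi
        done
        echo "ok: $split lists aligned ($(wc -l < "${split}Images.txt" | tr -d ' ') frames)"
    done
}
```

Running `make_cityscapes_lists` and then `check_cityscapes_lists` prints one `ok:` line per split, or an error naming the first list that is out of step.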

To download both the pretrained segmentation model for training and a trained model for testing, run:

sh download.sh

To test the trained model, make sure you have downloaded it and prepared the Cityscapes dataset (scripts and txt files, see above). Then run:

qlua test.lua -data_root CITYSCAPES_ROOT

where CITYSCAPES_ROOT is the folder in which the cityscapes folder is located. For other options, see test_opts.lua.

To train your own model, run:

qlua main.lua -data_root CITYSCAPES_ROOT -save true -directory PATH_TO_SAVE

For other options, see opts.lua.