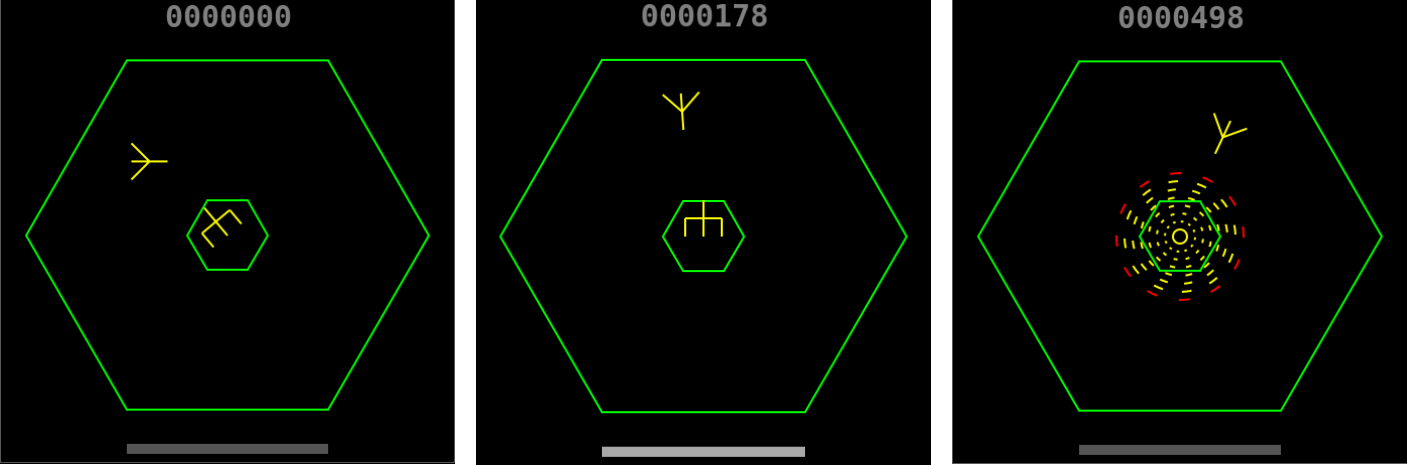

Space Fortress is a benchmark for studying context and temporal sensitivity of deep reinforcement learning algorithms.

This repository contains:

- An OpenAI Gym-compatible RL environment for Space Fortress, courtesy of Shawn Betts and Ryan Hope (hosted on Bitbucket)

- Baseline code for deep reinforcement learning (PPO, A2C, Rainbow) on the Space Fortress environments

This repository accompanies our arXiv paper 1809.02206. If you use this code or would like to cite our paper, please use the following BibTeX entry:

@article{agarwal2018challenges,
  author = {Akshat Agarwal and Ryan Hope and Katia Sycara},
  title = {Challenges of Context and Time in Reinforcement Learning: Introducing Space Fortress as a Benchmark},
  journal = {arXiv preprint arXiv:1809.02206},
  year = {2018},
}

The Space Fortress environments require Python 3.5 and OpenAI Gym. To install them:

- `cd` into the `python/spacefortress` folder and run `pip install -e .`

- Then `cd` into the `python/spacefortress-gym` folder and run `pip install -e .`

Done! You can now add `import spacefortress.gym` to your script and start using the Space Fortress environments.
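As a quick smoke test after installation, a random agent can play one episode. The sketch below assumes the classic (2018-era) Gym API, where `reset()` returns an observation and `step()` returns `(obs, reward, done, info)`; newer Gym/Gymnasium releases changed both signatures, so adjust if your installed version differs. The function name `random_rollout` is hypothetical and not part of this repository.

```python
def random_rollout(env, max_steps=1000):
    """Play one episode with uniformly random actions.

    Assumes the classic Gym API (an assumption, not confirmed by this README):
    reset() -> obs and step(action) -> (obs, reward, done, info).
    Returns (total_reward, steps_taken).
    """
    env.reset()
    total_reward = 0.0
    steps = 0
    done = False
    # Stop at the end of the episode or after max_steps, whichever comes first.
    while not done and steps < max_steps:
        action = env.action_space.sample()  # random action from the env's action space
        _obs, reward, done, _info = env.step(action)
        total_reward += reward
        steps += 1
    return total_reward, steps
```

Usage: after `import gym` and `import spacefortress.gym`, create an environment with `env = gym.make("<env-id>")`, where `<env-id>` is one of the IDs registered by `spacefortress.gym` (the exact IDs are not listed in this README), then call `random_rollout(env)`.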

To run the RL baselines, install PyTorch (v0.4 or higher) from the official PyTorch website, and then run:

`pip install numpy tensorboardX gym_vecenv opencv-python atari-py plotly`

Or you can install the dependencies individually:

- numpy

- PyTorch (v0.4 or higher)

- tensorboardX

- gym_vecenv

- opencv-python (`pip install opencv-python`)

- atari-py

- plotly
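To check which of the dependencies above are already installed, a short script can look up each package's import name without importing it. This is a sketch: the mapping from pip names to import names is standard for these packages (e.g. `opencv-python` imports as `cv2`, `atari-py` as `atari_py`, PyTorch as `torch`), but the `gym_vecenv` import name is an assumption. `DEPENDENCIES` and `missing_dependencies` are hypothetical names.

```python
import importlib.util

# pip package name -> name used in `import` statements.
# The gym_vecenv entry is assumed; the others are the usual import names.
DEPENDENCIES = {
    "numpy": "numpy",
    "torch": "torch",  # PyTorch (v0.4 or higher)
    "tensorboardX": "tensorboardX",
    "gym_vecenv": "gym_vecenv",
    "opencv-python": "cv2",
    "atari-py": "atari_py",
    "plotly": "plotly",
}


def missing_dependencies(deps=DEPENDENCIES):
    """Return the pip names of packages whose top-level module cannot be found."""
    # find_spec returns None for a missing top-level module instead of raising.
    return [pip_name for pip_name, module in deps.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_dependencies()
    if missing:
        print("Missing:", " ".join(missing))
    else:
        print("All dependencies found.")
```

Checking with `find_spec` rather than importing avoids loading heavy packages such as PyTorch just to test for their presence.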

Credit to ikostrikov and Kaixhin for their excellent implementations of PPO/A2C and Rainbow, respectively.

In the rl folder, run

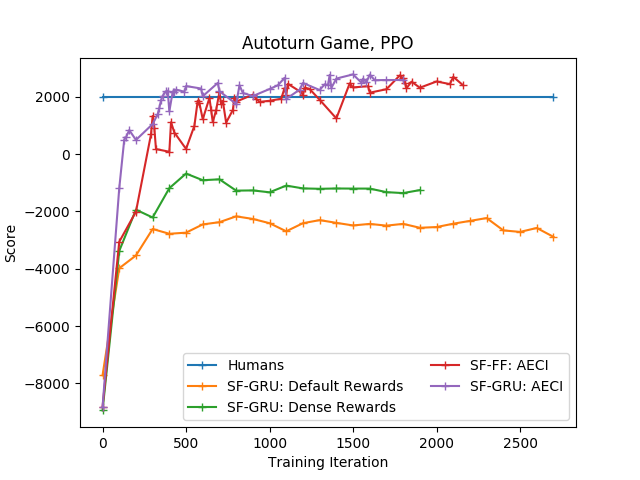

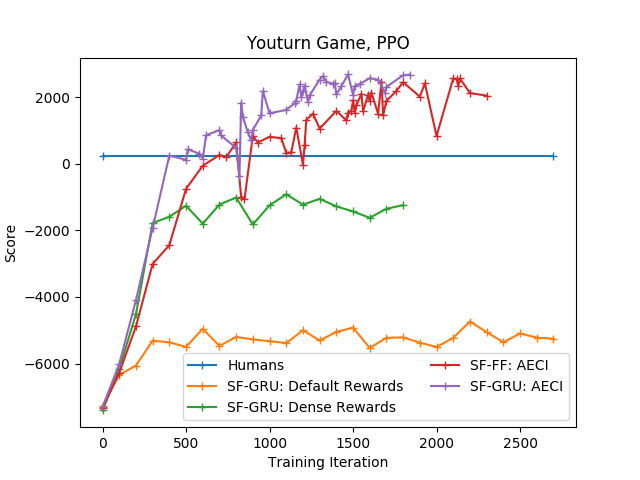

python train.py --env-name youturnBy default, uses recurrent (SF-GRU) architecture and PPO algorithm. To use A2C, add --a2c in the run command, and to use the feedforward SF-FF architecture, add --feedforward in the run command. Change the --env-name flag to autoturn to use that version of Space Fortress. For details on Autoturn and Youturn, please refer to the paper (linked above).

Flags for algorithmic hyperparameters are defined in `rl/arguments.py` and can be passed in the run command.
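The documented flags combine into 2 games × 2 algorithms × 2 architectures = 8 training configurations. The sketch below builds the command line for each one using only the flags named in this README (`--env-name`, `--a2c`, `--feedforward`); the helper names are hypothetical, and it deliberately accepts only the two game variants mentioned here.

```python
from itertools import product

GAMES = ("youturn", "autoturn")


def train_command(env_name="youturn", a2c=False, feedforward=False):
    """Build the argv for rl/train.py from the flags documented in this README.

    The defaults reproduce the README's default run: PPO with the recurrent
    SF-GRU architecture.
    """
    if env_name not in GAMES:
        raise ValueError("env_name must be 'youturn' or 'autoturn'")
    cmd = ["python", "train.py", "--env-name", env_name]
    if a2c:
        cmd.append("--a2c")          # A2C instead of the default PPO
    if feedforward:
        cmd.append("--feedforward")  # SF-FF instead of the default SF-GRU
    return cmd


def all_train_commands():
    """One command per (game, algorithm, architecture) combination."""
    return [train_command(env, a2c, ff)
            for env, a2c, ff in product(GAMES, (False, True), (False, True))]
```

Each command can then be launched from the repository root with `subprocess.run(cmd, cwd="rl", check=True)`, which runs it inside the `rl` folder as the instructions require.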

To train a Rainbow agent, go to the `rl/rainbow` folder and run `python main.py --game youturn`.

Flags for algorithmic hyperparameters are defined in `rl/rainbow/main.py` and can be passed in the run command.

To evaluate a trained PPO or A2C model, go to the `rl` folder and run `python evaluate.py --env-name youturn --load-dir <specify trained model file>`.

To evaluate a model with the feedforward architecture, add the `--feedforward` flag to the run command. Add `--render` to watch the agent play the game.

To evaluate a trained Rainbow model, go to the `rl/rainbow` folder and run `python main.py --game youturn --evaluate --model <specify trained model file>`.
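The two codebases use different flag names for evaluation (`--env-name`/`--load-dir` versus `--game`/`--evaluate`/`--model`). The sketch below wraps both in one hypothetical helper that returns the working directory and argv. The codebase labels `"ppo_a2c"` and `"rainbow"` are made up for illustration, and because `--feedforward` and `--render` are documented here only for `evaluate.py`, the sketch rejects them for Rainbow rather than guessing.

```python
def eval_command(codebase, game, model_path, feedforward=False, render=False):
    """Return (working_dir, argv) for evaluating a trained model.

    codebase: "ppo_a2c" for rl/evaluate.py, "rainbow" for rl/rainbow/main.py
    (hypothetical labels). working_dir is relative to the repository root.
    """
    if codebase == "ppo_a2c":
        cmd = ["python", "evaluate.py", "--env-name", game, "--load-dir", model_path]
        if feedforward:
            cmd.append("--feedforward")  # must match the architecture used in training
        if render:
            cmd.append("--render")       # watch the agent play
        return "rl", cmd
    if codebase == "rainbow":
        if feedforward or render:
            raise ValueError("--feedforward/--render are only documented for evaluate.py")
        return "rl/rainbow", ["python", "main.py", "--game", game,
                              "--evaluate", "--model", model_path]
    raise ValueError("unknown codebase: " + repr(codebase))
```

Usage: `cwd, cmd = eval_command("ppo_a2c", "youturn", path_to_model, feedforward=True)` followed by `subprocess.run(cmd, cwd=cwd, check=True)`.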