A codebase for Kinyarwanda speech synthesis (text-to-speech) based on the MB-iSTFT-VITS2 model in PyTorch. The original codebase and description come from MB-iSTFT-VITS2, which is itself a hybrid of vits2_pytorch and MB-iSTFT-VITS.

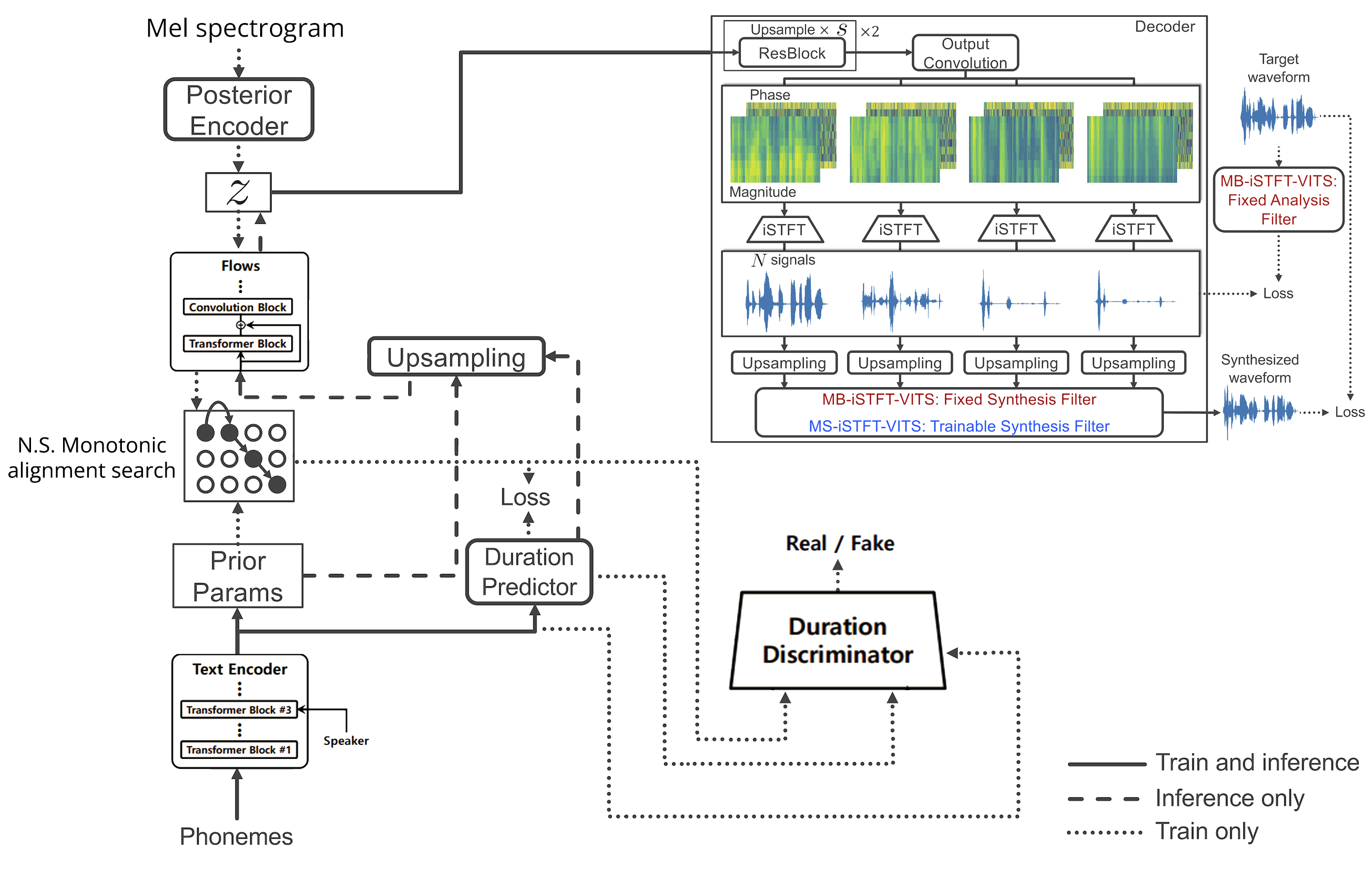

An architectural depiction of the model is presented below; its details can be found in the original sources.

- Check out the code in the `Inference` directory and install the `monotonic_align` and `kinyatts` modules:

`pip install -e ./Inference/monotonic_align/`

`pip install -e ./Inference/`

- Download the pre-trained Kinyarwanda TTS model `TTS_MODEL_ms_ktjw_istft_vits2_base_1M.pt`.

- Go to the `kinyatts` sub-directory and run an inference server using uWSGI:

`cd Inference/kinyatts/`

`nohup sh run.sh &`

- Alternatively, use the provided Jupyter notebook to synthesize speech: `Inference/kinyatts/kinyatts_inference.ipynb`
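Before starting the server or opening the notebook, it can help to confirm that the setup steps above actually took effect. The following is a minimal sketch of such a check and is not part of the repository. It assumes that the editable installs expose top-level packages named `monotonic_align` and `kinyatts` (mirroring the directory names under `Inference/`) and that the checkpoint has been saved locally; `preflight`, `missing_modules` and `checkpoint_ok` are hypothetical helper names.

```python
"""Hypothetical preflight check for the Kinyarwanda TTS inference setup.

Assumptions (not confirmed by this README): the editable installs expose
top-level packages named `monotonic_align` and `kinyatts`, and the
pre-trained checkpoint has been downloaded to a local path.
"""
import importlib.util
import sys
from pathlib import Path

# Assumed import names, mirroring the directory names under Inference/.
REQUIRED_MODULES = ("monotonic_align", "kinyatts")
# File name of the pre-trained checkpoint mentioned in the README.
DEFAULT_CHECKPOINT = "TTS_MODEL_ms_ktjw_istft_vits2_base_1M.pt"


def missing_modules(names=REQUIRED_MODULES):
    """Return the top-level module names that cannot be found on the import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def checkpoint_ok(path):
    """Return True if `path` points to an existing, non-empty regular file."""
    p = Path(path)
    return p.is_file() and p.stat().st_size > 0


def preflight(checkpoint=DEFAULT_CHECKPOINT, modules=REQUIRED_MODULES):
    """Collect human-readable problems; an empty list means the setup looks complete."""
    problems = [f"module not installed: {m}" for m in missing_modules(modules)]
    if not checkpoint_ok(checkpoint):
        problems.append(f"checkpoint missing or empty: {checkpoint}")
    return problems
```

From a Python shell or the notebook, `print(preflight("/path/to/TTS_MODEL_ms_ktjw_istft_vits2_base_1M.pt"))` should print an empty list once both installs succeeded and the checkpoint path is correct. The check only uses the standard library, so it can run before PyTorch or the model code is importable.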

Follow the instructions in the Training codebase to install the requirements and train a basic multi-speaker TTS model.