This is the official repository for the ssl-sonar-images paper published at the LatinX in AI workshop @CVPR2022.

Self-supervised Learning for Sonar Image Classification

Alan Preciado-Grijalva, Bilal Wehbe, Miguel Bande Firvida, Matias Valdenegro-Toro

CVPR 2022

[paper] [poster] [slides] [talk]

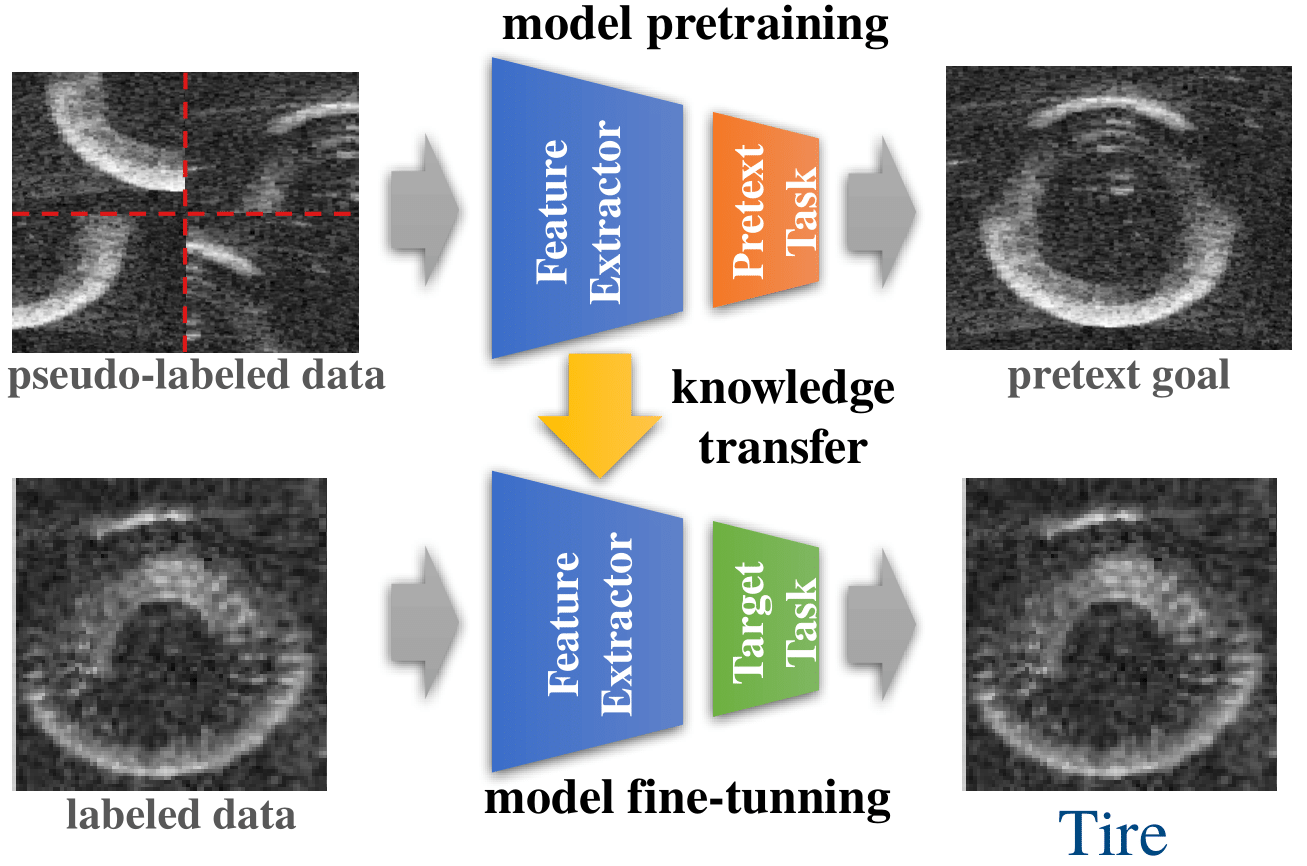

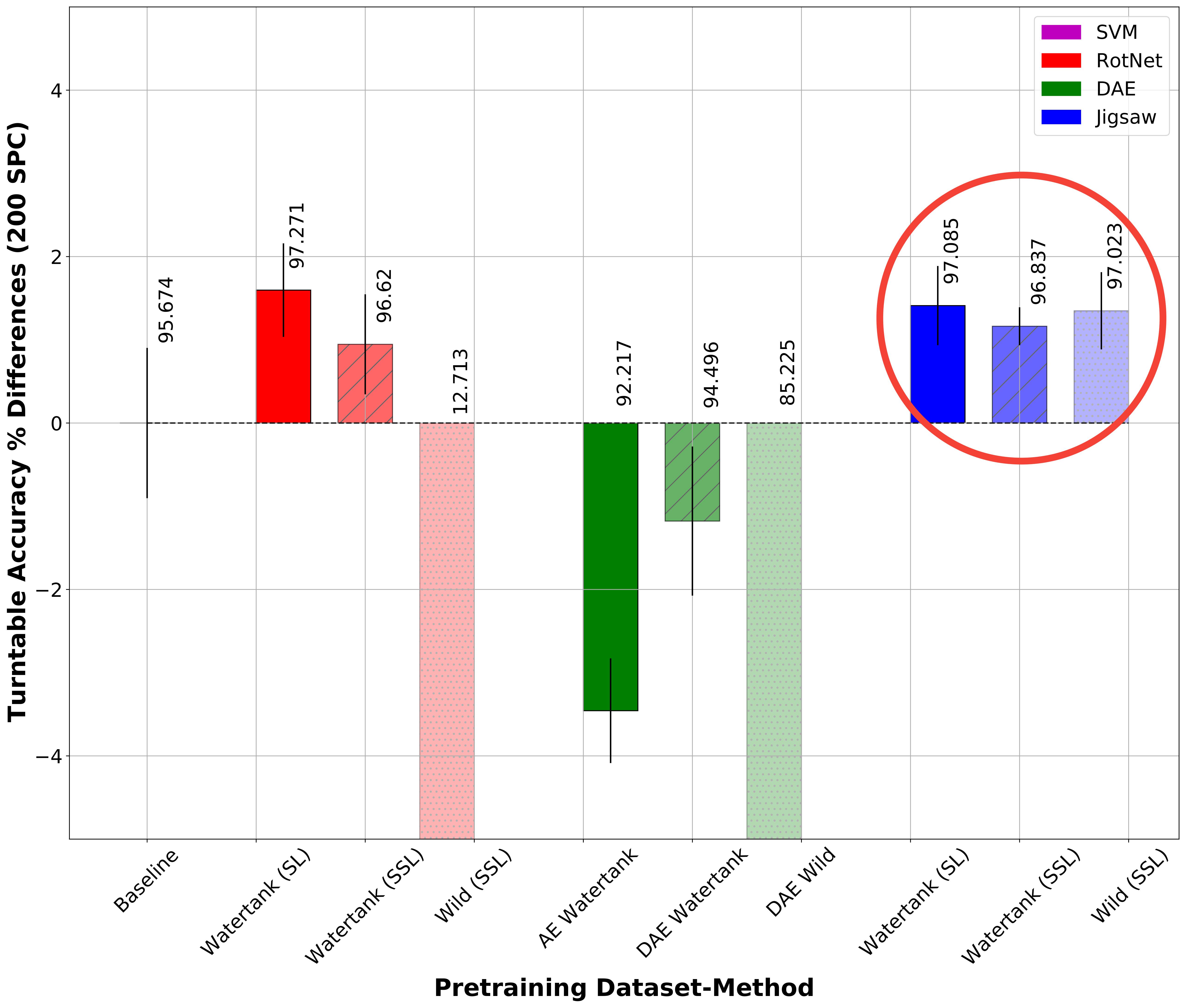

Self-supervised learning has proved to be a powerful approach to learning image representations without the need for large labeled datasets. For underwater robotics, it is of great interest to design computer vision algorithms that improve perception capabilities such as sonar image classification. Due to the confidential nature of sonar imaging and the difficulty of interpreting sonar images, it is challenging to create large, public, labeled sonar datasets to train supervised learning algorithms. In this work, we investigate the potential of three self-supervised learning methods (RotNet, Denoising Autoencoders, and Jigsaw) to learn high-quality sonar image representations without the need for human labels. We present pretraining and transfer learning results on real-life sonar image datasets. Our results indicate that, for all three methods, self-supervised pretraining yields classification performance comparable to supervised pretraining in a few-shot transfer learning setup.

- Datasets: We present real-life sonar datasets for pretraining and transfer learning evaluations

- SSL Approaches: We study three self-supervised learning algorithms that learn representations without labels (RotNet, Denoising Autoencoders, Jigsaw Puzzle)

- Pretrained Models: We provide pretrained models for further computer vision research on sonar image datasets

- Results: Our results indicate that self-supervised pretraining yields results competitive with supervised pretraining

In this work, we performed pretraining experiments on the Watertank dataset and transfer learning experiments on the Turntable dataset. If you want to explore our SSL methods with this data, download both datasets from the Forward-Looking Sonar Marine Debris Datasets repository and place them in a datasets/ directory.

The install.yml file specifies the dependencies needed to run the models. Create the conda environment with:

conda env create -f install.yml

For RotNet, you can find our pretrained models here:

src/rotnet/pretraining/results

We provide both self-supervised and supervised pretrained models. For example, a pretrained DenseNet can be found in:

results/self_supervised_learning/checkpoints/sonar1/densenet/batch_size_128/96x96_substract_mean_online_aug_width_16

Alternatively, you can pretrain a network (e.g., DenseNet) from scratch by going to

src/rotnet/pretraining/run_experiments/densenet

and running:

bash train_self_supervised_sonar1.sh
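The RotNet pretext task asks the network to predict which of four rotations (0, 90, 180 or 270 degrees) was applied to an input image, so the training labels are generated automatically. The shell script runs the actual pretraining; the snippet below is only a minimal NumPy sketch of how a rotation-labelled batch can be built, assuming single-channel square images stored in an array of shape (N, H, W). `make_rotation_batch` is a hypothetical helper, not a function from this repository.

```python
import numpy as np


# Hypothetical helper (not from this repository): builds a RotNet-style batch.
def make_rotation_batch(images):
    """Return 4*N rotated copies of `images` and their rotation labels.

    images: array of shape (N, H, W) with H == W (e.g. 96x96 sonar crops).
    Label k means the image was rotated by k * 90 degrees counter-clockwise.
    """
    if images.ndim != 3 or images.shape[1] != images.shape[2]:
        raise ValueError("expected square images of shape (N, H, W)")
    rotated, labels = [], []
    for k in range(4):
        # axes=(1, 2) rotates within the image plane, leaving the batch axis alone.
        rotated.append(np.rot90(images, k=k, axes=(1, 2)))
        labels.append(np.full(len(images), k, dtype=np.int64))
    return np.concatenate(rotated), np.concatenate(labels)


batch = np.arange(2 * 3 * 3, dtype=np.float32).reshape(2, 3, 3)
x, y = make_rotation_batch(batch)
print(x.shape, y)  # (8, 3, 3) [0 0 1 1 2 2 3 3]
```

Including all four rotations of every image keeps the four pretext classes balanced; sampling a single random rotation per image is a common alternative.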

For the Denoising Autoencoder, go to

src/denoising_autoencder/

and run the cells in the Jupyter notebook:

jupyter notebook train_denoising_autoencoder.ipynb
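A denoising autoencoder is trained to reconstruct a clean image from a corrupted copy, so the clean image itself serves as the training target. The notebook defines the actual architecture and noise model; the sketch below only illustrates the input/target pairing, assuming additive Gaussian noise with a hypothetical standard deviation `sigma` and pixel intensities scaled to [0, 1]. `make_denoising_pair` and `reconstruction_mse` are illustrative names, not functions from this repository.

```python
import numpy as np


# Assumption: additive Gaussian noise; the notebook's noise model may differ.
def make_denoising_pair(images, sigma=0.1, rng=None):
    """Return (noisy_input, clean_target) for denoising-autoencoder training.

    Assumes pixel values in [0, 1]; noise with std `sigma` is added and the
    result is clipped back to the valid range.
    """
    rng = np.random.default_rng() if rng is None else rng
    noisy = images + rng.normal(0.0, sigma, size=images.shape)
    return np.clip(noisy, 0.0, 1.0), images


def reconstruction_mse(reconstruction, target):
    """Mean squared error between the decoder output and the clean image."""
    return float(np.mean((reconstruction - target) ** 2))


rng = np.random.default_rng(0)
clean = rng.uniform(0.0, 1.0, size=(4, 96, 96))  # stand-in for sonar crops
noisy, target = make_denoising_pair(clean, sigma=0.1, rng=rng)
# An identity "model" that returns its input is penalized by the leftover noise.
print(reconstruction_mse(noisy, target))
```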

For Jigsaw, cd into

src/jigsaw/

and run the cells in the following Jupyter notebook:

jupyter notebook train_jigsaw_puzzle_time_distributed_wrapper.ipynb

Note: this generates permutation datasets containing several thousand images, so expect them to require 3-4 GB of memory.
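In the Jigsaw pretext task, an image is cut into a grid of tiles, the tiles are shuffled with one permutation from a fixed set, and the network predicts which permutation was used. The notebook name suggests that the tiles are processed by a shared network through Keras' `TimeDistributed` wrapper. The sketch below covers only the data side, under assumed settings (a 3x3 grid and a small, hypothetical permutation set); `make_tiles` and `make_jigsaw_sample` are illustrative names. If every source image is stored once per permutation, the generated dataset grows linearly with the number of permutations, which is consistent with the large memory footprint noted above.

```python
import numpy as np


def make_tiles(image, grid=3):
    """Cut an (H, W) image into grid*grid tiles of shape (H//grid, W//grid).

    Tiles are returned in row-major order; remainder pixels are cropped.
    """
    h, w = image.shape
    th, tw = h // grid, w // grid
    cropped = image[: th * grid, : tw * grid]
    # (grid, th, grid, tw) -> (grid, grid, th, tw) -> (grid*grid, th, tw)
    return cropped.reshape(grid, th, grid, tw).transpose(0, 2, 1, 3).reshape(-1, th, tw)


def make_jigsaw_sample(image, permutations, rng, grid=3):
    """Shuffle the tiles of `image` with a randomly chosen permutation.

    Returns (shuffled_tiles, label), where label indexes `permutations`.
    """
    tiles = make_tiles(image, grid)
    label = int(rng.integers(len(permutations)))
    return tiles[list(permutations[label])], label


# Assumption: hypothetical permutation set. Permutation sets are commonly
# chosen so that their members are far apart in Hamming distance.
PERMUTATIONS = [
    (0, 1, 2, 3, 4, 5, 6, 7, 8),
    (8, 7, 6, 5, 4, 3, 2, 1, 0),
    (2, 0, 1, 5, 3, 4, 8, 6, 7),
]

rng = np.random.default_rng(0)
image = np.arange(96 * 96, dtype=np.float32).reshape(96, 96)
tiles, label = make_jigsaw_sample(image, PERMUTATIONS, rng)
print(tiles.shape, label)  # tiles.shape is (9, 32, 32)
```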

For RotNet, you can evaluate the quality of the features learned during pretraining in a low-shot transfer learning setup. For example, to evaluate DenseNet121, go to:

src/rotnet/transfer_learning/run_experiments/densenet121

This directory contains two Jupyter notebooks that compare supervised and self-supervised pretraining. Run them with:

# supervised pretraining

jupyter notebook evaluate_SVM_transfer_learning_classification_supervised.ipynb

# self-supervised pretraining

jupyter notebook evaluate_SVM_transfer_learning_classification_self_supervised.ipynb
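As their names suggest, both notebooks fit an SVM classifier on features from a frozen pretrained network, so the resulting accuracy reflects how useful the pretraining was. The notebooks' exact SVM settings are not described here, and they may rely on a library implementation. As a dependency-light illustration only, the sketch below trains a one-vs-rest linear SVM by full-batch subgradient descent on the regularized hinge loss; the function names and hyperparameters (`lam`, `lr`, `epochs`) are hypothetical, and the toy clusters stand in for real extracted features.

```python
import numpy as np


# Illustrative stand-in; the notebooks' SVM settings are not reproduced here.
def train_linear_svm(X, y, n_classes, lam=0.01, lr=0.1, epochs=500):
    """One-vs-rest linear SVM on fixed feature vectors X of shape (n, d).

    For each class c, minimizes
        lam/2 * ||w||^2 + mean_i max(0, 1 - t_i * (w . x_i + b))
    with t_i = +1 if y_i == c else -1, using full-batch subgradient descent.
    """
    n, d = X.shape
    W = np.zeros((n_classes, d))
    b = np.zeros(n_classes)
    for c in range(n_classes):
        t = np.where(y == c, 1.0, -1.0)
        w, bc = np.zeros(d), 0.0
        for _ in range(epochs):
            active = t * (X @ w + bc) < 1.0  # samples inside the margin
            grad_w = lam * w - (t[active][:, None] * X[active]).sum(axis=0) / n
            grad_b = -t[active].sum() / n
            w -= lr * grad_w
            bc -= lr * grad_b
        W[c], b[c] = w, bc
    return W, b


def predict(X, W, b):
    """Assign each sample to the class with the largest decision value."""
    return np.argmax(X @ W.T + b, axis=1)


# Toy stand-in for frozen features: three well-separated 2-D clusters.
rng = np.random.default_rng(0)
centers = np.array([[3.0, 0.0], [0.0, 3.0], [-3.0, -3.0]])
y = np.repeat(np.arange(3), 20)
X = centers[y] + 0.3 * rng.standard_normal((60, 2))
W, b = train_linear_svm(X, y, n_classes=3)
print("train accuracy:", np.mean(predict(X, W, b) == y))
```

Comparing supervised and self-supervised pretraining then amounts to running the same classifier on features from each pretrained network and comparing accuracies.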

For the Denoising Autoencoder, run the following notebook:

jupyter notebook evaluate_denoising_autoencoder_transfer_learning_svm.ipynb

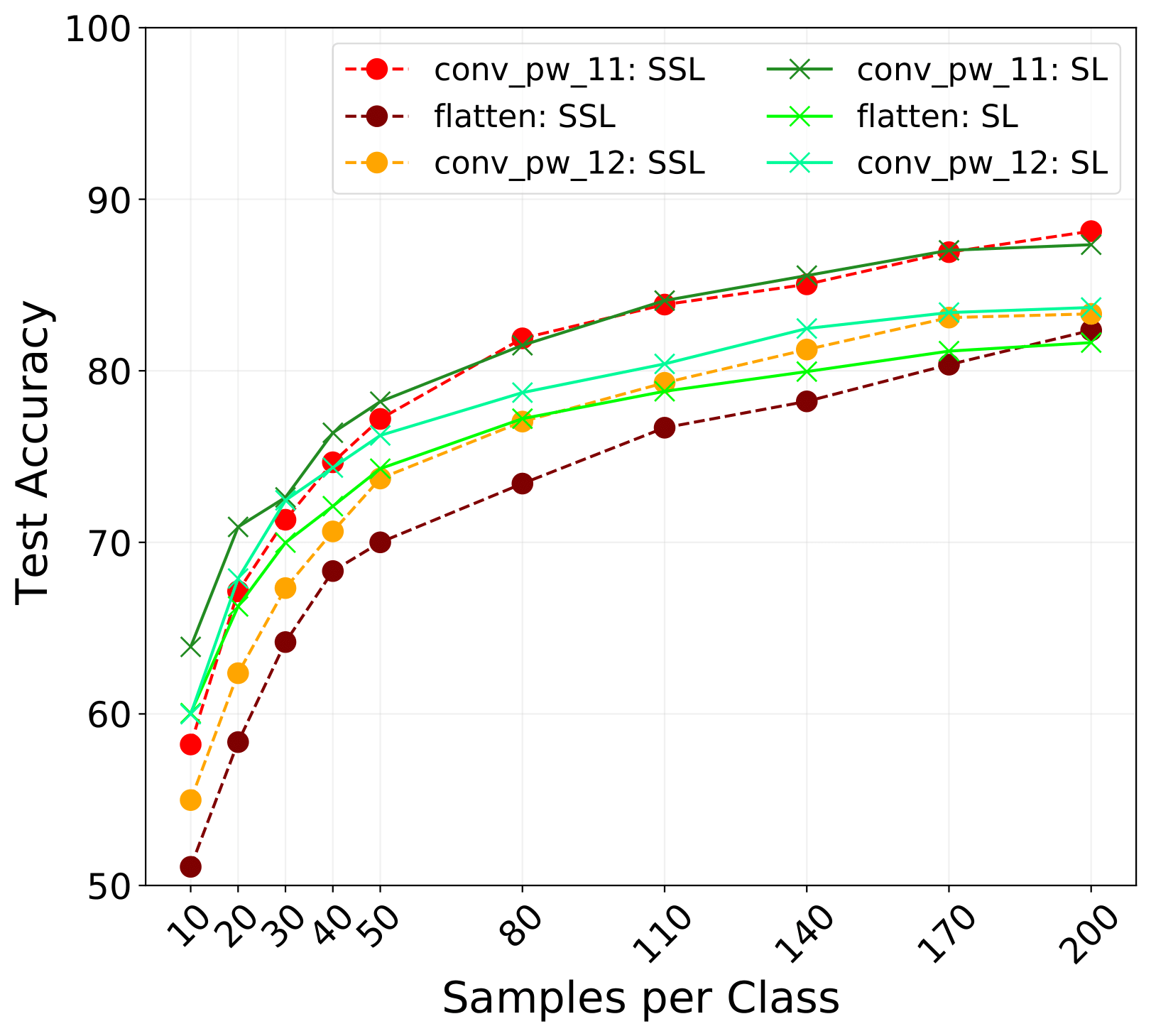

This notebook runs transfer learning evaluations for an increasing number of samples per class and for features extracted from specific hidden layers.
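The evaluation described above trains a classifier on a growing number of labelled examples per class. A minimal sketch of the per-class subsampling step is shown below, assuming integer class labels; `sample_k_per_class` is a hypothetical helper, and the notebooks' actual sample counts, random seeds, and layer choices are not reproduced here.

```python
import numpy as np


# Hypothetical helper (not from this repository): k-shot subsampling.
def sample_k_per_class(labels, k, rng):
    """Return sorted indices of k randomly chosen samples from every class.

    Raises ValueError if some class has fewer than k samples.
    """
    labels = np.asarray(labels)
    chosen = []
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)
        if len(idx) < k:
            raise ValueError(f"class {c} has only {len(idx)} samples (< {k})")
        chosen.append(rng.choice(idx, size=k, replace=False))
    return np.sort(np.concatenate(chosen))


# Example: 3 classes with 10 samples each, evaluated at k = 1, 2, 5.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1, 2], 10)
for k in (1, 2, 5):
    train_idx = sample_k_per_class(labels, k, rng)
    test_mask = np.ones(len(labels), dtype=bool)
    test_mask[train_idx] = False  # the remaining samples form the test split
    print(k, len(train_idx), int(test_mask.sum()))
# prints "1 3 27", then "2 6 24", then "5 15 15"
```

In a full evaluation, a classifier would be fit on the features at `train_idx` (taken from the chosen hidden layer) and scored on the samples selected by `test_mask`.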

Similarly, for Jigsaw, run the following notebook:

jupyter notebook evaluate_jigsaw_transfer_learning_svm.ipynb

If you find our research helpful, please consider citing:

@InProceedings{Preciado-Grijalva_2022_CVPR,
  author    = {Preciado-Grijalva, Alan and Wehbe, Bilal and Firvida, Miguel Bande and Valdenegro-Toro, Matias},
  title     = {Self-Supervised Learning for Sonar Image Classification},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},
  month     = {June},
  year      = {2022},
  pages     = {1499-1508}
}