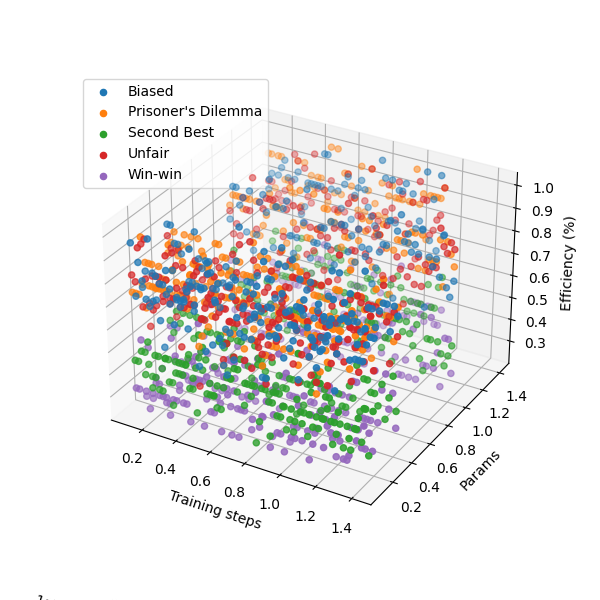

This is a toy project that tries to derive scaling laws for cooperative behaviour in language models. It adapts the setup from *Playing repeated games with Large Language Models* and uses the Pythia pretrained models to evaluate how behaviour changes across parameter counts and amounts of training.

The project was inspired by an idea from Gabe Mukobi.
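
To make the repeated-game setup concrete, here is a minimal sketch of a two-player iterated game loop that records a cooperation rate. It is not the project's code: the payoff values, the `Policy` type, `play_repeated_game`, and the two hand-written policies are all hypothetical, chosen only for illustration. In an actual experiment, a policy would presumably wrap a Pythia model prompted with the game history, and the cooperation rate would be compared across model sizes and checkpoints.

```python
from typing import Callable, Dict, List, Tuple

# A history is a list of (own_move, opponent_move) pairs, "C" = cooperate, "D" = defect.
History = List[Tuple[str, str]]
Policy = Callable[[History], str]

# Illustrative Prisoner's Dilemma payoffs (an assumption, not taken from the project):
# (my_move, their_move) -> my payoff.
PAYOFFS: Dict[Tuple[str, str], int] = {
    ("C", "C"): 3,
    ("C", "D"): 0,
    ("D", "C"): 5,
    ("D", "D"): 1,
}


def tit_for_tat(history: History) -> str:
    """Cooperate first, then copy the opponent's previous move."""
    return "C" if not history else history[-1][1]


def always_defect(history: History) -> str:
    """Defect on every round, regardless of history."""
    return "D"


def play_repeated_game(policy_a: Policy, policy_b: Policy, rounds: int = 10):
    """Play `rounds` rounds and return (score_a, score_b, cooperation_rate_a)."""
    history_a: History = []  # history from player A's point of view
    history_b: History = []  # history from player B's point of view
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = policy_a(history_a)
        move_b = policy_b(history_b)
        score_a += PAYOFFS[(move_a, move_b)]
        score_b += PAYOFFS[(move_b, move_a)]
        history_a.append((move_a, move_b))
        history_b.append((move_b, move_a))
    cooperation_rate_a = sum(own == "C" for own, _ in history_a) / rounds
    return score_a, score_b, cooperation_rate_a
```

Swapping a hand-written policy for a function that queries a model leaves the loop unchanged, so the same cooperation-rate metric can be collected for every model under comparison.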