Operationalizing Data Analytics and Machine Learning workloads can be challenging because the ecosystem of platforms and services used to build such workloads is large, which increases the complexity of deploying them to production. The complexity grows further with the continuous adoption of containers and container orchestration frameworks such as Kubernetes.

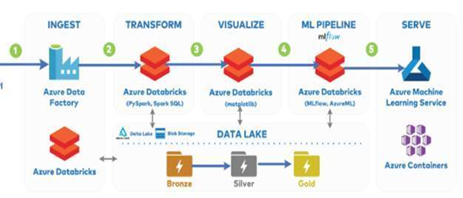

This repo demonstrates an approach to implementing DevOps pipelines for large-scale Data Analytics and Machine Learning (also called Data/MLOps) using a combination of Azure Databricks, MLFlow, and AzureML.

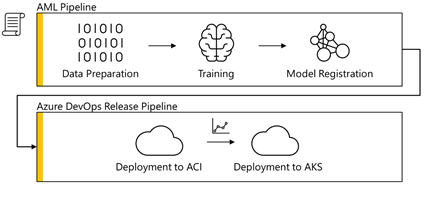

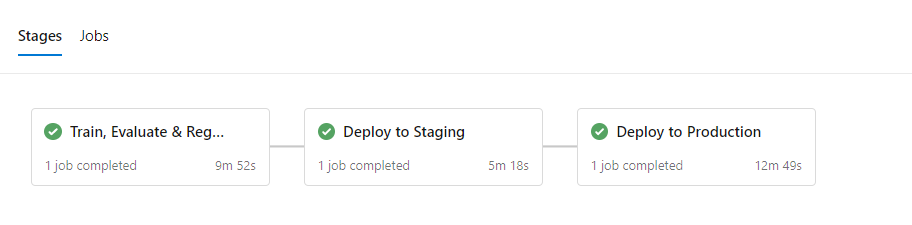

The DevOps pipeline is implemented in Azure DevOps. It deploys the workload in containerized form, simulating staging and production environments, to Azure Container Instances and Azure Kubernetes Service.

The diagram below shows a high-level overview of a generic DevOps process: build pipelines build the project's artifacts, followed by a testing and release process. Such a process enables faster deployment of individual modules without impacting the overall system, as well as the flexibility to deploy to one or more environments.

This tutorial applies to ML as well as DataOps workloads. To simplify things, it walks you through implementing Data/MLOps in the following general form:

Azure Databricks offers great capabilities for developing & building analytics & ML workloads for data ingestion, data engineering, and data science for various applications (e.g. Data Management, Batch processing, Stream processing, Graph processing, and distributed machine learning).

Such capabilities are offered in a unified experience for collaboration between the different team stakeholders, with support for code written in Scala, Python, SQL, and R, for both standard and Apache Spark applications.

Additionally, the platform provides a very convenient infrastructure management layer:

- Deploying workloads to highly scalable clusters is very easy.

- Clusters can be configured to auto-scale to adapt to the processing workload.

- Clusters can be set to work in an ephemeral way: they terminate automatically once a job is done or when there is no activity. This feature adds great value for managing costs.

- Databricks supports running containers through Databricks Container Services.
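As a rough illustration of the auto-scaling and auto-termination settings listed above, the sketch below builds a cluster definition as a plain Python dictionary. The `autoscale` and `autotermination_minutes` fields follow the Databricks Clusters API; the helper name, the cluster name, and the placeholder runtime and node type values are assumptions made for this example and are not taken from this repo.

```python
import json


def build_cluster_spec(min_workers: int, max_workers: int, idle_minutes: int) -> dict:
    """Build a cluster definition that auto-scales and terminates when idle."""
    if not 0 < min_workers <= max_workers:
        raise ValueError("expected 0 < min_workers <= max_workers")
    return {
        "cluster_name": "drinks-quality-demo",   # hypothetical name
        "spark_version": "<runtime-version>",    # placeholder, pick one offered by your workspace
        "node_type_id": "<node-type>",           # placeholder, pick one offered by your region
        # The cluster grows and shrinks between these bounds with the workload.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # The cluster terminates after this many minutes without activity.
        "autotermination_minutes": idle_minutes,
    }


spec = build_cluster_spec(min_workers=2, max_workers=8, idle_minutes=30)
print(json.dumps(spec, indent=2))
```

A definition like this would typically be sent to the workspace through the Databricks CLI or REST API; the exact call depends on the CLI version in use, so it is left out here.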

Such execution capabilities make Azure Databricks a great fit, not only for developing and building workloads, but also for running them and serving data.

This tutorial also demonstrates how Databricks notebooks can leverage MLFlow and Azure ML. While the two can seem similar, each one shines in a different place.

MLFlow is natively supported within Databricks: it manages the machine learning experiment and its runs within the Databricks workspace development environment. It therefore conveniently offers data scientists and engineers ML management and tracking capabilities for their models without having to leave their development environment.

The integration between MLFlow and AzureML, however, provides such management across environments. AzureML is eventually used, from within the MLFlow experiment, to build a Docker container image for the best-scored model, publish it to the Azure Container Registry, and afterwards deploy it to either Azure Container Instances or Azure Kubernetes Service.
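To make the "best-scored model" step more concrete, here is a minimal sketch of picking the best run from a set of experiment runs. It uses plain dictionaries rather than the MLFlow API; the record layout, the metric name `r2`, and the helper `pick_best_run` are all hypothetical and only illustrate the selection logic, not the notebooks in this repo.

```python
from typing import Dict, List


def pick_best_run(runs: List[Dict], metric: str, higher_is_better: bool = True) -> Dict:
    """Return the run record with the best value for `metric`."""
    # Ignore runs that never logged the metric (for example, failed runs).
    scored = [run for run in runs if metric in run.get("metrics", {})]
    if not scored:
        raise ValueError(f"no run reports the metric {metric!r}")
    chooser = max if higher_is_better else min
    return chooser(scored, key=lambda run: run["metrics"][metric])


# Hypothetical run records; real ones would come from the MLFlow experiment.
runs = [
    {"run_id": "a1", "metrics": {"r2": 0.61}},
    {"run_id": "b2", "metrics": {"r2": 0.74}},
    {"run_id": "c3", "metrics": {}},  # a run that logged nothing
]

best = pick_best_run(runs, metric="r2")
print(best["run_id"])  # prints b2
```

Whether a higher or lower value counts as "best" depends on the metric, which is why the direction is an explicit parameter.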

This repo is configured to run on and use Azure DevOps, so you need to prepare your environment with the following steps.

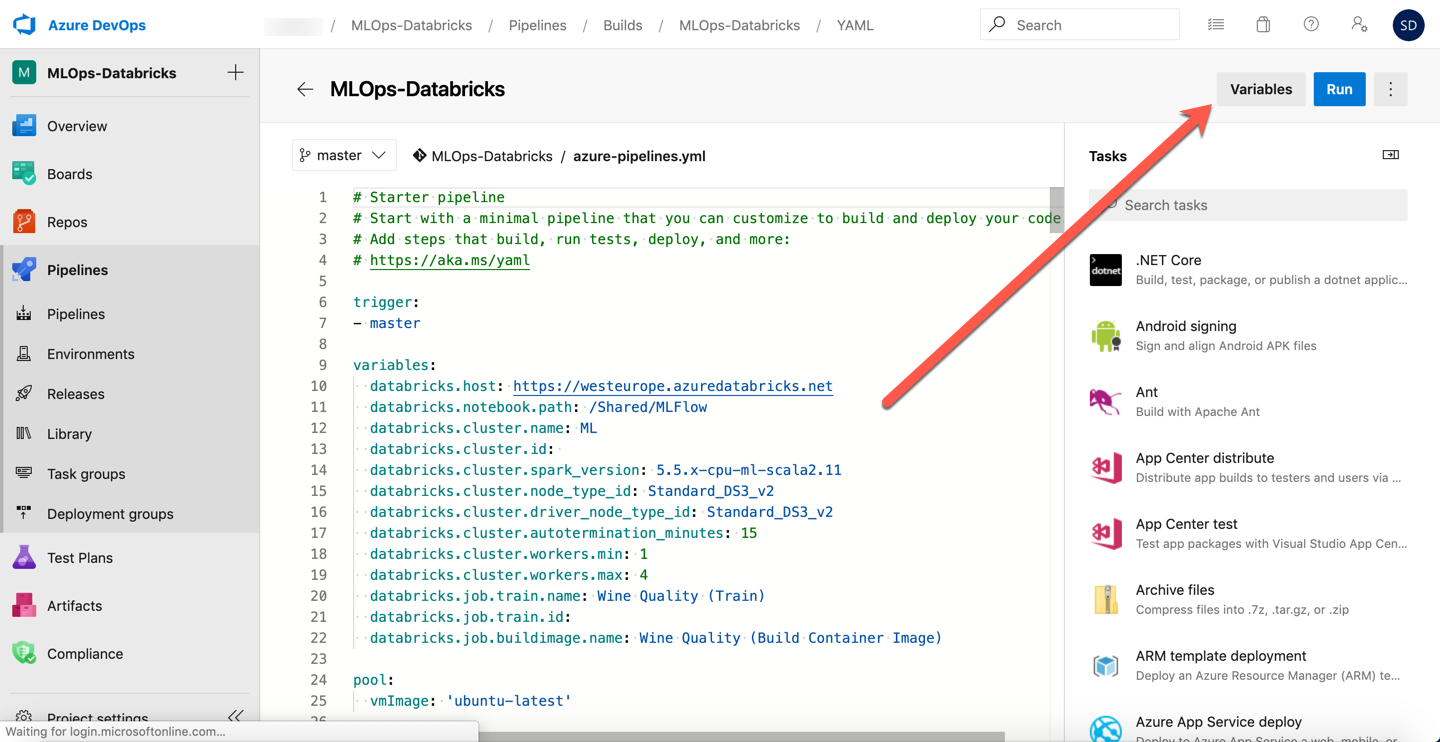

The DevOps Pipeline is a "multi-staged" pipeline and it is defined using the YAML file azure-pipelines.yml for Azure DevOps.

Note: Building GitHub workflow actions is in progress as well.

This example uses Azure DevOps as a CI/CD toolset, as well as Microsoft Azure services to host the trained Machine Learning model.

- At the time of creating this tutorial, GitHub Actions was still in beta. If you want to try this new feature, you have to sign up for the beta first.

In your Azure subscription, you need to create an Azure Databricks workspace to get started.

NOTE: I recommend placing the Azure Databricks workspace in a new Resource Group, so that you can clean everything up more easily afterwards.

As soon as you have access to the Azure DevOps platform, you're able to create a project to host your MLOps pipeline.

As soon as this is created, you can import this GitHub repository into your Azure DevOps project.

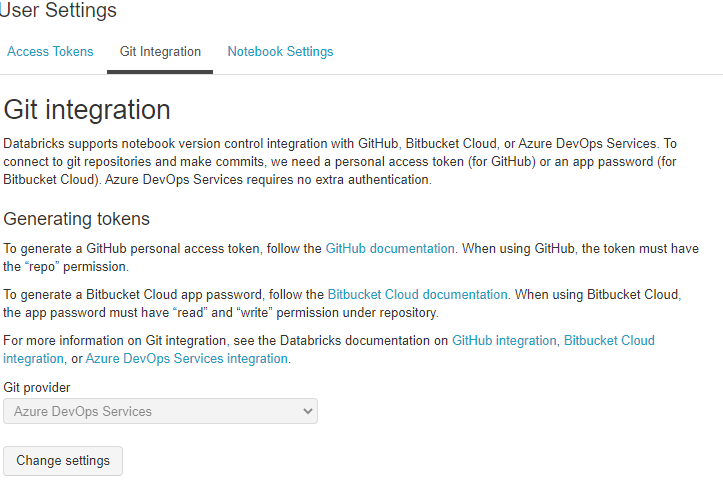

It is recommended to connect your notebooks to the Azure DevOps repo. This ensures that your changes and updates are pushed to the repo automatically and get built properly. The pipeline is automatically triggered by any commit/push to the repo.

To configure this, go to the "User Settings" and click on "Git Integration".

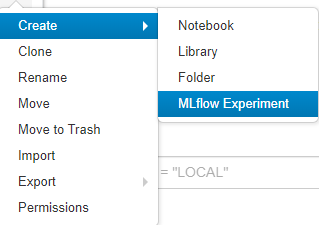

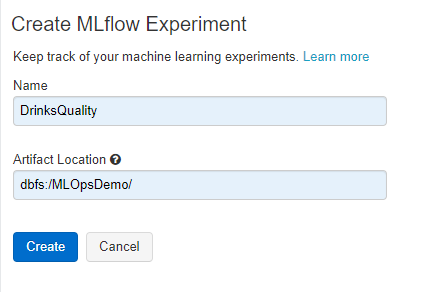

The Databricks notebooks use MLFlow under the hood. The MLFlow experiment has to be created after importing the notebooks into the Databricks workspace.

Clicking on the above link will open a screen where you can specify the name of the experiment and its location on DBFS. For this demo, make sure the MLFlow experiment's name is DrinksQuality.

By importing the GitHub files, you also imported the azure-pipelines.yml file.

This file can be used to create your first Build Pipeline.

This Build Pipeline is using a feature called "Multi-Stage Pipelines". This feature might not be enabled for you, so in order to use it, you should enable this preview feature.

To be able to run this pipeline, you also need to connect your Azure Databricks Workspace with the pipeline.

Therefore, you first need to generate an access token on Databricks.

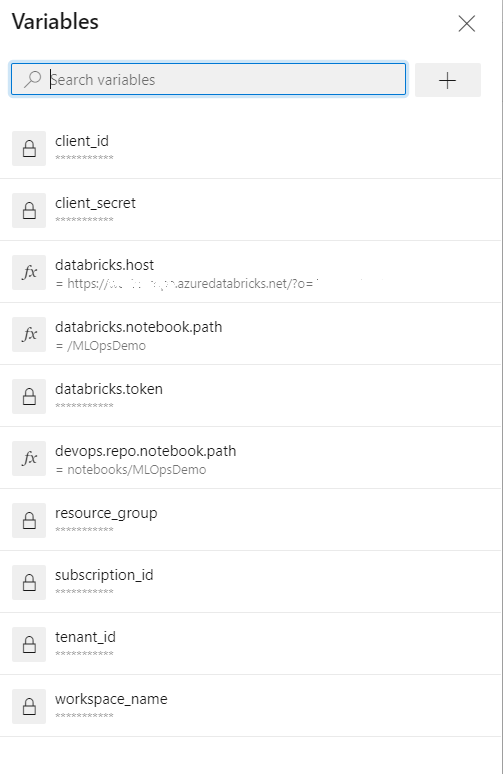

This token must be stored as an encrypted secret in your Azure DevOps Build Pipeline...

NOTE: The variable must be called databricks.token as it is referenced within the pipeline YAML file.

NOTE: There are additional variables that need to be defined to ease the build & deployment operation. You're free to decide if those variables should be defined as secrets or text values.

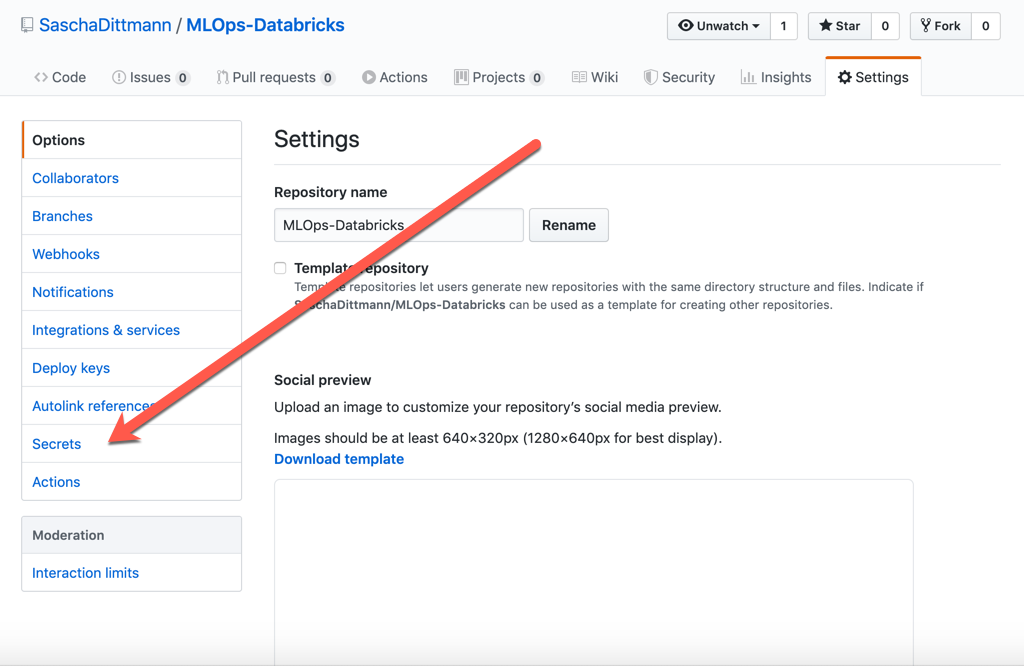

... or your GitHub Project.

NOTE: The GitHub Secret must be called DATABRICKS_TOKEN
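As a small illustration of how a script run by the pipeline might pick up this token, the sketch below reads it from the `DATABRICKS_TOKEN` environment variable and fails with a clear message if it is missing. It assumes the secret has been mapped into the step's environment under that name (Azure DevOps does not expose secret variables to scripts unless they are mapped explicitly); the helper name `read_databricks_token` is hypothetical and not part of this repo.

```python
import os
from typing import Mapping


def read_databricks_token(env: Mapping[str, str] = os.environ) -> str:
    """Return the Databricks access token from the environment, or fail loudly."""
    token = env.get("DATABRICKS_TOKEN", "").strip()
    if not token:
        raise RuntimeError(
            "DATABRICKS_TOKEN is not set; map the pipeline secret "
            "into this step's environment first"
        )
    return token


# Demonstration with an explicit mapping instead of the real environment.
try:
    read_databricks_token({})
except RuntimeError as err:
    print(f"Configuration problem: {err}")
```

Passing the environment as a parameter keeps the check easy to exercise locally without touching real credentials.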

The Databricks notebooks are also used for serving your model, by creating and leveraging an Azure Machine Learning workspace (and other resources) for you.

The Azure Databricks service requires access rights to do that, so you need to create a Service Principal in your Azure Active Directory.

You can do that directly in the Cloud Shell of the Azure Portal, by using one of these two commands:

    az ad sp create-for-rbac -n "http://MLOps-Databricks"

Least Privilege Principle: If you want to narrow that down to a specific Resource Group and Azure Role, use the following command:

    az ad sp create-for-rbac -n "http://MLOps-Databricks" --role contributor --scopes /subscriptions/{SubID}/resourceGroups/{ResourceGroup1}

Make a note of the result of this command, as you will need it in a later step.
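The command prints a JSON document. The later step stores its `tenant`, `appId`, and `password` properties as the Databricks secrets `tenant_id`, `client_id`, and `client_secret`. The following sketch shows that mapping in Python; the helper name and the sample values are hypothetical placeholders, and the sample only mimics the general shape of the command output.

```python
import json

# Property in the service principal output -> Databricks secret key used later.
SP_PROPERTY_TO_SECRET_KEY = {
    "tenant": "tenant_id",
    "appId": "client_id",
    "password": "client_secret",
}


def secrets_from_sp_output(raw_json: str) -> dict:
    """Extract the values that need to be stored as Databricks secrets."""
    sp = json.loads(raw_json)
    missing = [prop for prop in SP_PROPERTY_TO_SECRET_KEY if prop not in sp]
    if missing:
        raise KeyError(f"missing properties in command output: {missing}")
    return {key: sp[prop] for prop, key in SP_PROPERTY_TO_SECRET_KEY.items()}


# Placeholder output; a real one contains your own identifiers and password.
sample_output = """
{
  "appId": "<app-id>",
  "displayName": "MLOps-Databricks",
  "password": "<generated-password>",
  "tenant": "<tenant-id>"
}
"""

secrets = secrets_from_sp_output(sample_output)
# Print only the key names so the secret values never reach the console.
print(sorted(secrets))
```

Treat the real output like a password: avoid committing it to the repo or pasting it into notebooks.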

Azure Databricks has its own place to store secrets.

At the time of creating this example, this store can only be accessed via the Databricks command-line interface (CLI).

Although not required, you can install this CLI on your local machine or in the Azure Cloud Shell.

    pip install -U databricks-cli

NOTE: You need Python 2.7.9 or later / 3.6 or later to install and use the Databricks command-line interface (CLI).

Using the Databricks CLI, you can now create your own section (scope) for your secrets...

    databricks secrets create-scope --scope azureml

... and add the required secrets to the scope.

    # Use the "tenant" property from the Azure AD Service Principal command output
    databricks secrets put --scope azureml --key tenant_id

    # Use the "appId" property from the Azure AD Service Principal command output
    databricks secrets put --scope azureml --key client_id

    # Use the "password" property from the Azure AD Service Principal command output
    databricks secrets put --scope azureml --key client_secret

    databricks secrets put --scope azureml --key subscription_id

    databricks secrets put --scope azureml --key resource_group

    databricks secrets put --scope azureml --key workspace_name

NOTE: The Azure DevOps Pipeline installs and defines these secrets automatically. Databricks secret scopes can be passed as parameters, which gives notebooks the flexibility to use different secrets in different environments.
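A minimal sketch of how a notebook could read these six secrets from a scope that is passed in as a parameter is shown below. The secret lookup is injected as a function so the same code runs outside Databricks; inside a notebook it would wrap `dbutils.secrets.get`. The helper name, the widget name `secret_scope`, and the `azureml-staging` scope are assumptions made for this example, not names used by this repo.

```python
from typing import Callable, Dict

# The keys stored in the secret scope by the commands above.
AZUREML_SECRET_KEYS = (
    "tenant_id", "client_id", "client_secret",
    "subscription_id", "resource_group", "workspace_name",
)


def load_azureml_settings(
    get_secret: Callable[[str, str], str], scope: str = "azureml"
) -> Dict[str, str]:
    """Read every AzureML-related secret from the given secret scope."""
    return {key: get_secret(scope, key) for key in AZUREML_SECRET_KEYS}


# Inside a Databricks notebook, the call could look like this
# (the widget name "secret_scope" is hypothetical):
#   scope = dbutils.widgets.get("secret_scope")
#   settings = load_azureml_settings(
#       lambda s, k: dbutils.secrets.get(scope=s, key=k), scope)

# Local stand-in for the secret store, so the sketch runs anywhere.
fake_scopes = {
    "azureml-staging": {key: f"staging-{key}" for key in AZUREML_SECRET_KEYS},
}
settings = load_azureml_settings(lambda s, k: fake_scopes[s][k], scope="azureml-staging")
print(sorted(settings))
```

Switching environments then only requires passing a different scope name; the notebook code itself stays unchanged.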

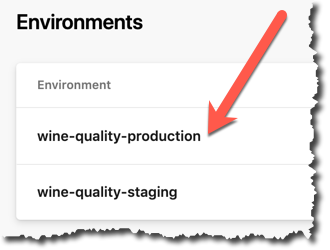

To avoid high costs from the Azure Kubernetes Service, which will be created by the "Deploy To Production" stage, I recommend that you set up a Pre-Approval Check for the drinks-quality-production environment.

This can be done in the Environments section of your Azure Pipelines.

- Notebooks should be configured to pull variables from Databricks Secrets.

- Notebook secret values should be defined in separate secret scopes.

- The secret scope to use can be set through a single variable, which is updated using the Databricks CLI from the Azure DevOps pipeline.

- Manage AzureML workspace & Environments from within Azure DevOps pipeline instead of the Python SDK (within Databricks notebooks).

- Use Databricks automated clusters (job clusters) instead of Interactive clusters.

- Multi-stage pipelines are convenient, but they can become hard to maintain. Consider separating your pipelines and connecting them together.

Disclaimer: This work is inspired by and based on efforts done by Sascha Dittman.