
A reverse-engineered, unofficial ChatGPT proxy (bypasses Cloudflare 403 Access Denied)

- API key acquisition

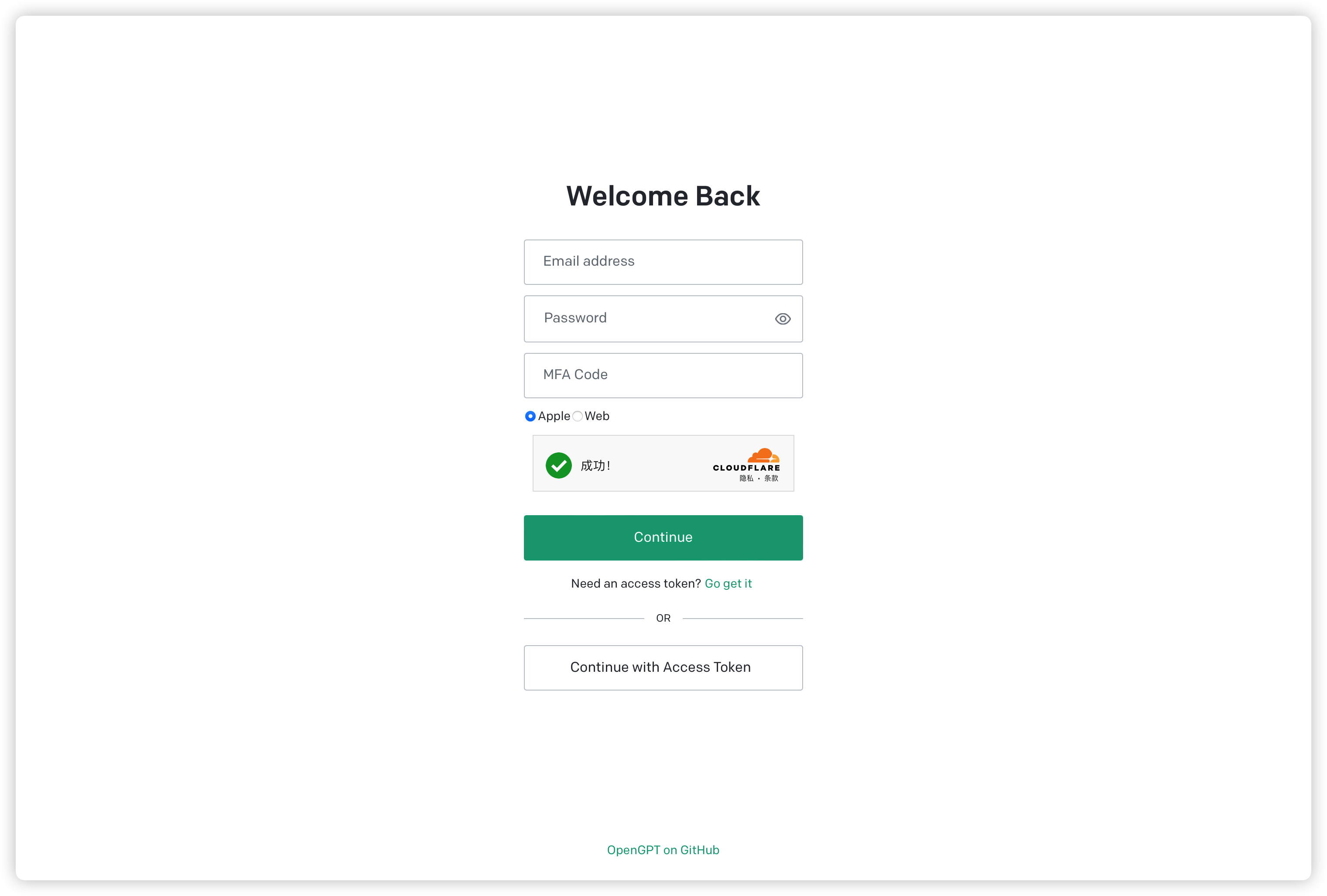

- Email/password account authentication (Google/Microsoft third-party login is not supported for now because the author does not have an account)

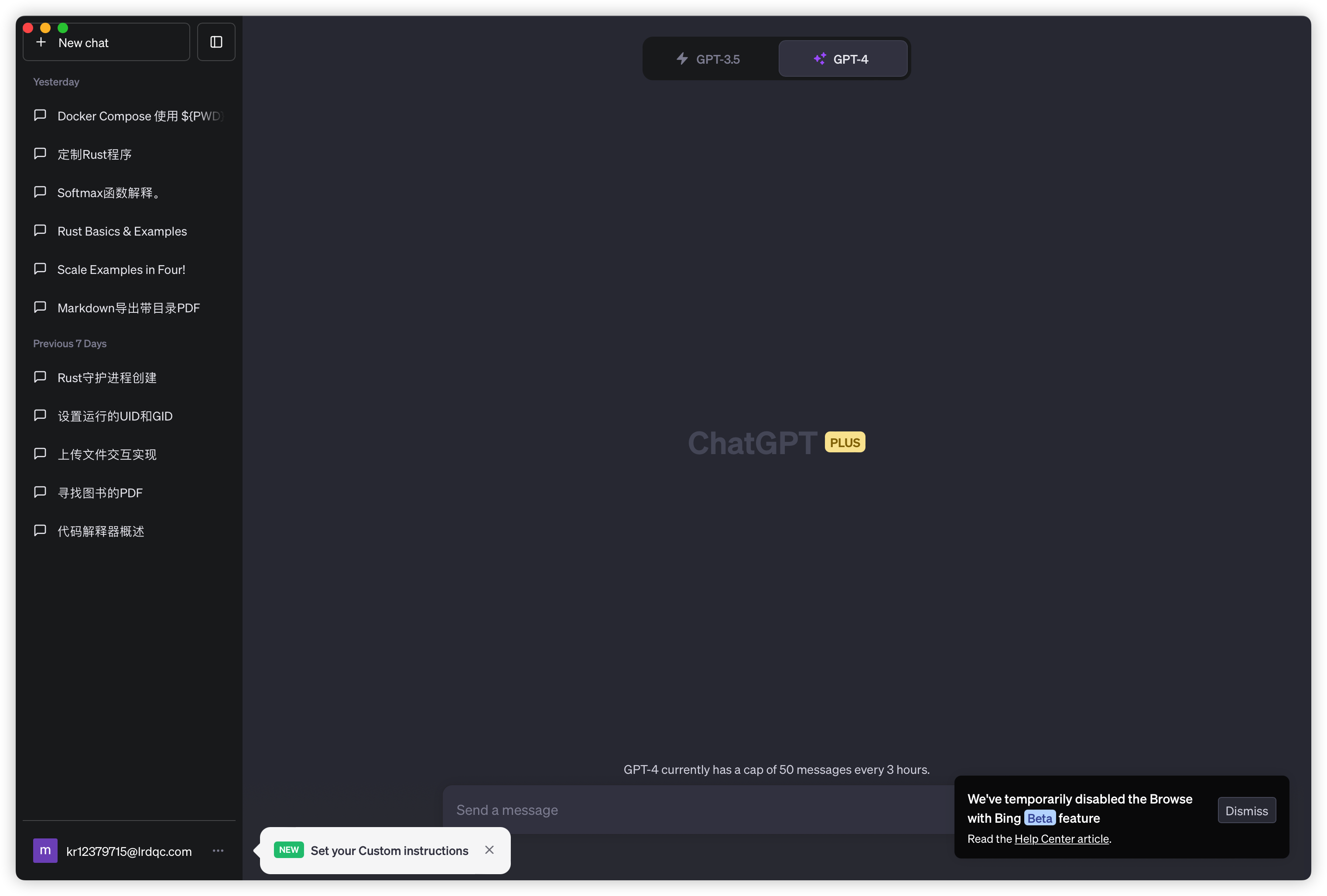

- Unofficial/Official/ChatGPT-to-API HTTP API proxy (for third-party client access)

- The original ChatGPT WebUI

- Minimal memory usage

Limitations: This cannot bypass OpenAI's outright IP ban

- Linux (musl) currently supports:

  - x86_64-unknown-linux-musl
  - aarch64-unknown-linux-musl
  - armv7-unknown-linux-musleabi
  - armv7-unknown-linux-musleabihf
  - arm-unknown-linux-musleabi
  - arm-unknown-linux-musleabihf
  - armv5te-unknown-linux-musleabi

- Windows currently supports:

  - x86_64-pc-windows-msvc

- macOS currently supports:

  - x86_64-apple-darwin
  - aarch64-apple-darwin
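On a Linux host, the matching musl target can be picked from the list above. The Python sketch below maps a `platform.machine()` value to one of those triples and builds a download URL following the naming of the v0.3.9 x86_64 `.deb` in the Ubuntu install example. The machine-to-target table (including treating `armv7l` as hard-float) and the assumption that every target ships a `.deb` with the same naming are illustrative assumptions, not taken from the project; check the Releases page before relying on the URL.

```python
"""Pick a Linux musl release target for a machine (illustrative sketch)."""
import platform

# Targets come from this README's Linux musl list; the mapping from
# platform.machine() strings to triples is an ASSUMPTION, not opengpt's.
MACHINE_TO_TARGET = {
    "x86_64": "x86_64-unknown-linux-musl",
    "amd64": "x86_64-unknown-linux-musl",
    "aarch64": "aarch64-unknown-linux-musl",
    "arm64": "aarch64-unknown-linux-musl",
    "armv7l": "armv7-unknown-linux-musleabihf",  # assumes a hard-float system
    "armv5tel": "armv5te-unknown-linux-musleabi",
}

VERSION = "0.3.9"
BASE = f"https://github.com/gngpp/opengpt/releases/download/v{VERSION}"


def musl_target(machine: str) -> str:
    """Return the musl target triple for a platform.machine() string."""
    try:
        return MACHINE_TO_TARGET[machine.lower()]
    except KeyError:
        raise ValueError(f"no musl target listed for machine {machine!r}") from None


def deb_url(target: str) -> str:
    """Build a .deb URL using the x86_64 example's naming (ASSUMED for other targets)."""
    return f"{BASE}/opengpt-{VERSION}-{target}.deb"
```

Typical use on the current host would be `deb_url(musl_target(platform.machine()))`, which raises `ValueError` on machines the table does not cover instead of guessing.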

GitHub Releases provides precompiled deb packages and binaries. On Ubuntu, for example:

```shell
wget https://github.com/gngpp/opengpt/releases/download/v0.3.9/opengpt-0.3.9-x86_64-unknown-linux-musl.deb
dpkg -i opengpt-0.3.9-x86_64-unknown-linux-musl.deb
opengpt serve run
```

With Docker:

```shell
docker run --rm -it -p 7999:7999 --hostname=opengpt \
  -e OPENGPT_WORKERS=1 \
  -e OPENGPT_LOG_LEVEL=info \
  gngpp/opengpt:latest serve run
```

With docker-compose:

```yaml
version: '3'

services:
  opengpt:
    image: ghcr.io/gngpp/opengpt:latest
    container_name: opengpt
    restart: unless-stopped
    environment:
      - TZ=Asia/Shanghai
      - OPENGPT_PROXY=socks5://cloudflare-warp:10000
      # - OPENGPT_CONFIG=/serve.toml
      # - OPENGPT_PORT=8080
      # - OPENGPT_HOST=0.0.0.0
      # - OPENGPT_WORKERS=10
      # - OPENGPT_TIMEOUT=360
      # - OPENGPT_CONNECT_TIMEOUT=60
      # - OPENGPT_TCP_KEEPALIVE=60
      # - OPENGPT_TLS_CERT=
      # - OPENGPT_TLS_KEY=
      # - OPENGPT_UI_API_PREFIX=
      # - OPENGPT_SIGNATURE=
      # - OPENGPT_TB_ENABLE=
      # - OPENGPT_TB_STORE_STRATEGY=
      # - OPENGPT_TB_REDIS_URL=
      # - OPENGPT_TB_CAPACITY=
      # - OPENGPT_TB_FILL_RATE=
      # - OPENGPT_TB_EXPIRED=
      # - OPENGPT_CF_SITE_KEY=
      # - OPENGPT_CF_SECRET_KEY=
    # volumes:
    #   - ${PWD}/ssl:/etc
    #   - ${PWD}/serve.toml:/serve.toml
    command: serve run
    ports:
      - "8080:7999"
    depends_on:
      - cloudflare-warp

  cloudflare-warp:
    container_name: cloudflare-warp
    image: ghcr.io/gngpp/cloudflare-warp:latest
    restart: unless-stopped

  watchtower:
    container_name: watchtower
    image: containrrr/watchtower
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    command: --interval 3600 --cleanup
    restart: unless-stopped
```
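The commented-out `OPENGPT_TB_*` variables switch on token-bucket flow limiting (`--tb-enable` in the `serve` options, with a default capacity of 60 and a default fill rate of 1). As a rough illustration of the idea only, here is a generic in-memory token bucket in Python using those two defaults. It assumes the fill rate means tokens per second, which the help text does not state, and it models neither the redis store strategy nor `--tb-expired`; it is not opengpt's implementation.

```python
"""Generic in-memory token bucket (illustrative sketch, not opengpt's code)."""
import time
from typing import Callable


class TokenBucket:
    def __init__(self, capacity: float = 60.0, fill_rate: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity    # mirrors the --tb-capacity default
        self.fill_rate = fill_rate  # ASSUMED unit: tokens added per second
        self.clock = clock          # injectable so tests can fake time
        self.tokens = capacity      # start full: an idle client may burst
        self.last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        """Refill for the elapsed time, then spend `cost` tokens if available."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Never accumulate more than `capacity`, however long the bucket idled.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.fill_rate)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A server would typically keep one bucket per client key (for example per IP address) and reject a request when `try_acquire()` returns `False`; how opengpt keys and expires its buckets is not documented here.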

GitHub Releases also provides precompiled ipk packages, currently for aarch64, x86_64 and other architectures. Download them and install with opkg; for example, on a NanoPi R4S:

```shell
wget https://github.com/gngpp/opengpt/releases/download/v0.3.9/opengpt_0.3.9_aarch64_generic.ipk
wget https://github.com/gngpp/opengpt/releases/download/v0.3.9/luci-app-opengpt_1.0.2-1_all.ipk
wget https://github.com/gngpp/opengpt/releases/download/v0.3.9/luci-i18n-opengpt-zh-cn_1.0.2-1_all.ipk

opkg install opengpt_0.3.9_aarch64_generic.ipk
opkg install luci-app-opengpt_1.0.2-1_all.ipk
opkg install luci-i18n-opengpt-zh-cn_1.0.2-1_all.ipk
```

Public API (* means any URL suffix):

- backend-api, https://host:port/backend-api/*

- public-api, https://host:port/public-api/*

- platform-api, https://host:port/v1/*

- dashboard-api, https://host:port/dashboard/*

- chatgpt-to-api, https://host:port/conv/v1/chat/completions
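The chatgpt-to-api route lets third-party clients reach ChatGPT through an API-shaped endpoint. The Python sketch below builds, without sending, a request to it. The JSON body follows the OpenAI Chat Completions shape and the token travels as a Bearer header; both are assumptions, since this README does not document the request schema or which token the route expects. The base URL uses the default port 7999, and the model name and token are placeholders.

```python
"""Build (but do not send) a request to the chatgpt-to-api route (sketch)."""
import json
import urllib.request


def build_chat_request(base_url: str, token: str, prompt: str,
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    # ASSUMED OpenAI Chat Completions-style body; the model name is a placeholder.
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/conv/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # ASSUMPTION: the token is sent as a standard Bearer header.
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )
```

To actually send it, pass the request to `urllib.request.urlopen(req)` against a running `opengpt serve run` instance, e.g. one built with `build_chat_request("http://127.0.0.1:7999", "<token>", "Hello")`.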

Detailed API documentation

- Authentic ChatGPT WebUI
- Expose unofficial/official API proxies
- The API prefix is consistent with the official one
- ChatGPT To API
- Accessible to third-party clients
- Access to an IP proxy pool to improve concurrency
- API documentation

Parameter Description

- --level, environment variable OPENGPT_LOG_LEVEL: log level, default info
- --host, environment variable OPENGPT_HOST: service listening address, default 0.0.0.0
- --port, environment variable OPENGPT_PORT: listening port, default 7999
- --workers, environment variable OPENGPT_WORKERS: worker threads, default 1
- --tls-cert, environment variable OPENGPT_TLS_CERT: TLS certificate (public key) file path
- --tls-key, environment variable OPENGPT_TLS_KEY: TLS private key file path, supported formats EC/PKCS8/RSA
- --proxies, environment variable OPENGPT_PROXY: proxies; multiple proxy pools are supported, format protocol://user:pass@ip:port
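`--proxies` / `OPENGPT_PROXY` takes URLs of the form `protocol://user:pass@ip:port`, and the docker-compose example points it at `socks5://cloudflare-warp:10000`. Below is a minimal Python sketch that validates such URLs with `urllib.parse.urlsplit`, plus a hypothetical round-robin rotation over a pool. The set of accepted schemes and the rotation strategy are assumptions for illustration; this README documents neither how opengpt separates multiple proxies nor how it picks one.

```python
"""Validate proxy URLs of the form protocol://user:pass@ip:port (sketch)."""
from dataclasses import dataclass
from itertools import cycle
from typing import Iterator, List, Optional
from urllib.parse import urlsplit

# ASSUMPTION: socks5 appears in the docker-compose example; http/https are guesses.
ALLOWED_SCHEMES = {"http", "https", "socks5"}


@dataclass(frozen=True)
class Proxy:
    scheme: str
    host: str
    port: int
    username: Optional[str] = None
    password: Optional[str] = None


def parse_proxy(url: str) -> Proxy:
    """Split a proxy URL into parts, rejecting unknown schemes or a missing host/port."""
    parts = urlsplit(url)
    if parts.scheme not in ALLOWED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {parts.scheme!r}")
    # parts.port itself raises ValueError for a non-numeric or out-of-range port.
    if not parts.hostname or parts.port is None:
        raise ValueError(f"proxy URL needs a host and a port: {url!r}")
    return Proxy(parts.scheme, parts.hostname, parts.port,
                 parts.username, parts.password)


def rotate(urls: List[str]) -> Iterator[Proxy]:
    """HYPOTHETICAL round-robin over a pool; not opengpt's documented strategy."""
    return cycle([parse_proxy(u) for u in urls])
```

Parsing every URL up front means a malformed entry fails at startup rather than on the first request routed through it.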

...

```
$ opengpt serve --help
Start the http server

Usage: opengpt serve run [OPTIONS]

Options:
  -C, --config <CONFIG>
          Configuration file path (toml format file) [env: OPENGPT_CONFIG=]
  -H, --host <HOST>
          Server Listen host [env: OPENGPT_HOST=] [default: 0.0.0.0]
  -L, --level <LEVEL>
          Log level (info/debug/warn/trace/error) [env: OPENGPT_LOG_LEVEL=] [default: info]
  -P, --port <PORT>
          Server Listen port [env: OPENGPT_PORT=] [default: 7999]
  -W, --workers <WORKERS>
          Server worker-pool size (Recommended number of CPU cores) [env: OPENGPT_WORKERS=] [default: 1]
      --concurrent-limit <CONCURRENT_LIMIT>
          Concurrent limit (Enforces a limit on the concurrent number of requests the underlying) [env: OPENGPT_CONCUURENT_LIMIT=] [default: 65535]
      --proxies <PROXIES>
          Server proxies pool, Example: protocol://user:pass@ip:port [env: OPENGPT_PROXY=]
      --timeout <TIMEOUT>
          Client timeout (seconds) [env: OPENGPT_TIMEOUT=] [default: 600]
      --connect-timeout <CONNECT_TIMEOUT>
          Client connect timeout (seconds) [env: OPENGPT_CONNECT_TIMEOUT=] [default: 60]
      --tcp-keepalive <TCP_KEEPALIVE>
          TCP keepalive (seconds) [env: OPENGPT_TCP_KEEPALIVE=] [default: 60]
      --tls-cert <TLS_CERT>
          TLS certificate file path [env: OPENGPT_TLS_CERT=]
      --tls-key <TLS_KEY>
          TLS private key file path (EC/PKCS8/RSA) [env: OPENGPT_TLS_KEY=]
      --puid <PUID>
          PUID cookie value of Plus account [env: OPENGPT_PUID=]
      --puid-user <PUID_USER>
          Obtain the PUID of the Plus account user. Supports split: ':', '-', '--' ... , Example: `user:pass` or `user:pass:mfa` [env: OPENGPT_PUID_USER=]
      --api-prefix <API_PREFIX>
          Web UI api prefix [env: OPENGPT_UI_API_PREFIX=]
      --arkose-endpoint <ARKOSE_ENDPOINT>
          Arkose endpoint, Example: https://client-api.arkoselabs.com [env: OPENGPT_ARKOSE_ENDPOINT=]
  -A, --arkose-token-endpoint <ARKOSE_TOKEN_ENDPOINT>
          Get arkose-token endpoint [env: OPENGPT_ARKOSE_TOKEN_ENDPOINT=]
      --arkose-yescaptcha-key <ARKOSE_YESCAPTCHA_KEY>
          yescaptcha client key [env: OPENGPT_ARKOSE_YESCAPTCHA_KEY=]
  -S, --sign-secret-key <SIGN_SECRET_KEY>
          Enable url signature (signature secret key) [env: OPENGPT_SIGNATURE=]
  -T, --tb-enable
          Enable token bucket flow limitation [env: OPENGPT_TB_ENABLE=]
      --tb-store-strategy <TB_STORE_STRATEGY>
          Token bucket store strategy (mem/redis) [env: OPENGPT_TB_STORE_STRATEGY=] [default: mem]
      --tb-redis-url <TB_REDIS_URL>
          Token bucket redis url, Example: redis://user:pass@ip:port [env: OPENGPT_TB_REDIS_URL=] [default: redis://127.0.0.1:6379]
      --tb-capacity <TB_CAPACITY>
          Token bucket capacity [env: OPENGPT_TB_CAPACITY=] [default: 60]
      --tb-fill-rate <TB_FILL_RATE>
          Token bucket fill rate [env: OPENGPT_TB_FILL_RATE=] [default: 1]
      --tb-expired <TB_EXPIRED>
          Token bucket expired (seconds) [env: OPENGPT_TB_EXPIRED=] [default: 86400]
      --cf-site-key <CF_SITE_KEY>
          Cloudflare turnstile captcha site key [env: OPENGPT_CF_SITE_KEY=]
      --cf-secret-key <CF_SECRET_KEY>
          Cloudflare turnstile captcha secret key [env: OPENGPT_CF_SECRET_KEY=]
  -D, --disable-webui
          Disable WebUI [env: OPENGPT_DISABLE_WEBUI=]
  -h, --help
          Print help
```

- Linux compile, on an Ubuntu machine for example:

```shell
sudo apt update -y && sudo apt install rename

# Native compilation
git clone https://github.com/gngpp/opengpt.git && cd opengpt
./build.sh

# Cross-platform compilation relies on docker (unless you can resolve the cross-compilation dependencies yourself)
# By default, docker is used to build the linux/windows platforms
./build_cross.sh

# The macOS platform is built on macOS by default
os=macos ./build_cross.sh

# Compile a single-platform binary, taking x86_64-unknown-linux-musl as an example:
docker run --rm -it --user=$UID:$(id -g $USER) \
  -v $(pwd):/home/rust/src \
  -v $HOME/.cargo/registry:/root/.cargo/registry \
  -v $HOME/.cargo/git:/root/.cargo/git \
  ghcr.io/gngpp/opengpt-builder:x86_64-unknown-linux-musl \
  cargo build --release
```

- OpenWrt compile:

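```shell
cd package
# Note: GitHub has retired Subversion access, so this checkout may fail;
# fetching the repository's openwrt directory with git is an alternative.
svn co https://github.com/gngpp/opengpt/trunk/openwrt
cd -
make menuconfig # choose LUCI->Applications->luci-app-opengpt
make V=s
```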