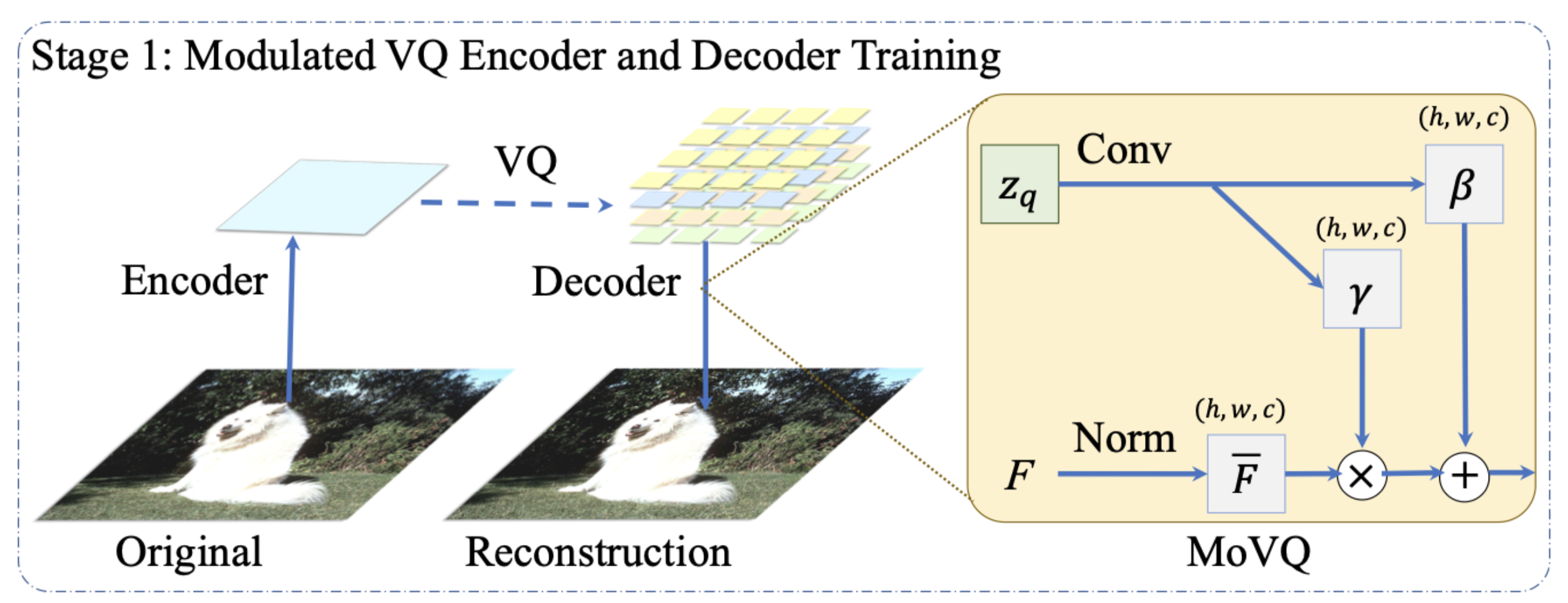

SBER-MoVQGAN (Modulated Vector Quantized GAN) is a new state-of-the-art model for image reconstruction. It builds on code from the VQGAN repository and on modifications from the original MoVQGAN paper. The architecture of SBER-MoVQGAN is shown in the figure.

SBER-MoVQGAN was successfully integrated into Kandinsky 2.1, where it became one of the architectural blocks that significantly improved the quality of text-to-image generation.

The following table compares the models on the ImageNet dataset in terms of FID, SSIM, PSNR, and L1. A more detailed description of the experiments and a comparison with other models can be found in the Habr post.

| Model | Latent size | Num Z | Train steps | FID | SSIM | PSNR | L1 |
|---|---|---|---|---|---|---|---|
| ViT-VQGAN* | 32x32 | 8192 | 500000 | 1.28 | - | - | - |
| RQ-VAE* | 8x8x16 | 16384 | 10 epochs | 1.83 | - | - | - |
| Mo-VQGAN* | 16x16x4 | 1024 | 40 epochs | 1.12 | 0.673 | 22.42 | - |
| VQ CompVis | 32x32 | 16384 | 971043 | 1.34 | 0.65 | 23.847 | 0.053 |
| KL CompVis | 32x32 | - | 246803 | 0.968 | 0.692 | 25.112 | 0.047 |
| SBER-VQGAN (from pretrain) | 32x32 | 8192 | 1 epoch | 1.439 | 0.682 | 24.314 | 0.05 |
| SBER-MoVQGAN 67M | 32x32 | 16384 | 2M | 0.965 | 0.725 | 26.449 | 0.042 |
| SBER-MoVQGAN 102M | 32x32 | 16384 | 2360k | 0.776 | 0.737 | 26.889 | 0.04 |
| SBER-MoVQGAN 270M | 32x32 | 16384 | 1330k | 0.686💥 | 0.741💥 | 27.037💥 | 0.039💥 |
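To make the PSNR and L1 columns concrete, here is a minimal NumPy sketch of how these two metrics are commonly computed between an image and its reconstruction. It is an illustration, not the evaluation code used for the table: the function names `l1_error` and `psnr` are hypothetical, and the assumption that pixel values lie in [0, 1] is ours, since the exact scaling used in the experiments is not stated here. FID and SSIM require more involved reference implementations and are not shown.

```python
import numpy as np


def l1_error(original, reconstruction):
    """Mean absolute pixel difference (assumes both images share one value range)."""
    a = np.asarray(original, dtype=np.float64)
    b = np.asarray(reconstruction, dtype=np.float64)
    return float(np.mean(np.abs(a - b)))


def psnr(original, reconstruction, max_value=1.0):
    """Peak signal-to-noise ratio in dB; max_value is the largest possible pixel value."""
    a = np.asarray(original, dtype=np.float64)
    b = np.asarray(reconstruction, dtype=np.float64)
    mse = np.mean((a - b) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


if __name__ == "__main__":
    # Toy stand-in for a real (image, reconstruction) pair.
    rng = np.random.default_rng(0)
    image = rng.random((64, 64, 3))
    noisy = np.clip(image + rng.normal(0.0, 0.05, image.shape), 0.0, 1.0)
    print(f"L1:   {l1_error(image, noisy):.4f}")
    print(f"PSNR: {psnr(image, noisy):.2f} dB")
```

Lower L1 and higher PSNR both indicate a reconstruction closer to the input, which matches the direction of improvement across the SBER-MoVQGAN rows.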

Install: `pip install "git+https://github.com/ai-forever/MoVQGAN.git"`

Train: `python main.py --config configs/movqgan_270M.yaml`

See the Jupyter notebook with an example in the `./notebooks` folder or

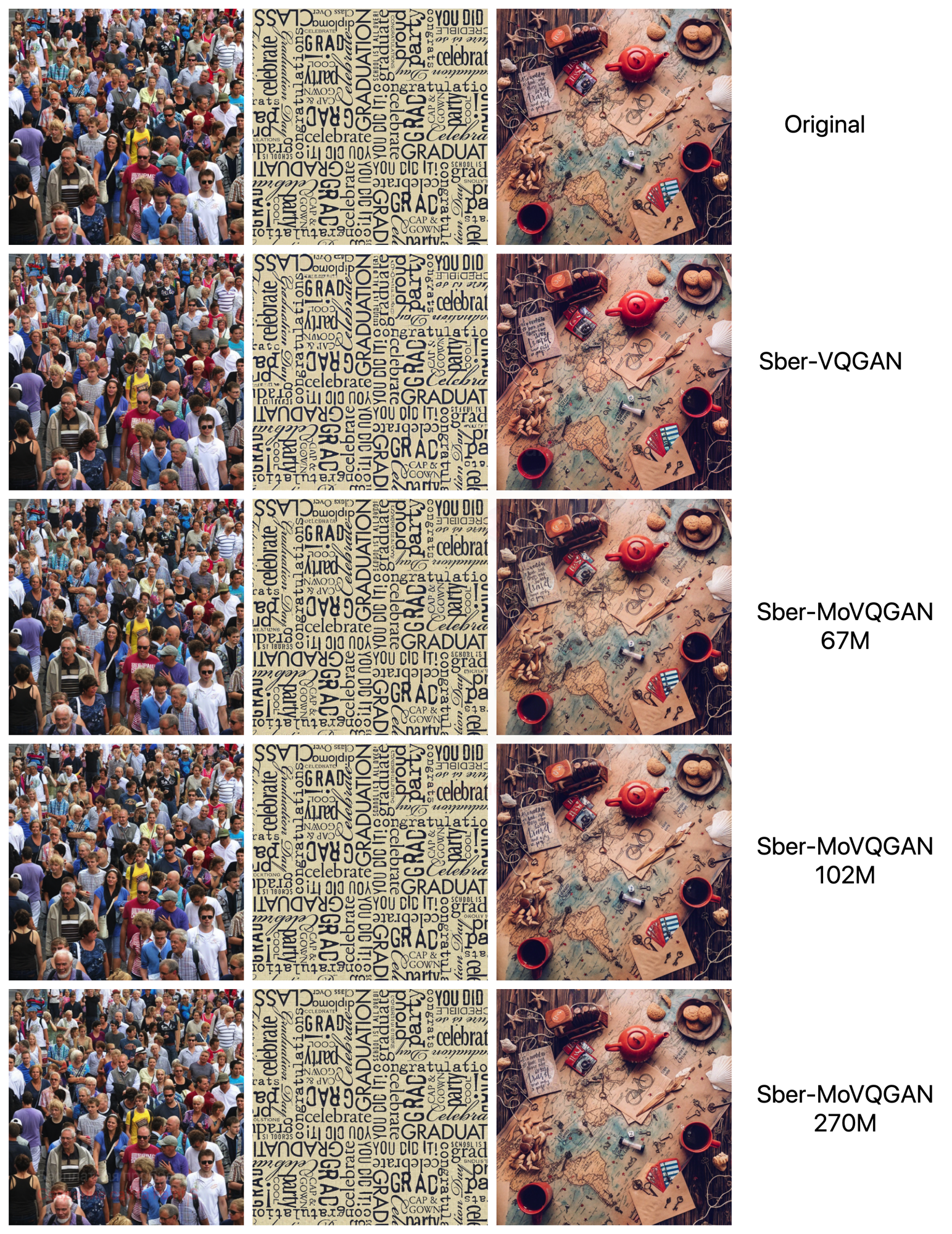

This section provides examples of image reconstruction for all versions of SBER-MoVQGAN on hard-to-recover domains such as faces, text, and other complex scenes.