RefiNet: 3D Human Pose Refinement with Depth Maps

This is the official PyTorch implementation of the publication:

A. D’Eusanio, S. Pini, G. Borghi, R. Vezzani, R. Cucchiara

RefiNet: 3D Human Pose Refinement with Depth Maps

In International Conference on Pattern Recognition (ICPR) 2020

[Paper] [Project Page]

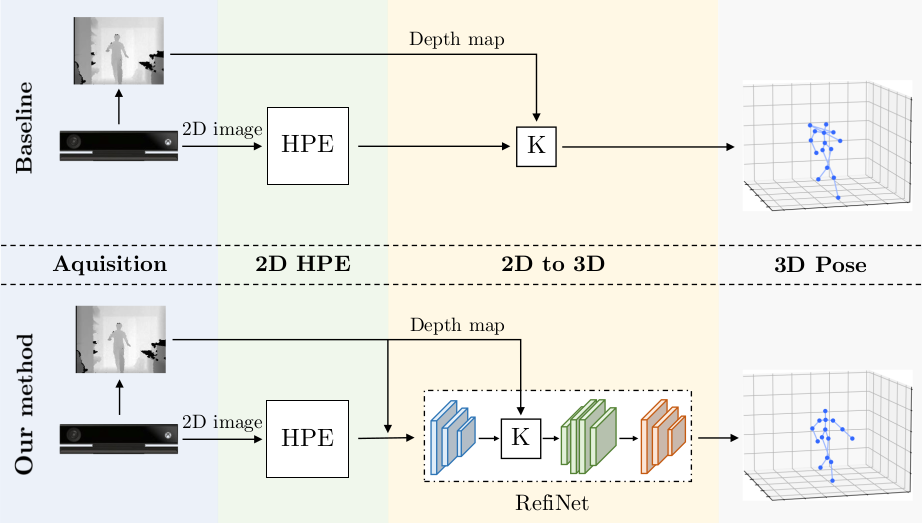

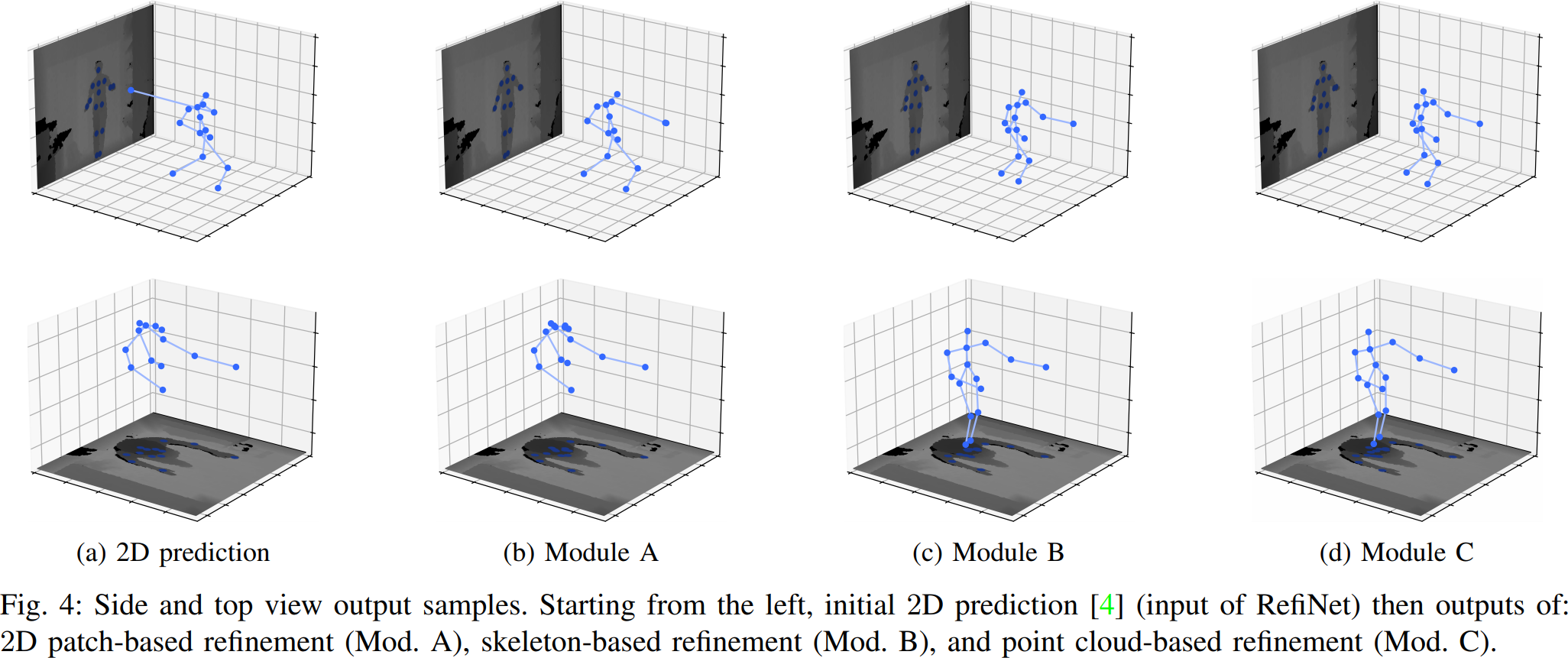

Human Pose Estimation is a fundamental task for many applications in the Computer Vision community and it has been widely investigated in the 2D domain, i.e. intensity images. Therefore, most of the available methods for this task are mainly based on 2D Convolutional Neural Networks and huge manually annotated RGB datasets, achieving stunning results. In this paper, we propose RefiNet, a multi-stage framework that regresses an extremely-precise 3D human pose estimation from a given 2D pose and a depth map. The framework consists of three different modules, each one specialized in a particular refinement and data representation, i.e. depth patches, 3D skeleton and point clouds. Moreover, we present a new dataset, called Baracca, acquired with RGB, depth and thermal cameras and specifically created for the automotive context. Experimental results confirm the quality of the refinement procedure that largely improves the human pose estimations of off-the-shelf 2D methods.
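The abstract describes RefiNet as starting from a 2D pose and a depth map and working on depth patches and a 3D skeleton, among other representations. The minimal sketch below shows how such inputs could be derived. It is not the authors' preprocessing. It assumes a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, nearest-pixel depth sampling, and zero padding at the image borders. The function names are hypothetical.

```python
import numpy as np


def lift_joints_to_3d(joints_2d, depth_map, fx, fy, cx, cy):
    """Back-project 2D joints (u, v) to camera-space 3D points using the depth
    value under each joint and a pinhole camera model (assumed intrinsics)."""
    h, w = depth_map.shape
    joints_3d = []
    for u, v in joints_2d:
        # Clamp to the image bounds and sample the nearest depth pixel
        col = int(np.clip(round(u), 0, w - 1))
        row = int(np.clip(round(v), 0, h - 1))
        z = float(depth_map[row, col])
        # Pinhole back-projection: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy
        x = (u - cx) * z / fx
        y = (v - cy) * z / fy
        joints_3d.append((x, y, z))
    return np.array(joints_3d)


def extract_depth_patches(joints_2d, depth_map, size=32):
    """Crop a size x size depth patch around each 2D joint.
    The depth map is zero-padded so that patches near the borders keep their size."""
    half = size // 2
    h, w = depth_map.shape
    padded = np.pad(depth_map, half, mode="constant")
    patches = []
    for u, v in joints_2d:
        # Clamp to the image, then shift by `half` to account for the padding
        r = int(np.clip(round(v), 0, h - 1)) + half
        c = int(np.clip(round(u), 0, w - 1)) + half
        patches.append(padded[r - half:r + half, c - half:c + half])
    return np.stack(patches)
```

For example, `extract_depth_patches(joints, depth, size=32)` returns an array of shape `(num_joints, 32, 32)`. Zero padding is one simple way to keep every patch the same size.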

Getting Started

These instructions will get a copy of the project up and running on your local machine for development and testing purposes. There is not much to do: install the prerequisites and download the files.

Prerequisites

Things you need to install to run the code:

Python >= 3.6.7

PyTorch >= 1.6

Install CUDA and PyTorch following the instructions on their official websites.

Run the command:

pip install -r requirements.txt
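The following optional helper can check the prerequisites listed above before running the code. It is not part of the repository. It checks only the minimum versions stated here (Python >= 3.6.7, PyTorch >= 1.6) and whether PyTorch can see a CUDA device. The function names are hypothetical.

```python
import re
import sys
from importlib import import_module


def version_tuple(version):
    """Turn a version string such as '1.6.0+cu101' into a comparable tuple (1, 6, 0)."""
    core = version.split("+")[0]  # drop local build tags like '+cu101'
    return tuple(int(n) for n in re.findall(r"\d+", core)[:3])


def check_prerequisites():
    """Print and return whether the minimum Python and PyTorch versions are met."""
    python_ok = sys.version_info[:3] >= (3, 6, 7)
    print("Python {}: {}".format(sys.version.split()[0],
                                 "OK" if python_ok else "too old (need >= 3.6.7)"))
    try:
        torch = import_module("torch")
    except ImportError:
        print("PyTorch: not installed")
        return False
    torch_ok = version_tuple(torch.__version__) >= (1, 6)
    print("PyTorch {}: {}".format(torch.__version__,
                                  "OK" if torch_ok else "too old (need >= 1.6)"))
    print("CUDA available: {}".format(torch.cuda.is_available()))
    return python_ok and torch_ok


if __name__ == "__main__":
    sys.exit(0 if check_prerequisites() else 1)
```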

Download datasets

The employed datasets are publicly available:

Once downloaded, unzip them anywhere on your drive.

Baseline keypoints and pretrained model

Baseline keypoints are available at this link. Pretrained PyTorch models will soon be available at the same link.

Setup configuration

The project is configured through JSON files located in the hypes folder, for example:

hypes/baracca/depth/test.json

In this file you can set several parameters, such as:

- train_dir, path to training dataset.

- phase, whether to train or test; it can be set either in the configuration file or as a command-line argument (see below).

- Data-type, the type of data used for training: 2D depth, 3D joints or 3D point clouds.

- from_gt, whether to train from ground-truth (gt) poses perturbed with high Gaussian noise.

- sigma, the standard deviation of the Gaussian noise.

- mu, the mean of the Gaussian noise.

For all other parameters, refer to the file itself.
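The from_gt, mu and sigma parameters describe training on ground-truth poses corrupted by Gaussian noise. The minimal sketch below shows that idea. It assumes independent noise N(mu, sigma^2) added to every joint coordinate. How the repository actually applies the noise (per coordinate or per joint, and in which units) is not stated here, and the function name is hypothetical.

```python
import numpy as np


def perturb_ground_truth(joints_gt, mu, sigma, seed=None):
    """Add independent Gaussian noise N(mu, sigma^2) to every coordinate of the
    ground-truth joints, simulating noisy input poses (assumed from_gt behaviour)."""
    rng = np.random.default_rng(seed)
    joints = np.asarray(joints_gt, dtype=float)
    noise = rng.normal(loc=mu, scale=sigma, size=joints.shape)
    return joints + noise


# Example: 15 joints with (x, y, z) coordinates, zero-mean noise with sigma = 0.05
noisy = perturb_ground_truth(np.zeros((15, 3)), mu=0.0, sigma=0.05, seed=0)
```

A larger sigma yields input poses further from the ground truth. This matches the README's note that from_gt uses high noise, so the refinement stages have errors to correct.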

Usage

python main.py --hypes hypes/itop/depth/train.json

- --hypes, path to the configuration file.

- --phase, train or test phase.
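The configuration section notes that phase can be stored in the JSON file or passed on the command line. The sketch below shows one way to combine the two, with the command-line value taking precedence. It is an assumption about how `main.py` behaves, not its actual code. Only the `--hypes` and `--phase` flags and the train/test values come from this README; `load_configuration` is a hypothetical name.

```python
import argparse
import json


def load_configuration(argv=None):
    """Parse --hypes/--phase and merge them with the JSON configuration file."""
    parser = argparse.ArgumentParser(description="RefiNet launcher (sketch)")
    parser.add_argument("--hypes", required=True,
                        help="path to the JSON configuration file")
    parser.add_argument("--phase", choices=["train", "test"], default=None,
                        help="overrides the 'phase' value stored in the configuration")
    args = parser.parse_args(argv)
    with open(args.hypes, "r") as f:
        config = json.load(f)
    # A phase given on the command line takes precedence over the file
    if args.phase is not None:
        config["phase"] = args.phase
    return config


if __name__ == "__main__":
    print(load_configuration())
```

Under this sketch, `python main.py --hypes hypes/itop/depth/train.json --phase test` would reuse the training configuration but run the test phase.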

Authors

- Andrea D'Eusanio - Deusy94

- Stefano Pini - stefanopini

- Guido Borghi - gdubrg

- Roberto Vezzani - robervez

- Rita Cucchiara - Rita Cucchiara

Citation

If you use this code, please cite our paper:

@inproceedings{deusanio2020refinet,
  title={{RefiNet: 3D Human Pose Refinement with Depth Maps}},
  author={D'Eusanio, Andrea and Pini, Stefano and Borghi, Guido and Vezzani, Roberto and Cucchiara, Rita},
  booktitle={International Conference on Pattern Recognition (ICPR)},
  year={2020}
}

License

This project is licensed under the MIT License; see the LICENSE file for details.