ClearML - Auto-Magical Suite of tools to streamline your ML workflow

Experiment Manager, ML-Ops and Data-Management

Note regarding Apache Log4j2 Remote Code Execution (RCE) Vulnerability - CVE-2021-44228 - ESA-2021-31

According to ElasticSearch's latest report, supported versions of Elasticsearch (6.8.9+, 7.8+) used with recent versions of the JDK (JDK9+) are not susceptible to either remote code execution or information leakage due to Elasticsearch’s usage of the Java Security Manager.

As the latest version of ClearML Server uses Elasticsearch 7.10+ with JDK15, it is not affected by these vulnerabilities.

As a precaution, we've upgraded the ES version to 7.16.2 and added the mitigation recommended by ElasticSearch to our latest docker-compose.yml file.

While previous Elasticsearch versions (5.6.11+, 6.4.0+ and 7.0.0+) used by older ClearML Server versions are only susceptible to the information leakage vulnerability (which in any case does not permit access to data within the Elasticsearch cluster), we still recommend upgrading to the latest version of ClearML Server. Alternatively, you can apply the mitigation as implemented in our latest docker-compose.yml file.

Update 15 December: A further vulnerability (CVE-2021-45046) was disclosed on December 14th. ElasticSearch's guidance for Elasticsearch remains unchanged by this new vulnerability, so ClearML Server is not affected.

Update 22 December: In keeping with ElasticSearch's recommendations, we've upgraded the ES version to the newly released 7.16.2.

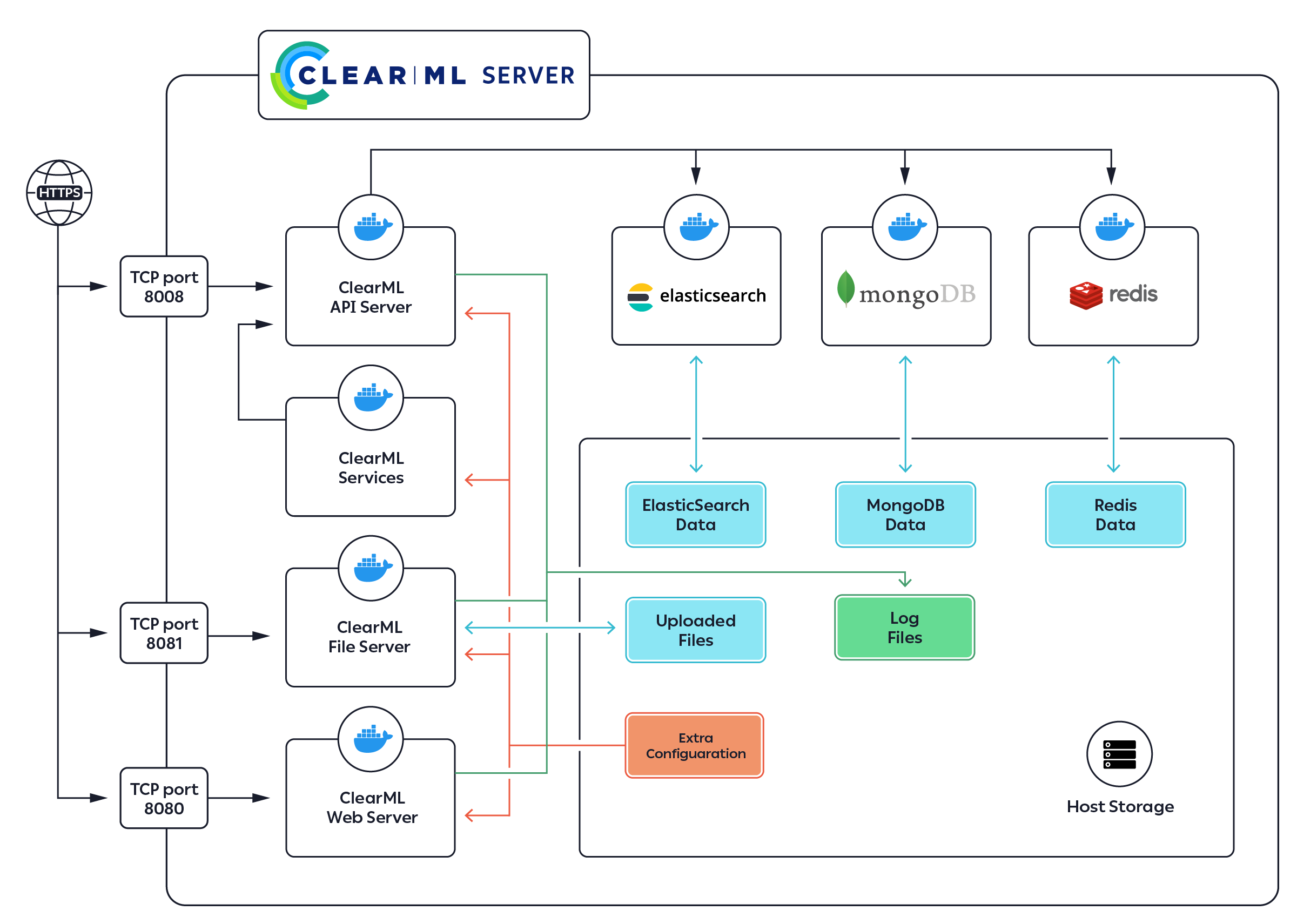

The ClearML Server is the backend service infrastructure for ClearML. It allows multiple users to collaborate and manage their experiments. ClearML offers a free hosted service, which is maintained by ClearML and open to anyone. In order to host your own server, you will need to launch the ClearML Server and point ClearML to it.

The ClearML Server contains the following components:

- The ClearML Web-App, a single-page UI for experiment management and browsing

- RESTful API for:

  - Documenting and logging experiment information, statistics and results

  - Querying experiment history, logs and results

- Locally-hosted file server for storing images and models, making them easily accessible using the Web-App

You can quickly deploy your ClearML Server using Docker, AWS EC2 AMI, or Kubernetes.

The ClearML Server has two supported configurations:

- Single IP (domain) with the following open ports

  - Web application on port 8080

  - API service on port 8008

  - File storage service on port 8081

- Sub-Domain configuration with default http/s ports (80 or 443)

  - Web application on sub-domain: app.*.*

  - API service on sub-domain: api.*.*

  - File storage service on sub-domain: files.*.*
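The two layouts above can be summarized as a mapping from a host or base domain to the three service URLs. The Python sketch below is illustrative only: the helper name `clearml_service_urls` and the `example.com` domain are assumptions made for this example and are not part of ClearML.

```python
# Illustrative helper (not part of ClearML): maps a host or base domain to the
# three ClearML Server service URLs for each supported configuration.

SINGLE_IP_PORTS = {"web": 8080, "api": 8008, "files": 8081}
SUBDOMAIN_PREFIXES = {"web": "app", "api": "api", "files": "files"}


def clearml_service_urls(host: str, subdomain: bool = False, scheme: str = "http") -> dict:
    """Return the web, API and file server URLs for the chosen layout."""
    if subdomain:
        # Sub-domain layout: default http/s ports, so no port appears in the URL.
        return {
            name: f"{scheme}://{prefix}.{host}"
            for name, prefix in SUBDOMAIN_PREFIXES.items()
        }
    # Single IP (domain) layout: one host, a distinct port per service.
    return {name: f"{scheme}://{host}:{port}" for name, port in SINGLE_IP_PORTS.items()}


if __name__ == "__main__":
    print(clearml_service_urls("localhost"))
    print(clearml_service_urls("example.com", subdomain=True, scheme="https"))
```

With `subdomain=True` the port is left out on purpose, since the sub-domain configuration relies on the default http/s ports.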

The ports 8080/8081/8008 must be available for the ClearML Server services.

For example, to see if port 8080 is in use:

- Linux or macOS:

      sudo lsof -Pn -i4 | grep :8080 | grep LISTEN

- Windows:

      netstat -an |find /i "8080"
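For a platform-independent check of all three ports at once, a short Python sketch along the following lines may help. It is an approximation of the commands above rather than an equivalent: it only tests whether something accepts TCP connections on the local loopback address, and the names `CLEARML_PORTS` and `port_in_use` are made up for this example.

```python
import socket

# Ports the ClearML Server services need, as listed above.
CLEARML_PORTS = {"web": 8080, "api": 8008, "files": 8081}


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    for name, port in CLEARML_PORTS.items():
        state = "in use" if port_in_use(port) else "available"
        print(f"{name} port {port}: {state}")
```

A port reported as available here may still be bound on another interface, so the `lsof` / `netstat` commands remain the more thorough check.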

Launch the ClearML Server in any of the following formats:

- Pre-built AWS EC2 AMI

- Pre-built GCP Custom Image

- Pre-built Docker Image

- Kubernetes

In order to set up the ClearML client to work with your ClearML Server:

- Run the `clearml-init` command for an interactive setup.

- Or manually edit the `~/clearml.conf` file, making sure the server settings (`api_server`, `web_server`, `files_server`) are configured correctly, for example:

      api {
          # API server on port 8008
          api_server: "http://localhost:8008"
          # web_server on port 8080
          web_server: "http://localhost:8080"
          # file server on port 8081
          files_server: "http://localhost:8081"
      }

Note: If you have set up your ClearML Server in a sub-domain configuration, there is no need to specify a port number; it is inferred from the http/s scheme.
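When editing the configuration by hand, it is easy to leave one of the three settings out. The Python sketch below shows one possible sanity check. It is a simple line-based scan under the assumption that each setting sits on its own line in the `key: "value"` form used in the example; it is not a full parser for the configuration format, and the function names are hypothetical.

```python
import re
from pathlib import Path

REQUIRED_KEYS = ("api_server", "web_server", "files_server")

# Matches uncommented lines such as:  api_server: "http://localhost:8008"
_SETTING_RE = re.compile(
    r'^\s*(api_server|web_server|files_server)\s*[:=]\s*"?([^"\s]+)"?\s*$',
    re.MULTILINE,
)


def find_server_settings(conf_text: str) -> dict:
    """Return the server settings found in the given configuration text."""
    return {key: value for key, value in _SETTING_RE.findall(conf_text)}


def missing_server_settings(conf_text: str) -> list:
    """Return the required server settings that were not found."""
    found = find_server_settings(conf_text)
    return [key for key in REQUIRED_KEYS if key not in found]


if __name__ == "__main__":
    conf_path = Path.home() / "clearml.conf"
    if conf_path.exists():
        text = conf_path.read_text()
        print("found:", find_server_settings(text))
        print("missing:", missing_server_settings(text))
    else:
        print(f"{conf_path} not found")
```

Commented-out settings are deliberately ignored, so a line starting with `#` does not count as configured.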

After launching the ClearML Server and configuring the ClearML client to use it, you can use ClearML in your experiments and view them in the ClearML Server Web-App, for example http://localhost:8080.

For more information about the ClearML client, see ClearML.

As of version 0.15 of ClearML Server, dockerized deployment includes a ClearML-Agent Services container running as part of the docker container collection.

ClearML-Agent Services is an extension of ClearML-Agent that provides the ability to launch long-lasting jobs that previously had to be executed on local / dedicated machines. It allows a single agent to launch multiple dockers (Tasks) for different use cases. To name a few: an auto-scaler service (spinning up instances when the need arises and the budget allows), Controllers (implementing pipelines and more sophisticated DevOps logic), an Optimizer (such as Hyper-parameter Optimization or sweeping), and Applications (such as interactive Bokeh apps for increased data transparency).

The ClearML-Agent Services container will spin up any task enqueued into the dedicated services queue. Every task launched by ClearML-Agent Services will be registered as a new node in the system, providing tracking and transparency capabilities.

You can also run the ClearML-Agent Services manually; see details in ClearML-agent services mode.

Note: It is the user's responsibility to make sure the proper tasks are pushed into the services queue.

Do not enqueue training / inference tasks into the services queue, as it will put unnecessary load on the server.

The ClearML Server provides a few additional useful features, which can be manually enabled:

To restart the ClearML Server, you must first stop the containers, and then restart them.

    docker-compose down
    docker-compose -f docker-compose.yml up

ClearML Server releases are also reflected in the docker compose configuration file.

We strongly encourage you to keep your ClearML Server up to date, by keeping up with the current release.

Note: The following upgrade instructions use the Linux OS as an example.

To upgrade your existing ClearML Server deployment:

- Shut down the docker containers

      docker-compose down

- We highly recommend backing up your data directory before upgrading.

  Assuming your data directory is `/opt/clearml`, to archive all data into `~/clearml_backup.tgz` execute:

      sudo tar czvf ~/clearml_backup.tgz /opt/clearml/data

  Restore instructions:

  To restore this example backup, execute the following. The archive stores paths relative to `/`, so it is extracted from the filesystem root:

      sudo rm -R /opt/clearml/data
      sudo tar -xzf ~/clearml_backup.tgz -C /

- Download the latest `docker-compose.yml` file.

      curl https://raw.githubusercontent.com/allegroai/trains-server/master/docker/docker-compose.yml -o docker-compose.yml

- Configure the ClearML-Agent Services (not supported on Windows installation). If `CLEARML_HOST_IP` is not provided, ClearML-Agent Services will use the external public address of the ClearML Server. If `CLEARML_AGENT_GIT_USER` / `CLEARML_AGENT_GIT_PASS` are not provided, the ClearML-Agent Services will not be able to access any private repositories for running service tasks.

      export CLEARML_HOST_IP=server_host_ip_here
      export CLEARML_AGENT_GIT_USER=git_username_here
      export CLEARML_AGENT_GIT_PASS=git_password_here

- Spin up the docker containers; this automatically pulls the latest ClearML Server build.

      docker-compose -f docker-compose.yml pull
      docker-compose -f docker-compose.yml up

* If something went wrong along the way, check our FAQ: Common Docker Upgrade Errors.
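The backup and restore step of the upgrade procedure above could also be scripted. The following Python sketch uses only the standard library and is an illustration, not the project's own tooling: the function names are hypothetical, the archive stores the directory under its own name rather than its full path, and it makes no attempt to preserve file ownership the way running `tar` with `sudo` does.

```python
import shutil
import tarfile
from pathlib import Path


def backup_data_dir(data_dir: Path, archive_path: Path) -> None:
    """Archive data_dir, stored under its own name, into a gzip-compressed tarball."""
    with tarfile.open(str(archive_path), "w:gz") as tar:
        tar.add(str(data_dir), arcname=data_dir.name)


def restore_data_dir(archive_path: Path, data_dir: Path) -> None:
    """Replace data_dir with the contents of an archive made by backup_data_dir."""
    if data_dir.exists():
        shutil.rmtree(data_dir)
    # Use the safer "data" extraction filter where this Python version supports it.
    extra = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
    with tarfile.open(str(archive_path), "r:gz") as tar:
        # The archive contains "<data_dir.name>/...", so extract into the parent.
        tar.extractall(str(data_dir.parent), **extra)


# Example call, using the paths from the instructions above (run with the
# containers shut down and with sufficient permissions):
#   backup_data_dir(Path("/opt/clearml/data"), Path.home() / "clearml_backup.tgz")
```

Because the directory is stored under its own name, the archive can be restored next to any parent directory, which makes it easy to try on a scratch copy first.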

If you have any questions, look to the ClearML FAQ, or tag your questions on Stack Overflow with the 'clearml' tag.

For feature requests or bug reports, please use GitHub issues.

Additionally, you can always find us at clearml@allegro.ai

Server Side Public License v1.0

The ClearML Server relies on both MongoDB and ElasticSearch. With the recent changes in both MongoDB's and ElasticSearch's OSS licenses, we feel it is our responsibility as a member of the community to support the projects we love and cherish. We believe the cause for the license change in both cases is more than just, and chose SSPL because it is the more general and flexible of the two licenses.

This is our way of saying: we support you guys!