Spring 2022 Project

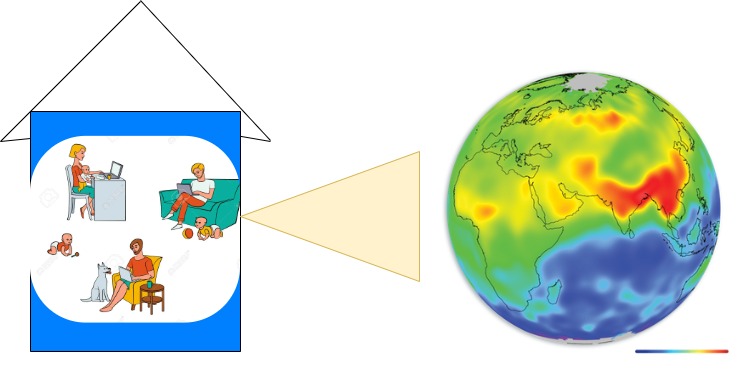

Application to visualise user-requested NEXRAD data.

Software/prerequisites needed to run Garuda: Docker

Note: You'll need the latest version of Docker Engine.

Export ENV variables

export PROJECT=PROJECT3

Pull the latest images from DockerHub

docker-compose pull

Start application services

docker-compose up

Run the above command on your terminal from the root of the project folder to create all the resources needed to run the project.

sudo sh scripts/host.sh

Note: The above command creates 6 containers for running the application.

Note: The services run in non-detached mode. On exiting the process from terminal all the containers stop.

Note: This command might take some time to run, because it spins up all the containers required to run the project. Once all the resources have loaded, the logs stop printing on the terminal and the application is ready to use.

URL for the web-application: http://garuda.org:3000
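Because startup can take a while, it may help to poll the URL instead of watching the logs. The following is a minimal sketch, not part of the project: `is_up` and `wait_until_ready` are hypothetical helper names, and the attempt count and delay are arbitrary assumptions.

```python
import time
import urllib.error
import urllib.request


def is_up(url, timeout=3.0):
    """Return True if the server at `url` answers with any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered (for example with 404), so it is running.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout: not reachable yet.
        return False


def wait_until_ready(probe, attempts=60, delay=5.0, sleep=time.sleep):
    """Call `probe()` up to `attempts` times; return True once it succeeds."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            sleep(delay)  # wait before the next try
    return False


# Example usage (assumes the hosts entry for garuda.org is in place):
# ready = wait_until_ready(lambda: is_up("http://garuda.org:3000"))
# print("ready" if ready else "not reachable yet")
```

The probe is passed in as a function so the retry loop can be tried out without a running deployment.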

Press Ctrl + C to exit.

Done playing around? Run this command to remove all the created resources.

docker-compose down

Build the resources again if needed

docker-compose build

Note: Before building, make sure you have these env variables exported in your terminal:

- NASA_USERNAME - NASA MERRA2 dashboard username

- NASA_PASSWORD - NASA MERRA2 dashboard password

- AWS_ACCESS_KEY_ID - JetStream Object Store access key ID

- AWS_SECRET_ACCESS_KEY - JetStream Object Store access secret

- PROJECT - Version of project you want to build
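A quick way to confirm that these variables are set before building is a small check like the one below. This is a sketch only: `missing_env_vars` and `check_and_report` are hypothetical helpers, not part of the repository, and the variable names are taken from the list above.

```python
import os

# Variable names copied from the list above.
REQUIRED_VARS = (
    "NASA_USERNAME",
    "NASA_PASSWORD",
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "PROJECT",
)


def missing_env_vars(env=None):
    """Return the required variables that are unset or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


def check_and_report(env=None):
    """Print a short report and return True when nothing is missing."""
    missing = missing_env_vars(env)
    if missing:
        print("Missing env variables: " + ", ".join(missing))
        return False
    print("All required env variables are set.")
    return True
```

Calling `check_and_report()` with no arguments inspects the current shell environment.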

Software/prerequisites needed to run Garuda: Docker

Note: You'll need the latest version of Docker for Windows.

Export ENV variables

export PROJECT=PROJECT3

Pull the latest images from DockerHub

docker-compose pull

Start application services

docker-compose up

Run the above command in cmd from the root of the project folder to create all the resources needed to run the project.

sudo sh scripts/host.sh

Note: The above command creates 6 containers for running the application.

Note: The services run in non-detached mode. On exiting the process from terminal all the containers stop.

Note: This command might take some time to run, because it spins up all the containers required to run the project. Once all the resources have loaded, the logs stop printing on the terminal and the application is ready to use.

URL for the web-application: http://garuda.org:3000

Press Ctrl + C to exit.

Done playing around? Run this command to remove all the created resources.

docker compose down

Build the resources again if needed

docker compose build

Note: Before building, make sure you have these env variables exported in your terminal:

- NASA_USERNAME - NASA MERRA2 dashboard username

- NASA_PASSWORD - NASA MERRA2 dashboard password

- AWS_ACCESS_KEY_ID - JetStream Object Store access key ID

- AWS_SECRET_ACCESS_KEY - JetStream Object Store access secret

- PROJECT - Version of project you want to build

Add entries to /etc/hosts for the production URL

sudo sh scripts/prod_host.sh

Install a CORS plugin in the browser to enable CORS headers, since the application uses the JetStream object store (sample CORS plugin).

Access application at http://garuda.org

-

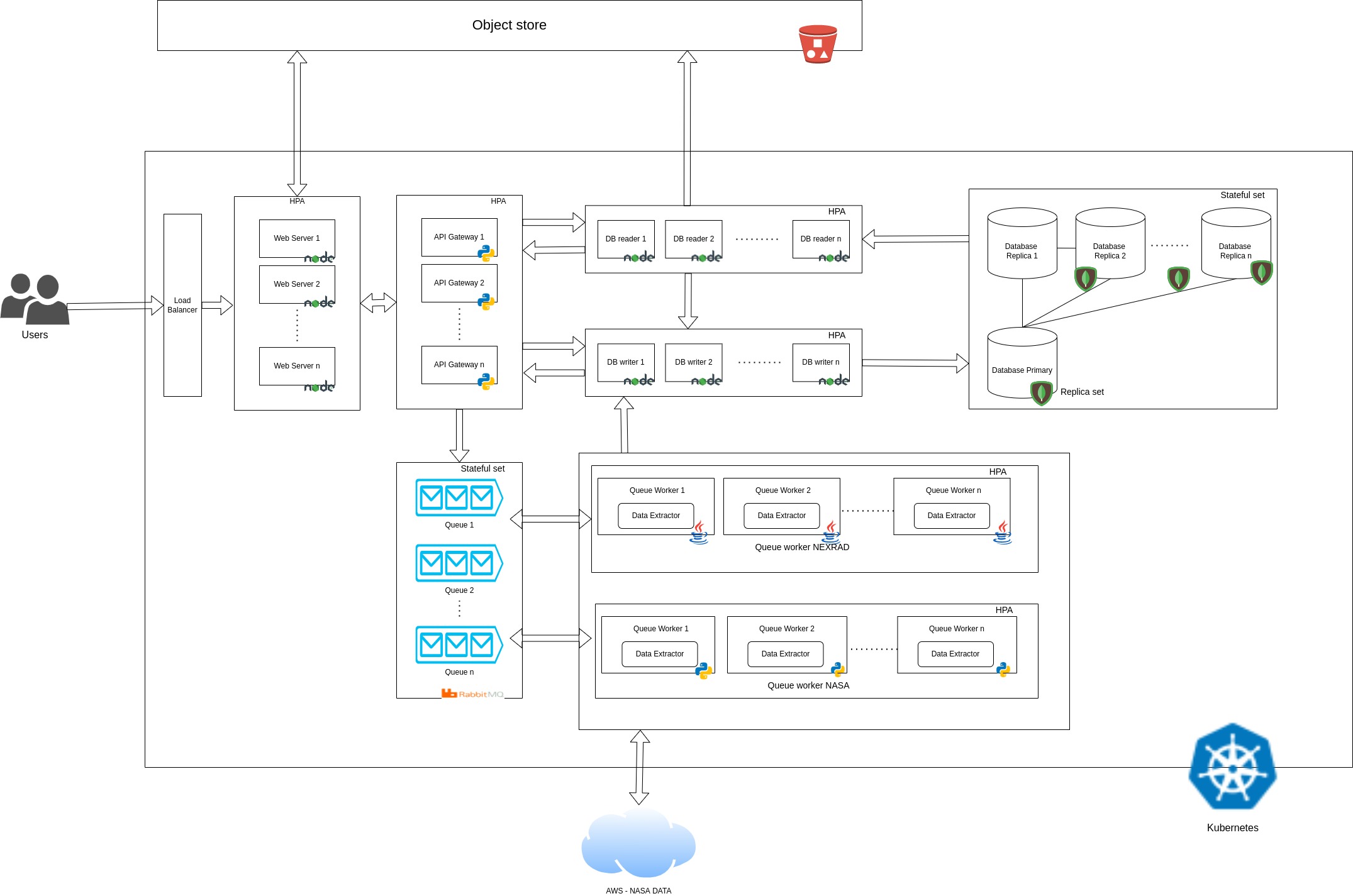

Data Extractor : Apache Maven project to build a utility JAR file which extracts requested NEXRAD data from S3.

- Queue Worker: Apache Maven project to build a JAR file which runs a consumer on a RabbitMQ queue. It processes the request using the data_extractor utility JAR and publishes the data to an API endpoint.

- DB_Middleware: Microservice to interact with the database. It provides APIs to read from and write to the database. Reads are performed by the API_Gateway module; writes are performed by the Queue_Worker and API_Gateway modules. It also dumps the dataset of each request to the object store (AWS S3 bucket) and saves the object URL in the database.

- API_Gateway: Provides a middleware layer for all the back-end services. The front-end application communicates with the API_Gateway module to interact with all other microservices.

- Web_App: The application with which end users interact. It communicates with the API_Gateway module to maintain user data and fetch NEXRAD data.

- Queue Worker Nasa: Python application which runs a consumer on a RabbitMQ queue. It processes the request using the extractor utility and publishes the data in a converted format to an API endpoint.
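The queue workers described above share one shape: take a message off the queue, run the extractor, and publish the result. The sketch below illustrates that flow only. It is not the project's code: `handle_message`, the JSON message format, and the `request_id` field are all assumptions, and `extract` and `publish` are stand-ins for the extractor utility and the call to the API endpoint.

```python
import json


def handle_message(body, extract, publish):
    """Process one queue message: decode, extract, convert, publish.

    body    -- raw message from the queue (assumed to be JSON)
    extract -- stand-in for the extractor utility
    publish -- stand-in for sending the result to the API endpoint
    """
    request = json.loads(body)      # assumed message format: a JSON object
    raw = extract(request)          # fetch the requested data
    payload = {
        "request_id": request.get("request_id"),  # hypothetical field name
        "data": raw,
    }
    publish(json.dumps(payload))    # converted (JSON) form sent onward
    return payload
```

With a RabbitMQ client, a function like this would be called from the consumer callback for each delivered message. Passing `extract` and `publish` in as arguments keeps the handler easy to exercise without a broker.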

In the project 3 milestone, after brainstorming, we found scope for an improvement that significantly reduced the load on the backend. The improvement was to store each request's dataset in the object store (AWS S3 bucket); the web app then retrieves the data from the object store whenever the user requests to plot the map. To compare the system's performance with and without the object store, we benchmarked it with JMeter by making 100 concurrent requests. The average response time was 14194 ms without the object store and 399 ms with it, a reduction of about 97% (roughly 35 times faster). The details of the reports are present here
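For reference, the relative change between the two measured averages (14194 ms and 399 ms) can be worked out as follows. The function names are illustrative only.

```python
def percent_reduction(before_ms, after_ms):
    """Percentage by which the response time dropped."""
    return (before_ms - after_ms) / before_ms * 100


def speedup(before_ms, after_ms):
    """How many times faster the new response time is."""
    return before_ms / after_ms


without_store_ms = 14194  # average response time without the object store
with_store_ms = 399       # average response time with the object store

print(f"{percent_reduction(without_store_ms, with_store_ms):.1f}% lower")  # 97.2% lower
print(f"{speedup(without_store_ms, with_store_ms):.1f}x faster")           # 35.6x faster
```

A reduction can never exceed 100%, so the change is best read as about 97% lower, or about 35 times faster.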

-

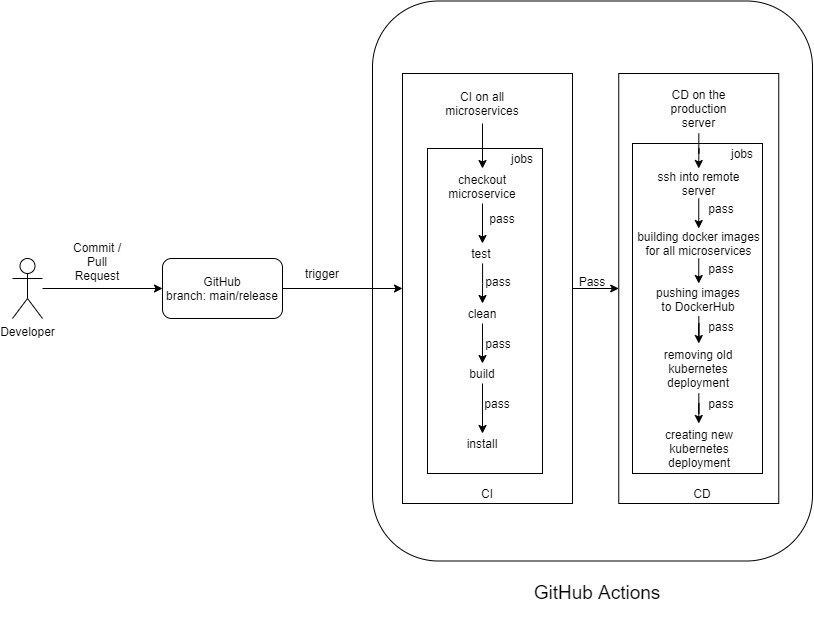

CI : Github Action workflow is used as CI. Any pull request/ commit to main branch triggers CI workflow. Garuda_CI

- CD:

  - GitHub Pages is used to deploy data_extractor's Javadocs.

  - GitHub Pages also hosts static assets. The docs/ folder is hosted via GitHub Pages.

  - CD is triggered on each push to the master branch.

  - CD logs into the JetStream2 remote server, builds all the Docker images, pushes them to DockerHub, and replaces the old deployments with the latest ones on the remote Kubernetes cluster.

- Garuda's Data Extractor Maven Package

- Pranav Palani Acharya

- Rishabh Deepak Jain

- Tanmay Dilipkumar Sawaji