This project provides a Docker container that you can start with just one docker run command, allowing you to quickly get up and running with Llama2 on your local laptop, workstation or anywhere for that matter! 🚀

docker run -p 8888:8888 -e HUGGINGFACEHUB_API_TOKEN=<YOUR_API_TOKEN> aishwaryaprabhat/llama-in-a-container:v1

- Hassle-free Setup: Get started with Llama2 effortlessly using a single command.

- Customizable Environment: Modify the environment variables to choose the LLM you want to download in the container.

- Jupyter Lab Included: Access Jupyter Lab for interactive NLP experimentation.

Before you dive into the world of Llama2 with this container, make sure you have the following prerequisites ready:

- Docker 🐳

- Hugging Face Access Token 🔐

- Git (Optional for version control) 📦

There is a pre-built container ready and waiting for you to run and start tinkering with Llama2. Just run

docker run -p 8888:8888 -e HUGGINGFACEHUB_API_TOKEN=<YOUR_API_TOKEN> aishwaryaprabhat/llama-in-a-container:v1

Replace <YOUR_API_TOKEN> with your Hugging Face Hub API token. If you don't have an API token, you can obtain one from Hugging Face. 🛡️

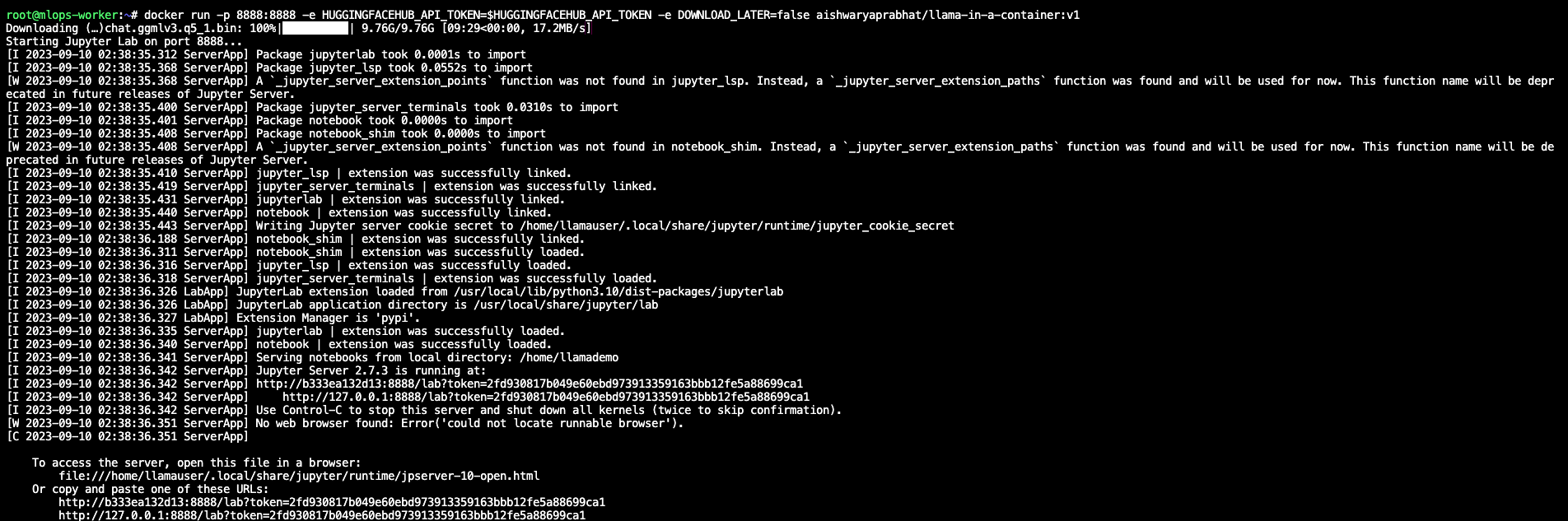

Depending on the speed of model download, you should soon see the following on your terminal:

Once the container is up and running, access Jupyter Lab by opening your web browser and navigating to:

http://localhost:8888/lab

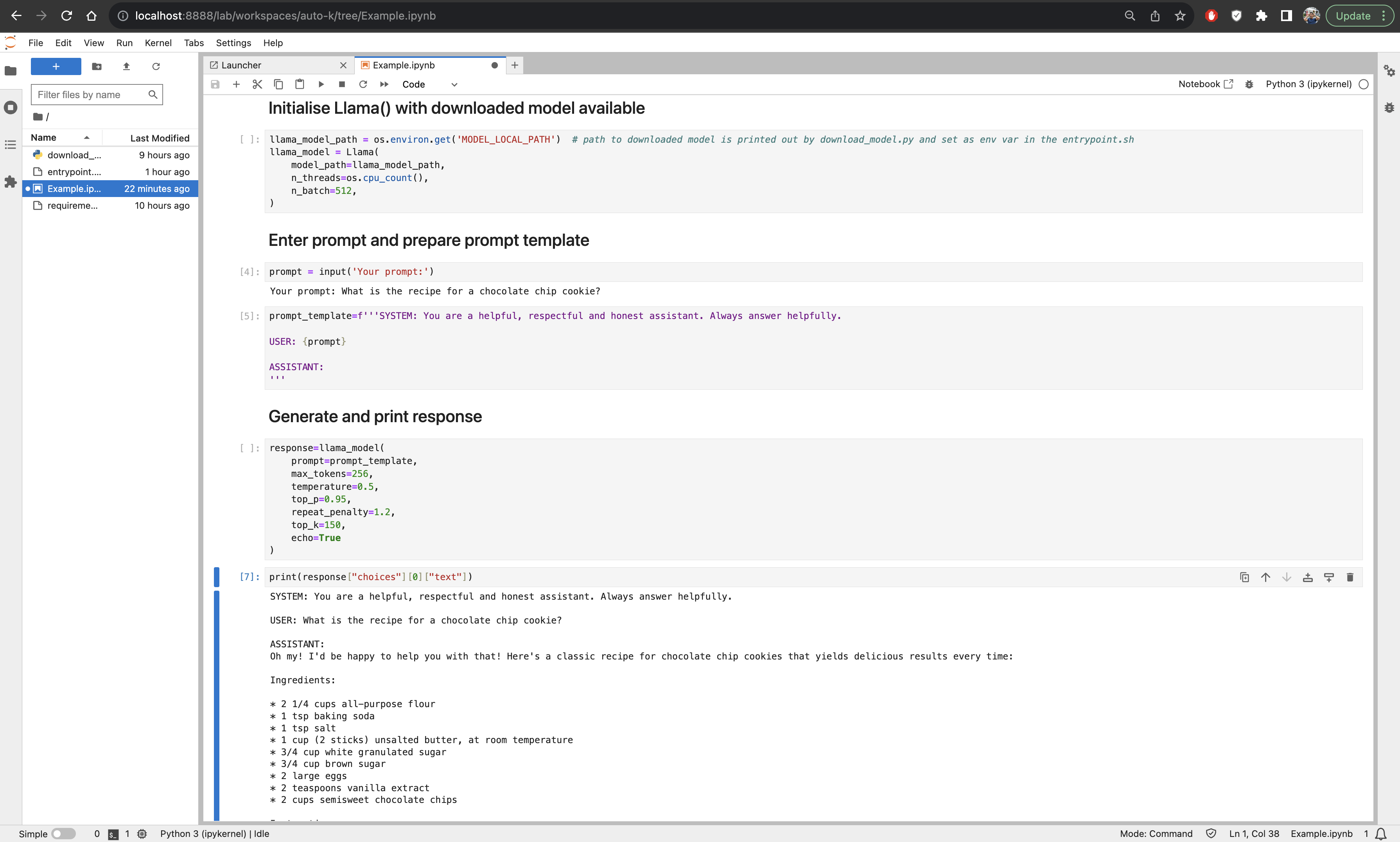

There is some code waiting and ready in Example.ipynb for you to get your hands dirty!

If you'd like to play around with the Dockerfile or any of the other scripts...

First, clone the Llama in a Container repository to your local machine:

git clone https://github.com/aishwaryaprabhat/llama-in-a-container.git

cd llama-in-a-container

Build the Docker image using the provided Dockerfile. This step sets up the container with all the necessary dependencies:

docker build -t llama-in-a-container .

Now you can start the container with a single command. The following command starts the container and exposes Jupyter Lab on port 8888:

docker run -p 8888:8888 -e HUGGINGFACEHUB_API_TOKEN=<YOUR_API_TOKEN> llama-in-a-container

Replace <YOUR_API_TOKEN> with your Hugging Face Hub API token. If you don't have an API token, you can obtain one from Hugging Face. 🔑

Once the container is up and running, access Jupyter Lab by opening your web browser and navigating to:

http://localhost:8888/lab

You can now start using Llama2 for your NLP tasks right from your web browser! 📊

Llama in a Container allows you to customize your environment by modifying the following environment variables in the Dockerfile:

- HUGGINGFACEHUB_API_TOKEN: Your Hugging Face Hub API token (required).

- HF_REPO: The Hugging Face model repository (default: TheBloke/Llama-2-13B-chat-GGML).

- HF_MODEL_FILE: The Llama2 model file (default: llama-2-13b-chat.ggmlv3.q5_1.bin).

- JUPYTER_PORT: The port for Jupyter Lab (default: 8888).

- DOWNLOAD_LATER: Set to "true" to skip model download (default: false). 🔄
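If you would rather not edit the Dockerfile for every change, Docker also lets you override environment variables when the container starts, using -e on docker run. The sketch below is only an illustration: build_run_cmd is a hypothetical helper (not part of this repository) that prints a docker run command using the variables and defaults listed above, so you can inspect it before running it. It assumes the container's startup scripts read these variables at run time, which you should confirm against the Dockerfile and scripts in the repo.

```shell
#!/bin/sh
# Hypothetical helper: prints (does not execute) a docker run command.
# Assumption: the container reads these variables when it starts.
build_run_cmd() {
  # Passing -e with a bare name forwards that variable's value from the host,
  # so export HUGGINGFACEHUB_API_TOKEN in your shell before running the result.
  # The published port is kept in sync with the port Jupyter Lab listens on.
  echo docker run \
    -p "${JUPYTER_PORT:-8888}:${JUPYTER_PORT:-8888}" \
    -e HUGGINGFACEHUB_API_TOKEN \
    -e "JUPYTER_PORT=${JUPYTER_PORT:-8888}" \
    -e "HF_REPO=${HF_REPO:-TheBloke/Llama-2-13B-chat-GGML}" \
    -e "HF_MODEL_FILE=${HF_MODEL_FILE:-llama-2-13b-chat.ggmlv3.q5_1.bin}" \
    -e "DOWNLOAD_LATER=${DOWNLOAD_LATER:-false}" \
    llama-in-a-container
}

# Example: skip the model download and serve Jupyter Lab on port 9999.
JUPYTER_PORT=9999 DOWNLOAD_LATER=true build_run_cmd
```

If you change JUPYTER_PORT this way, remember to open Jupyter Lab on the new port (for example http://localhost:9999/lab) instead of 8888.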

If you encounter any issues or have suggestions for improvements, please feel free to open an issue on the GitHub repository. Contributions and pull requests are also welcome! 🙌

This project is licensed under the Llama2 Community License. See the license file in the repository for details. 📜

Happy llama-ing! 🦙📦