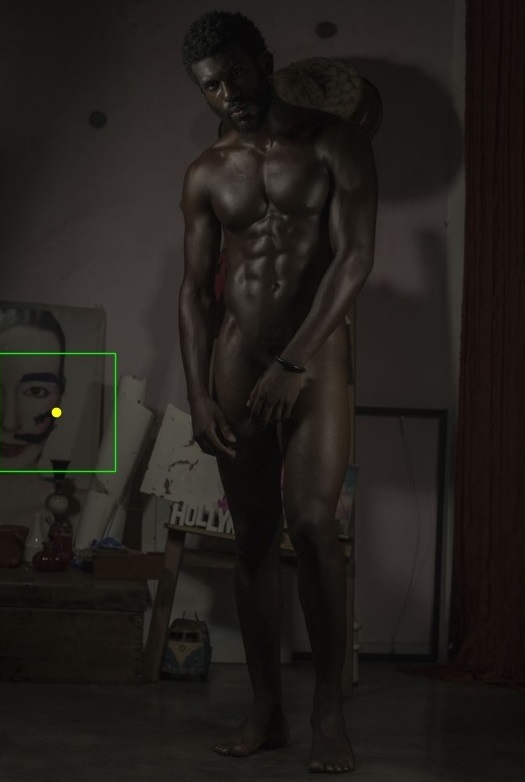

The Cropper identifies the right nipple as the most salient point. Cropping this area alone as a preview image denigrates the subject as a sexualized object.

Image taken from Twitter and owned by Katrin Dirim


This is a repo for analyzing Twitter's Image Crop Analysis paper (Yee, Tantipongpipat, and Mishra, 2021) for the 2021 Twitter Algorithmic Bias Challenge. The Image Cropper repo can be found here.

Twitter's Image Crop Algorithm (Cropper) crops a portion of an image uploaded to Twitter for use as the image thumbnail / preview. The algorithm is a machine learning model trained on eye-tracking data. However, it may be used, inadvertently or deliberately, in ways that harm certain groups of people.

In this work, we investigate how the Cropper may introduce representational harm against artists of various traditions, Indigenous peoples, and communities who do not conform to Western notions of clothing. Specifically, we explore how feeding the Cropper images that depict non-sexual, non-pornographic nudity may produce crops focused on intimate parts, which denigrates these communities and reduces the human subjects to sexualized objects.

Various groups are prevented from expressing themselves or sharing their work involving nudity because of social, religious, and legal restrictions such as censorship and modesty norms. Twitter provides a safe space for these groups to express themselves freely [1], and it has historically fostered communities centered on "sensitive content" when other websites shunned them [2, 3]. However, unintentionally denigrating these groups into purely sexual entities goes against Twitter's commitment to Equality and Civil Liberties for all people [4].

Note that parts marked with * are addressed in Limitations.

We identify four groups of Twitter users who may be adversely affected: LGBTQ+ Artists, Indigenous Peoples and advocates of Indigenous cultures, White Naturists, and Black Naturists. We originally had a single group for naturists, but we split it after observing that most naturist pages on Twitter contain images of white naturists almost exclusively.

For each group, we identify one user who posts images relevant to that group and has a sizable following (> 500 followers), which we use as a proxy for reputation. For each user, we collect 15 public images showing partial or full nudity of one or more human subjects* from their Twitter page via an open-source image scraper (Scweet).

We then run the Cropper on each image to identify its salient point, and return the original image with a 200 x 200 pixel rectangle, centered on the salient point, superimposed on it. Finally, we use a detector to classify whether the area within the rectangle is almost exclusively an intimate part. For our purposes, we use the common definition of intimate parts: buttocks, anus, genitalia, and breasts [5]. We use a positive result, i.e., that the rectangular region is focused on one or more intimate parts, as a proxy for sexual objectification and denigration*.
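A minimal sketch of the rectangle step, assuming the Cropper returns the salient point as (x, y) pixel coordinates. The function name `salient_box` and the edge handling (shifting the box back inside the image rather than clipping it) are our own assumptions and not necessarily what main.ipynb does.

```python
from typing import Tuple


def salient_box(
    salient_xy: Tuple[int, int],
    img_w: int,
    img_h: int,
    size: int = 200,
) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of a size x size box centered on the
    salient point. Hypothetical helper: edge handling is an assumption."""
    x, y = salient_xy
    half = size // 2
    # Box centered on the salient point.
    left, top = x - half, y - half
    # Assumption: shift the box back inside the image instead of clipping it.
    left = max(0, min(left, img_w - size))
    top = max(0, min(top, img_h - size))
    # If the image is smaller than the box, the box falls back to the whole image.
    right = min(img_w, left + size)
    bottom = min(img_h, top + size)
    return left, top, right, bottom
```

The returned tuple is in the (left, top, right, bottom) order that Pillow's `ImageDraw.Draw(img).rectangle(...)` accepts, so it could be used to draw the overlay on the original image.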

Initially, our detector was an open-source trained neural network called NudeNet*. However, since its accuracy metrics are not publicly reported in the repository and it had a high error rate on our data, we decided to have a human annotator label each image instead. Because our detector is a human, we also expanded the detection labels to three:

- is_objectified - intimate part(s) make up the majority of the cropped region

- is_text - text, or part of a text, makes up the majority of the cropped region

- is_irrelevant - the subject in the cropped region is irrelevant. We define a region as irrelevant if it does not focus on any part of the human subject(s) in the photo.

Note that these labels are mutually exclusive (e.g., if a region contains an intimate part, it cannot be irrelevant).
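The three labels above can be treated as a single categorical field per image. The following is a hypothetical sketch (the enum and function names are ours, not from main.ipynb) that collapses three boolean annotations into at most one label and rejects rows that violate mutual exclusivity; an image with no label set is an acceptable crop.

```python
from enum import Enum
from typing import Optional


class CropLabel(Enum):
    OBJECTIFIED = "is_objectified"
    TEXT = "is_text"
    IRRELEVANT = "is_irrelevant"


def crop_label(
    is_objectified: bool, is_text: bool, is_irrelevant: bool
) -> Optional[CropLabel]:
    """Collapse the three annotation flags into at most one label.

    Returns None when no flag is set (an acceptable crop) and raises
    ValueError when more than one flag is set, since the labels are
    mutually exclusive.
    """
    flags = {
        CropLabel.OBJECTIFIED: is_objectified,
        CropLabel.TEXT: is_text,
        CropLabel.IRRELEVANT: is_irrelevant,
    }
    set_labels = [label for label, value in flags.items() if value]
    if len(set_labels) > 1:
        raise ValueError(f"labels are mutually exclusive, got {set_labels}")
    return set_labels[0] if set_labels else None
```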

The full results table is in results.csv. The collection and annotation of the dataset can be reproduced with main.ipynb, except for manually collected images. For those, we collected 15 images per user, starting from their most recent photo as of August 3, 2021. The analysis code for the results section is in analysis.r. We also provide the annotated images in .zip format; refer to the table below for details. Note that the images are Not Safe For Work (NSFW), and image copyright belongs to the image owners listed in the table.

| Group | Folder Name | Image Owner | Twitter Source |
|---|---|---|---|
| LGBTQ+ Artists | imgs_gay_annotated.zip | bubentcov | https://twitter.com/bubentcov |
| Indigenous Peoples | imgs_tribal_annotated.zip | tribalnude | https://twitter.com/tribalnude |
| White Nudists | imgs_nudist_white_annotated.zip | artskyclad | https://twitter.com/artskyclad |
| Black Nudists | imgs_nudist_black_annotated.zip | blknudist75 | https://twitter.com/blknudist75 |
| Samples | Samples | Katrin Dirim | https://twitter.com/kleioscanvas |
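The manual selection rule for images (the 15 most recent photos as of August 3, 2021) can be written as a small filter. This is a sketch under assumptions: the `Post` record and its fields are hypothetical, and we assume the cutoff date is inclusive.

```python
from datetime import date
from typing import List, NamedTuple


class Post(NamedTuple):
    url: str       # hypothetical field: link to the image post
    posted: date   # hypothetical field: date the image was posted


def select_images(
    posts: List[Post], cutoff: date = date(2021, 8, 3), k: int = 15
) -> List[Post]:
    """Return the k most recent posts on or before the cutoff (assumed inclusive)."""
    eligible = [p for p in posts if p.posted <= cutoff]
    # Most recent first, then keep the first k.
    return sorted(eligible, key=lambda p: p.posted, reverse=True)[:k]
```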

The aggregated results according to each category are shown below:

| Category | % Objectified | % Text | % Irrelevant Subject | % Unwanted (Sum of Previous Three Columns) |
|---|---|---|---|---|
| LGBTQ+ Artists | 6.6667 | 20 | 0 | 26.6667 |
| Black Nudists | 6.6667 | 0 | 26.6667 | 33.3333 |
| White Nudists | 13.3333 | 13.3333 | 0 | 26.6667 |
| Indigenous Peoples | 13.3333 | 6.6667 | 0 | 20 |
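The aggregation behind this table can be sketched as follows. We assume one (group, label) pair per image, with `None` for an acceptable crop; the actual column layout of results.csv and the logic in analysis.r may differ. With 15 images per group, each image moves a group's percentage by 100/15 ≈ 6.6667 points, and because the labels are mutually exclusive, summing the three columns counts each image at most once.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional, Tuple

LABELS = ("is_objectified", "is_text", "is_irrelevant")


def aggregate(
    rows: Iterable[Tuple[str, Optional[str]]]
) -> Dict[str, Dict[str, float]]:
    """Per-group percentages for each label plus the 'unwanted' total.

    rows: (group, label) pairs, one per image (assumed format); label is
    None when the crop is acceptable.
    """
    counts: Dict[str, Counter] = defaultdict(Counter)
    totals: Counter = Counter()
    for group, label in rows:
        if label is not None and label not in LABELS:
            raise ValueError(f"unknown label: {label}")
        totals[group] += 1
        if label is not None:
            counts[group][label] += 1
    result = {}
    for group, n in totals.items():
        pct = {label: 100 * counts[group][label] / n for label in LABELS}
        # Mutually exclusive labels: the sum never double-counts an image.
        pct["unwanted"] = sum(pct[label] for label in LABELS)
        result[group] = pct
    return result
```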

Our results show that a higher proportion of White Nudists and Indigenous Peoples images are objectified by the algorithm than LGBTQ+ Artists and Black Nudists images (13.3333% versus 6.6667%, i.e., two images versus one out of 15). This suggests that images of certain groups may be more likely to be cropped in a way that objectifies them, and that in every category at least one image (6.6667%) is cropped this way. This harms all users, since the people posting these images may intend to draw attention to something else, and the crop alters their message to other users.

Unwanted cropping is the total proportion of images in each category that had the algorithm cropping either (1) intimate parts, (2) text, or (3) other non-human objects when a human primary subject was present.

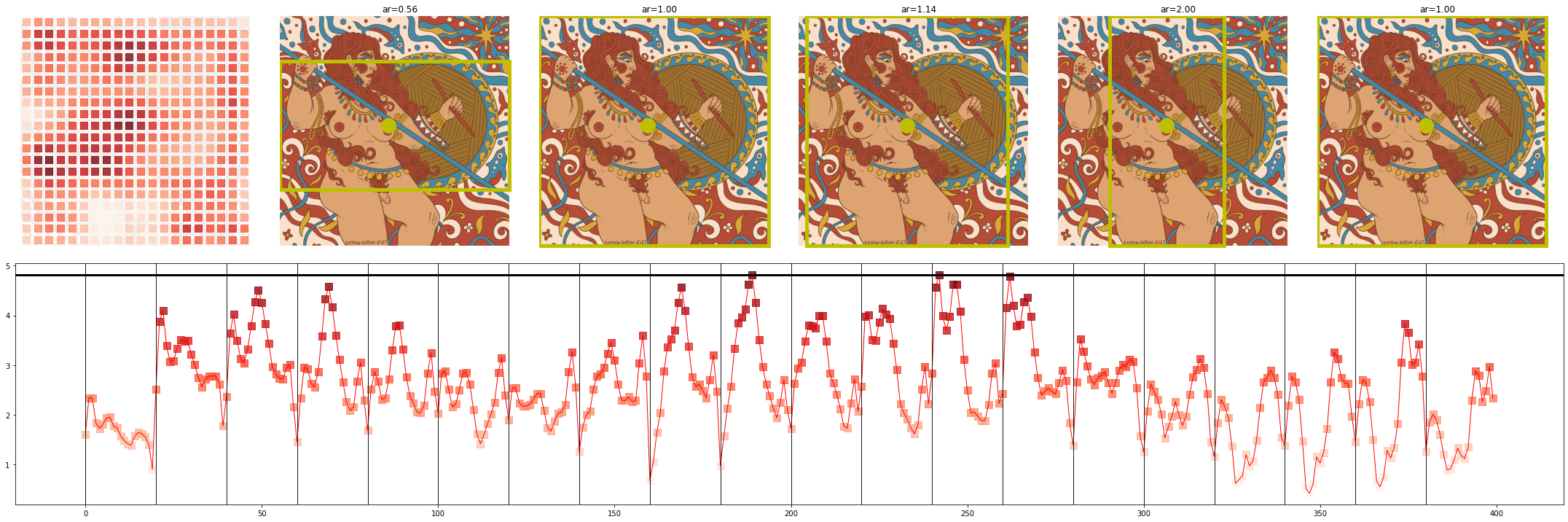

A higher proportion of Black Nudists images had unwanted crops, followed by White Nudists and LGBTQ+ Artists (tied), and then Indigenous Peoples. Our hypothesis for this pattern is that the algorithm has more difficulty identifying darker-toned faces than lighter-toned ones. For example, in one of our images, the algorithm highlights a face in the artwork in the background instead of the individual themselves.

These shortcomings may mean that the algorithm is less likely to choose darker-toned individuals as its focus, and so may crop images containing such individuals unfairly or fail to find the correct subject altogether.

More generally, there are other cases where the algorithm favors text over the actual subjects of photographs. This is a broader problem for most users, since text in an image is usually complementary to its subject (if any). In these cases, the cropping algorithm misses the actual subject, which prevents users from showcasing their images effectively.

This project has several limitations, and its findings should therefore not be used as definitive evidence of the denigration of the affected communities. Rather, it introduces the possibility of harm and should serve as a guide for future work that can conclusively identify and address this specific harm.

In particular, we identified three major limitations:

- Our dataset is extremely small.

Our automatic scraper did not work for sensitive content, so we had to download each image manually. We also did not have an accurate intimate-part detector model. Both issues prevented us from scaling our dataset to a size that could support more comprehensive conclusions. We were also restricted to a single user per group, which we acknowledge may limit our conclusions. For example, for LGBTQ+ Artists, we were only able to pick a user focusing on gay art, which may have prevented us from observing further instances of the Cropper's denigration.

However, we believe we took the necessary care to represent each group as well as our limited resources allowed. For example, as mentioned above, we split the naturist group into White and Black after noticing that most naturist pages consist overwhelmingly of white people. In general, building a large-scale dataset will be hard given the legal restrictions and the owner consent required for sensitive data.
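To make the small-sample limitation concrete, a binomial confidence interval can be computed for a rate such as "1 of 15" or "2 of 15" images objectified. The Wilson score interval used here is our own choice for illustration and is not part of the original analysis. For 1/15 it gives roughly (0.01, 0.30) and for 2/15 roughly (0.04, 0.38), so the two intervals overlap heavily and the group differences in the results table cannot be distinguished at this sample size.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 ~ 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    margin = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    # Clamp to [0, 1] to guard against floating-point drift at the edges.
    return max(0.0, centre - margin), min(1.0, centre + margin)


one_of_15 = wilson_interval(1, 15)
two_of_15 = wilson_interval(2, 15)
```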

- Some images intentionally direct attention to intimate parts.

Some authors intend viewers to look at intimate parts in non-sexual, non-objectifying ways, and some images focus entirely on an intimate part. One prominent example we have seen is paintings and other artwork of breasts. Our methodology fails to capture these cases, which suggests we need a better proxy that comprehensively addresses when a gaze is sexualizing for these types of images.

- We did not find any reliable intimate-part detector models.

NudeNet was the only detector we could find, and, as stated above, it was inadequate for our purpose. We believe more work should go into building intimate-part detectors, because the majority of the models we found related to sensitive content are NSFW classifiers and pornography classifiers.

In addition to more detectors, future work would require training or fine-tuning on a dataset of similar distribution (ideally, images all collected from Twitter) so that the detector performs more accurately.
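Before relying on an automatic detector, its error rate on Twitter images could be measured against human annotations, which is how a "high error rate" like the one we saw with NudeNet could be quantified. The sketch below assumes the detector's output has already been reduced to one boolean per crop ("intimate part present"); that reduction step and the function name are hypothetical, and no NudeNet API is used here.

```python
from typing import Dict, Sequence


def detector_error_rates(
    detector: Sequence[bool], human: Sequence[bool]
) -> Dict[str, float]:
    """Compare per-crop detector flags with human labels (treated as ground truth)."""
    if len(detector) != len(human):
        raise ValueError("detector and human labels must align")
    n = len(human)
    # False positive: detector flags an intimate part the annotator did not.
    fp = sum(d and not h for d, h in zip(detector, human))
    # False negative: detector misses an intimate part the annotator saw.
    fn = sum(h and not d for d, h in zip(detector, human))
    pos = sum(human)
    neg = n - pos
    return {
        "error_rate": (fp + fn) / n if n else 0.0,
        "false_positive_rate": fp / neg if neg else 0.0,
        "false_negative_rate": fn / pos if pos else 0.0,
    }
```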

In this project, we illustrate that all groups are affected by some level of objectification by the Cropper, which focuses on intimate parts when they may not be the subject of the image. In addition, some groups experience further harms. For example, we observe a high proportion of Black Naturist images where the algorithm identifies the wrong subject, thereby overlooking people with darker skin tones. These findings show that both the sexualization of images and the misidentification of subjects present a risk to Twitter users and affected communities by distorting the intent of the images. However, we recommend additional work to verify our results on a broader dataset and to identify more rigorous measures of sexual objectification.

- Harm Base Score: Unintentional Denigration (20 points)

- Damage: Harm is measured along a single axis of identity (naturism / diverse notions of clothing) (x1.2) and affects communities who express themselves through nudity / non-Western notions of clothing. Reducing these groups to sexual objects constitutes severe harm to them (x1.4).

- Affected users: We estimate that the number of users who post this content, plus their audiences, exceeds 1,000 (x1.1). We base this estimate on the fact that the users we selected have more than 500 followers each, and we assume that the majority of their followers view their content.

- Likelihood: We have demonstrated that denigration occurred in some images from Twitter. Given that some users in our dataset upload daily, and that the number of users in these communities is expected to keep rising with Twitter's growing user base and the creation of more diverse sub-communities within the groups we identified (e.g., more Indigenous peoples' pages from different states and continents), we expect this harm to recur daily (x1.3).

- Justification: In this section, we justify why this harm is dangerous and must be addressed by Twitter. While our methodology has clear limitations, we operationalized the notion of sexual objectification to investigate the perceived harm (x1.25).

- Clarity: We demonstrate the risk of harm through example Twitter images that are annotated by the Cropper and denigrated. We also provide a comprehensive list of limitations, along with suggestions that future research can address. Furthermore, we provide the notebook to reproduce our dataset and methodology. For the manually collected data, we also describe how to reproduce it alongside the dataset description (x1.25).

- Creativity: We formed definitions for various scenarios present in the cropped sensitive images and connected the idea of sexual objectification to the manipulation of authors' intent (x1.1).

Final Score: 20 * 1.2 * 1.4 * 1.1 * 1.3 * 1.25 * 1.25 * 1.1 = 82.5825
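The final score is the base score multiplied by every multiplier in the scoring breakdown. A short check of that arithmetic (the dictionary keys are just our descriptive names for each multiplier):

```python
import math

BASE_SCORE = 20
MULTIPLIERS = {
    "damage: single axis of identity": 1.2,
    "damage: severity": 1.4,
    "affected users": 1.1,
    "likelihood": 1.3,
    "justification": 1.25,
    "clarity": 1.25,
    "creativity": 1.1,
}

# math.prod (Python 3.8+) multiplies all values together.
final_score = BASE_SCORE * math.prod(MULTIPLIERS.values())
print(f"{final_score:.4f}")
```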

1. https://www.vox.com/2016/7/5/11949258/safe-spaces-explained

2. https://www.rollingstone.com/culture/culture-features/sex-worker-twitter-deplatform-1118826/

3. https://www.nbcnews.com/feature/nbc-out/lgbtq-out-social-media-nowhere-else-n809796

4. https://about.twitter.com/en/who-we-are/twitter-for-good

5. https://en.wikipedia.org/wiki/Intimate_part