This project is due for an update: it works, but some approaches need to be reworked.

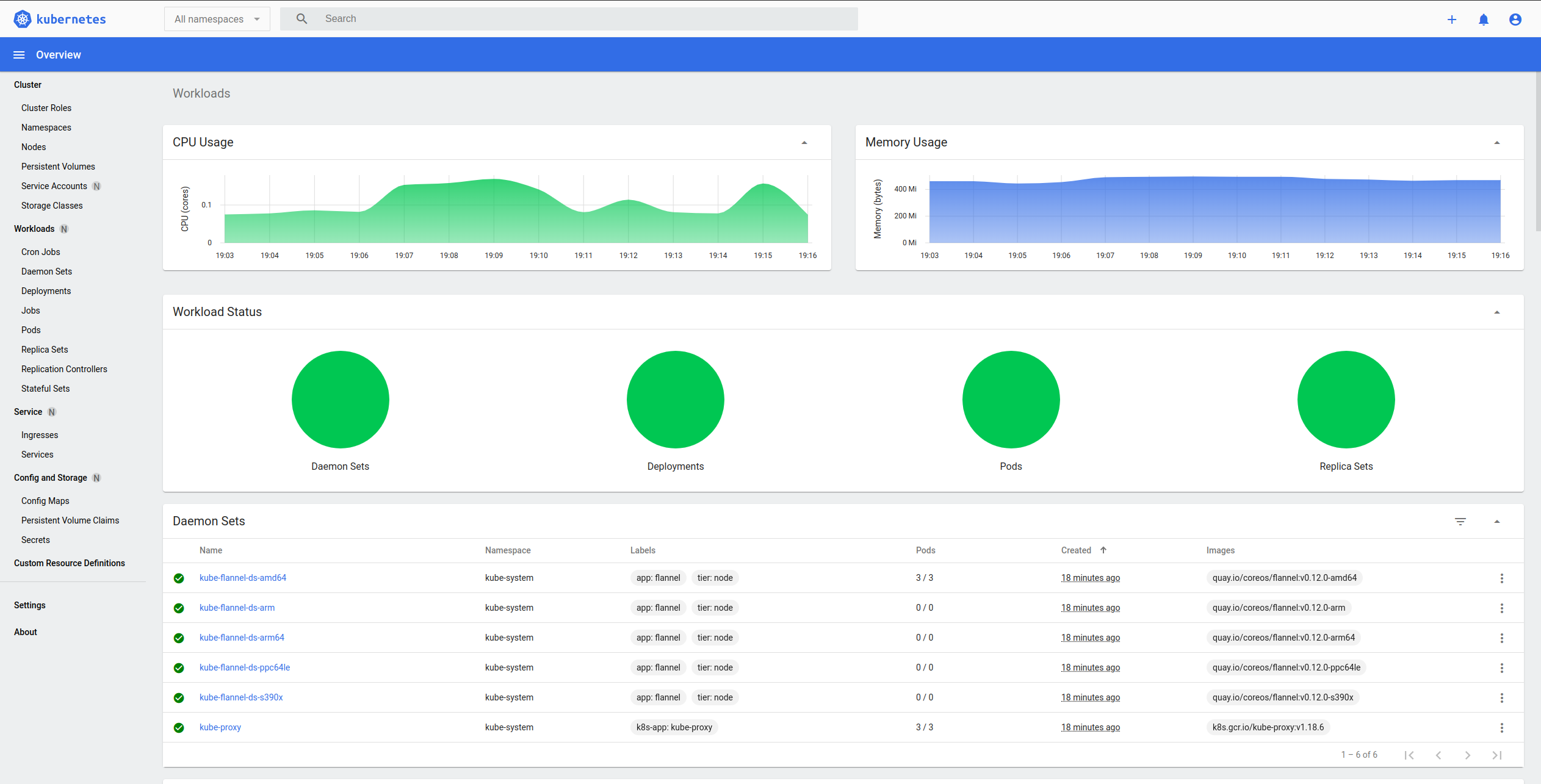

Deploys Kubernetes with loadbalancer, dashboard, persistent storage, and monitoring on local machine using Vagrant.

By default you get 1 master, 2 worker nodes, NFS server, loadbalancer, monitoring, image registry, and deployed dashboard.

The deployed Kubernetes version is 1.20.1; the Ubuntu VirtualBox image version is 18.04.

Dashboard is available via port 30000 on worker nodes. If you didn't change the network IP range or the number of worker nodes in the Vagrantfile, the dashboard will be available on both:

Note:

In order to access the dashboard via Chromium-based browsers, you'll have to bypass the invalid certificate error on the above mentioned URLs. To do that, just type `thisisunsafe` while on the dashboard page. You don't need any input field; typing it while on that page will do the trick.

Persistent volume pv-nfs and 5GB persistent volume claim pvc-nfs are provided automatically.

For examples on how to use/create your own pv/pvc, check /storage directory.

For bare-metal loadbalancing purposes MetalLB is used.

For deployment manifests check /lb directory.

Prometheus operator is installed. If you wish to consume it, check the example in monitoring/prometheus-example.yaml. A full-blown setup with node-exporter, kube-state-metrics, alertmanager, and Grafana is a bit too resource expensive for what this project aims to achieve.

Docker Registry v2 is used as the image registry inside the cluster. If you wish to use it, you can access it on port 30001. A simple web UI is hosted on port 30002 (check endpoints in the docker-registry namespace). You will have to add an insecure registry entry to your Docker daemon.
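The insecure registry entry mentioned above goes into the Docker daemon configuration, which on Linux is usually /etc/docker/daemon.json. The following is a minimal sketch that only prints the entry. `REGISTRY_HOST` and the `WORKER_IP` placeholder are assumptions for illustration, not values from this project; substitute the IP of one of your worker nodes.

```shell
#!/usr/bin/env bash
# Print a daemon.json snippet that marks the in-cluster registry as insecure.
# REGISTRY_HOST is a placeholder (assumption); set it to a worker node IP.
REGISTRY_HOST="${REGISTRY_HOST:-WORKER_IP}"
REGISTRY_PORT=30001   # registry port mentioned in this README

insecure_registry_json() {
  # $1 = registry host, $2 = registry port
  printf '{\n  "insecure-registries": ["%s:%s"]\n}\n' "$1" "$2"
}

insecure_registry_json "$REGISTRY_HOST" "$REGISTRY_PORT"
```

If you already have a daemon.json, merge the `insecure-registries` key into it instead of overwriting the file, then restart the Docker daemon.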

Note:

In case web ui is not showing repositories properly, check REGISTRY_URL in its deployment, and make sure it has proper value.

Note:

The Vagrantfile uses the ansible_local module, which means you don't need Ansible installed on your workstation. However, if you already have it installed and prefer to use that instead of letting Vagrant install Ansible on each node separately, change *vm.provision from ansible_local to ansible in the Vagrantfile.

Another option is having a dedicated "controller" node, which can be used as the Ansible host. You can learn more by visiting this link.
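The switch from ansible_local to ansible described above can also be done with a one-line substitution. This is a sketch, assuming the provisioner lines in your Vagrantfile contain both `vm.provision` and `ansible_local` on the same line; the function name `switch_to_host_ansible` is made up for this example, so check the file before and after running it.

```shell
#!/usr/bin/env bash
# Replace the ansible_local provisioner with ansible in a Vagrantfile.
# Assumption: each provisioner line contains "vm.provision" and "ansible_local".
switch_to_host_ansible() {
  local vagrantfile="$1"
  # -i.bak edits in place and keeps a backup; this form works with GNU and BSD sed
  sed -i.bak '/vm\.provision/s/ansible_local/ansible/' "$vagrantfile"
}

# Usage, from the project directory:
#   switch_to_host_ansible Vagrantfile
```

The original file is kept as Vagrantfile.bak, so the change is easy to revert.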

- Clone this repository

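- Run the following to create and provision the cluster:

vagrant up

- Run the following to stop the cluster:

vagrant halt

- Run the following to check the state of the machines:

vagrant status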

- While in project directory, type:

vagrant ssh $NAME_OF_THE_NODE

Example:

vagrant ssh k8s-master

- Create dedicated config directory in local .kube directory

# Create directory in which you're going to store Kubernetes config

mkdir -p ~/.kube/kubernetes-localhost

# Copy file from Kubernetes master

scp -i ~/.vagrant.d/insecure_private_key vagrant@192.168.100.100:~/.kube/config ~/.kube/kubernetes-localhost

Note: In case you redeploy the cluster, you'll have to remove the previous entry from .ssh/known_hosts, or you'll get a WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED! warning. You can use this command, for example:

sed -i "/192.168.100.100/d" ~/.ssh/known_hosts

- You can now access Kubernetes cluster without sshing into Vagrant box:

kubectl --kubeconfig=/home/$USER/.kube/kubernetes-localhost/config get pods --all-namespaces
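As an alternative to passing --kubeconfig on every call, kubectl also reads the `KUBECONFIG` environment variable. A minimal sketch, assuming the scp step above placed the file inside the directory created earlier, so that it ends up at ~/.kube/kubernetes-localhost/config:

```shell
# Point kubectl at the copied config for the current shell session only.
# Assumption: the config file was copied to this exact path.
export KUBECONFIG="$HOME/.kube/kubernetes-localhost/config"

# Any kubectl call in this shell now uses the file above, for example:
#   kubectl get pods --all-namespaces
```

The variable only affects the shell it is exported in, so your default ~/.kube/config stays untouched for other sessions.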

Optional: Create alias in configuration file of your shell, for example:

# Open rc file

vim ~/.zshrc

# Insert alias

alias kubectl_local='kubectl --kubeconfig=/home/$USER/.kube/kubernetes-localhost/config'

# Save and exit Vim (can't help you there), and reload config

source ~/.zshrc

# Test

kubectl_local get pods --all-namespaces

- Check for secret name

kubectl get secrets -n kube-system | grep 'dashboard'

# Example:

kubectl describe secret/dashboard-access-token-mb7fw -n kube-system

- Navigate to one of the above mentioned URLs and paste the token from the output there
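If you prefer to print only the token rather than the full description of the secret, something along these lines may work. It is a sketch, assuming the secret stores the token under the `token` key, as service account token secrets normally do. The function names are made up for this example, and the secret name in the usage line is the example name from above, so yours will differ.

```shell
#!/usr/bin/env bash
# Secret data is base64-encoded in the API response, so decode it before use.
decode_token() {
  base64 --decode
}

# Print only the decoded token of the given secret in kube-system.
print_dashboard_token() {
  local secret_name="$1"
  kubectl get secret "$secret_name" -n kube-system -o jsonpath='{.data.token}' | decode_token
}

# Usage (replace the name with the one returned by the grep step):
#   print_dashboard_token dashboard-access-token-mb7fw
```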

- Run

vagrant destroy -f

- Remove repository