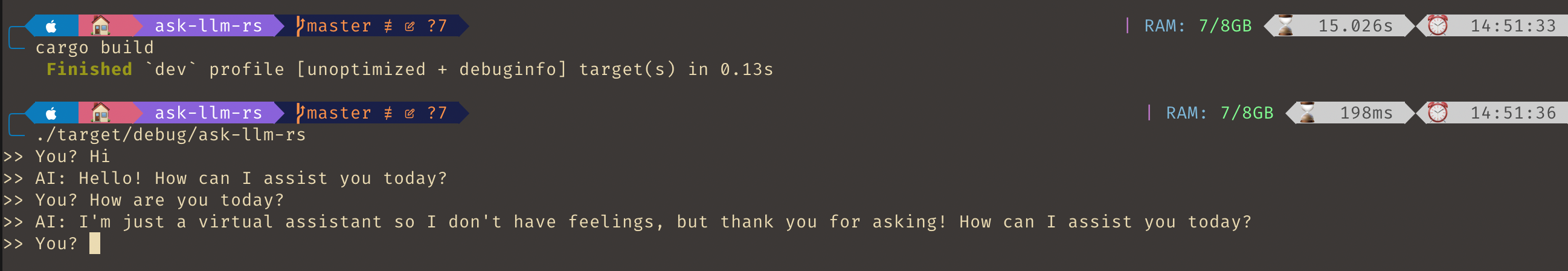

A CLI built with Rust for interacting with any LLM service.

Create a `.env` file from the template, then build and run the CLI:

```bash
# create .env
cp .env.example .env
cargo run
```

Using Local LLM Services

```
LLM_BASE_URL=http://localhost:3928/v1/chat/completions
LLM_API_KEY=
LLM_MODEL=
```
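As a rough illustration of what the `.env` file above feeds into, here is a minimal std-only Rust sketch that parses `KEY=VALUE` lines and collects the three `LLM_*` variables into a config struct. The `Config` type, `parse_dotenv` and `Config::from_vars` are hypothetical names chosen for this example, not this project's actual code; a real CLI would more likely load the file with a crate such as `dotenvy` and then read `std::env`.

```rust
use std::collections::HashMap;

/// Hypothetical configuration assembled from the three `LLM_*` variables.
#[derive(Debug, PartialEq)]
struct Config {
    base_url: String,
    api_key: Option<String>, // empty value => no key (e.g. a local server)
    model: Option<String>,   // empty value => leave unset (assumption)
}

/// Parse `KEY=VALUE` lines, skipping blank lines and `#` comments.
/// Simplified: no quoting, escaping, or `export` prefixes.
fn parse_dotenv(text: &str) -> HashMap<String, String> {
    let mut vars = HashMap::new();
    for line in text.lines() {
        let line = line.trim();
        if line.is_empty() || line.starts_with('#') {
            continue;
        }
        // Split on the first '=' only, so values containing '=' survive.
        if let Some((key, value)) = line.split_once('=') {
            vars.insert(key.trim().to_string(), value.trim().to_string());
        }
    }
    vars
}

impl Config {
    fn from_vars(vars: &HashMap<String, String>) -> Result<Config, String> {
        // Treat a missing variable and an empty one the same way.
        let get = |name: &str| {
            vars.get(name)
                .map(|v| v.to_string())
                .filter(|v| !v.is_empty())
        };
        let base_url = get("LLM_BASE_URL").ok_or("LLM_BASE_URL is required")?;
        Ok(Config {
            base_url,
            api_key: get("LLM_API_KEY"),
            model: get("LLM_MODEL"),
        })
    }
}

fn main() {
    let text = "\
# local server
LLM_BASE_URL=http://localhost:3928/v1/chat/completions
LLM_API_KEY=
LLM_MODEL=
";
    let config = Config::from_vars(&parse_dotenv(text)).expect("valid config");
    println!("{:?}", config);
}
```

Mapping empty values to `None` lets the same struct cover both the local setup (no key) and the managed services (key required by the provider).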

Using Managed LLM Services

OpenAI:

```
LLM_BASE_URL=https://api.openai.com/v1/chat/completions
LLM_API_KEY=
LLM_MODEL=gpt-3.5-turbo
```

Anthropic:

```
LLM_BASE_URL=https://api.anthropic.com/v1/messages
LLM_API_KEY=
LLM_MODEL=claude-3-5-sonnet-20240620
```
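The two managed endpoints above do not share a wire format: OpenAI's Chat Completions API takes the key as `Authorization: Bearer <key>`, while Anthropic's Messages API expects an `x-api-key` header, an `anthropic-version` header, and a required `max_tokens` field in the body. The std-only sketch below shows one way a client might branch on `LLM_BASE_URL`. Detecting the provider from the URL, the `build_request` and `Provider` names, and the `max_tokens` value of 1024 are all assumptions for illustration, not this project's implementation; the JSON is assembled by hand only to avoid external crates (a real client would use something like `serde_json`).

```rust
/// Which request shape to use (hypothetical; chosen by inspecting the URL).
#[derive(Debug, PartialEq)]
enum Provider {
    Anthropic,
    OpenAiCompatible, // OpenAI itself, or a local server exposing /v1/chat/completions
}

fn detect_provider(base_url: &str) -> Provider {
    if base_url.contains("api.anthropic.com") {
        Provider::Anthropic
    } else {
        Provider::OpenAiCompatible
    }
}

/// Minimal JSON string escaping (quotes, backslashes, control characters).
fn json_string(s: &str) -> String {
    let mut out = String::from("\"");
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out.push('"');
    out
}

/// Returns (headers, JSON body) for a single-turn user prompt.
fn build_request(
    base_url: &str,
    api_key: &str,
    model: &str,
    prompt: &str,
) -> (Vec<(String, String)>, String) {
    let messages = format!("[{{\"role\":\"user\",\"content\":{}}}]", json_string(prompt));
    let mut headers = vec![("content-type".to_string(), "application/json".to_string())];
    let body = match detect_provider(base_url) {
        Provider::Anthropic => {
            headers.push(("x-api-key".to_string(), api_key.to_string()));
            headers.push(("anthropic-version".to_string(), "2023-06-01".to_string()));
            // max_tokens is required by the Messages API; 1024 is an arbitrary choice.
            format!(
                "{{\"model\":{},\"max_tokens\":1024,\"messages\":{}}}",
                json_string(model),
                messages
            )
        }
        Provider::OpenAiCompatible => {
            // A local server may run without a key, so only send one if it is set.
            if !api_key.is_empty() {
                headers.push(("authorization".to_string(), format!("Bearer {}", api_key)));
            }
            format!("{{\"model\":{},\"messages\":{}}}", json_string(model), messages)
        }
    };
    (headers, body)
}

fn main() {
    let (headers, body) = build_request(
        "https://api.anthropic.com/v1/messages",
        "sk-example",
        "claude-3-5-sonnet-20240620",
        "Say \"hi\"",
    );
    for (name, value) in &headers {
        println!("{}: {}", name, value);
    }
    println!("{}", body);
}
```

Treating every non-Anthropic URL as OpenAI-compatible is what lets the local `/v1/chat/completions` endpoint and OpenAI share one code path in this sketch.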

License: MIT