Log Viewer is a Django-based application that provides a user-friendly log viewer for searching and filtering logs. It consists of a Log Ingestor for inserting logs from different services and a Query Interface for efficient log retrieval.


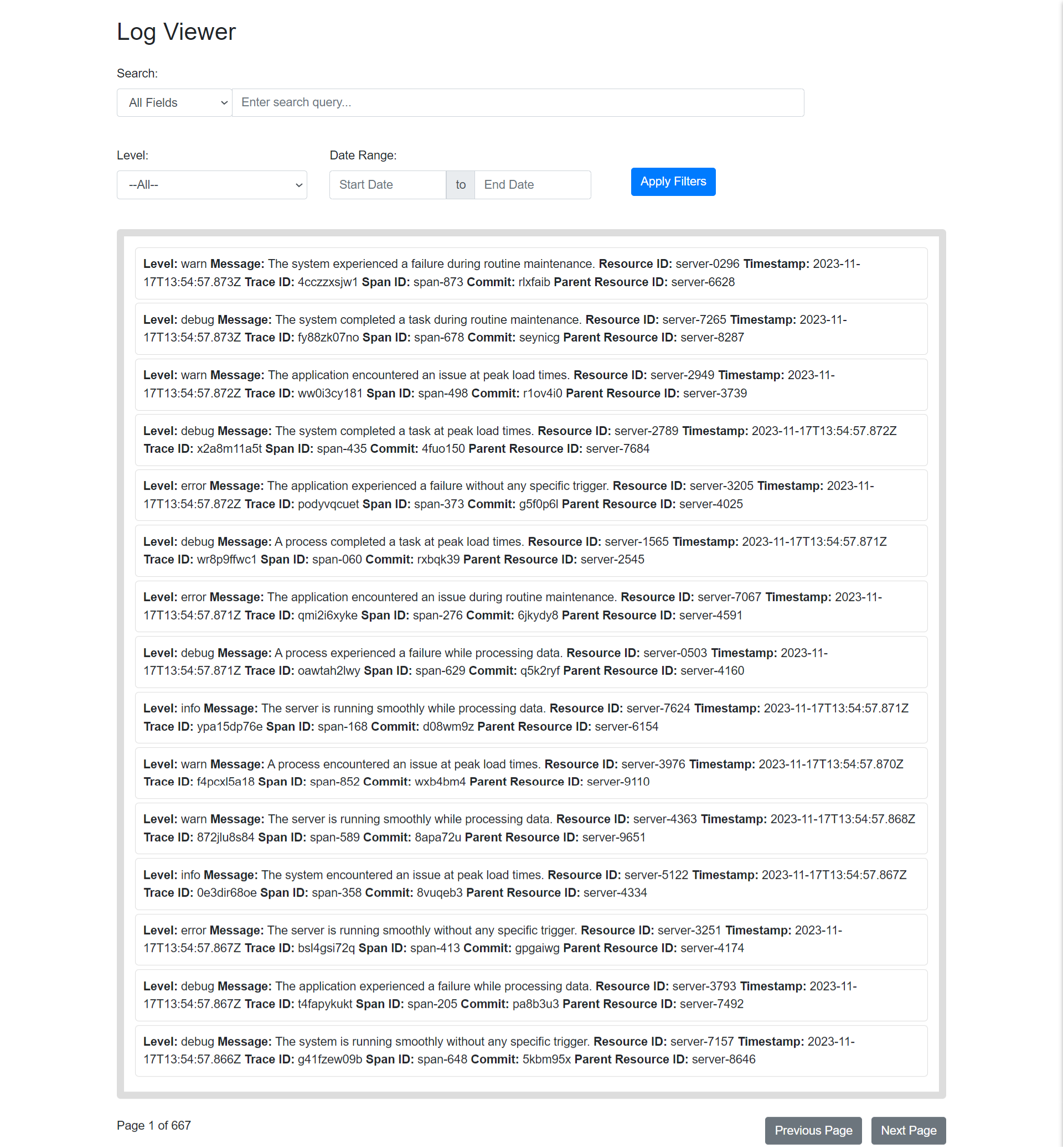

![Product Name Screen Shot][product-screenshot]

This log viewer has a simple, user-friendly UI for searching and filtering logs. For efficient log ingestion, the system uses a Kafka message queue and stores logs in a Postgres database. It uses batch insertion to reduce database load and latency.
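As a rough illustration of the batching idea, the sketch below buffers incoming log records and hands them to a writer in groups. `LogBatcher` and `flush_fn` are hypothetical names, not taken from this project; in a Django app the callback could wrap a bulk insert such as `bulk_create` on the log model.

```python
from typing import Callable, Dict, List


class LogBatcher:
    """Collect log records and pass them to flush_fn in batches (hypothetical helper)."""

    def __init__(self, flush_fn: Callable[[List[Dict]], None], batch_size: int = 100):
        if batch_size < 1:
            raise ValueError("batch_size must be at least 1")
        self.flush_fn = flush_fn
        self.batch_size = batch_size
        self._buffer: List[Dict] = []

    def add(self, record: Dict) -> None:
        """Buffer one record and flush once the batch is full."""
        self._buffer.append(record)
        if len(self._buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Write out whatever is buffered; does nothing if the buffer is empty."""
        if not self._buffer:
            return
        batch, self._buffer = self._buffer, []
        self.flush_fn(batch)


# Usage: seven records with batch_size=3 produce batches of 3, 3 and 1.
written: List[List[Dict]] = []
batcher = LogBatcher(written.append, batch_size=3)
for i in range(7):
    batcher.add({"message": f"log {i}"})
batcher.flush()  # flush the final partial batch
print([len(batch) for batch in written])  # prints [3, 3, 1]
```

A larger batch size means fewer database round trips, at the cost of records waiting longer in memory before they are written.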

- Python 3.x

- Django

- Kafka

- Bootstrap

- Postgres

- Docker

Clone the repo

git clone https://github.com/dyte-submissions/november-2023-hiring-acash-r.git

The Django project directory is named log_viewer. The project uses Python v3.11 and Django v4.x.

Create a Python virtual environment

- Install packages using pip

pip3 install -r requirements.txt

- Setup database

This requires you to install PostgreSQL and set your database name, username, password, host, and port in the .env file located inside the Django project directory.

Example:

DB_NAME="my_log_viewer"
DB_USERNAME="my_logadmin"
DB_PASSWORD="my_logs@12345"
DB_HOST="localhost"
DB_PORT=5433
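To show how these variables might be consumed, here is a minimal sketch that builds a Django-style `DATABASES` setting from them. The helper name `database_settings` is hypothetical, and the sketch assumes the .env values have already been loaded into the process environment; how this project actually loads them is not stated here.

```python
import os


def database_settings(env=os.environ):
    """Build a Django-style DATABASES dict from DB_* variables (hypothetical helper)."""
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": env["DB_NAME"],
            "USER": env["DB_USERNAME"],
            "PASSWORD": env["DB_PASSWORD"],
            # Fall back to common defaults when host or port are not set.
            "HOST": env.get("DB_HOST", "localhost"),
            "PORT": str(env.get("DB_PORT", "5432")),
        }
    }
```

In a settings module this could be used as `DATABASES = database_settings()`. A missing required variable raises `KeyError` at startup, which surfaces configuration mistakes early.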

- Now run the following commands to create and apply the database migrations. Run them inside the Django project directory log_viewer, which contains the manage.py file.

python3 manage.py makemigrations

python3 manage.py migrate

- Install Docker and run Docker Compose

docker compose -f docker_compose.yml up -d

This command starts the Docker containers.

- Ensure that the app_logs folder exists in the log_viewer project directory; it is used to store app logs.

- Run the Django server from the Django project directory

python manage.py startserver

This starts the server on port 3000 by default.

- The log viewer and the log ingestion endpoint both run on port 3000.

- To run the server on another port, use the following command:

python manage.py runserver 8001

- Run the log consumer in another terminal. This command runs a Kafka consumer that picks logs from the Kafka broker and stores them in the Postgres database.

python manage.py runconsumer

- You don't need to run the log ingestor separately, as the endpoint that consumes logs is part of the same project that was started earlier with this command:

python manage.py startserver

- Start the Log Ingestor by running python manage.py startserver, then send POST requests to http://localhost:3000/ingest-logs/

curl -X POST \
  'http://localhost:3000/ingest-logs/' \
  --header 'Accept: */*' \
  --header 'User-Agent: Thunder Client (https://www.thunderclient.com)' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "level": "warn",
    "message": "The server is running smoothly during routine maintenance.",
    "resourceId": "server-1491",
    "timestamp": "2023-11-05T13:54:57.797882Z",
    "traceId": "h830f0kd7q",
    "spanId": "span-532",
    "commit": "mc11wbf",
    "metadata": {
      "parentResourceId": "server-3518"
    }
  }'
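The same request can be sent from Python using only the standard library. This is a sketch, not part of the project: `build_ingest_request` and `send_log` are hypothetical helper names, and the success status returned by the endpoint is not documented here, so the code simply returns whatever status comes back.

```python
import json
import urllib.request

INGEST_URL = "http://localhost:3000/ingest-logs/"  # endpoint named in this README


def build_ingest_request(log: dict, url: str = INGEST_URL) -> urllib.request.Request:
    """Prepare a JSON POST request for one log record (hypothetical helper)."""
    body = json.dumps(log).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_log(log: dict, url: str = INGEST_URL, timeout: float = 5.0) -> int:
    """Send one log record and return the HTTP status code."""
    with urllib.request.urlopen(build_ingest_request(log, url), timeout=timeout) as resp:
        return resp.status
```

With the server running, calling `send_log({"level": "warn", "message": "test"})` posts a single record to the ingest endpoint.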

Access the Query Interface at http://localhost:3000 to search and filter logs using the provided interface.

- If data arrives at a high rate, increasing batch_size in the queue_listener.py file may help.

- Efficient log ingestion mechanism that scales to high volumes of logs.

- Batch insertion to reduce db load and potentially reduce latency.

- Scalable architecture for handling high volumes of logs.

- Runs on port 3000 by default

- User-friendly Web UI for log search and filtering.

- Filter logs based on various parameters such as level, message, resourceId, timestamp, traceId, spanId, commit, metadata.parentResourceId.

- Quick and efficient search results.

- Search logs within a date range.

- Provide real-time log ingestion and searching capabilities.
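One way the filtering described above could be expressed is by mapping query parameters to Django ORM lookups. The sketch below is illustrative only: the model field names (`resource_id`, `trace_id`, and so on), the `start` and `end` parameter names, and the `build_filters` helper are all assumptions, not taken from this project.

```python
from typing import Dict, Mapping

# Query parameter -> ORM lookup. The field names on the right are assumed.
FIELD_LOOKUPS = {
    "level": "level",
    "message": "message__icontains",
    "resourceId": "resource_id",
    "traceId": "trace_id",
    "spanId": "span_id",
    "commit": "commit",
    "metadata.parentResourceId": "metadata__parentResourceId",
}


def build_filters(params: Mapping[str, str]) -> Dict[str, str]:
    """Turn non-empty query parameters into keyword filters (hypothetical helper)."""
    filters: Dict[str, str] = {}
    for name, lookup in FIELD_LOOKUPS.items():
        value = params.get(name, "").strip()
        if value:
            filters[lookup] = value
    # Optional date range bounds; "start" and "end" are assumed parameter names.
    start = params.get("start", "").strip()
    end = params.get("end", "").strip()
    if start:
        filters["timestamp__gte"] = start
    if end:
        filters["timestamp__lte"] = end
    return filters
```

The resulting dict could be passed to a queryset as `Log.objects.filter(**filters)`, where `Log` stands in for whatever model the project defines. Empty or whitespace-only parameters are skipped so that blank form fields do not narrow the search.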


- Implement ElasticSearch for faster search

- ElasticSearch supports regular expression searching

- Make batch size, broker URL, and other settings configurable from the .env file

- To generate log data, you can use the script generate_dummy_logs.py

- It sends POST requests to the server and populates the database with dummy data

- To run it, use this command:

python generate_dummy_logs.py

- dummy_logs.log is a sample log file

- You can use populate_logs_from_log_file if you have a log file
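For readers who want to produce test records of their own, the following sketch generates a random log entry in the same shape as the curl example. It is not the content of generate_dummy_logs.py; the list of levels, the ID formats, and the function names are assumptions made for illustration.

```python
import random
import string
from datetime import datetime, timezone

LEVELS = ["debug", "info", "warn", "error"]  # assumed set of log levels


def random_id(length: int = 10) -> str:
    """Return a random lowercase alphanumeric identifier."""
    return "".join(random.choices(string.ascii_lowercase + string.digits, k=length))


def make_dummy_log() -> dict:
    """Create one log record with the fields used by the ingest endpoint."""
    return {
        "level": random.choice(LEVELS),
        "message": "Dummy log message",
        "resourceId": f"server-{random.randint(1000, 9999)}",
        # UTC timestamp in ISO 8601 form with a trailing "Z".
        "timestamp": datetime.now(timezone.utc).isoformat().replace("+00:00", "Z"),
        "traceId": random_id(),
        "spanId": f"span-{random.randint(100, 999)}",
        "commit": random_id(7),
        "metadata": {"parentResourceId": f"server-{random.randint(1000, 9999)}"},
    }
```

Each call returns a fresh record that can be serialized with `json.dumps` and posted to the ingest endpoint.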

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

If you have a suggestion that would make this better, please fork the repo and create a pull request. You can also simply open an issue with the tag "enhancement". Don't forget to give the project a star! Thanks again!

- Fork the Project

- Create your Feature Branch (git checkout -b feature/AmazingFeature)

- Commit your Changes (git commit -m 'Add some AmazingFeature')

- Push to the Branch (git push origin feature/AmazingFeature)

- Open a Pull Request

Distributed under the MIT License. See LICENSE.txt for more information.

Akash Raj - @twitter_handle - akashraj7713@gmail.com

Project Link: https://github.com/akashraj98/