Train VAE, DCGAN and RealNVP generative models on MNIST and use them to generate handwritten digits.

The following generative models are included, with their configurations in `config.ini`:

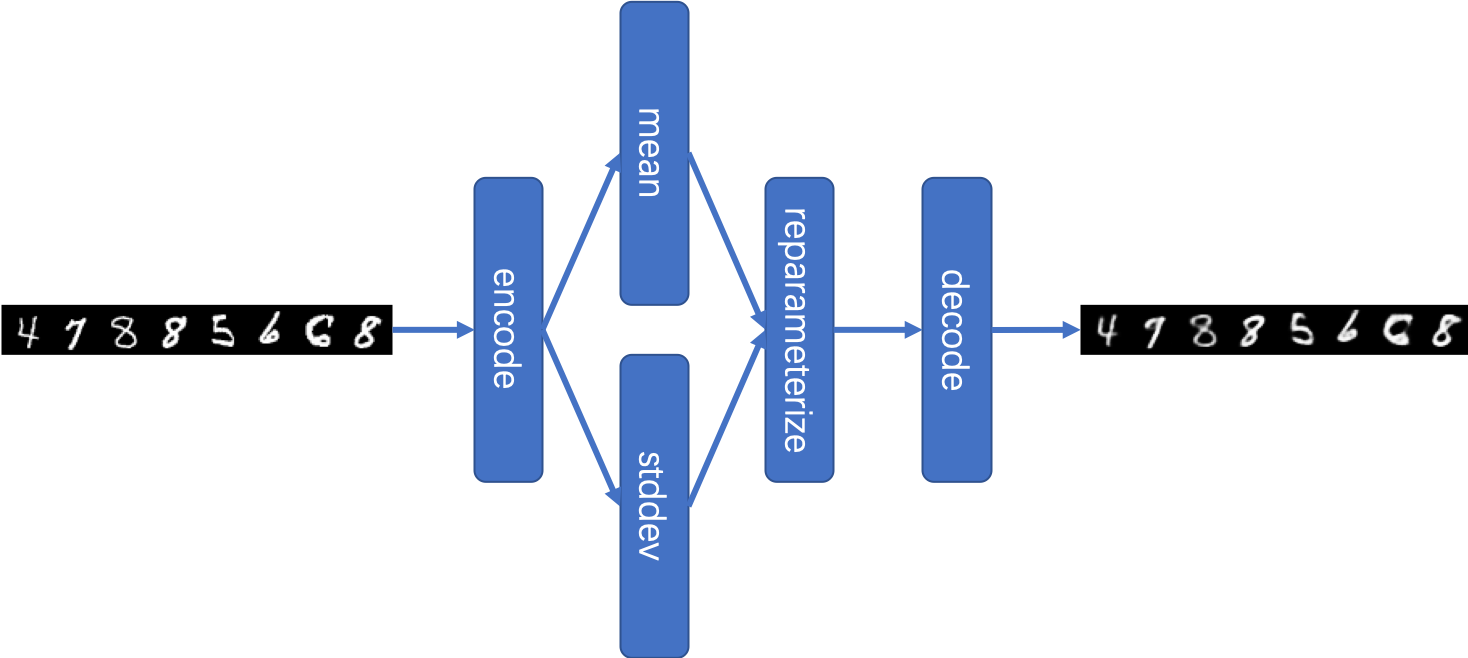

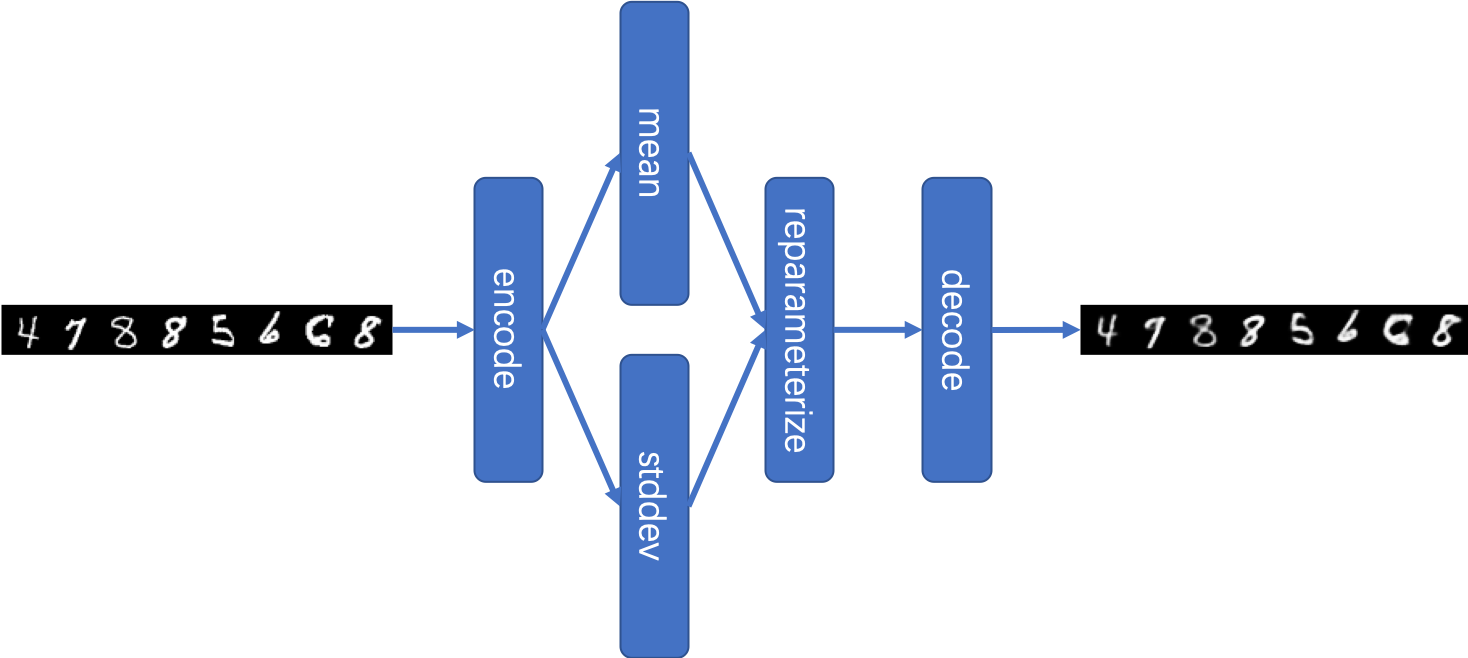

- VAE (Variational Auto-Encoder) in `vae.py`
- DCGAN (Deep Convolutional Generative Adversarial Networks) in `dcgan.py`
- RealNVP (Real-valued Non-Volume Preserving) in `realnvp.py`
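Each model reads its settings from `config.ini`. A minimal sketch of loading such a file with Python's standard `configparser`, assuming one section per model; the section name `dcgan` and the keys `epochs`, `nz` and `lr` are hypothetical and may not match the repository's actual file.

```python
import configparser

# Hypothetical config.ini layout: one section per model.
# The real section and key names in this repository may differ.
EXAMPLE_INI = """
[dcgan]
epochs = 64
nz = 100
lr = 0.0002
"""

def load_model_config(text: str, model: str) -> dict:
    """Parse INI text and return typed settings for one model section."""
    parser = configparser.ConfigParser()
    parser.read_string(text)  # use parser.read("config.ini") for a real file
    section = parser[model]
    return {
        "epochs": section.getint("epochs"),
        "nz": section.getint("nz"),
        "lr": section.getfloat("lr"),
    }

cfg = load_model_config(EXAMPLE_INI, "dcgan")
print(cfg)  # {'epochs': 64, 'nz': 100, 'lr': 0.0002}
```

Typed getters such as `getint` and `getfloat` keep the conversion from strings in one place instead of scattering `int(...)` calls through the training scripts.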

Install the required packages with:

```bash
pip install -r requirements.txt
```

| MNIST | Train | Dev | Test |
| :---: | :---: | :---: | :---: |
| Amount | 48000 | 12000 | 10000 |
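Train and dev together add up to the 60000 images of the official MNIST training set, so the dev set appears to be a 20% hold-out from it, while the test set is the official 10000-image test set. A sketch of such a seeded random split; whether the repository shuffles before splitting (rather than taking the last 12000 images) is an assumption.

```python
import random

MNIST_TRAIN_SIZE = 60000  # official MNIST training set
MNIST_TEST_SIZE = 10000   # official MNIST test set
DEV_FRACTION = 0.2        # assumption: 20% of the training set held out as dev

def split_indices(n: int, dev_fraction: float, seed: int = 0):
    """Shuffle indices 0..n-1 with a fixed seed and cut them into train/dev."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_dev = round(n * dev_fraction)
    return indices[n_dev:], indices[:n_dev]

train_idx, dev_idx = split_indices(MNIST_TRAIN_SIZE, DEV_FRACTION)
print(len(train_idx), len(dev_idx), MNIST_TEST_SIZE)  # 48000 12000 10000
```

With PyTorch, `torch.utils.data.random_split(dataset, [48000, 12000])` performs an equivalent split directly on a `Dataset`.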

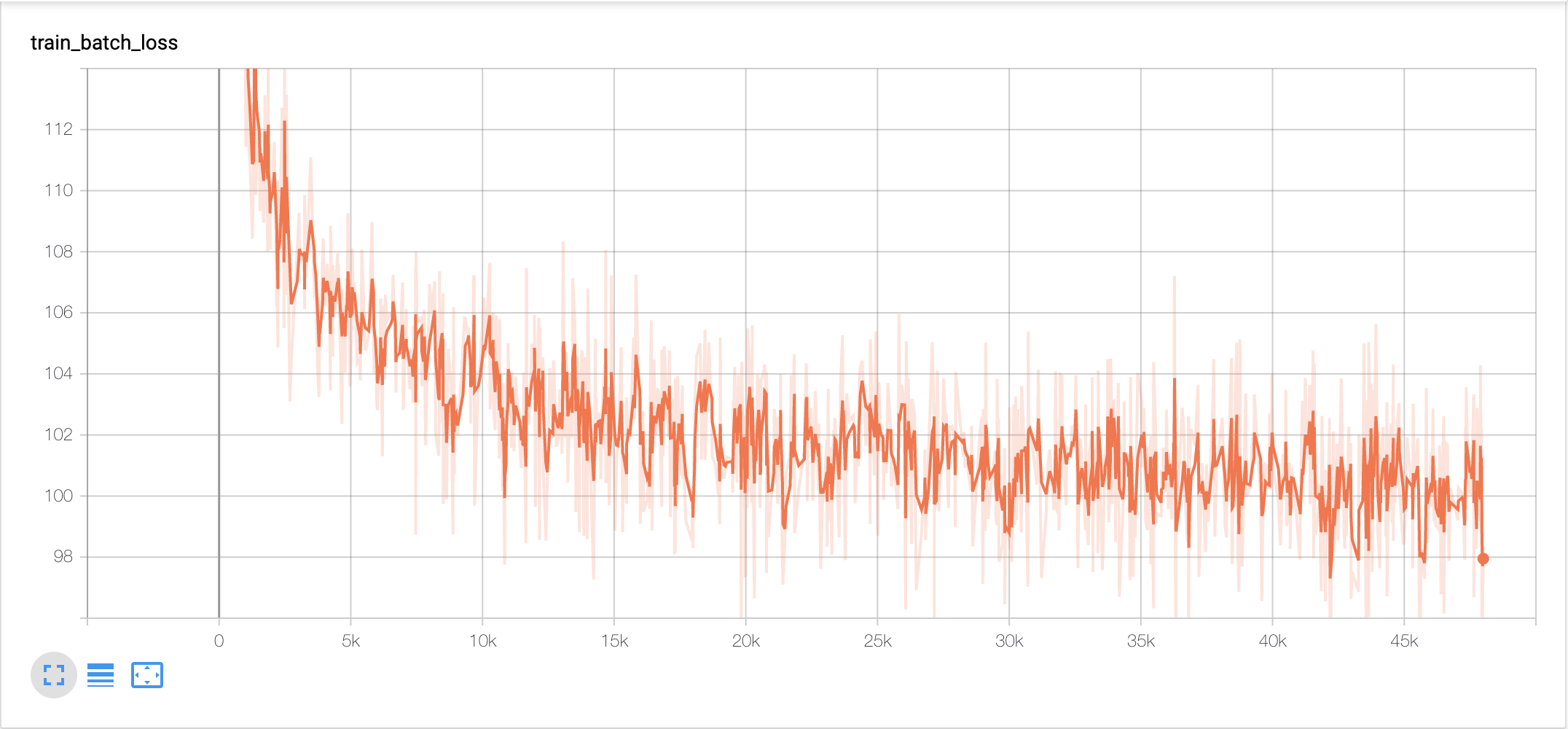

Monitor VAE training with TensorBoard:

```bash
tensorboard --logdir=log/vae/
```

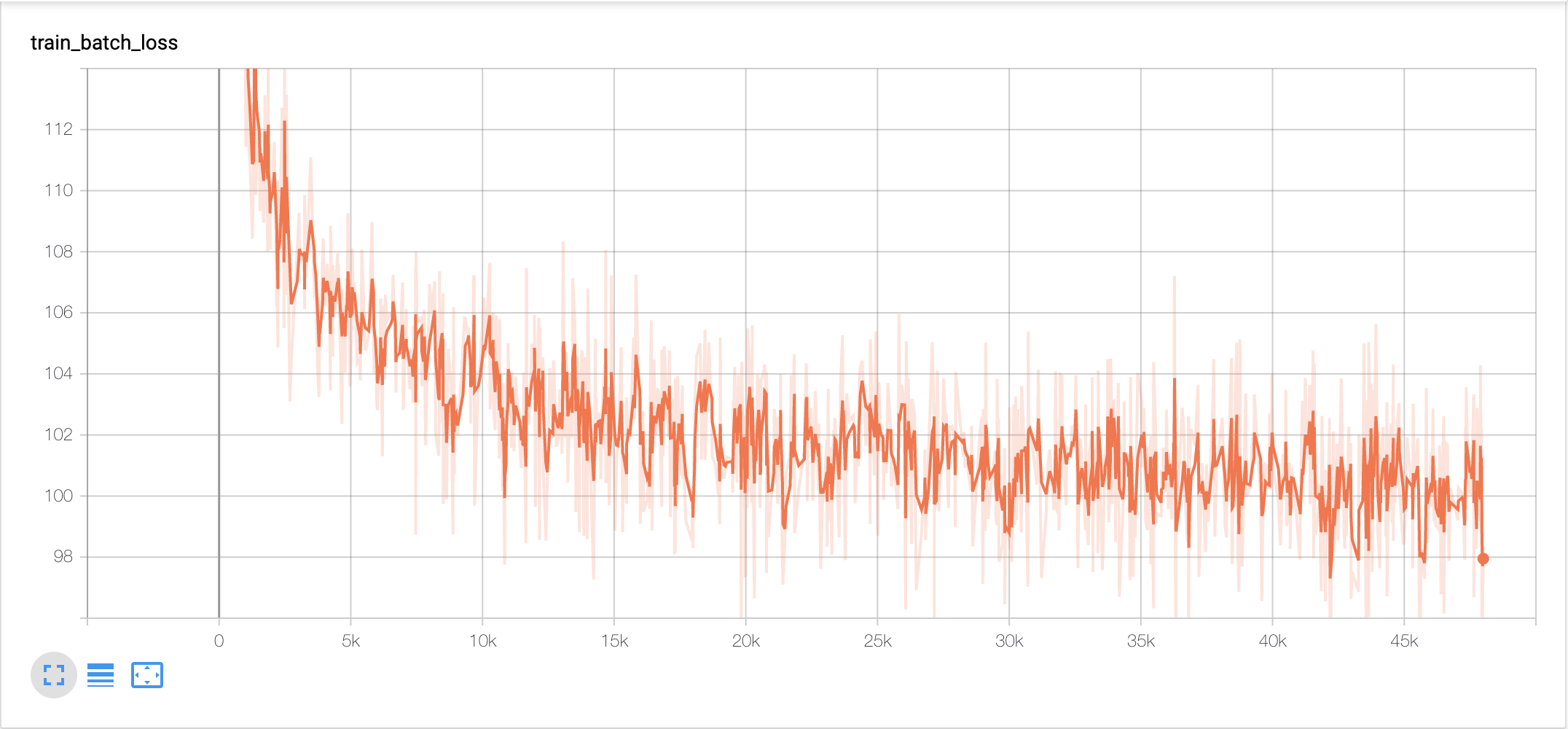

| Train Batch Loss |

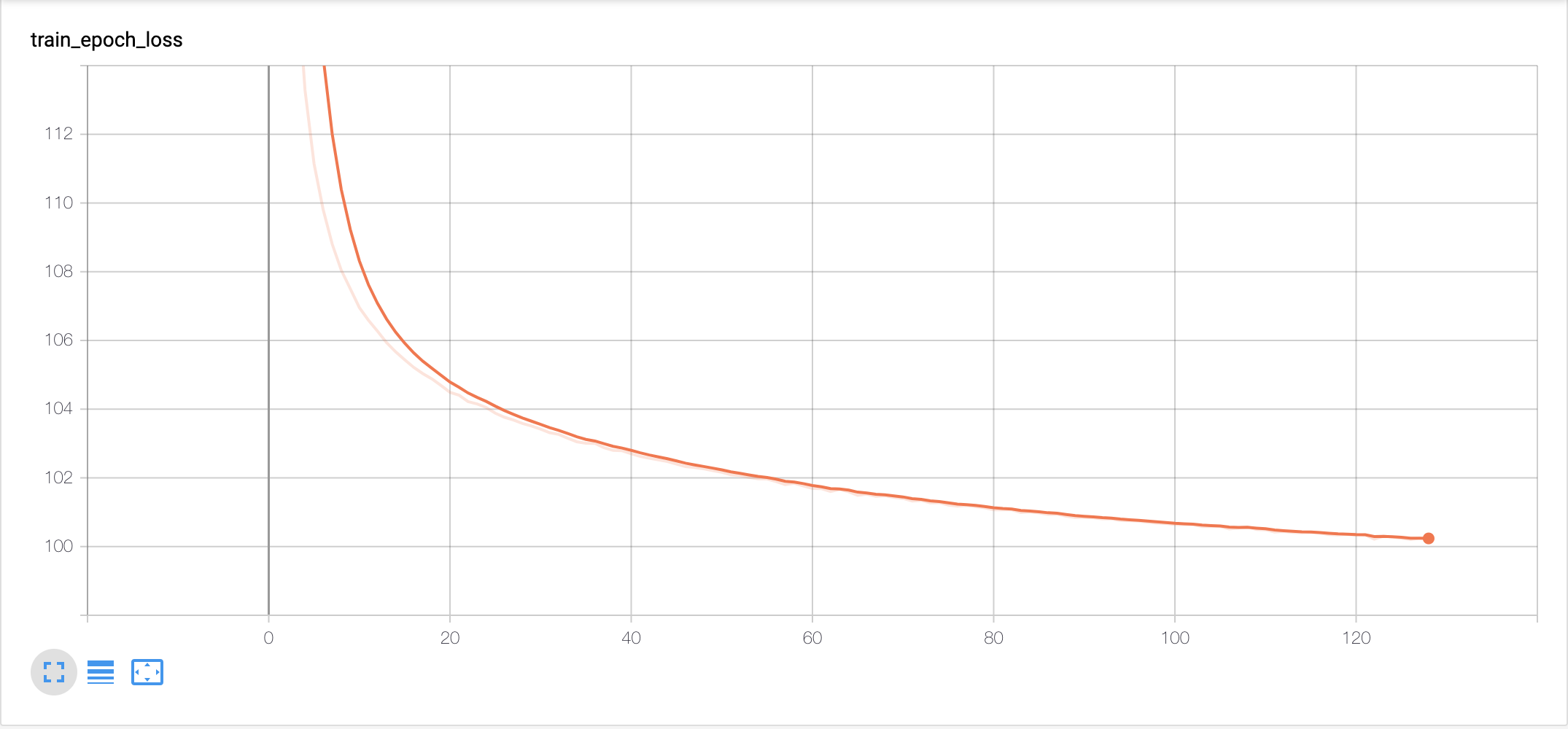

Train Epoch Loss |

|

|

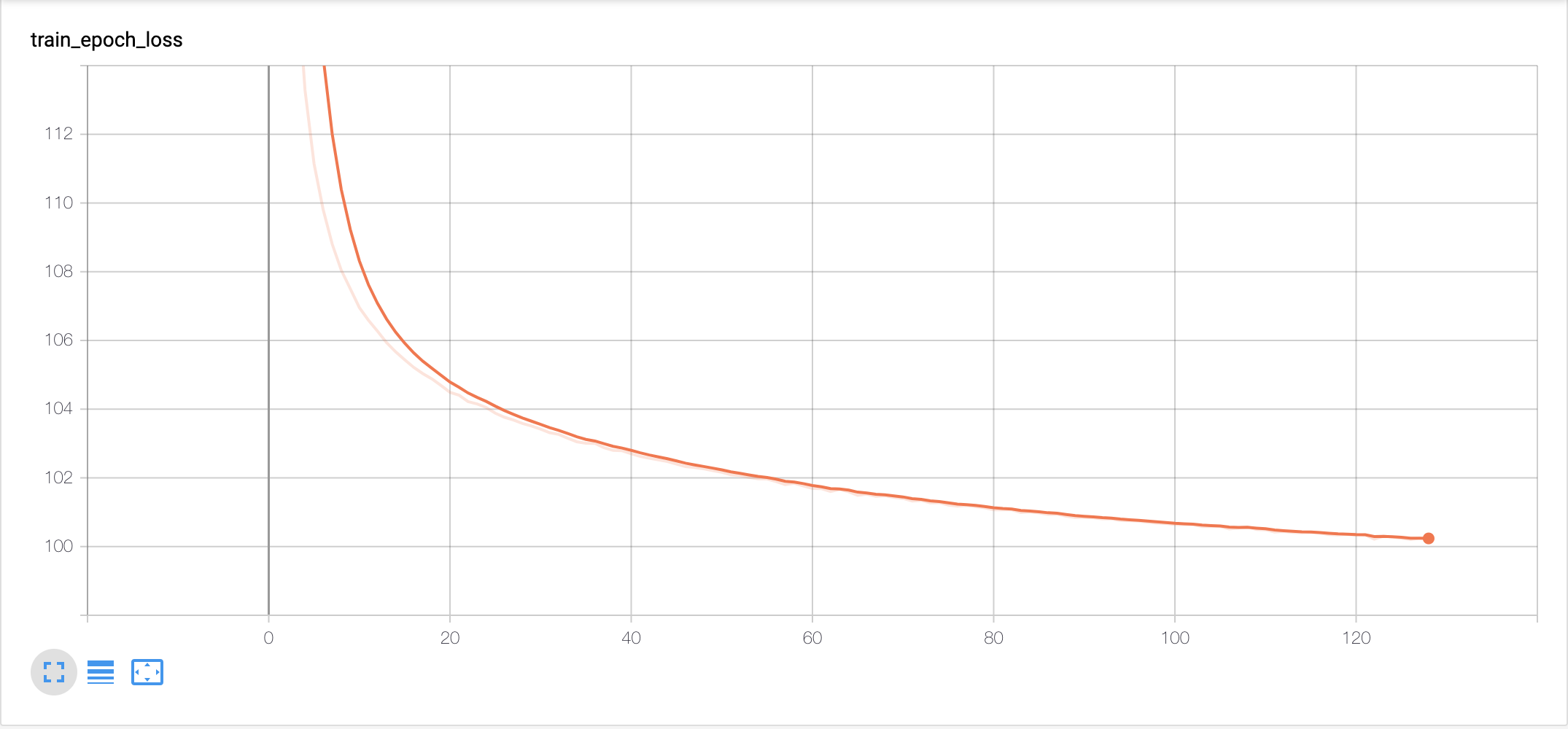

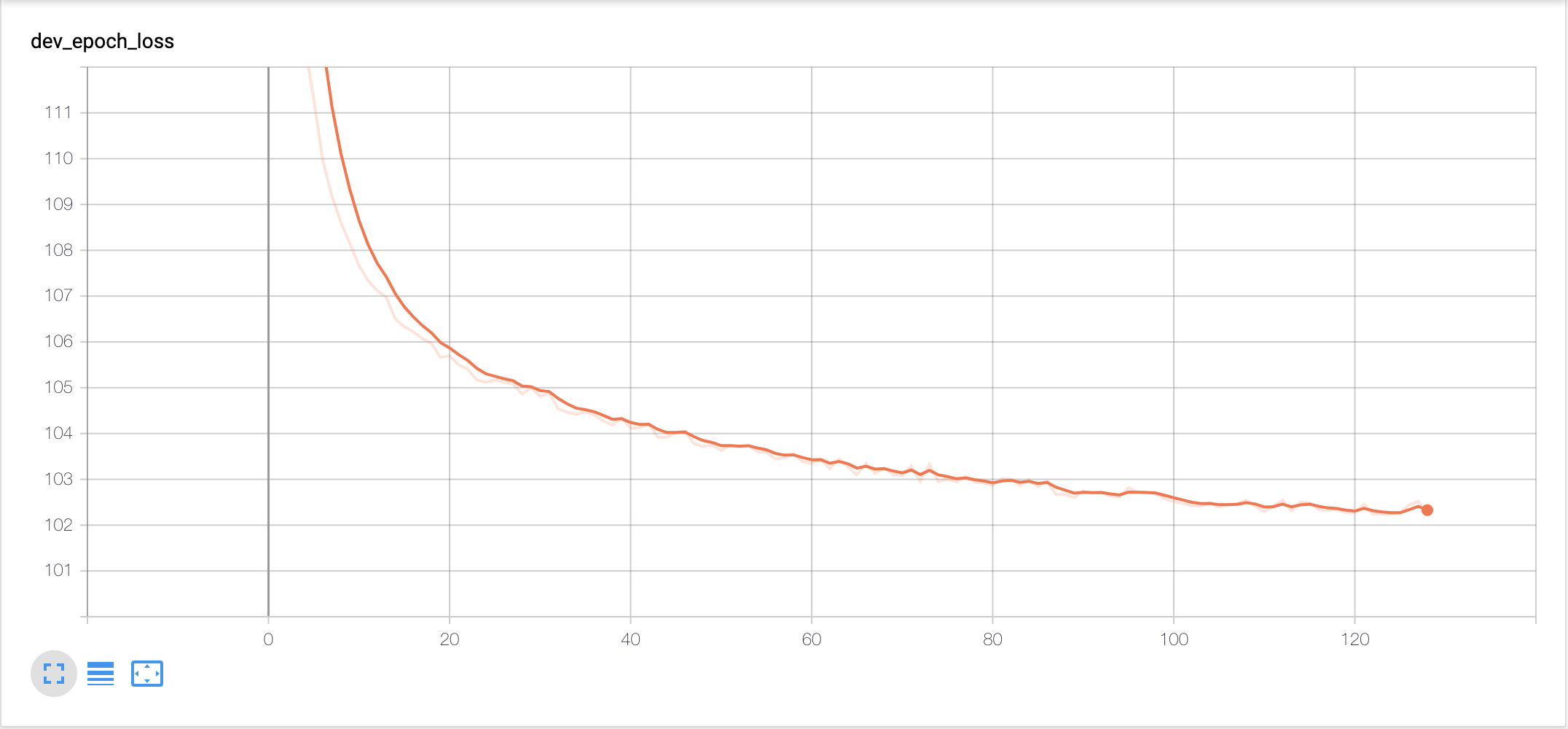

The dev loss is lowest at epoch 62; the test loss is 102.0952.
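A VAE is usually trained by minimizing the negative evidence lower bound: a reconstruction term plus the KL divergence between the encoder's Gaussian and a standard normal prior, with samples drawn through the reparameterization trick. The sketch writes this out in plain Python for one sample, in the form used by the VAE example in pytorch/examples (summed binary cross-entropy plus closed-form KL); that `vae.py` computes its reported losses exactly this way is an assumption.

```python
import math

def reparameterize(mu, logvar, eps):
    """z = mu + sigma * eps, sigma = exp(0.5 * logvar); eps ~ N(0, I) is supplied by the caller."""
    return [m + math.exp(0.5 * lv) * e for m, lv, e in zip(mu, logvar, eps)]

def kl_to_standard_normal(mu, logvar):
    """Closed form of KL(N(mu, diag(exp(logvar))) || N(0, I))."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))

def binary_cross_entropy(recon, target, eps=1e-12):
    """Summed BCE between decoder probabilities and pixel intensities in [0, 1]."""
    total = 0.0
    for p, x in zip(recon, target):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total -= x * math.log(p) + (1 - x) * math.log(1 - p)
    return total

def negative_elbo(recon, target, mu, logvar):
    """Per-sample VAE loss: reconstruction BCE plus KL divergence."""
    return binary_cross_entropy(recon, target) + kl_to_standard_normal(mu, logvar)

# Two-pixel toy example with a posterior equal to the prior (KL = 0).
print(negative_elbo([0.5, 0.5], [1.0, 0.0], [0.0, 0.0], [0.0, 0.0]))  # about 2 ln 2 = 1.386
```

The KL term vanishes only when the encoder outputs `mu = 0` and `logvar = 0`; any deviation from the prior is penalized, which is what keeps samples from the prior decodable into digits.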

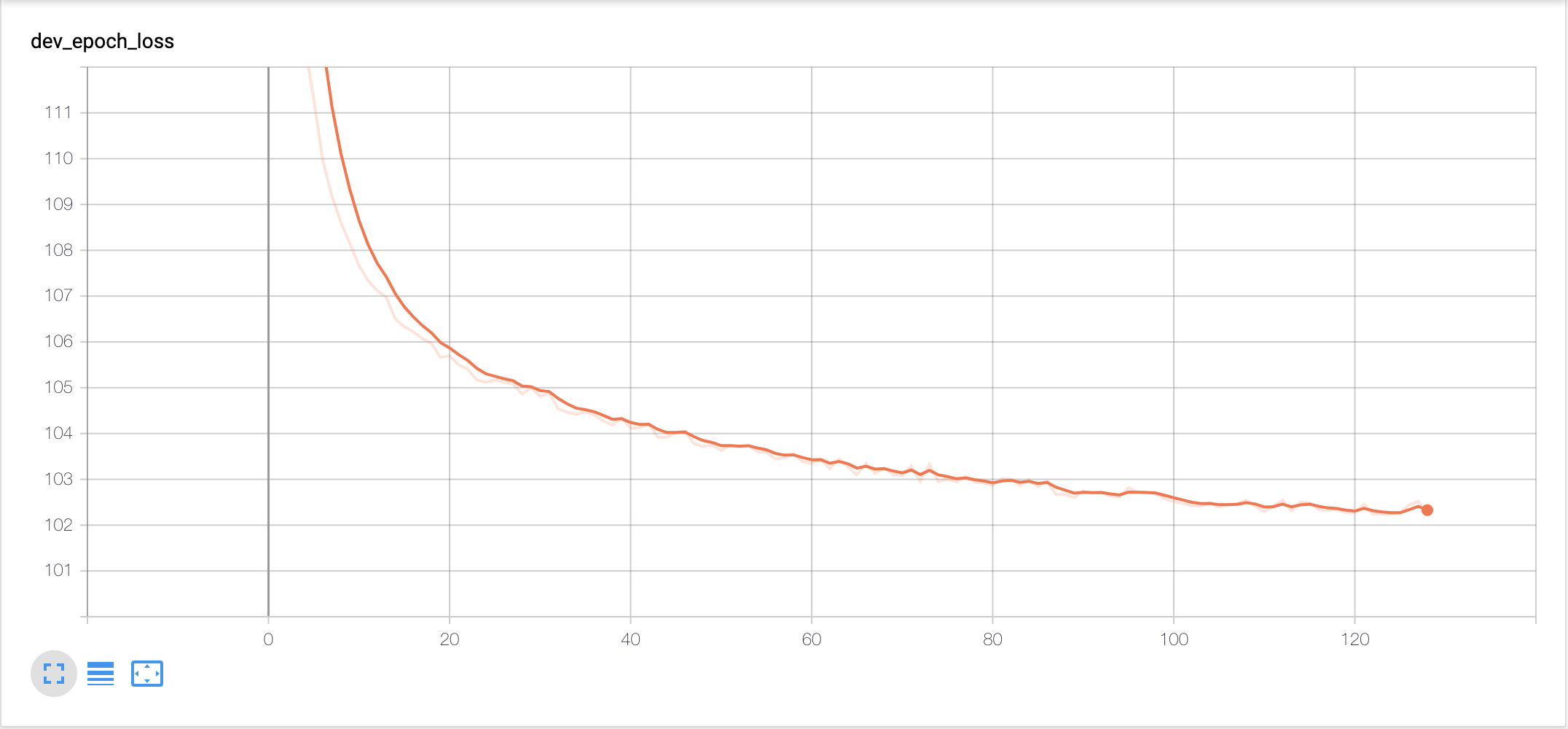

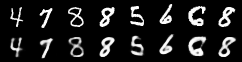

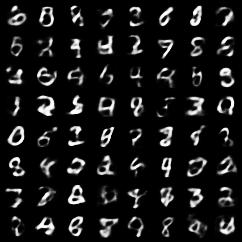

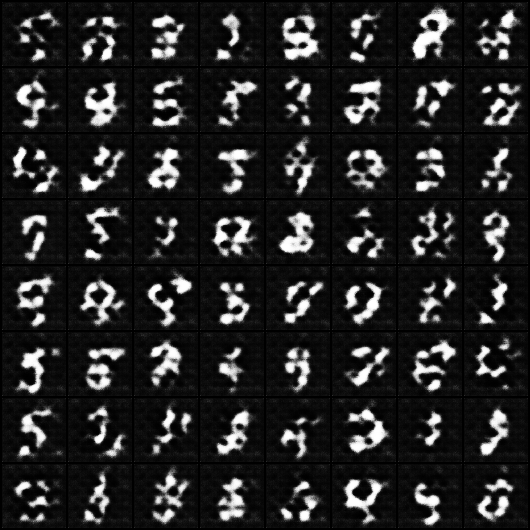

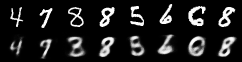

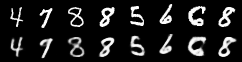

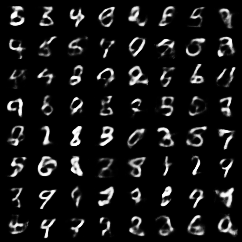

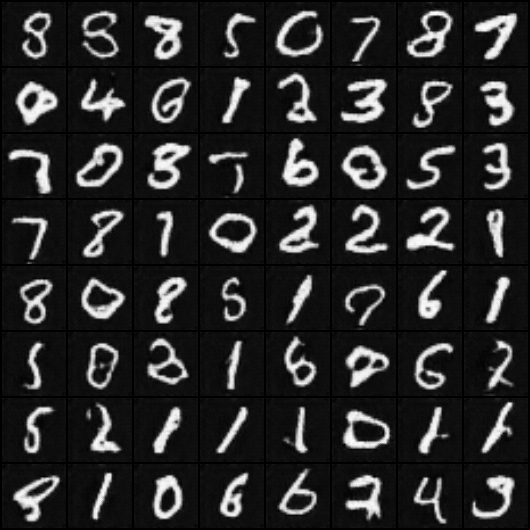

Epochs 1, 10 and 20

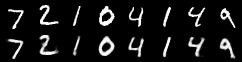

Epochs 30, 40 and 50

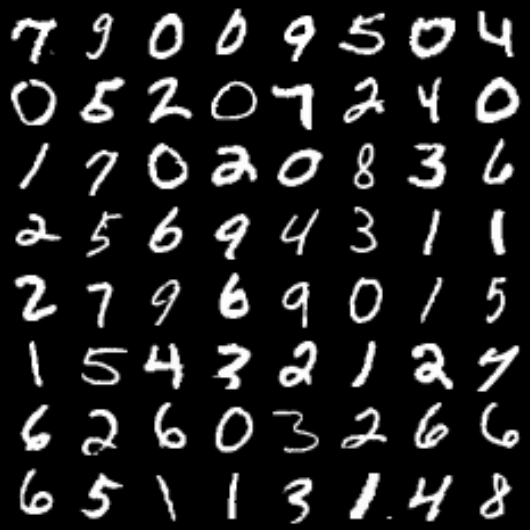

Epoch 64 and test result

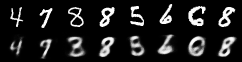

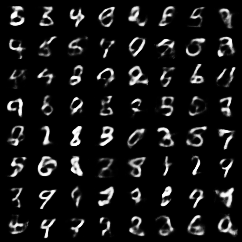

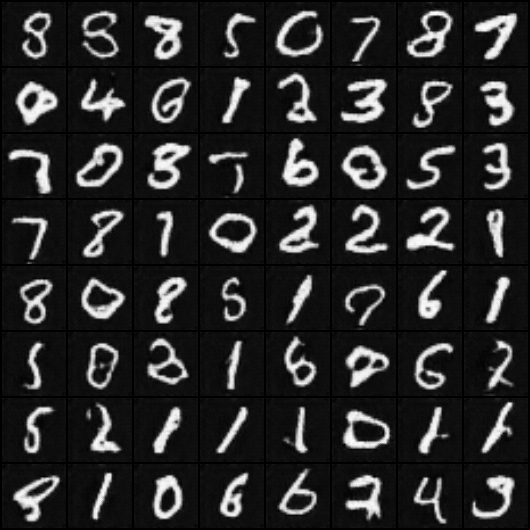

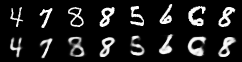

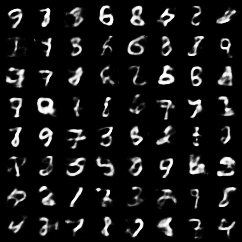

Epochs 1, 10, 20, 30, 40, 50 and 64

References:

- Auto-Encoding Variational Bayes
- VAE in pytorch/examples

```
Generator(
  (main): Sequential(
    (0): ConvTranspose2d(100, 512, kernel_size=(4, 4), stride=(1, 1), bias=False)
    (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU(inplace)
    (3): ConvTranspose2d(512, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (5): ReLU(inplace)
    (6): ConvTranspose2d(256, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (7): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (8): ReLU(inplace)
    (9): ConvTranspose2d(128, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (10): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (11): ReLU(inplace)
    (12): ConvTranspose2d(64, 1, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (13): Tanh()
  )
)
```
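The generator's size can be checked by hand from the printed layers. A sketch that relies only on what the printout shows: every `ConvTranspose2d` has `bias=False`, and every `BatchNorm2d` has `affine=True`, so it learns one scale and one shift per channel (its running statistics are buffers, not parameters).

```python
# (in_channels, out_channels, kernel_size) of each bias-free ConvTranspose2d,
# transcribed from the Generator printout.
CONV_T_LAYERS = [(100, 512, 4), (512, 256, 4), (256, 128, 4), (128, 64, 4), (64, 1, 4)]
# Channel counts of the affine BatchNorm2d layers.
BATCHNORM_CHANNELS = [512, 256, 128, 64]

def conv_transpose_params(c_in, c_out, k, bias=False):
    """ConvTranspose2d weight has shape (c_in, c_out, k, k); a bias would add c_out."""
    return c_in * c_out * k * k + (c_out if bias else 0)

def batchnorm_params(c):
    """Affine BatchNorm2d learns a weight and a bias per channel."""
    return 2 * c

conv_total = sum(conv_transpose_params(*layer) for layer in CONV_T_LAYERS)
bn_total = sum(batchnorm_params(c) for c in BATCHNORM_CHANNELS)
print(conv_total, bn_total, conv_total + bn_total)  # 3572736 1920 3574656
```

With PyTorch installed, `sum(p.numel() for p in netG.parameters())` on the instantiated generator (`netG` is a hypothetical variable name) should report the same total. Most of the weight sits in the first two layers.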

```
Discriminator(
  (main): Sequential(
    (0): Conv2d(1, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (1): LeakyReLU(negative_slope=0.2, inplace)
    (2): Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (3): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (4): LeakyReLU(negative_slope=0.2, inplace)
    (5): Conv2d(128, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (6): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (7): LeakyReLU(negative_slope=0.2, inplace)
    (8): Conv2d(256, 512, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (9): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (10): LeakyReLU(negative_slope=0.2, inplace)
    (11): Conv2d(512, 1, kernel_size=(4, 4), stride=(1, 1), bias=False)
    (12): Sigmoid()
  )
)
```
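The printed kernel sizes, strides and paddings fix the image size at every stage. The sketch applies the standard output-size formulas for `Conv2d` and `ConvTranspose2d` (dilation 1, no output padding) to both networks.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Conv2d output size with dilation 1: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

def conv_transpose_out(size, kernel, stride=1, padding=0, output_padding=0):
    """ConvTranspose2d output size with dilation 1: (size - 1) * s - 2p + k + output_padding."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding

# (kernel, stride, padding) per layer, transcribed from the Generator and Discriminator printouts.
GEN_LAYERS = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]
DISC_LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]

def trace(size, layers, out_fn):
    """Return the spatial size before the first layer and after each layer."""
    sizes = [size]
    for kernel, stride, padding in layers:
        size = out_fn(size, kernel, stride, padding)
        sizes.append(size)
    return sizes

print(trace(1, GEN_LAYERS, conv_transpose_out))  # [1, 4, 8, 16, 32, 64]
print(trace(64, DISC_LAYERS, conv_out))          # [64, 32, 16, 8, 4, 1]
```

The generator turns a 100-channel 1x1 noise tensor into a 64x64 image, and the discriminator reduces a 64x64 image to one probability. The 28x28 MNIST digits are therefore presumably resized to 64x64 before training, as the DCGAN example in pytorch/examples does with its default image size; this is inferred from the shapes, not stated in this README.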

Monitor DCGAN training with TensorBoard:

```bash
tensorboard --logdir=log/dcgan/
```
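The discriminator ends in a `Sigmoid`, which fits the binary cross-entropy objective used by the DCGAN example in pytorch/examples. A sketch of both losses for single probabilities, assuming `dcgan.py` follows that example; in training these terms are averaged over a batch.

```python
import math

def bce(p, target, eps=1e-12):
    """Binary cross-entropy for one Sigmoid output p and a 0/1 label."""
    p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))

def discriminator_loss(d_real, d_fake):
    """D is pushed to output 1 on real images and 0 on generated ones."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)

def generator_loss(d_fake):
    """Non-saturating loss: G is pushed to make D label its samples as real."""
    return bce(d_fake, 1.0)

# Hypothetical discriminator outputs for one real and one generated image.
print(round(discriminator_loss(0.9, 0.2), 4))  # 0.3285
print(round(generator_loss(0.2), 4))           # 1.6094
```

Labeling generated images as real in the generator step, instead of minimizing log(1 - D(G(z))), is the non-saturating variant from the original GAN paper; it gives the generator stronger gradients early on, when the discriminator rejects its samples easily. When D outputs 0.5 for everything, the discriminator loss equals 2 ln 2 (about 1.386), a useful reference line when reading the loss curves.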

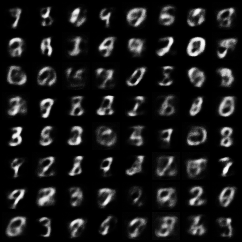

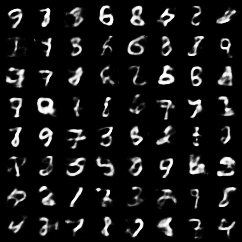

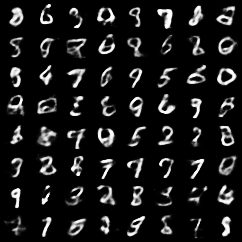

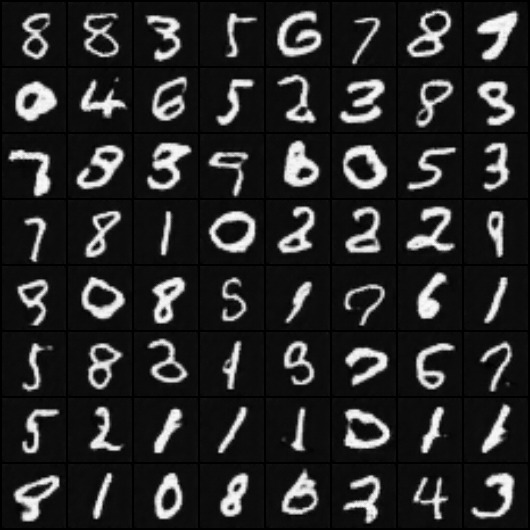

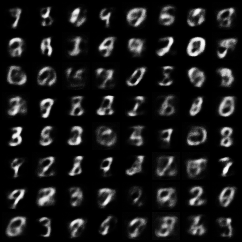

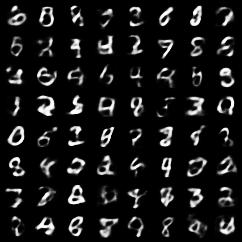

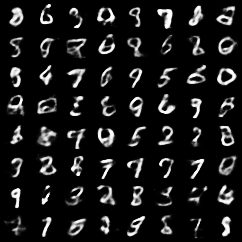

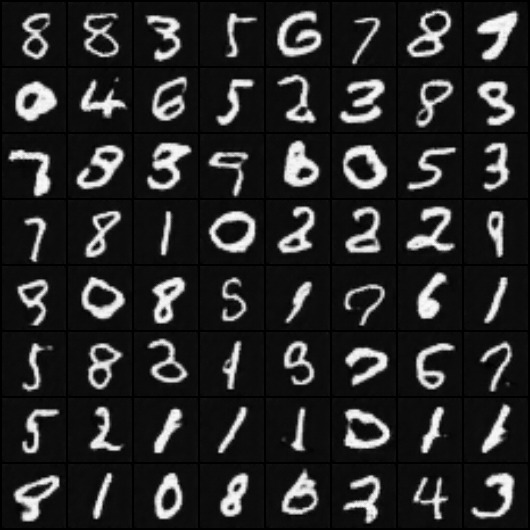

Epochs 1, 10, 20, 30, 40, 50 and 64

Reference: DCGAN in pytorch/examples

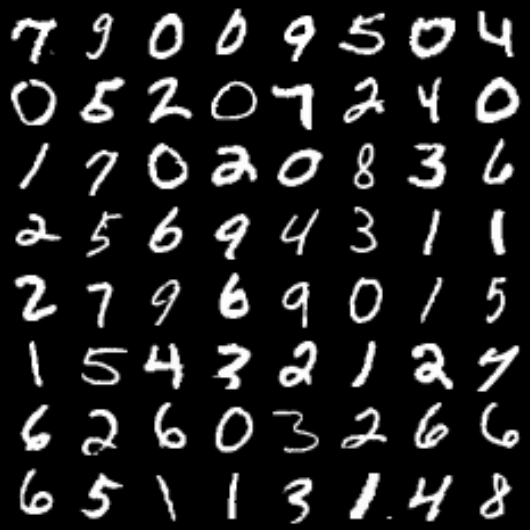

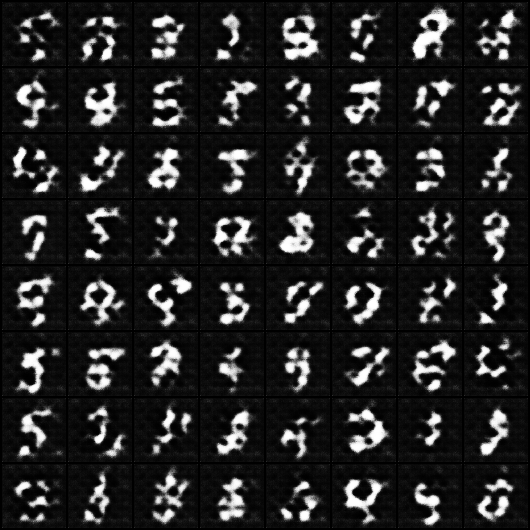

Monitor RealNVP training with TensorBoard:

```bash
tensorboard --logdir=log/realnvp/
```
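RealNVP builds an invertible map between images and Gaussian noise from affine coupling layers, which makes the exact log-likelihood tractable. A minimal sketch of one coupling layer and the change-of-variables formula in plain Python; the functions `s` and `t` are hypothetical stand-ins for the scale and translation networks, and `realnvp.py` may organize this differently (masking pattern, multi-scale architecture, data preprocessing).

```python
import math

# Hypothetical scale and translation functions. In RealNVP these are neural
# networks; fixed elementwise functions keep this example self-contained.
def s(x1):
    return [0.5 * math.tanh(v) for v in x1]

def t(x1):
    return [0.1 * v for v in x1]

def coupling_forward(x):
    """y1 = x1, y2 = x2 * exp(s(x1)) + t(x1); returns (y, log|det J|)."""
    assert len(x) % 2 == 0, "this sketch splits the input into equal halves"
    d = len(x) // 2
    x1, x2 = x[:d], x[d:]
    scale, shift = s(x1), t(x1)
    y2 = [b * math.exp(sc) + sh for b, sc, sh in zip(x2, scale, shift)]
    log_det = sum(scale)  # triangular Jacobian: log|det| is the sum of s(x1)
    return x1 + y2, log_det

def coupling_inverse(y):
    """x1 = y1, x2 = (y2 - t(y1)) * exp(-s(y1)); s and t are never inverted."""
    d = len(y) // 2
    y1, y2 = y[:d], y[d:]
    scale, shift = s(y1), t(y1)
    x2 = [(b - sh) * math.exp(-sc) for b, sc, sh in zip(y2, scale, shift)]
    return y1 + x2

def standard_normal_logpdf(z):
    return sum(-0.5 * (v * v + math.log(2 * math.pi)) for v in z)

def log_likelihood(x):
    """Change of variables: log p(x) = log p(z) + log|det dz/dx|."""
    z, log_det = coupling_forward(x)
    return standard_normal_logpdf(z) + log_det

x = [0.3, -1.2, 0.7, 2.0]
y, log_det = coupling_forward(x)
x_rec = coupling_inverse(y)
print(max(abs(a - b) for a, b in zip(x, x_rec)))  # close to 0: the layer is invertible
print(log_likelihood(x))
```

Because the first half passes through unchanged, the inverse never needs to invert `s` or `t`, so they can be arbitrary networks. Stacking several coupling layers with alternating halves lets every dimension be transformed.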

Reference: Density Estimation using Real NVP

Zhongyu Chen