This repo demonstrates how to build a generic RAG pattern with PromptFlow and Azure AI Search hybrid search.

- To simplify the development of complex LLM app workflows

- To systematically evaluate and quantify LLM quality

- To simplify operationalizing the LLM app as a Docker container on managed endpoints

- Azure AI Search makes it possible to combine the best of keyword search (BM25), semantic search, and vector search for improved RAG performance (see benchmarking)
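In a hybrid query, Azure AI Search merges the ranked results of the keyword (BM25) and vector queries using Reciprocal Rank Fusion (RRF). The plain-Python sketch below only illustrates that fusion idea; it is not the service's implementation, the document IDs are made up, and the constant `k=60` is assumed here as the value commonly used for RRF. Semantic ranking, which re-ranks the fused results afterwards, is not modeled.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists of document IDs into a single ranking.

    A document scores sum(1 / (k + rank)) over every list it appears in,
    where rank is 1-based. A higher fused score means a better position.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending fused score; ties are broken by doc ID for determinism.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical results from a BM25 keyword query and a vector query.
keyword_hits = ["doc-3", "doc-1", "doc-7"]
vector_hits = ["doc-1", "doc-5", "doc-3"]

for doc_id, score in reciprocal_rank_fusion([keyword_hits, vector_hits]):
    print(f"{doc_id}: {score:.5f}")
```

Here `doc-1` ranks first because it sits near the top of both lists, even though the keyword query alone ranked `doc-3` higher; documents found by only one query (`doc-5`, `doc-7`) fall to the bottom.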


- Open the `.env.template` file and configure the following Azure service connection details:

  | Key | Value |
  | --- | --- |
  | `AZURE_SEARCH_SERVICE_NAME` | Azure AI Search service name (e.g. `jixjia-search-dev`) |
  | `SEARCH_ENDPOINT` | Azure AI Search service endpoint URL (e.g. `https://YOUR_SEARCH_SERVICE.search.windows.net`) |
  | `SEARCH_API_KEY` | Azure AI Search management key |
  | `AOAI_KEY` | Azure OpenAI service API key |
  | `AOAI_ENDPOINT` | Azure OpenAI service endpoint URL (e.g. `https://YOUR_AOAI.openai.azure.com/`) |

- Rename `.env.template` to `.env`.

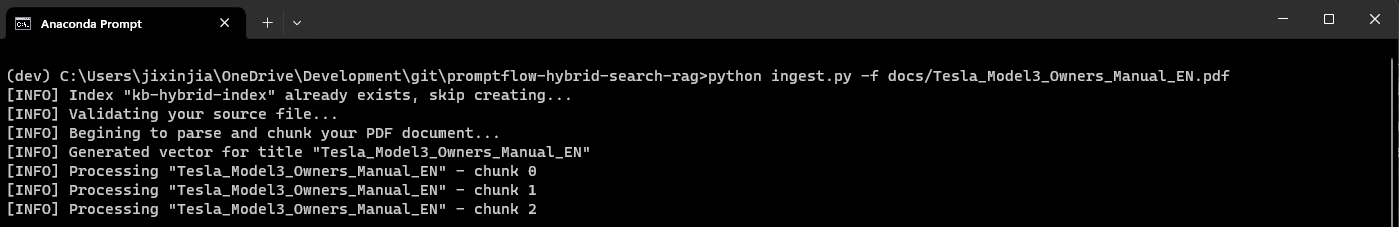
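The repo's own scripts may load `.env` differently (for example with the `python-dotenv` package). As a stdlib-only sketch, the hypothetical helpers `parse_env` and `load_config` below read the renamed `.env` file, let real environment variables override it, and fail early when one of the five settings is missing.

```python
import os
from pathlib import Path

# Keys from the .env.template configuration step.
REQUIRED_KEYS = (
    "AZURE_SEARCH_SERVICE_NAME",
    "SEARCH_ENDPOINT",
    "SEARCH_API_KEY",
    "AOAI_KEY",
    "AOAI_ENDPOINT",
)


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments and stripping optional quotes."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def load_config(path: str = ".env") -> dict[str, str]:
    """Read the .env file (hypothetical helper); environment variables take precedence over it."""
    values = parse_env(Path(path).read_text(encoding="utf-8"))
    config = {key: os.environ.get(key, values.get(key, "")) for key in REQUIRED_KEYS}
    missing = [key for key, value in config.items() if not value]
    if missing:
        raise ValueError(f"Missing settings in {path}: {', '.join(missing)}")
    return config
```

Failing at startup with the list of missing keys is usually easier to debug than an authentication error surfacing later from the first Search or OpenAI call.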

- Run `ingest.py` to add your PDF source to the Azure AI Search index. The tool parses, chunks, and batch-indexes the PDF document for you. For example: `python ingest.py -f PATH_TO_PDF_FILE`

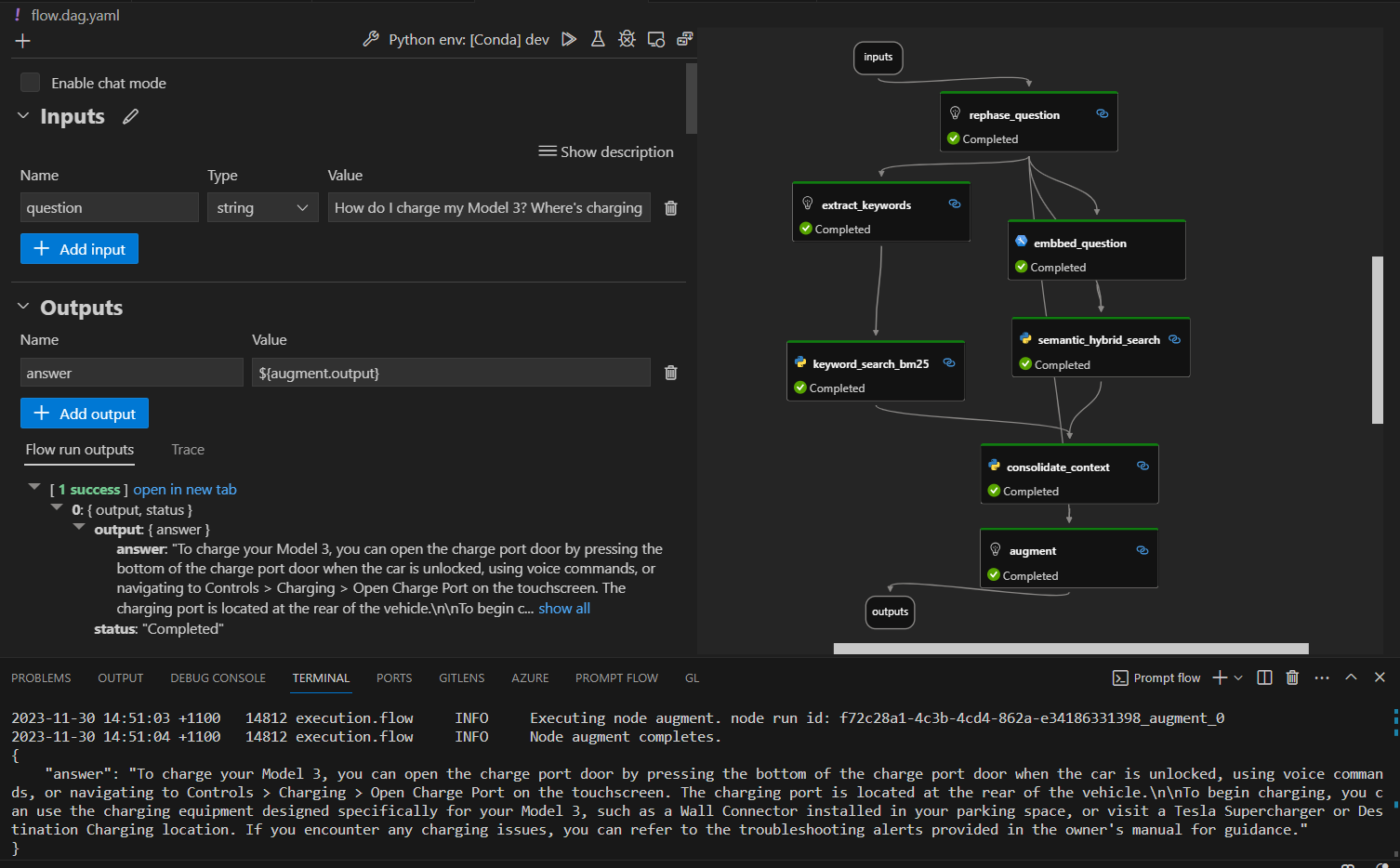
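The exact chunking strategy inside `ingest.py` is not described here. As an illustration only, the sketch below shows one common approach: split already-extracted text into fixed-size, overlapping character chunks and group the resulting documents into batches for upload. The chunk size, overlap, batch size, and the `id`/`content` field names are assumptions, and PDF text extraction is not shown. Batching matters because Azure AI Search accepts at most 1,000 documents per indexing request.

```python
from typing import Iterator


def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 200) -> list[str]:
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share `overlap` characters, which reduces the chance
    that a sentence is cut off from its surrounding context.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # last chunk reached the end of the text
            break
    return chunks


def batched(items: list[dict], batch_size: int = 1000) -> Iterator[list[dict]]:
    """Yield successive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


# Hypothetical: text already extracted from the PDF (3,200 characters).
text = "Azure AI Search " * 200
documents = [
    {"id": f"chunk-{i}", "content": chunk}
    for i, chunk in enumerate(chunk_text(text))
]
for batch in batched(documents, batch_size=2):
    # Each batch would be sent to the index, e.g. with SearchClient.upload_documents(batch).
    print(len(batch), [doc["id"] for doc in batch])
```

A small `batch_size` is used in the usage lines only to make the grouping visible; in practice it would stay at or below the 1,000-document limit.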

- Open `flow.dag.yaml` to edit the RAG LLM app workflow where necessary.

I recommend installing the PromptFlow VS Code extension for the best local development experience.

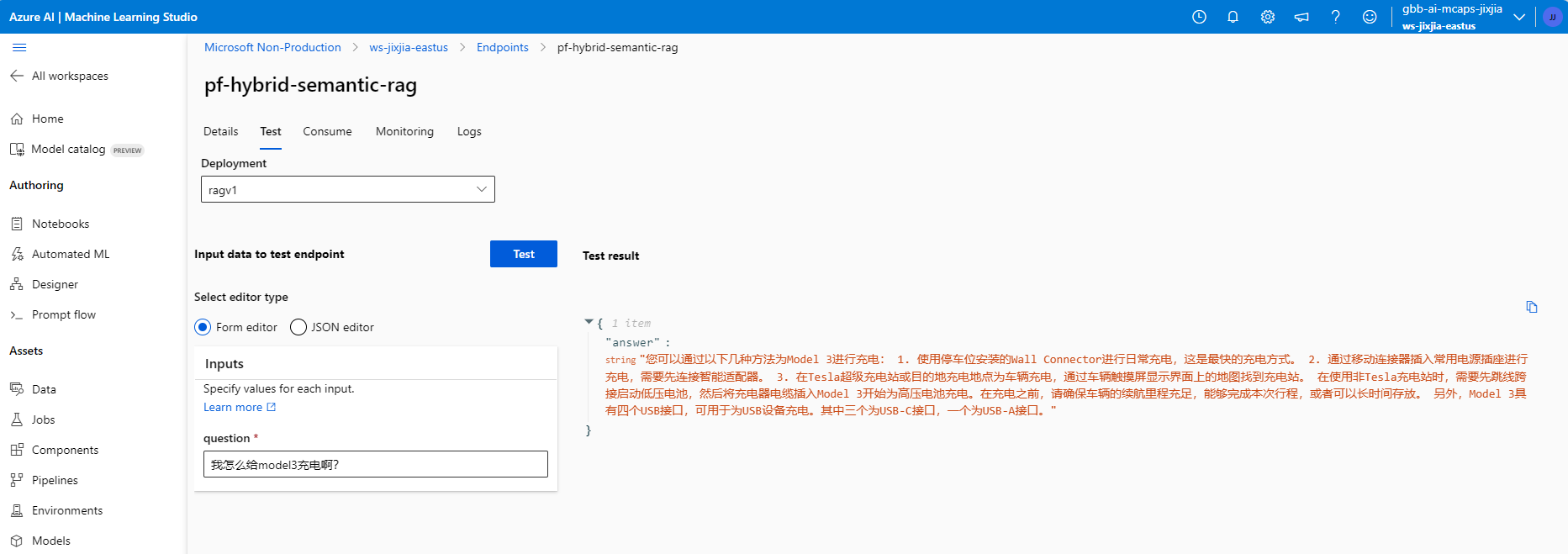
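The nodes defined in `flow.dag.yaml` are not reproduced here. As a hypothetical example of the kind of Python step a RAG flow typically runs between retrieval and the LLM call, the sketch below builds a grounded prompt from retrieved chunks. In PromptFlow such a function would normally be exposed as a Python tool node; the function name, the chunk fields, and the prompt wording are all assumptions.

```python
def build_grounded_prompt(question: str, chunks: list[dict], max_chunks: int = 3) -> str:
    """Assemble an LLM prompt that restricts the answer to the retrieved sources.

    Each chunk is assumed to be a dict with 'id' and 'content' keys, already
    ordered by the search service's relevance ranking.
    """
    sources = "\n\n".join(
        f"[{chunk['id']}]\n{chunk['content'].strip()}" for chunk in chunks[:max_chunks]
    )
    return (
        "Answer the question using only the sources below. "
        "Cite the source id in square brackets. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical search results for a question.
retrieved = [
    {"id": "chunk-4", "content": "Hybrid search runs keyword and vector queries in parallel."},
    {"id": "chunk-9", "content": "Results are merged with Reciprocal Rank Fusion."},
]
print(build_grounded_prompt("How does hybrid search merge results?", retrieved))
```

Capping the number of chunks keeps the prompt within the model's context window; tuning `max_chunks` against evaluation results is one of the workflow edits this step is meant for.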

- Deploy the LLM app to an Azure ML managed online endpoint