CleanRL aims to be the most user-friendly Reinforcement Learning library. The implementation is clean, simple, and self-contained; you don't have to look through dozens of files to understand what is going on. Just read the code, print out a few things, and you can easily customize it.

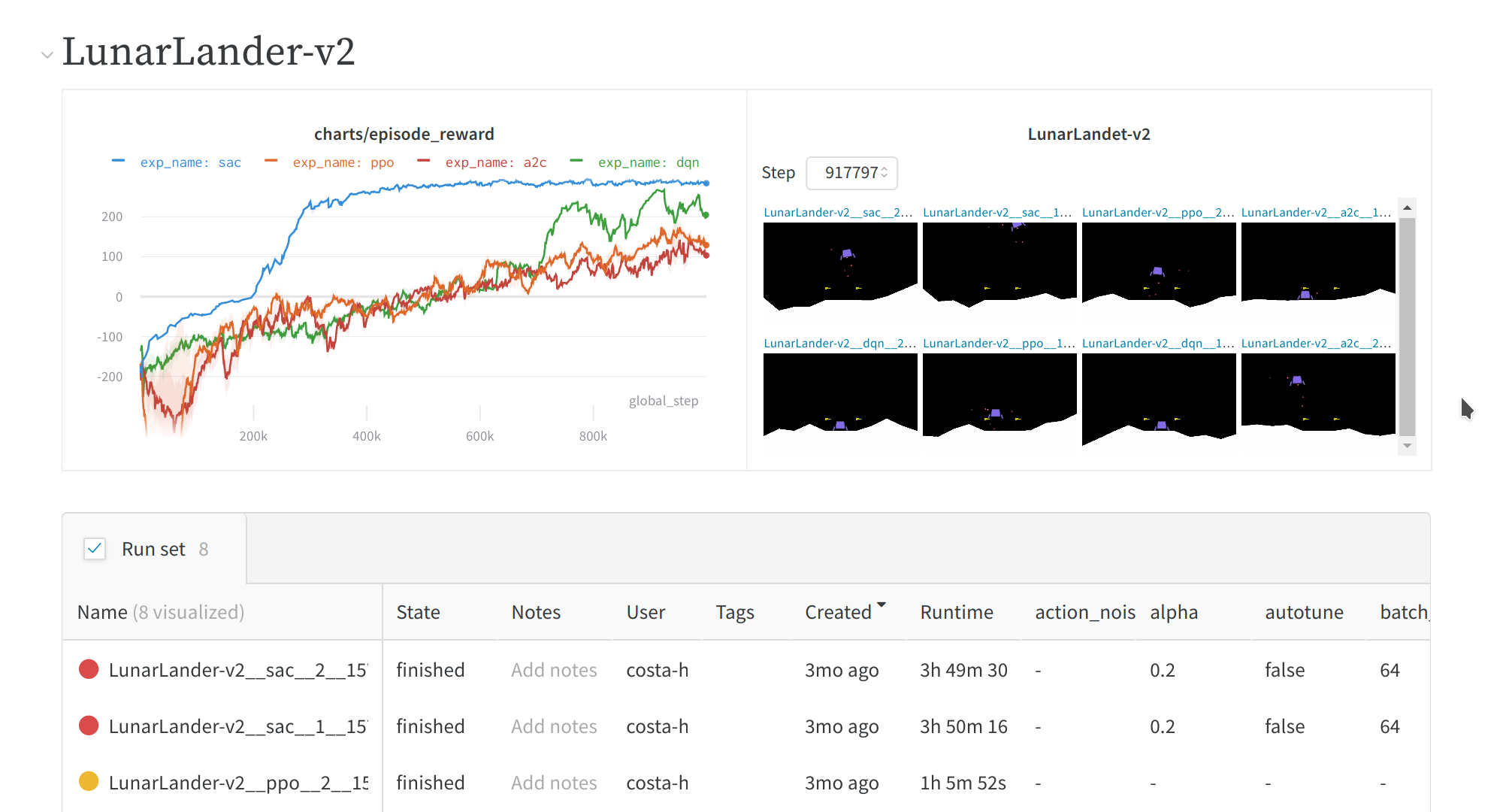

At the same time, CleanRL tries to supply many research-friendly features such as cloud experiment management, support for continuous and discrete observation and action spaces, and video recording of gameplay. These features are very helpful for research, especially video recording, which lets you visually inspect the agents' behavior at various stages of training.

Good luck, have fun 🚀

The main features of this repo are:

- Our implementation is self-contained in a single file. Everything about an algorithm is right there! Easy to understand and do research with.

- Easy logging of training processes using TensorBoard, and integration with wandb.com to log experiments in the cloud. Check out https://cleanrl.costa.sh.

- Hackable, and debuggable directly in Python's interactive shell (especially if you use the Spyder editor from Anaconda :) ).

- Simple use of command line arguments for hyper-parameter tuning; no need for arcane configuration files.
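To illustrate the command-line style, here is a minimal sketch of how a single-file script might expose its hyper-parameters with Python's standard `argparse` module. The flag names mirror those used in the commands in this README (`--seed`, `--gym-id`, `--total-timesteps`, `--gamma`, `--prod-mode`, `--wandb-project-name`); the defaults, the `store_true` handling of `--prod-mode`, and the `parse_args` helper are assumptions for illustration, not CleanRL's actual code.

```python
import argparse


def parse_args(argv=None):
    """Parse hyper-parameters from the command line (illustrative sketch)."""
    parser = argparse.ArgumentParser(description="Single-file RL experiment (sketch)")
    # Flag names follow the README's example commands; the defaults are assumptions.
    parser.add_argument("--seed", type=int, default=1, help="random seed")
    parser.add_argument("--gym-id", type=str, default="CartPole-v0", help="Gym environment id")
    parser.add_argument("--total-timesteps", type=int, default=50000, help="total environment steps")
    parser.add_argument("--gamma", type=float, default=0.99, help="discount factor")
    # In this sketch, passing --prod-mode with no value switches on cloud logging.
    parser.add_argument("--prod-mode", action="store_true", help="log the run to wandb")
    parser.add_argument("--wandb-project-name", type=str, default="cleanrltest", help="wandb project name")
    # argparse maps dashes to underscores, e.g. --gym-id becomes args.gym_id.
    return parser.parse_args(argv)
```

For example, `parse_args(["--gym-id", "Taxi-v3", "--gamma", "0.8"])` returns a namespace with `gym_id == "Taxi-v3"` and `gamma == 0.8`, while every other hyper-parameter keeps its default, so a sweep only needs to change the flags it cares about.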

Our implementations are benchmarked to ensure quality. We log all of our benchmarked experiments using wandb so that you can check the hyper-parameters, videos of the agents playing the game, and the exact commands to reproduce them. See https://cleanrl.costa.sh.

The current wandb dashboard does not allow us to show the agents' performance in all the games in the same panel, so you have to click each panel in https://cleanrl.costa.sh to check the benchmarked performance, which can be inconvenient. So we additionally post the benchmarked performance for each game using seaborn as follows (the results are created with `benchmark/plot_benchmark.py`).

| Benchmarked Learning Curves | Atari |
|---|---|
| Metrics, logs, and recorded videos are at | cleanrl.benchmark/reports/Atari |

| Benchmarked Learning Curves | Mujoco |
|---|---|
| Metrics, logs, and recorded videos are at | cleanrl.benchmark/reports/Mujoco |

| Benchmarked Learning Curves | PyBullet |
|---|---|
| Metrics, logs, and recorded videos are at | cleanrl.benchmark/reports/PyBullet-and-Other-Continuous-Action-Tasks |

| Benchmarked Learning Curves | Classic Control |
|---|---|
| Metrics, logs, and recorded videos are at | cleanrl.benchmark/reports/Classic-Control |

| Benchmarked Learning Curves | Others (Experimental Domains) |
|---|---|
| Metrics, logs, and recorded videos are at | cleanrl.benchmark/reports/Others |
| (learning curve) | This is a rather challenging continuous action task that usually requires 100M+ timesteps to solve. |
| (learning curve) | This is a self-play environment from https://github.com/hardmaru/slimevolleygym, so its episode reward should not steadily increase. Check out the videos at cleanrl.benchmark/reports/Others for the agent's actual performance. |
| (learning curve) | This is a MicroRTS environment where the goal is to build as many combat units as possible; see https://github.com/vwxyzjn/gym-microrts. These runs are created by https://github.com/vwxyzjn/gym-microrts/blob/master/experiments/ppo.py, which additionally implements invalid action masking and handling of the multi-discrete action space for PPO. |
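Invalid action masking, mentioned above for the MicroRTS runs, can be sketched as follows: before sampling, the logits of actions that are illegal in the current state are replaced by a very negative number, so the softmax assigns them (numerically) zero probability. This is a minimal, dependency-free sketch under that assumption; the names `masked_softmax` and `sample_action` and the fill value `-1e8` are hypothetical, and a real PPO implementation would typically operate on batched tensors rather than Python lists.

```python
import math
import random


def masked_softmax(logits, mask, fill=-1e8):
    """Softmax over logits, where mask[i] is False if action i is invalid."""
    # Replace invalid logits instead of deleting them, so action indices
    # stay aligned with the environment's action space.
    masked = [l if ok else fill for l, ok in zip(logits, mask)]
    m = max(masked)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in masked]
    total = sum(exps)
    return [e / total for e in exps]


def sample_action(logits, mask, rng=random):
    """Sample an action index from the masked categorical distribution."""
    probs = masked_softmax(logits, mask)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]
```

Because masked actions keep their positions, the sampled index can be passed straight back to the environment. For a multi-discrete action space, one plausible approach is to apply the same masking separately to the logits of each action component.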

To run experiments locally, give the following a try:

```bash
$ git clone https://github.com/vwxyzjn/cleanrl.git && cd cleanrl
$ pip install -e .
$ cd cleanrl
$ python ppo.py \
    --seed 1 \
    --gym-id CartPole-v0 \
    --total-timesteps 50000
# open another terminal and enter `cd cleanrl/cleanrl`
$ tensorboard --logdir runs
```

To use the wandb integration, sign up for an account at https://wandb.com and copy your API key. Then run:

```bash
$ cd cleanrl
$ pip install wandb
$ wandb login ${WANDB_API_KEY}
$ python ppo.py \
    --seed 1 \
    --gym-id CartPole-v0 \
    --total-timesteps 50000 \
    --prod-mode \
    --wandb-project-name cleanrltest
# Then go to https://app.wandb.ai/${WANDB_USERNAME}/cleanrltest/
```

Check out the demo site at https://app.wandb.ai/costa-huang/cleanrltest

- Advantage Actor Critic (A2C)
    - Since A2C is a special case of PPO when setting `update-epochs=1`, where the clipped objective becomes essentially A2C's objective, we omit a dedicated A2C implementation. We might add it back in the future for educational purposes. However, we kept the old A2C implementations in the `experiments` folder:
        - experiments/a2c.py
            - (Not recommended) For discrete action space.
        - experiments/a2c_continuous_action.py
            - (Not recommended) For continuous action space.

- Deep Q-Learning (DQN)
    - dqn.py
        - For discrete action space.
    - dqn_atari.py
        - For playing Atari games. It uses convolutional layers and common Atari pre-processing techniques.
    - dqn_atari_visual.py
        - Adds Q-value visualization to `dqn_atari.py`.

- Categorical DQN (C51)
    - c51.py
        - For discrete action space.
    - c51_atari.py
        - For playing Atari games. It uses convolutional layers and common Atari pre-processing techniques.
    - c51_atari_visual.py
        - Adds return and Q-value visualization to `c51_atari.py`.

- Proximal Policy Optimization (PPO)
    - All of the PPO implementations below are augmented with some code-level optimizations. See https://costa.sh/blog-the-32-implementation-details-of-ppo.html for more details.
    - ppo.py
        - For discrete action space.
    - ppo_continuous_action.py
        - For continuous action space. Also implements MuJoCo-specific code-level optimizations.
    - ppo_atari.py
        - For playing Atari games. It uses convolutional layers and common Atari pre-processing techniques.
    - ppo_atari_visual.py
        - Adds action probability visualization to `ppo_atari.py`.
    - experiments/ppo_self_play.py
        - Implements a self-play agent for https://github.com/hardmaru/slimevolleygym
    - experiments/ppo_microrts.py
        - Implements invalid action masking and handling of the `MultiDiscrete` action space for https://github.com/vwxyzjn/gym-microrts
    - experiments/ppo_simple.py
        - (Not recommended) Naive implementation for discrete action space. I keep it here for educational purposes because I feel this is what most people would implement if they had just read the paper, usually unaware of the many implementation details that come with a well-tuned PPO implementation.
    - experiments/ppo_simple_continuous_action.py
        - (Not recommended) Naive implementation for continuous action space.

- Soft Actor Critic (SAC)
    - sac_continuous_action.py
        - For continuous action space.

- Deep Deterministic Policy Gradient (DDPG)
    - ddpg_continuous_action.py
        - For continuous action space.

- Twin Delayed Deep Deterministic Policy Gradient (TD3)
    - td3_continuous_action.py
        - For continuous action space.
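The claim that A2C is a special case of PPO with `update-epochs=1` can be checked numerically for a single sample. On the first gradient step the current policy still equals the one that collected the data, so the probability ratio is 1, which lies inside the clipping range. The clipped surrogate then has the same derivative with respect to the log-probability as the A2C objective `log_prob * advantage`, and hence, by the chain rule, the same gradient with respect to the policy parameters. This is a minimal sketch: `clip_coef=0.2` and the sample numbers are arbitrary assumptions. The equivalence is exact only while the ratio is still 1, so later minibatches within the same epoch see a slightly updated policy.

```python
import math


def ppo_objective(logp_new, logp_old, advantage, clip_coef=0.2):
    """PPO clipped surrogate objective for a single sample (to be maximized)."""
    ratio = math.exp(logp_new - logp_old)
    clipped = min(max(ratio, 1.0 - clip_coef), 1.0 + clip_coef)
    return min(ratio * advantage, clipped * advantage)


def a2c_objective(logp_new, advantage):
    """Vanilla policy-gradient (A2C) objective for a single sample."""
    return logp_new * advantage


def derivative(f, x, h=1e-6):
    """Central finite-difference estimate of df/dx."""
    return (f(x + h) - f(x - h)) / (2 * h)


logp_old = math.log(0.3)  # log-probability of the taken action under the data-collecting policy
advantage = 1.7           # an arbitrary advantage estimate

# First gradient step: the current policy equals the old one, so logp_new == logp_old.
g_ppo = derivative(lambda lp: ppo_objective(lp, logp_old, advantage), logp_old)
g_a2c = derivative(lambda lp: a2c_objective(lp, advantage), logp_old)
print(g_ppo, g_a2c)  # both are approximately equal to the advantage
```

Once the policy moves far enough that the ratio leaves the clipping range (for a positive advantage, a ratio above `1 + clip_coef`), the PPO derivative drops to zero while the A2C one does not. This clipping is what only distinguishes the two objectives when the same batch is reused for several updates.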

We have a Slack Community for support. Feel free to ask questions there. Posting in GitHub Issues and PRs is also welcome.

In addition, we also have a monthly development cycle to implement new RL algorithms. Feel free to participate or ask questions there, too. You can sign up for our mailing list at our Google Groups to receive event RSVPs, which contain the Hangouts video call address, every week. Our past video recordings are available on YouTube.

CleanRL focuses on the early and mid stages of RL research, where one would try to understand ideas and do hacky experimentation with the algorithms. If your goal does not include messing with different parts of RL algorithms, perhaps libraries like stable-baselines, ray, or catalyst would be better suited to your use cases, since they are built to be highly optimized, concurrent, and fast.

CleanRL, however, is built to provide a simplified and streamlined approach to conducting RL experiments. Let's give an example. Say you are interested in implementing the GAE (Generalized Advantage Estimation) technique to see if it improves A2C's performance on CartPole-v0. The workflow roughly looks like this:

1. Make a copy of `cleanrl/cleanrl/a2c.py` to `cleanrl/cleanrl/experiments/a2c_gae.py`.
2. Implement the GAE technique. This should be relatively simple because you don't have to navigate into dozens of files to find some function named `compute_advantages()`.
3. Run `python cleanrl/cleanrl/experiments/a2c_gae.py` in the terminal or in an interactive shell like Spyder. The latter gives you the ability to stop the program at any time and execute arbitrary code, so you can program on the fly.
4. Open another terminal, type `tensorboard --logdir cleanrl/cleanrl/experiments/runs`, and check out `episode_rewards`, `losses/policy_loss`, etc. If something does not look right, go back to step 2 and continue.
5. If the technique works, you will want to see whether it also works with other games such as `Taxi-v3`, or with different parameters. Execute:
   ```bash
   $ wandb login ${WANDB_API_KEY}
   $ for seed in {1..2}
       do
           (sleep 0.3 && nohup python a2c_gae.py \
           --seed $seed \
           --gym-id CartPole-v0 \
           --total-timesteps 30000 \
           --wandb-project-name myRLproject \
           --prod-mode
           ) >& /dev/null &
       done
   # a different env (Taxi-v3) and a lower discount factor (--gamma 0.8)
   $ for seed in {1..2}
       do
           (sleep 0.3 && nohup python a2c_gae.py \
           --seed $seed \
           --gym-id Taxi-v3 \
           --total-timesteps 30000 \
           --gamma 0.8 \
           --wandb-project-name myRLproject \
           --prod-mode
           ) >& /dev/null &
       done
   ```
   Then you can monitor the performance and keep track of all the parameters used in your experiments.
6. Continue this process.

The pipeline described above should give you an idea of how to use CleanRL for your research.
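For the GAE experiment in the workflow above, the core computation is a backward recursion over a rollout: `delta_t = r_t + gamma * V(s_{t+1}) * (1 - done_t) - V(s_t)` and `A_t = delta_t + gamma * lambda * (1 - done_t) * A_{t+1}`. This is a minimal, dependency-free sketch. The function name `compute_gae`, its argument layout (per-step lists plus a bootstrap value for the state after the last step), and the defaults `gamma=0.99`, `gae_lambda=0.95` are assumptions for illustration, not CleanRL's code.

```python
def compute_gae(rewards, values, dones, next_value, gamma=0.99, gae_lambda=0.95):
    """Generalized Advantage Estimation over one rollout.

    rewards[t], values[t], dones[t] describe step t; dones[t] is 1.0 if the
    episode ended at step t. next_value is V of the state after the last step.
    Returns (advantages, returns), where returns = advantages + values.
    """
    n = len(rewards)
    advantages = [0.0] * n
    last_gae = 0.0
    for t in reversed(range(n)):
        next_v = next_value if t == n - 1 else values[t + 1]
        not_done = 1.0 - dones[t]
        # One-step TD error; the bootstrap is cut off when the episode ended.
        delta = rewards[t] + gamma * next_v * not_done - values[t]
        # Exponentially weighted sum of future TD errors, reset at episode ends.
        last_gae = delta + gamma * gae_lambda * not_done * last_gae
        advantages[t] = last_gae
    returns = [a + v for a, v in zip(advantages, values)]
    return advantages, returns
```

Setting `gae_lambda=1.0` gives discounted returns minus the value baseline, and `gae_lambda=0.0` gives plain one-step TD errors. In the `a2c_gae.py` experiment, these advantages would replace whatever advantage estimate the copied script used.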

- Add automatic benchmark
- Support continuous action spaces
    - Preliminary support with a2c_continuous_action.py
- Support using GPU
- Support using multiprocessing

I have been heavily inspired by many repos and blog posts. Below is an incomplete list of them.

- http://inoryy.com/post/tensorflow2-deep-reinforcement-learning/

- https://github.com/seungeunrho/minimalRL

- https://github.com/Shmuma/Deep-Reinforcement-Learning-Hands-On

- https://github.com/hill-a/stable-baselines

The following helped me a lot with handling continuous action spaces: