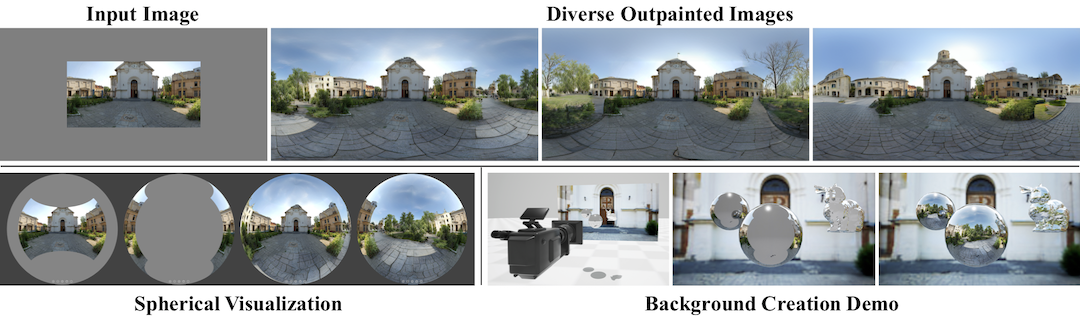

Diverse Plausible 360-Degree Image Outpainting for Efficient 3DCG Background Creation (CVPR 2022)

Naofumi Akimoto, Yuhi Matsuo, Yoshimitsu Aoki

arXiv | BibTeX | Project Page | Supp Video

A suitable conda environment named `omnidreamer` can be created and activated with:

```bash
conda env create -f environment.yaml
conda activate omnidreamer
```

To obtain the trained models, please send us an email and we will send you the download URL. You may distribute the trained models to others, but please do not reveal the URL.

- Put the trained weights under `logs/`.
- In each `{*}-project.yaml`, comment out the `ckpt_path` entries that point to the VQGAN models.
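If you prefer to script this step, here is a minimal Python sketch (not part of the repository) that comments out every uncommented `ckpt_path:` line in `logs/*/configs/*-project.yaml`. It assumes the downloaded runs follow the `logs/<run>/configs/<timestamp>-project.yaml` layout used in the sampling commands, and that every `ckpt_path` entry in those files refers to a VQGAN model. Check your files first, since it edits them in place.

```python
from __future__ import annotations

import re
from pathlib import Path

# Matches an uncommented "ckpt_path: ..." line and keeps its indentation.
CKPT_LINE = re.compile(r"^(\s*)(ckpt_path:.*)$")


def comment_out_ckpt_paths(text: str) -> str:
    """Return `text` with every uncommented `ckpt_path:` line turned into a YAML comment."""
    out = []
    for line in text.splitlines(keepends=True):
        body = line.rstrip("\r\n")
        ending = line[len(body):]  # preserve the original line ending
        match = CKPT_LINE.match(body)
        if match:
            body = f"{match.group(1)}# {match.group(2)}"
        out.append(body + ending)
    return "".join(out)


def process_logs(logs_dir: str = "logs") -> list[Path]:
    """Rewrite <logs_dir>/*/configs/*-project.yaml in place; return the files that changed."""
    changed = []
    for cfg in sorted(Path(logs_dir).glob("*/configs/*-project.yaml")):
        old = cfg.read_text()
        new = comment_out_ckpt_paths(old)
        if new != old:
            cfg.write_text(new)
            changed.append(cfg)
    return changed


if __name__ == "__main__":
    for path in process_logs():
        print(f"commented out ckpt_path in {path}")
```

Lines that are already commented are left untouched, so running the script twice is harmless.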

```bash
CUDA_VISIBLE_DEVICES=0 python sampling.py \
    --config_path logs/2021-07-27T05-57-41_sun360_basic_transformer/configs/2021-07-27T05-57-41-project.yaml \
    --ckpt_path logs/2021-07-27T05-57-41_sun360_basic_transformer/checkpoints/last.ckpt \
    --config_path_2 logs/2021-07-27T10-49-57_sun360_refine_net/configs/2021-07-27T10-49-57-project.yaml \
    --ckpt_path_2 logs/2021-07-27T10-49-57_sun360_refine_net/checkpoints/last.ckpt \
    --outdir outputs/test
```

```bash
CUDA_VISIBLE_DEVICES=0 python sampling.py \
    --config_path logs/2021-07-27T05-57-41_sun360_basic_transformer/configs/2021-07-27T05-57-41-project.yaml \
    --ckpt_path logs/2021-07-27T05-57-41_sun360_basic_transformer/checkpoints/last.ckpt \
    --config_path_2 logs/2021-07-27T10-49-57_sun360_refine_net/configs/2021-07-27T10-49-57-project.yaml \
    --ckpt_path_2 logs/2021-07-27T10-49-57_sun360_refine_net/checkpoints/last.ckpt \
    --mask_path assets/90binarymask.png \
    --outdir outputs/test
```

```bash
CUDA_VISIBLE_DEVICES=0 python sampling.py \
    --config_path logs/2021-08-12T03-27-04_sun360_basic_transformer/configs/2021-08-12T03-27-04-project.yaml \
    --ckpt_path logs/2021-08-12T03-27-04_sun360_basic_transformer/checkpoints/last.ckpt \
    --config_path_2 logs/2021-08-12T03-42-53_sun360_refine_net/configs/2021-08-12T03-42-53-project.yaml \
    --ckpt_path_2 logs/2021-08-12T03-42-53_sun360_refine_net/checkpoints/last.ckpt \
    --mask_path assets/90binarymask.png \
    --outdir outputs/test
```
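All three sampling commands follow the same layout: each run directory under `logs/` holds `configs/<timestamp>-project.yaml` and `checkpoints/last.ckpt`. The hypothetical helper below (not part of the repository; `run_paths` and `sampling_args` are illustrative names) rebuilds the `sampling.py` argument list from the two run directory names, assuming your runs use that same layout.

```python
from __future__ import annotations

import shlex
from pathlib import PurePosixPath


def run_paths(run_dir: str) -> tuple[str, str]:
    """Return (config_path, ckpt_path) for a run directory such as
    'logs/2021-07-27T05-57-41_sun360_basic_transformer'."""
    run = PurePosixPath(run_dir)
    timestamp = run.name.split("_", 1)[0]  # e.g. '2021-07-27T05-57-41'
    config = run / "configs" / f"{timestamp}-project.yaml"
    ckpt = run / "checkpoints" / "last.ckpt"
    return str(config), str(ckpt)


def sampling_args(transformer_run: str, refine_run: str,
                  outdir: str = "outputs/test", mask_path: str | None = None) -> list[str]:
    """Build the sampling.py argument list in the same order as the commands above."""
    cfg1, ckpt1 = run_paths(transformer_run)
    cfg2, ckpt2 = run_paths(refine_run)
    args = ["python", "sampling.py",
            "--config_path", cfg1, "--ckpt_path", ckpt1,
            "--config_path_2", cfg2, "--ckpt_path_2", ckpt2]
    if mask_path is not None:
        args += ["--mask_path", mask_path]
    args += ["--outdir", outdir]
    return args


if __name__ == "__main__":
    args = sampling_args(
        "logs/2021-07-27T05-57-41_sun360_basic_transformer",
        "logs/2021-07-27T10-49-57_sun360_refine_net",
        mask_path="assets/90binarymask.png",
    )
    print("CUDA_VISIBLE_DEVICES=0 " + shlex.join(args))
```

Run as a script, this prints the second command above on a single line.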

We train the four networks separately: VQGAN_1, VQGAN_2, the Transformer, and AdjustmentNet (called RefineNet in the configs and logs).

Order of training

- For the training of the Transformer, trained VQGAN_1 and VQGAN_2 are required.

- For the training of AdjustmentNet (RefineNet), trained VQGAN_2 is required.

- Therefore, VQGAN_1 and VQGAN_2 can be trained in parallel, and the Transformer and AdjustmentNet (RefineNet) can also be trained in parallel.
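The training order is a small dependency graph. As an illustration only (this is not part of the repository), the Python sketch below groups the four networks into stages in which every member already has its prerequisites trained; with the dependencies listed above, it yields the two parallel stages just described.

```python
from __future__ import annotations

# Dependencies as stated above: each network lists the trained models it needs.
DEPENDENCIES = {
    "VQGAN_1": [],
    "VQGAN_2": [],
    "Transformer": ["VQGAN_1", "VQGAN_2"],
    "AdjustmentNet": ["VQGAN_2"],
}


def training_stages(deps: dict[str, list[str]]) -> list[list[str]]:
    """Group networks into stages; networks within one stage can be trained in parallel."""
    done: set[str] = set()
    remaining = dict(deps)
    stages = []
    while remaining:
        ready = sorted(n for n, reqs in remaining.items() if all(r in done for r in reqs))
        if not ready:
            raise ValueError(f"cyclic or missing dependency among {sorted(remaining)}")
        stages.append(ready)
        done.update(ready)
        for name in ready:
            del remaining[name]
    return stages


if __name__ == "__main__":
    for i, stage in enumerate(training_stages(DEPENDENCIES), start=1):
        print(f"stage {i}: {', '.join(stage)}")
```

Grouping by stages is a simplification: AdjustmentNet only waits for VQGAN_2, so it can start before VQGAN_1 has finished.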

Sample Commands

- VQGAN_1

See `configs/sun360_comp_vqgan.yaml` for details. For VQGAN_1, set `image_key: concat_input`, `concat_input: True`, `in_channels: 7`, and `out_ch: 7`. We trained each network for 30 epochs in total, except the Transformer, which was trained for 15 epochs.

```bash
CUDA_DEVICE_ORDER=PCI_BUS_ID CUDA_VISIBLE_DEVICES=0,1 python main.py --base configs/sun360_comp_vqgan.yaml -t True --gpus 0,1
```

- VQGAN_2

For VQGAN_2, use the same configuration file, `configs/sun360_comp_vqgan.yaml`, but set `image_key: image`, `concat_input: False`, `in_channels: 3`, and `out_ch: 3`.

```bash
CUDA_DEVICE_ORDER=PCI_BUS_ID CUDA_VISIBLE_DEVICES=0,1 python main.py --base configs/sun360_comp_vqgan.yaml -t True --gpus 0,1
```
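The two VQGAN runs share one configuration file and differ only in four values. The sketch below records those values side by side and runs two consistency checks that hold for both settings; the checks are an assumption about the intended pattern, not something the repository enforces, and where each key sits inside the YAML tree is not shown here.

```python
from __future__ import annotations

# Values copied from the VQGAN_1 and VQGAN_2 instructions above.
VQGAN_SETTINGS = {
    "VQGAN_1": {"image_key": "concat_input", "concat_input": True, "in_channels": 7, "out_ch": 7},
    "VQGAN_2": {"image_key": "image", "concat_input": False, "in_channels": 3, "out_ch": 3},
}


def check_settings(name: str, s: dict) -> None:
    """Raise ValueError if a settings dict breaks the pattern shared by both runs."""
    # An autoencoder reconstructs its input, so input and output channels match.
    if s["in_channels"] != s["out_ch"]:
        raise ValueError(f"{name}: in_channels != out_ch")
    # concat_input: True is paired with image_key: concat_input, and vice versa.
    if s["concat_input"] != (s["image_key"] == "concat_input"):
        raise ValueError(f"{name}: concat_input and image_key disagree")


def as_yaml_lines(s: dict) -> list[str]:
    """Render the settings in the same 'key: value' form used in the text."""
    return [f"{k}: {v}" for k, v in s.items()]


if __name__ == "__main__":
    for name, s in VQGAN_SETTINGS.items():
        check_settings(name, s)
        print(name, "->", ", ".join(as_yaml_lines(s)))
```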

- Transformer

Write the paths of the trained VQGAN_1 and VQGAN_2 in the YAML configuration file `configs/sun360_basic_transformer.yaml`. Even if the VQGANs were trained on 256x256 images, the Transformer can be trained on 256x512 images. In that case, set `block_size: 1024`.

```bash
CUDA_DEVICE_ORDER=PCI_BUS_ID CUDA_VISIBLE_DEVICES=0,1 python main.py --base configs/sun360_basic_transformer.yaml -t True --gpus 0,1
```
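The value 1024 is consistent with a simple token count, sketched below under two assumptions this README does not state: both VQGANs downsample by a factor of 16 (the usual VQGAN setting), and the Transformer attends over the VQGAN_1 (condition) tokens followed by the VQGAN_2 (target) tokens at the same image size.

```python
def vqgan_tokens(height: int, width: int, factor: int = 16) -> int:
    """Codebook indices one VQGAN produces for an image, assuming downsampling `factor`."""
    if height % factor or width % factor:
        raise ValueError("image size must be divisible by the downsampling factor")
    return (height // factor) * (width // factor)


def transformer_tokens(height: int, width: int, factor: int = 16) -> int:
    """Condition tokens (VQGAN_1) plus target tokens (VQGAN_2), both at the same size."""
    return 2 * vqgan_tokens(height, width, factor)


if __name__ == "__main__":
    for h, w in [(256, 256), (256, 512)]:
        print(f"{h}x{w}: {vqgan_tokens(h, w)} tokens per VQGAN, {transformer_tokens(h, w)} in total")
```

Under these assumptions, a 256x512 panorama gives 16 x 32 = 512 tokens per VQGAN, or 1024 in total, while 256x256 gives 512 in total. If the model follows taming-transformers' `Net2NetTransformer`, the last target token is only predicted and never fed in, so the actual input is one token shorter and fits within `block_size: 1024`.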

- RefineNet(AdjustmentNet)

Write the path of the trained VQGAN_2 in the YAML configuration file `configs/sun360_refine_net.yaml`.

```bash
CUDA_DEVICE_ORDER=PCI_BUS_ID CUDA_VISIBLE_DEVICES=0,1 python main.py --base configs/sun360_refine_net.yaml -t True --gpus 0,1
```
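To find the checkpoint paths to write into these configs, note that VQGAN_1 and VQGAN_2 are both trained from `configs/sun360_comp_vqgan.yaml`, so their run directories differ only in the timestamp. The hypothetical helper below (not part of the repository) lists finished runs for one config, oldest first, assuming runs are saved as `logs/<timestamp>_<config name>/checkpoints/last.ckpt`, the naming seen in the sampling commands. You still need to match each timestamp to the VQGAN you trained in that run.

```python
from __future__ import annotations

from pathlib import Path


def list_runs(logs_dir: str, config_name: str) -> list[Path]:
    """Return last.ckpt paths of runs named '<timestamp>_<config_name>', oldest first.

    Timestamps like '2021-07-27T05-57-41' sort chronologically as plain strings.
    Runs without checkpoints/last.ckpt (e.g. unfinished ones) are skipped.
    """
    runs = sorted(p for p in Path(logs_dir).glob(f"*_{config_name}") if p.is_dir())
    ckpts = [run / "checkpoints" / "last.ckpt" for run in runs]
    return [ckpt for ckpt in ckpts if ckpt.exists()]


if __name__ == "__main__":
    for ckpt in list_runs("logs", "sun360_comp_vqgan"):
        print(ckpt)
```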

- Ubuntu 18.04
- Titan RTX or RTX 3090
- CUDA 11

This repo is built on top of VQGAN. See the license.

```bibtex
@inproceedings{akimoto2022diverse,
  author = {Akimoto, Naofumi and Matsuo, Yuhi and Aoki, Yoshimitsu},
  title = {Diverse Plausible 360-Degree Image Outpainting for Efficient 3DCG Background Creation},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2022},
}
```