This repo accompanies the research paper *How Far Are We from Intelligent Visual Deductive Reasoning?*, ICLR AGI Workshop 2024.

Vision-Language Models (VLMs) such as GPT-4V have made significant progress on a wide range of tasks, but visual deductive reasoning remains challenging for them. Using Raven's Progressive Matrices (RPMs), we identify blind spots in VLMs' multi-hop relational reasoning. Specifically, we make the following contributions:

-

Evaluation Framework:

- Systematically assessed various SOTA VLMs on three datasets: Mensa IQ test, IntelligenceTest, and RAVEN.

- Comprehensive performance evaluation reveals a gap between text-based and pure image-based reasoning capabilities in large foundation models.

-

Performance Bottleneck Analysis:

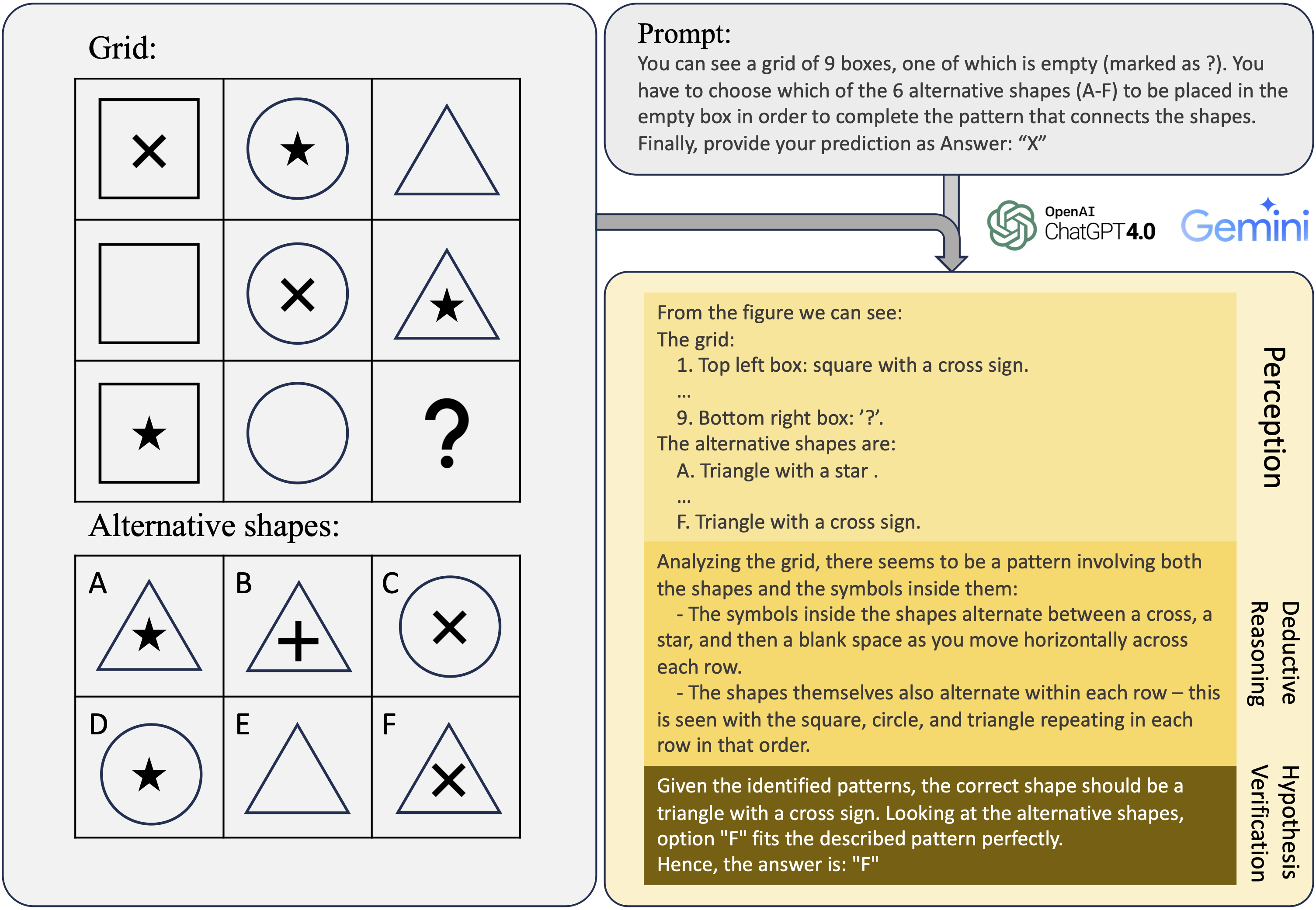

- Breakdown of VLM capability into perception, deductive reasoning, and hypothesis verification.

- Case study of GPT-4V highlights specific issues.

-

Issues/Findings in Current VLMs:

- Perception emerges as the primary limiting factor in current VLMs' performance.

- Complementary text description is needed for optimal deductive reasoning.

- Some effective LLM strategies (e.g., in-context learning) do not seamlessly transfer to VLMs.

- Overconfidence, sensitivity to prompt design, and ineffective utilization of in-context examples.

With this benchmark, you can:

- Evaluate your VLMs against popular VLMs on hundreds of RPM tasks from three datasets.
- Determine whether your VLMs significantly mitigate the compounding and confounding errors outlined in the paper.

| Model | Mensa Entropy | Mensa Accuracy | IntelligenceTest Entropy | IntelligenceTest Accuracy | RAVEN Entropy | RAVEN Accuracy |
|---|---|---|---|---|---|---|
| GPT-4V | | | | | | |
| Gemini Pro | | | | | | |
| QWen-VL-Max | | | | | | |
| LLaVA-1.5-13B | | | | | | |
| GPT-4V (0-shot) | | | | | | |
| GPT-4V (1-shot) | | | | | | |
| GPT-4V (Self-consistency) | | | | | | |
| Gemini Pro (0-shot) | | | | | | |
| Gemini Pro (1-shot) | | | | | | |
| Gemini Pro (Self-consistency) | | | | | | |
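The table reports entropy and accuracy on each dataset and compares 0-shot, 1-shot, and self-consistency prompting. As a rough illustration only, here is a minimal sketch of how such numbers could be computed from predicted answer options. It assumes that entropy is the Shannon entropy of the distribution of predicted options across a dataset (0 bits when a model always picks the same option) and that self-consistency means majority voting over several sampled answers per question; the paper's exact definitions may differ. All function names are hypothetical and not part of this repo.

```python
import math
from collections import Counter


def majority_vote(samples):
    """Self-consistency (assumed): pick the most frequent sampled answer.

    Counter.most_common orders ties by first occurrence, so a tie goes to
    the answer that was sampled first.
    """
    return Counter(samples).most_common(1)[0][0]


def accuracy(predictions, answers):
    """Fraction of questions whose predicted option equals the ground truth."""
    if len(predictions) != len(answers) or not answers:
        raise ValueError("need equally sized, non-empty prediction and answer lists")
    return sum(p == a for p, a in zip(predictions, answers)) / len(answers)


def prediction_entropy(predictions):
    """Shannon entropy (bits) of the predicted-option distribution over a dataset.

    Assumption: 0 bits means the model always outputs the same option;
    predictions spread uniformly over k options give log2(k) bits.
    """
    counts = Counter(predictions)
    total = len(predictions)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical example: three sampled answers for each of two questions.
samples_per_question = [["A", "C", "A"], ["B", "B", "D"]]
gold = ["A", "D"]
final = [majority_vote(s) for s in samples_per_question]  # ["A", "B"]
print(accuracy(final, gold), prediction_entropy(final))   # 0.5 1.0
```

Under this reading, a low entropy flags a model that collapses onto a few options regardless of the puzzle, which accuracy alone would not reveal.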

Install the dependencies and set your OpenAI API key:

```bash
pip install -r requirements.txt
export OPENAI_API_KEY="sk-XXXX"
```

Data used in our paper:

#### Raven:

data/raven.tsv

#### Intelligence Test:

data/it-pattern.tsv
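The data files above use the `.tsv` extension. A minimal sketch for loading one with Python's standard `csv` module follows; it assumes the first line is a header row. The column names are not documented in this README, so `load_tsv` is a hypothetical helper and you should inspect the keys before relying on any field.

```python
import csv


def load_tsv(path):
    """Load a tab-separated file into a list of dicts keyed by its header row.

    Assumption: the first line is a header. Column names of the files in
    data/ are not documented here, so inspect rows[0].keys() before use.
    """
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f, delimiter="\t"))
```

For example, `rows = load_tsv("data/raven.tsv")` followed by `print(len(rows), list(rows[0]))` shows the number of tasks and the available columns.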

Note:

For the Raven dataset, the images are included in this repo.

For the Intelligence Test data, this repo does not host any images; instead, the image URLs are provided in `data/it-pattern/it-pattern.jsonl`.
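Because the Intelligence Test images are referenced by URL rather than hosted, you need to fetch them yourself. A minimal sketch using only the standard library, assuming the `.jsonl` file is JSON Lines (one object per line) and that each object stores an image URL under a key we call `url`. That key, the output file naming, and both helper functions are hypothetical; adapt them to the file's real schema.

```python
import json
import os
import urllib.parse
import urllib.request


def iter_image_urls(jsonl_path, url_key="url"):
    """Yield image URLs from a JSON Lines file, one JSON object per line.

    Hypothetical schema: each record stores a single URL under `url_key`.
    """
    with open(jsonl_path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line:
                continue  # skip blank lines
            record = json.loads(line)
            if url_key in record:
                yield record[url_key]


def download_images(jsonl_path, out_dir, url_key="url"):
    """Download every URL into out_dir as 00000.png, 00001.jpg, ... and return the paths."""
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    for i, url in enumerate(iter_image_urls(jsonl_path, url_key)):
        # Reuse the extension from the URL path, falling back to .png.
        ext = os.path.splitext(urllib.parse.urlparse(url).path)[1] or ".png"
        dest = os.path.join(out_dir, f"{i:05d}{ext}")
        urllib.request.urlretrieve(url, dest)
        paths.append(dest)
    return paths
```

Separating URL parsing from downloading makes it easy to check the schema assumption (`list(iter_image_urls(path))`) before making any network requests.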

Generate your own Raven data:

```bash
python data/raven/src/main.py --num-samples 20 --save-dir data/raven/images
```

We provide a simple script to evaluate GPT-4V on the Mensa examples:

```bash
python src/main.py --data data/manually_created.tsv --model GPT4V --prompt mensa --output_folder output
```

Required arguments:

- `--data`: the input data file.
- `--model`: the name of the model used for generation.
- `--prompt`: the name of the prompt used for generation.

Optional arguments:

- `--output_folder`: path to the output folder for the generations and predictions.
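If you want to script several runs, a small wrapper around the command line can help. This is a hypothetical convenience sketch, not part of the repo: it only uses the four flags listed above, and apart from `GPT4V` and `mensa` the valid `--model` and `--prompt` values are not documented here.

```python
import subprocess
import sys


def build_eval_command(data, model, prompt, output_folder=None):
    """Assemble the src/main.py invocation from the documented flags."""
    cmd = [sys.executable, "src/main.py",
           "--data", data, "--model", model, "--prompt", prompt]
    if output_folder is not None:  # --output_folder is optional
        cmd += ["--output_folder", output_folder]
    return cmd


def run_eval(data, model, prompt, output_folder=None):
    """Run one evaluation from the repo root; raises CalledProcessError on failure."""
    subprocess.run(build_eval_command(data, model, prompt, output_folder), check=True)
```

Calling `run_eval("data/manually_created.tsv", "GPT4V", "mensa", "output")` from the repo root is equivalent to the Mensa command shown earlier. Passing the arguments as a list avoids shell quoting issues with paths.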

Please consider citing our work if it is helpful to your research.

```bibtex
@article{zhang2024far,
  title={How Far Are We from Intelligent Visual Deductive Reasoning?},
  author={Yizhe Zhang and He Bai and Ruixiang Zhang and Jiatao Gu and Shuangfei Zhai and Josh Susskind and Navdeep Jaitly},
  year={2024},
  eprint={2403.04732},
  archivePrefix={arXiv},
  primaryClass={cs.AI}
}
```