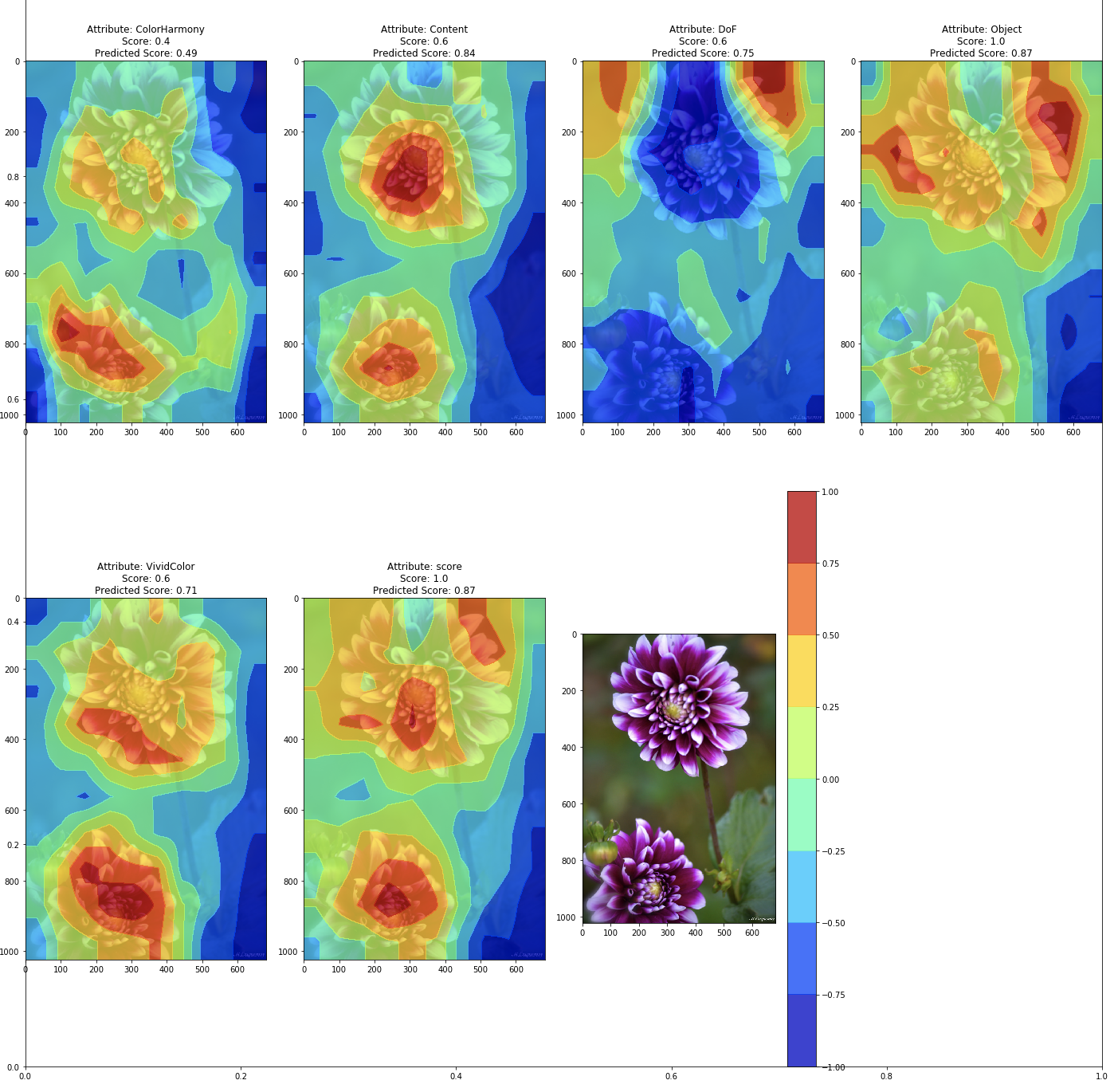

This is a PyTorch implementation of the paper *Learning Photography Aesthetics with Deep CNNs*. By applying global average pooling (GAP) to the feature maps output by each ResNet block, we can use Grad-CAM to visualize which parts of an image contribute to its aesthetics.
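The pooling step can be pictured with the minimal NumPy sketch below. It assumes a torchvision-style ResNet-50 on a 224x224 input, whose four residual stages output 256, 512, 1024 and 2048 channels. Random arrays stand in for real activations. The single linear head with six outputs (one per row of the results table) is a hypothetical illustration, not this repo's exact architecture.

```python
import numpy as np

# (channels, height, width) of the four ResNet-50 stage outputs for a 224x224
# input; random arrays below stand in for real activations.
RESNET50_STAGE_SHAPES = [(256, 56, 56), (512, 28, 28), (1024, 14, 14), (2048, 7, 7)]


def global_average_pool(feature_map):
    """Average a (C, H, W) feature map over its spatial axes, giving shape (C,)."""
    return feature_map.mean(axis=(1, 2))


def multi_block_gap_features(feature_maps):
    """GAP every block's output and concatenate them into one descriptor."""
    return np.concatenate([global_average_pool(f) for f in feature_maps])


def linear_head(features, weights, bias):
    """Hypothetical fully connected layer mapping GAP features to output scores."""
    return weights @ features + bias


rng = np.random.default_rng(0)
maps = [rng.standard_normal(shape) for shape in RESNET50_STAGE_SHAPES]
features = multi_block_gap_features(maps)  # 256 + 512 + 1024 + 2048 = 3840 values

n_outputs = 6  # hypothetical: one output per attribute/score in the results table
W = rng.standard_normal((n_outputs, features.size)) * 0.01
b = np.zeros(n_outputs)
scores = linear_head(features, W, b)
```

Pooling every block, rather than only the last one, lets the head see both low-level cues (colour, texture) from early blocks and more semantic cues from later ones.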

The authors of the paper have a Keras implementation here, though the code is quite messy, and I had to fix a few bugs in their visualization code.

To install the environment (assuming you have Anaconda installed), run:

```bash
conda env create -f environment.yml -n <env_name>
```

Then activate the environment (on conda 4.4 and later, `conda activate <env_name>` also works):

```bash
source activate <env_name>
```

The training images can be downloaded from my Google Drive.

The data files are in `data/*.csv`.

Training uses the included config file. To train, run:

```bash
python train.py --config_file_path config.json
```
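The training command passes the path of a JSON config via `--config_file_path`. The sketch below shows one way such a flag is typically read, using only `argparse` and `json`. The functions `parse_args` and `load_config` are hypothetical. The real `train.py` and the keys inside `config.json` are not shown here and may differ.

```python
import argparse
import json


def parse_args(argv=None):
    """Hypothetical CLI parser: only the flag name comes from the README."""
    parser = argparse.ArgumentParser(description="Train the aesthetics model")
    parser.add_argument(
        "--config_file_path",
        required=True,
        help="Path to the JSON training config (e.g. config.json)",
    )
    return parser.parse_args(argv)


def load_config(path):
    """Read the JSON config into a plain dict of training settings."""
    with open(path, "r", encoding="utf-8") as fh:
        return json.load(fh)
```

With this sketch, `load_config(parse_args().config_file_path)` would return the config as a dict. Keeping hyperparameters in a file rather than in CLI flags makes runs easier to reproduce.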

Training takes roughly 3 minutes per epoch.

If you don't want to train the model, you can go through the notebook and do the visualization/evaluation there instead. I have included a checkpoint from a pretrained model :)

| Attribute | Value |
|---|---|
| ColorHarmony | 0.503174 |
| Content | 0.597445 |
| DoF | 0.688919 |
| Object | 0.654278 |
| VividColor | 0.706658 |
| score | 0.716499 |
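This README does not name the metric behind these values. Since the notebook tracks correlation per epoch, one plausible reading is a rank correlation between predicted and ground-truth ratings; that is an assumption. As a sketch under that assumption, Spearman's rank correlation can be computed in NumPy as the Pearson correlation of the (tie-averaged) ranks:

```python
import numpy as np


def rankdata(values):
    """1-based ranks; tied values share the mean of their positions."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    sorted_vals = values[order]
    ranks = np.empty(len(values), dtype=float)
    n, i = len(values), 0
    while i < n:
        j = i
        # extend j over a run of equal values
        while j + 1 < n and sorted_vals[j + 1] == sorted_vals[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def spearman_rho(predicted, target):
    """Spearman's rho = Pearson correlation of the two rank vectors."""
    return float(np.corrcoef(rankdata(predicted), rankdata(target))[0, 1])


rho = spearman_rho([0.1, 0.4, 0.35, 0.8], [0.2, 0.5, 0.6, 0.9])
```

A rank correlation only asks whether the model orders images the same way as the human raters, which suits subjective scores whose absolute scale is arbitrary.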

These results are better than those reported in the paper, probably because I fine-tuned the weights of ResNet-50 in addition to training the FC layers connected to the GAP features from the ResNet blocks. I did this because I wasn't able to reproduce the paper's results otherwise.

More performance measures, such as loss per epoch and correlation per epoch, can be found in the notebook.

The visualization code is in `notebook/Pytorch Visualization-V2.ipynb`.
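The core Grad-CAM computation is sketched below in NumPy. It follows the standard recipe: weight each channel of a layer's activations by the spatial mean of the output's gradient with respect to that channel, sum the weighted channels, and apply a ReLU. In the notebook the activations and gradients would come from PyTorch autograd. Here they are assumed to be given as plain arrays, and this is not a copy of the notebook's code.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM heatmap for one layer.

    activations: (K, H, W) feature maps of the chosen layer.
    gradients:   (K, H, W) gradients of one output (e.g. an attribute score)
                 with respect to those feature maps.
    Returns an (H, W) map scaled to [0, 1].
    """
    # alpha_k: global-average-pooled gradient for each channel k
    weights = gradients.mean(axis=(1, 2))
    # weighted sum over channels (K,) x (K, H, W) -> (H, W); ReLU keeps positive evidence
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The ReLU keeps only regions that push the chosen score *up*. Regions that lower it are zeroed rather than shown.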

Here is an image picked randomly from the dataset:

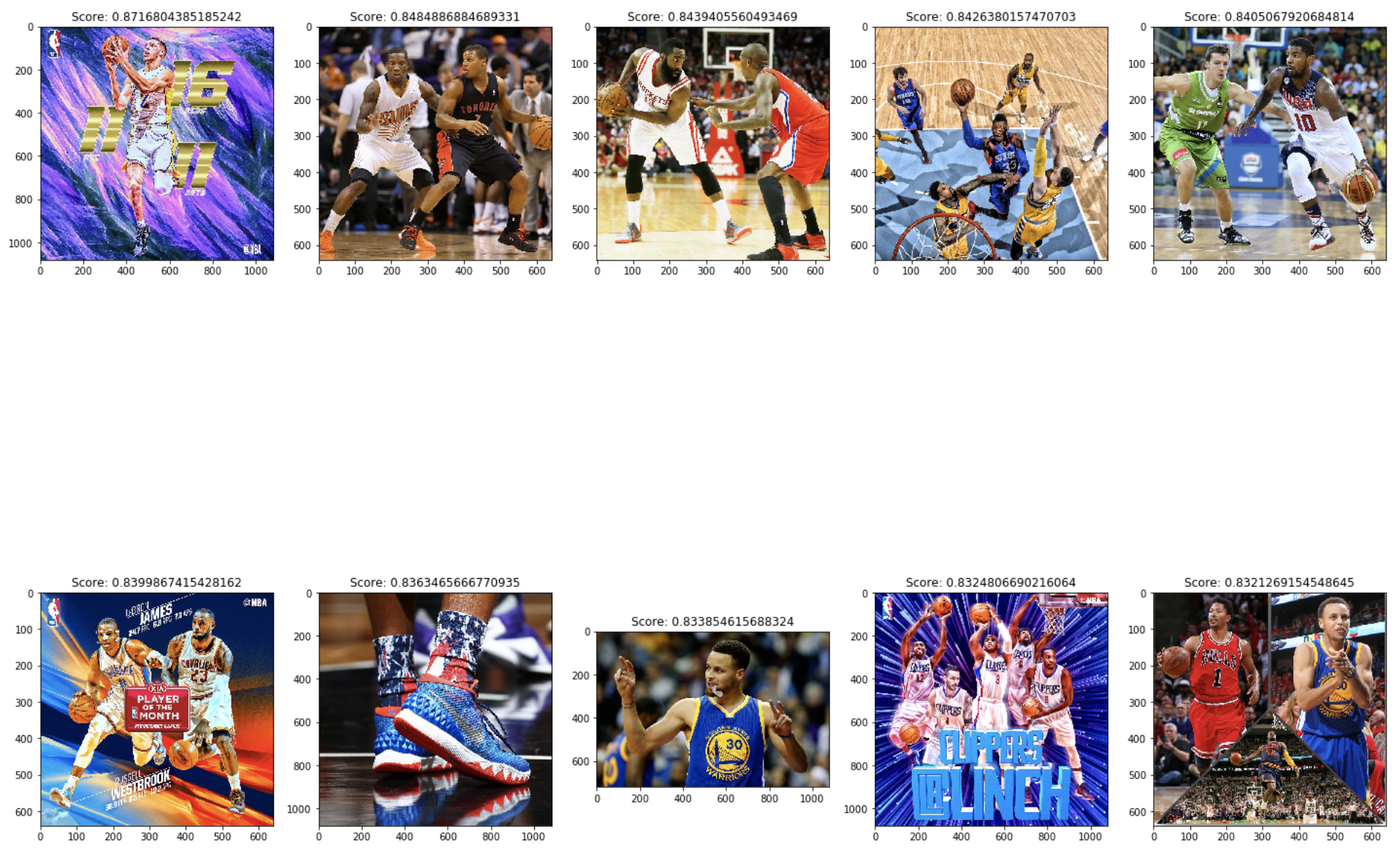

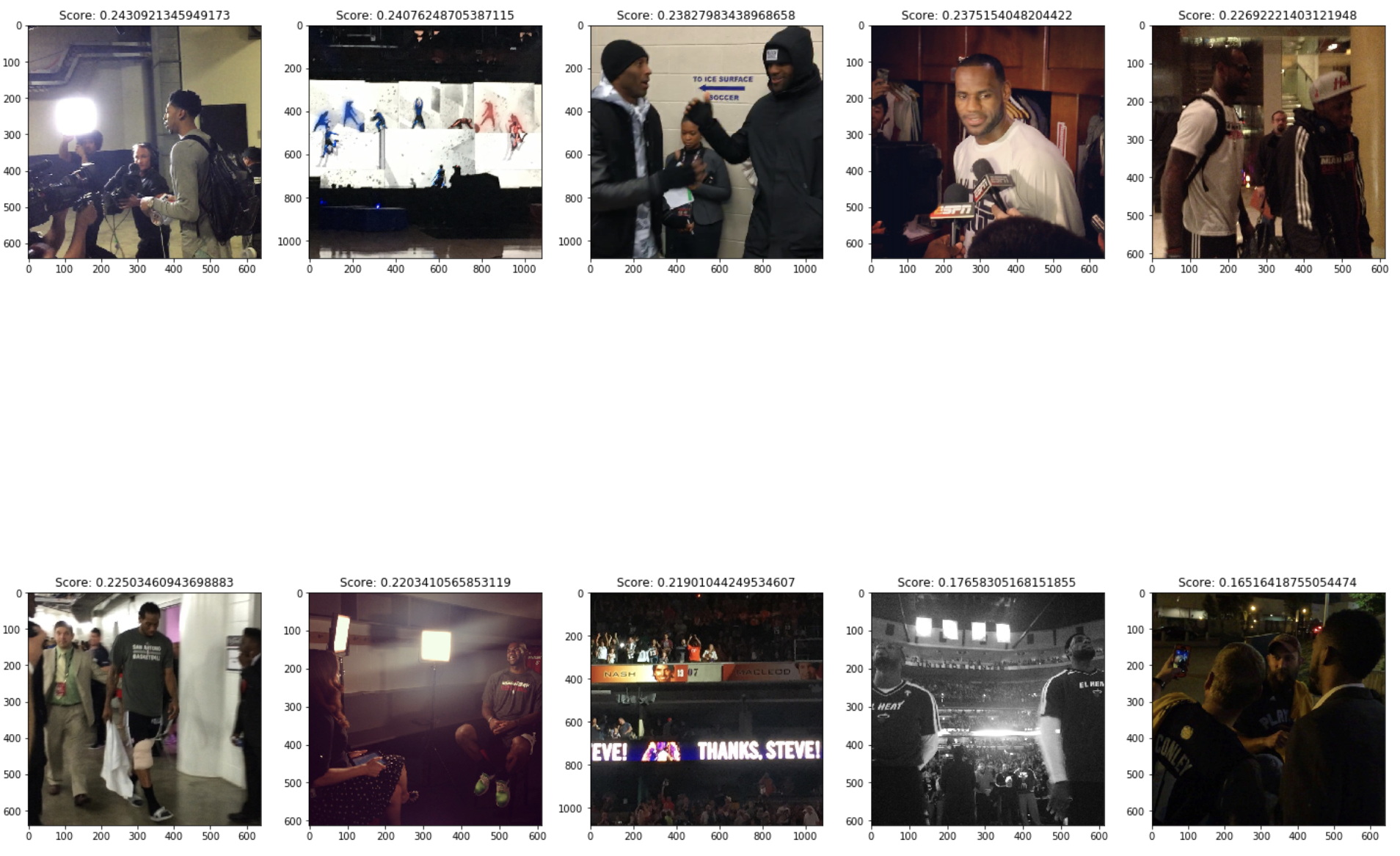

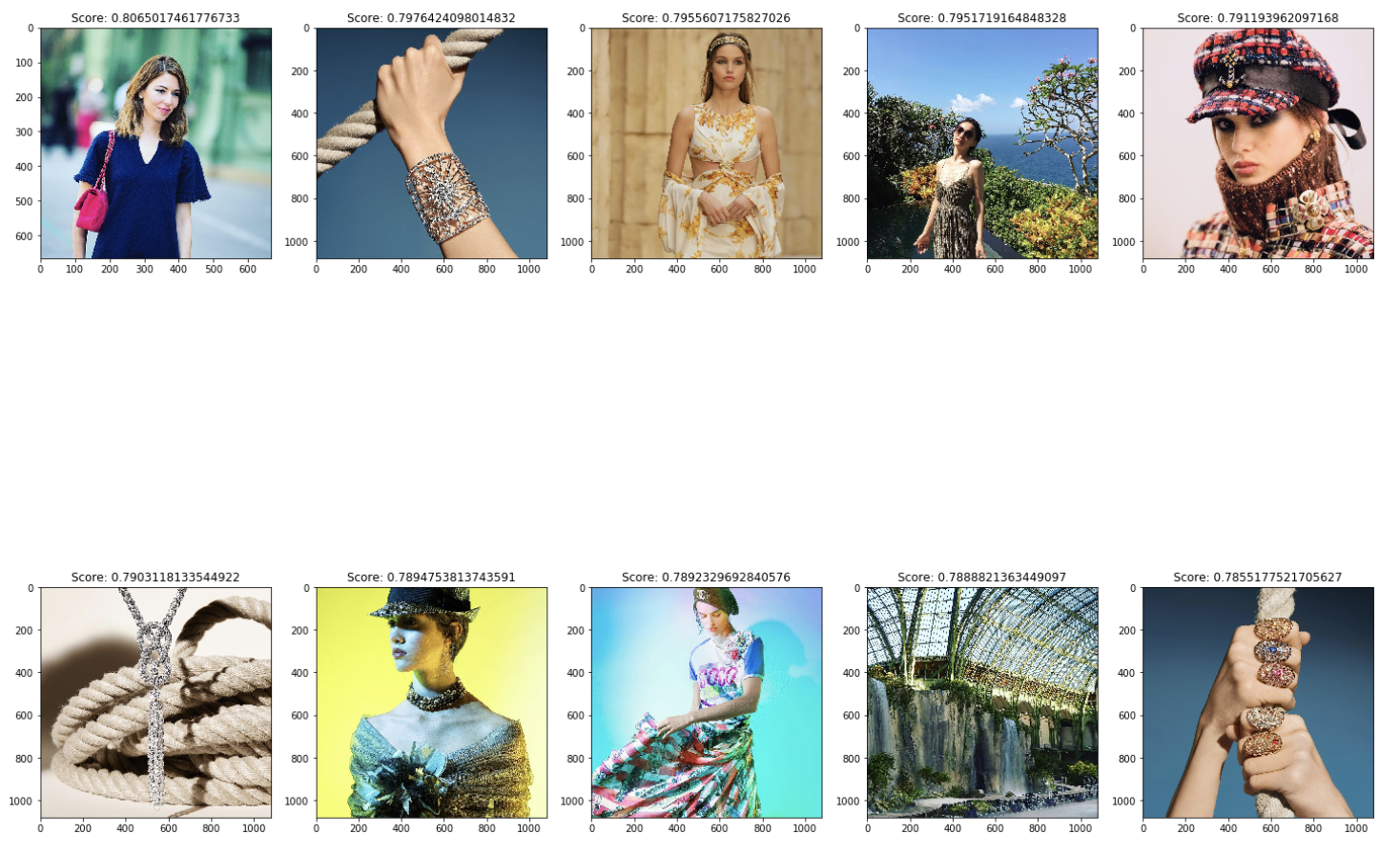
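To display a heatmap like these on top of the photo, the coarse map (e.g. 7x7 for the last ResNet-50 block on a 224x224 input) has to be resized to the image size first. The sketch below uses nearest-neighbour resizing and tints only the red channel, a deliberately crude stand-in for the colormap blending visualization code usually does. `upsample_nearest` and `overlay` are hypothetical helpers.

```python
import numpy as np


def upsample_nearest(cam, out_h, out_w):
    """Nearest-neighbour resize of an (h, w) map to (out_h, out_w)."""
    h, w = cam.shape
    rows = np.arange(out_h) * h // out_h  # source row for each output row
    cols = np.arange(out_w) * w // out_w  # source column for each output column
    return cam[rows[:, None], cols[None, :]]


def overlay(image, cam, alpha=0.5):
    """Blend a [0, 1] heatmap into the red channel of an (H, W, 3) image in [0, 1]."""
    heat = upsample_nearest(cam, image.shape[0], image.shape[1])
    tinted = image.copy()
    tinted[..., 0] = np.clip((1 - alpha) * image[..., 0] + alpha * heat, 0.0, 1.0)
    return tinted
```

Bilinear resizing gives smoother maps. Nearest-neighbour keeps the sketch dependency-free and makes the coarse grid of the original feature map visible.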

For fun, I scraped some images off Instagram to see whether my model really makes sense!

Here are the top 10 rated images scraped from the NBA's Instagram account:

Here are the bottom 10 rated images:

Here are the top 10 rated images scraped from Dior's Instagram account:

Here are the bottom 10 rated images scraped from Dior's Instagram account:
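Picking the top and bottom 10 images comes down to sorting the scraped images by the model's predicted overall `score`. A minimal sketch follows. The filenames and scores in the check are made up, and `top_and_bottom` is a hypothetical helper, not a function from this repo.

```python
import numpy as np


def top_and_bottom(names, scores, k=10):
    """Return the k highest-scoring names (best first) and k lowest (worst first)."""
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(-scores, kind="stable")  # indices sorted by descending score
    top = [names[i] for i in order[:k]]
    bottom = [names[i] for i in order[::-1][:k]]
    return top, bottom
```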