Learning Grimaces By Watching TV

By Samuel Albanie and Andrea Vedaldi

This repo contains links to the datasets used in the paper Learning Grimaces By Watching TV, together with the code needed to reproduce its experiments.

Models

The trained emotion recognition models used in this work can be downloaded for use with MatConvNet and PyTorch:

Training Code

The code is organised as follows:

- `fer-exps` contains the experiments used to produce the benchmarks in Table 2 of the paper
- `sfew-exps` contains the experiments used to produce the benchmarks in Table 3 of the paper
- `facevalue-exps` contains the experiments used to produce Table 4 of the paper
- `core` contains shared code used by each of the experiments

Dependencies: the training code uses the MatConvNet framework, which you can get from here.

Datasets

The experiments in this paper use three datasets:

- The FER dataset is publicly accessible and contains about 35,000 emotion-labelled faces (available here).

- The SFEW dataset is freely available for research use. It contains about 1,500 emotion-labelled faces (available here).

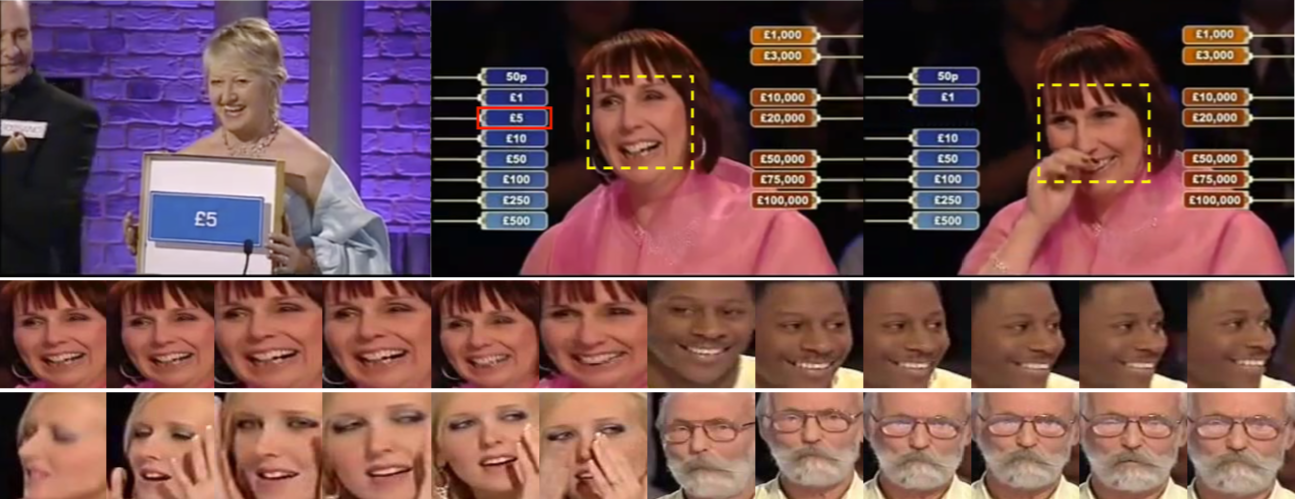

- The facevalue dataset, released alongside the paper, is freely available for research use. It contains about 192,000 event-labelled faces (available here). Some example face tracks are shown below:

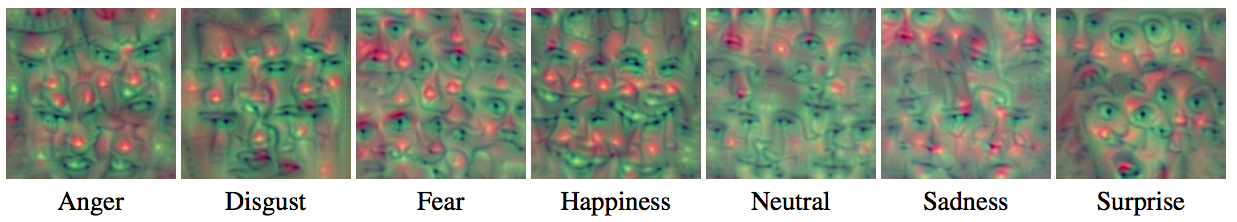
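The FER data is commonly distributed as a single CSV file (`fer2013.csv`) with the columns `emotion`, `pixels` and `Usage`, where each face is a 48x48 grayscale image stored as space-separated intensities. The sketch below assumes that layout and the usual 0-6 label order (Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral) and shows one way to read it with the Python standard library. It is not part of this repo's training code; `parse_fer_rows` is a hypothetical helper, and the header and label order should be checked against the copy you download.

```python
import csv
import io

# Assumed label order of the public fer2013.csv release (verify against your copy).
FER_CLASSES = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]
FER_SIDE = 48  # each face is assumed to be 48x48 grayscale


def parse_fer_rows(fileobj):
    """Yield (label_index, image, usage) tuples from a fer2013-style CSV.

    `image` is a list of FER_SIDE rows, each a list of FER_SIDE ints in [0, 255].
    `usage` is the split name stored in the `Usage` column.
    """
    reader = csv.DictReader(fileobj)
    for row in reader:
        pixels = [int(p) for p in row["pixels"].split()]
        if len(pixels) != FER_SIDE * FER_SIDE:
            raise ValueError(f"expected {FER_SIDE * FER_SIDE} pixels, got {len(pixels)}")
        # Reshape the flat pixel list into rows of the square image.
        image = [pixels[r * FER_SIDE:(r + 1) * FER_SIDE] for r in range(FER_SIDE)]
        yield int(row["emotion"]), image, row["Usage"]


# Usage on a tiny synthetic file (one uniformly grey face), not real FER data.
demo = "emotion,pixels,Usage\n3," + " ".join(["128"] * FER_SIDE * FER_SIDE) + ",Training\n"
for label, image, usage in parse_fer_rows(io.StringIO(demo)):
    print(FER_CLASSES[label], len(image), len(image[0]), usage)  # Happy 48 48 Training
```

Using a generator keeps memory flat when streaming the full file; the split names in `Usage` can then be used to separate training and test faces.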

Emotion recognition model visualizations

The network inversions shown in Figure 2 of the paper (and reproduced below) were produced by applying Aravindh Mahendran's deep goggle code (which can be found here) to the VGG-VD-16 model trained on FER.
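Representation inversion of this kind searches for an input whose features match a target representation, minimising a feature-reconstruction loss plus regularisers on the input (the underlying method uses image priors such as an α-norm and total variation). As a toy illustration of that objective only, the sketch below inverts a hypothetical one-layer `tanh` "network" by gradient descent, with a plain L2 penalty standing in for the image priors. It is not the deep goggle code, and `phi` and `invert` are illustrative names.

```python
import math
import random


def phi(W, x):
    """Toy representation: one linear layer followed by tanh (hypothetical CNN stand-in)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W]


def invert(W, target, dim, lam=1e-3, lr=0.05, steps=500, seed=0):
    """Find x minimising ||phi(W, x) - target||^2 + lam * ||x||^2 by gradient descent."""
    rng = random.Random(seed)
    x = [rng.uniform(-0.1, 0.1) for _ in range(dim)]  # small random starting input
    for _ in range(steps):
        grad = [2.0 * lam * xi for xi in x]  # gradient of the L2 regulariser
        for row, t in zip(W, target):
            y = math.tanh(sum(w * xi for w, xi in zip(row, x)))
            # Chain rule: d/dx (y - t)^2 = 2 (y - t) (1 - y^2) * row
            coeff = 2.0 * (y - t) * (1.0 - y * y)
            for j, w in enumerate(row):
                grad[j] += coeff * w
        x = [xi - lr * g for xi, g in zip(x, grad)]
    return x


# Usage: recover a known input from its toy representation.
W = [[1.0, 0.5], [-0.3, 1.0], [0.2, -0.8]]
x_true = [0.4, -0.2]
x_hat = invert(W, phi(W, x_true), dim=2)
print([round(v, 3) for v in x_hat])
```

Because this toy `W` has more outputs than inputs, `x_hat` ends up close to `x_true`, apart from a small shrinkage caused by the L2 term. Deep network representations generally discard far more information, which is why the choice of image prior matters much more there.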

Citation

If you find this work useful, please consider citing:

S. Albanie and A. Vedaldi, "Learning Grimaces by Watching TV", Proceedings of the British Machine Vision Conference (BMVC), 2016.