Develop an application which facilitates:

- Gender recognition of a person based on a voice recording.

- Age group identification of a person based on a voice recording.

- Spoken language recognition of a person based on a voice recording.

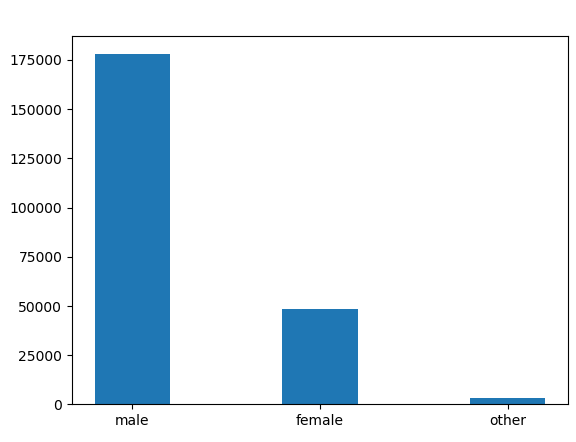

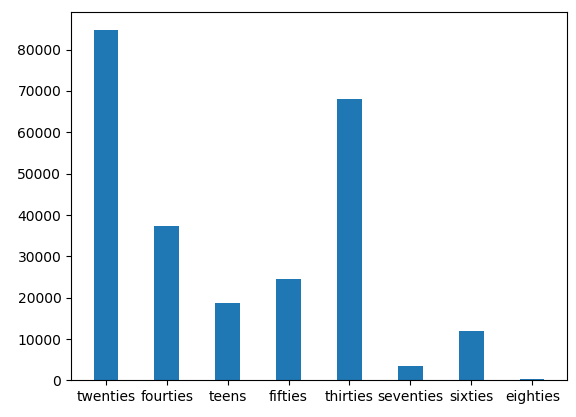

For this project we used the Mozilla Common Voice dataset: the English dataset for gender and age classification, and the English, French and German datasets for language classification. The gender dataset contains 230,000 samples labeled as male, female or other. The age dataset contains 250,000 samples labeled from teens to eighties in ten-year increments. In the language dataset, each sample is labeled with its language.

Before preprocessing the audio, we prepared the datasets. After sampling equally from each dataset, we applied the following filters:

- For the gender dataset we took only adults (age > 20).

- For the age dataset we took only speakers younger than 70.

- For the language dataset we took samples only from speakers in their 20s and 30s.
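
The three filters above can be sketched as simple predicates over the clip metadata. This is a minimal sketch, not the project's actual code: it assumes each clip is a dict with `age` and `gender` keys, the age-group label strings are assumptions about how the metadata is spelled, and all function names are hypothetical. Restricting the gender filter to male and female reflects the two classes used for training.

```python
# Age-group labels in ascending order. The exact spelling is an assumption
# about the dataset metadata and may need adjusting.
AGE_GROUPS = ["teens", "twenties", "thirties", "fourties",
              "fifties", "sixties", "seventies", "eighties"]

def keep_for_gender(clip):
    """Adults only (twenties and older), restricted to the two trained classes."""
    return clip["age"] in AGE_GROUPS[1:] and clip["gender"] in ("male", "female")

def keep_for_age(clip):
    """Speakers younger than 70: teens through sixties."""
    return clip["age"] in AGE_GROUPS[:6]

def keep_for_language(clip):
    """Speakers in their 20s and 30s."""
    return clip["age"] in ("twenties", "thirties")

# Tiny made-up metadata sample, for illustration only.
clips = [
    {"age": "teens", "gender": "male"},
    {"age": "thirties", "gender": "female"},
    {"age": "seventies", "gender": "male"},
    {"age": "twenties", "gender": "other"},
]

gender_clips = [c for c in clips if keep_for_gender(c)]
age_clips = [c for c in clips if keep_for_age(c)]
language_clips = [c for c in clips if keep_for_language(c)]
```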

For training and validation of the gender model we took 97,000 samples split into two classes: male and female. For the age model we took 71,000 samples split into 6 classes (teens, twenties, thirties, forties, fifties and sixties). For the language model we took 15,000 samples, 5,000 from each language (English, French and German).

Audio preprocessing and feature extraction were done using librosa. We loaded each original audio file with librosa at a sample rate of 44.1 kHz, converting the signal to mono.

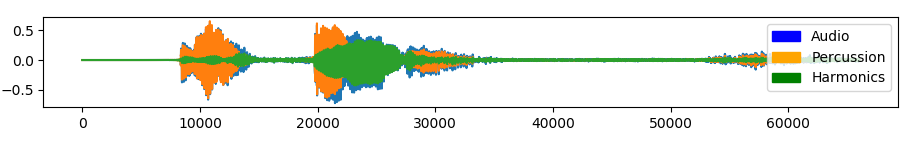

Initial audio sample:

After loading the audio, we preprocessed it by removing the background noise and the silent fragments.

This was done in two steps:

* apply a threshold to the whole audio (silence <= 18 dB)

* apply a threshold to the leading and trailing parts of the audio signal (silence <= 10 dB).
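
The two thresholding steps above can be illustrated with a NumPy-only sketch. It is not the project's code: it assumes the thresholds are measured in dB below the loudest frame (the convention librosa's `librosa.effects.split` and `librosa.effects.trim` use for their `top_db` parameter), it works on non-overlapping frames for simplicity, and the function names and the frame length of 512 samples are hypothetical.

```python
import numpy as np

def frame_levels_db(y, frame_length):
    """Split y into non-overlapping frames and return (frames, levels), where
    levels are RMS values in dB relative to the loudest frame.
    A trailing partial frame is dropped."""
    n_frames = len(y) // frame_length
    frames = y[: n_frames * frame_length].reshape(n_frames, frame_length)
    if n_frames == 0:
        return frames, np.empty(0)
    rms = np.maximum(np.sqrt(np.mean(frames ** 2, axis=1)), 1e-10)
    return frames, 20.0 * np.log10(rms / rms.max())

def drop_silent_frames(y, top_db=18.0, frame_length=512):
    """Step 1: remove every frame more than top_db below the loudest frame."""
    frames, db = frame_levels_db(y, frame_length)
    return frames[db > -top_db].reshape(-1)

def trim_edges(y, top_db=10.0, frame_length=512):
    """Step 2: cut leading and trailing frames more than top_db below the peak."""
    frames, db = frame_levels_db(y, frame_length)
    loud = np.flatnonzero(db > -top_db)
    if loud.size == 0:
        return y[:0]
    return frames[loud[0] : loud[-1] + 1].reshape(-1)

# Synthetic example: a 440 Hz tone surrounded by digital silence.
t = np.arange(20 * 512) / 16000.0
tone = 0.5 * np.sin(2 * np.pi * 440.0 * t)
signal = np.concatenate([np.zeros(10 * 512), tone, np.zeros(10 * 512)])

step1 = drop_silent_frames(signal, top_db=18.0)
step2 = trim_edges(step1, top_db=10.0)
```

In this synthetic case both steps leave exactly the tone, because the silent frames sit far below either threshold.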

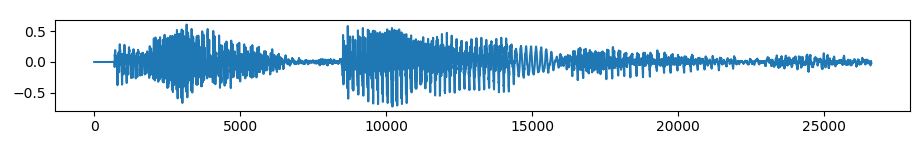

Audio after first step:

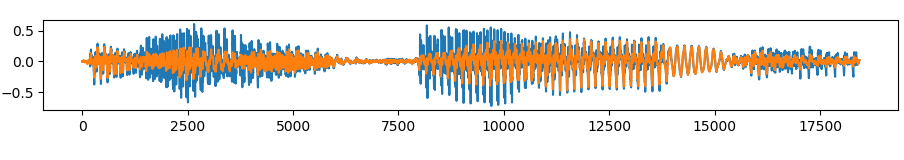

Audio after second step:

The harmonic components of the audio (the melodic part) are shown in orange, and the percussive components in green. We decided to keep both.

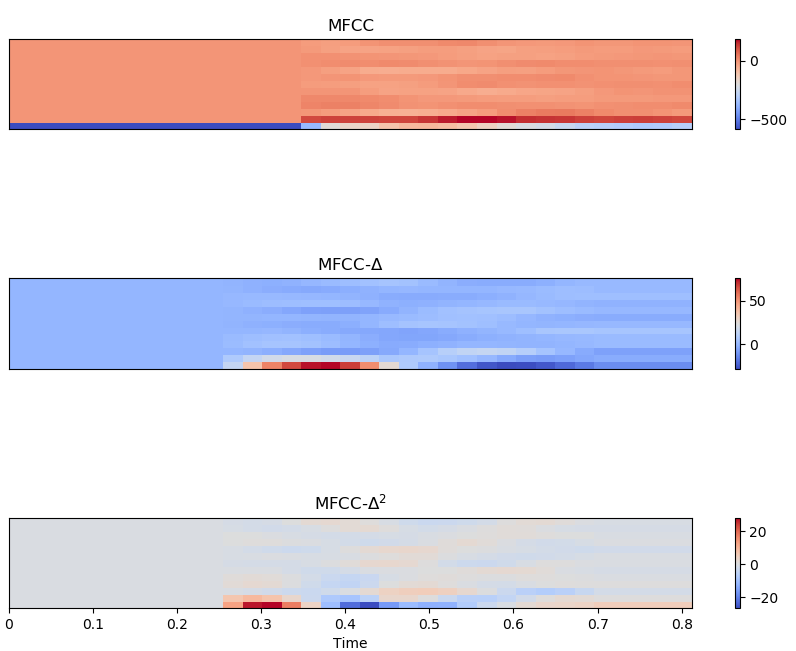

We extracted 41 features from each audio file: 13 MFCCs, 13 delta-MFCCs, 13 delta-delta-MFCCs, 1 pitch estimate and 1 magnitude vector. We chose 13 MFCCs because this number is popular in the literature.

MFCCs, delta-MFCCs and delta-delta-MFCCs:

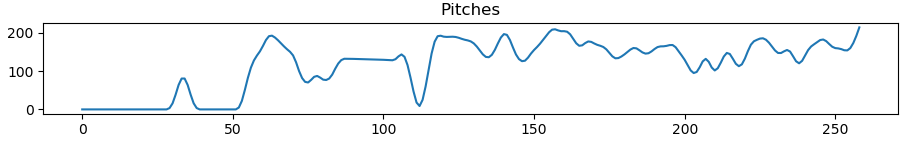

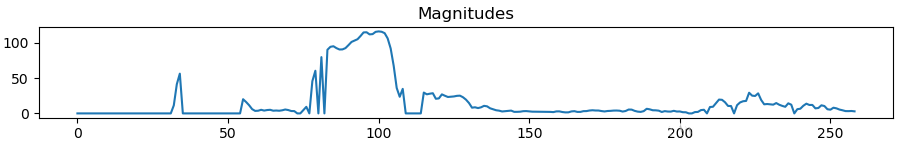
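
To make the relationship between the three MFCC blocks concrete, here is a minimal sketch that derives delta and delta-delta features from an MFCC matrix and stacks them into 39 rows. It uses plain finite differences via `np.gradient` as a stand-in (an assumption, not the project's method; librosa offers `librosa.feature.delta` for a smoothed estimate), and the random matrix is only a placeholder for real MFCCs.

```python
import numpy as np

rng = np.random.default_rng(0)

# Placeholder for real MFCCs: 13 coefficients x 200 frames.
mfcc = rng.normal(size=(13, 200))

# First-order change of each coefficient over time (delta-MFCCs).
delta = np.gradient(mfcc, axis=1)

# Change of the deltas over time (delta-delta-MFCCs).
delta2 = np.gradient(delta, axis=1)

# Stack into one matrix: 13 + 13 + 13 = 39 rows per frame.
features = np.vstack([mfcc, delta, delta2])
```

Adding one pitch row and one magnitude row per frame would bring the total to the 41 features described above.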

Pitches and magnitudes were extracted from the audio sample using librosa. We then took the maximum of those values and applied a smoothing function (a Hann window) over the pitches with a window of 10 ms.
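
The reduction and smoothing step can be sketched as follows. This is a hedged illustration only: the input arrays are placeholders shaped like a pitch tracker's output (frequency bins by frames, such as the pair returned by `librosa.piptrack`), `smooth_hann` is a hypothetical helper, and the window is given in frames because converting 10 ms to a frame count depends on a hop length the text does not state.

```python
import numpy as np

def smooth_hann(track, window_frames):
    """Smooth a 1-D track with a normalized Hann window.
    window_frames must be at least 3, otherwise the window sums to zero."""
    window = np.hanning(window_frames)
    window = window / window.sum()
    return np.convolve(track, window, mode="same")

# Placeholder arrays: 64 frequency bins x 50 frames.
pitches = np.full((64, 50), 100.0)
magnitudes = np.ones((64, 50))

# Keep one value per frame: the maximum over all frequency bins.
pitch_per_frame = pitches.max(axis=0)
magnitude_per_frame = magnitudes.max(axis=0)

# Smooth the pitch track; values near the edges are damped by zero padding.
smoothed = smooth_hann(pitch_per_frame, window_frames=5)
```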

Magnitudes for the audio sample:

We chose a DNN as the model because DNNs succeed in exploiting large quantities of data without overfitting and learn multilevel representations. Because the dataset is composed of temporal sequences of speech, we wanted to avoid stacking audio frames as inputs. Plain RNNs are prone to vanishing gradients and fail to learn when time lags exceed 5-10 time steps, so we chose the Long Short-Term Memory (LSTM) architecture.

For the purpose of evaluating the resulting models a train/validation split was made on the dataset with 80% of the samples for the training of the models and 20% for the validation. Both the training and the validation sets were normalized to have zero mean and unit standard deviation. Each model was optimized mini-batch-wise with a mini-batch size of 128 samples.
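
The split, normalization and batching described above can be sketched in NumPy. The data here is random placeholder data, the helper `minibatches` is hypothetical, and computing the mean and standard deviation on the training set only (then reusing them for validation) is an assumption based on common practice; the text does not say how the statistics were obtained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Placeholder data: 1000 samples with 41 features each, two classes.
X = rng.normal(loc=5.0, scale=3.0, size=(1000, 41))
y = rng.integers(0, 2, size=1000)

# Shuffle, then split 80% / 20%.
idx = rng.permutation(len(X))
n_train = int(0.8 * len(X))
train_idx, val_idx = idx[:n_train], idx[n_train:]
X_train, y_train = X[train_idx], y[train_idx]
X_val, y_val = X[val_idx], y[val_idx]

# Zero mean, unit standard deviation per feature (statistics from training data).
mean = X_train.mean(axis=0)
std = X_train.std(axis=0) + 1e-8  # small constant guards against division by zero
X_train_n = (X_train - mean) / std
X_val_n = (X_val - mean) / std

def minibatches(features, labels, batch_size=128):
    """Yield consecutive mini-batches; the last one may be smaller."""
    for start in range(0, len(features), batch_size):
        yield features[start:start + batch_size], labels[start:start + batch_size]

batches = list(minibatches(X_train_n, y_train))
```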

LSTM cell visual representation, source: Google

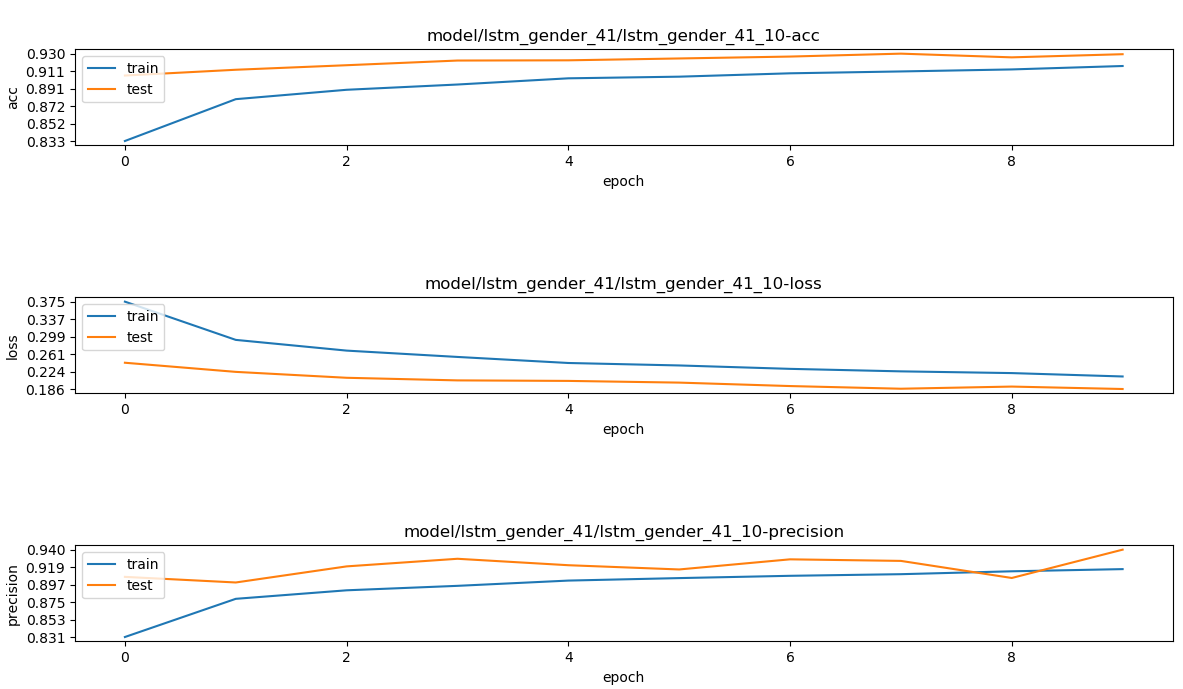
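
For reference, the cell pictured above is commonly written with the following update equations. This is the standard textbook formulation, not something taken from the project; the symbols are introduced here for illustration: $x_t$ is the input at time step $t$, $h_t$ the hidden state, $c_t$ the cell state, $\sigma$ the logistic sigmoid and $\odot$ element-wise multiplication.

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

The additive update of $c_t$ is what lets gradients flow across many time steps, which is the property that motivates choosing an LSTM over a plain RNN.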

For gender classification we use a model containing 2 LSTM layers with 100 units each. The first layer has a dropout of 30% and the second a dropout of 20%. The output layer has 2 units and uses softmax as the activation function.
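
As a rough sense of the model's size, the number of trainable weights can be worked out by hand. The sketch below assumes standard LSTM layers (four gates, each with input weights, recurrent weights and a bias) and assumes each time step is a 41-dimensional feature vector; neither detail is confirmed by the text, and the helper names are hypothetical. Dropout adds no weights.

```python
def lstm_params(input_dim, units):
    """Weights of one standard LSTM layer: 4 gates, each with
    input weights, recurrent weights and a bias vector."""
    return 4 * (units * (input_dim + units) + units)

def dense_params(input_dim, units):
    """Weights of a fully connected layer: weight matrix plus bias."""
    return input_dim * units + units

n_features = 41  # assumption: one 41-dimensional feature vector per time step

layers = [
    lstm_params(n_features, 100),  # first LSTM layer
    lstm_params(100, 100),         # second LSTM layer
    dense_params(100, 2),          # softmax output layer
]
total = sum(layers)
```

Under these assumptions the three layers hold 56,800, 80,400 and 202 weights, for a total of 137,402.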

The model is trained for 10 epochs using categorical cross-entropy as the loss function and the Adam optimizer to update the network weights. After 10 epochs we obtained a loss of 0.19, an accuracy of 0.93 and a precision of 0.94 on the validation set.

Gender model performance chart:

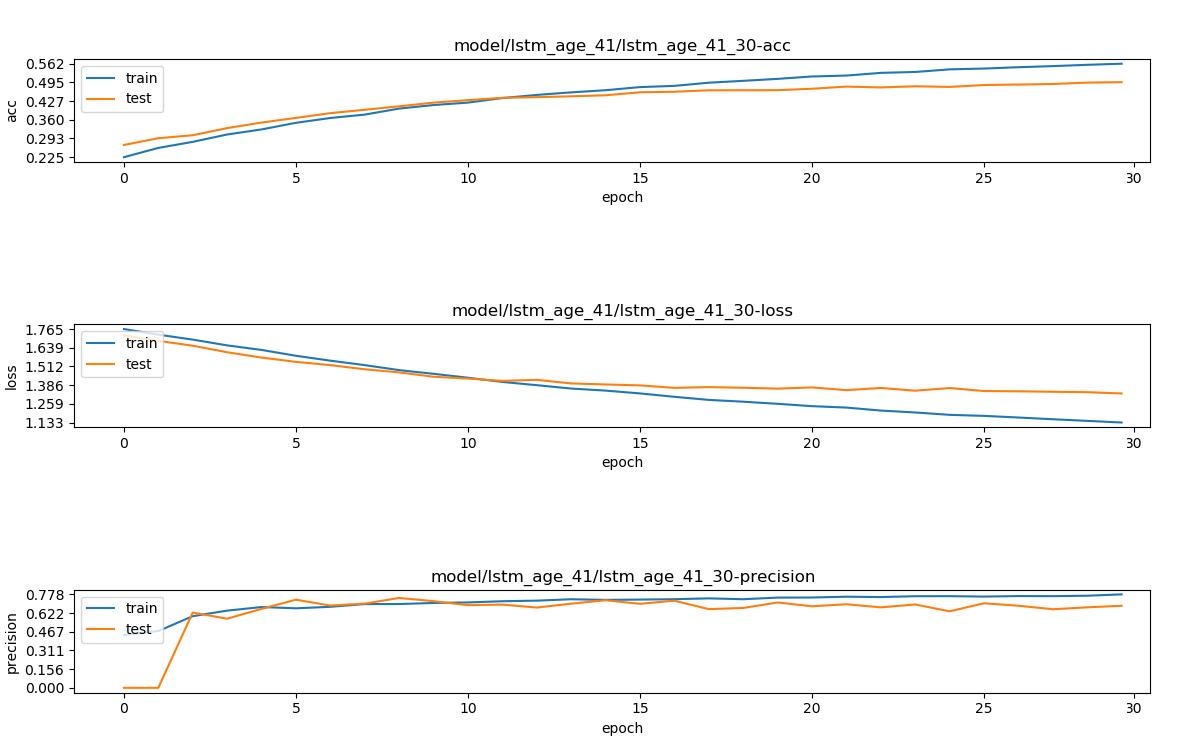
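
For readers unfamiliar with the reported metrics, the sketch below shows how loss, accuracy and precision can be computed from one-hot labels and softmax outputs. It is an illustration on made-up numbers, not the evaluation code used here; in particular, the text does not say how precision was averaged across classes, so the helper computes it for a single class.

```python
import numpy as np

def categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean negative log-probability assigned to the true class."""
    y_prob = np.clip(y_prob, eps, 1.0)
    return float(-np.mean(np.sum(y_true * np.log(y_prob), axis=1)))

def accuracy(y_true, y_prob):
    """Fraction of samples whose most probable class is the true class."""
    return float(np.mean(y_true.argmax(axis=1) == y_prob.argmax(axis=1)))

def precision(y_true, y_prob, positive_class):
    """Of the samples predicted as positive_class, the fraction that truly are."""
    predicted = y_prob.argmax(axis=1) == positive_class
    actual = y_true.argmax(axis=1) == positive_class
    if predicted.sum() == 0:
        return 0.0
    return float((predicted & actual).sum() / predicted.sum())

# Made-up example: 4 samples, 2 classes, one-hot labels and softmax outputs.
y_true = np.array([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=float)
y_prob = np.array([[0.9, 0.1], [0.4, 0.6], [0.2, 0.8], [0.3, 0.7]])

loss = categorical_crossentropy(y_true, y_prob)
acc = accuracy(y_true, y_prob)
prec_class_1 = precision(y_true, y_prob, positive_class=1)
```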

For age classification we used an LSTM with 2 hidden layers of size 256. To prevent overfitting, we applied a dropout of 30% to each hidden layer. We then added a fully connected layer of the same size using ReLU as the activation function. Finally, the output layer has a size equal to the number of age classes and uses softmax as the activation function.
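
The part of this model that follows the LSTM layers, a ReLU layer of size 256 and a softmax output over 6 age classes, can be sketched as a NumPy forward pass. The weights and the LSTM output below are random placeholders and the variable names are hypothetical; the sketch only shows how the two activations turn a 256-dimensional vector into class probabilities.

```python
import numpy as np

def relu(x):
    """Element-wise max(x, 0)."""
    return np.maximum(x, 0.0)

def softmax(x):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    shifted = x - x.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
n_hidden, n_classes = 256, 6

# Placeholder for the output of the last LSTM layer: 4 samples x 256 units.
lstm_output = rng.normal(size=(4, n_hidden))

# Randomly initialized placeholder weights for the two dense layers.
W1 = rng.normal(scale=0.05, size=(n_hidden, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(scale=0.05, size=(n_hidden, n_classes))
b2 = np.zeros(n_classes)

hidden = relu(lstm_output @ W1 + b1)   # fully connected layer with ReLU
probs = softmax(hidden @ W2 + b2)      # one probability per age class
predicted_class = probs.argmax(axis=1)
```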

The model is trained for 30 epochs using categorical cross-entropy as the loss function and the Adam optimizer for weight learning.

After 30 epochs we obtained a loss of 1.32, an accuracy of 0.49 and a precision of 0.68 on the validation set.

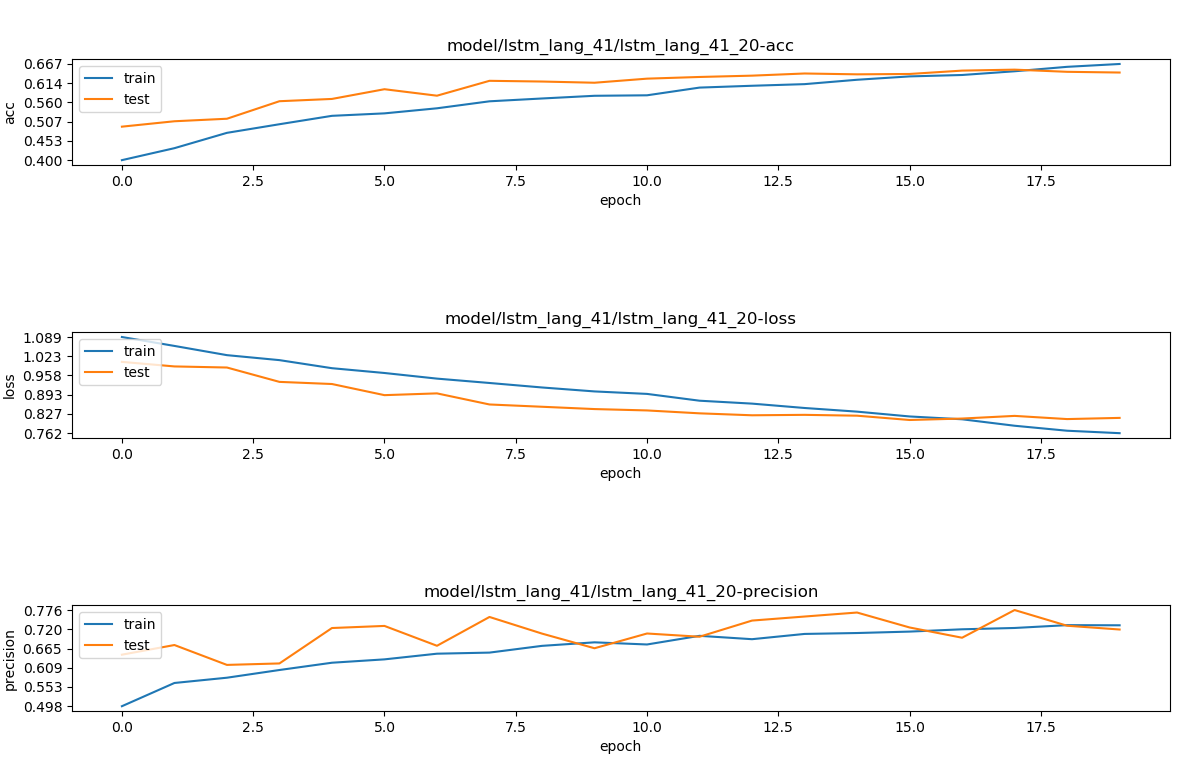

For language classification we trained an LSTM model containing 2 hidden layers of 512 units each, with dropout of 60% and 40% respectively to avoid overfitting. The output layer consists of 3 units, one for each language to be identified, and uses softmax as its activation.
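
To show what dropout rates of 60% and 40% mean in practice, here is a minimal sketch of inverted dropout, the training-time variant commonly used by deep learning frameworks: a random fraction of activations is zeroed and the survivors are scaled up so the expected value stays the same. The `dropout` helper and the all-ones activations are illustrative assumptions, not the project's code.

```python
import numpy as np

def dropout(x, rate, rng):
    """Zero each element with probability `rate` and rescale the rest
    by 1 / (1 - rate) so the expected activation is unchanged."""
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)

rng = np.random.default_rng(0)

# Placeholder activations: 100 samples x 512 units, all equal to 1.
activations = np.ones((100, 512))

dropped_first = dropout(activations, 0.6, rng)   # first hidden layer: 60%
dropped_second = dropout(activations, 0.4, rng)  # second hidden layer: 40%
```

With a rate of 60%, surviving activations are scaled by 2.5, so the average activation stays close to its original value even though most units are silenced.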

The model is trained for 20 epochs using categorical cross-entropy as the loss function and the Adam optimizer for weight learning.

After 20 epochs we obtained a loss of 0.8, an accuracy of 0.64 and a precision of 0.71 on the validation set.

Language model performance chart:

Forgacs Amelia

Enasoae Simona

Dragan Alex