An intuitive demonstration of a feedforward neural network for classification, with a Streamlit UI front-end

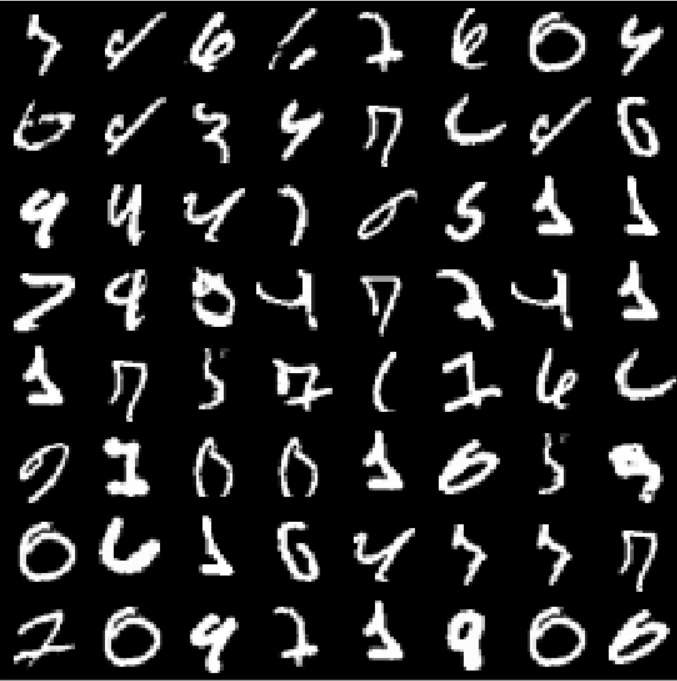

This repo contains the code for a feedforward neural network with two hidden layers, ReLU activations, and dropout, trained on the MNIST handwritten-digit classification task.

The MNIST dataset contains 28 × 28 grayscale images of handwritten digits.
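Here is a minimal sketch of how one 28 × 28 image becomes a 784-dimensional input vector. It assumes NumPy and raw pixel values in [0, 255]. The `flatten_image` helper and the scaling to [0, 1] are illustrative assumptions, not necessarily the preprocessing this repo uses.

```python
import numpy as np

def flatten_image(image: np.ndarray) -> np.ndarray:
    """Flatten a 28 x 28 grayscale image into a 784-dimensional vector.

    Hypothetical helper: assumes raw intensities in [0, 255] and scales
    them to [0, 1], which is an illustrative choice.
    """
    if image.shape != (28, 28):
        raise ValueError(f"expected a 28 x 28 image, got {image.shape}")
    # Row-major flatten: pixel (row r, column c) lands at index r * 28 + c.
    return image.astype(np.float32).reshape(784) / 255.0

img = np.zeros((28, 28), dtype=np.uint8)
img[14, 7] = 255                 # a single bright pixel
x = flatten_image(img)
print(x.shape, x[14 * 28 + 7])   # (784,) 1.0
```

Flattening discards the 2D layout, which is why a fully connected network needs an input layer of exactly 28 × 28 = 784 units.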

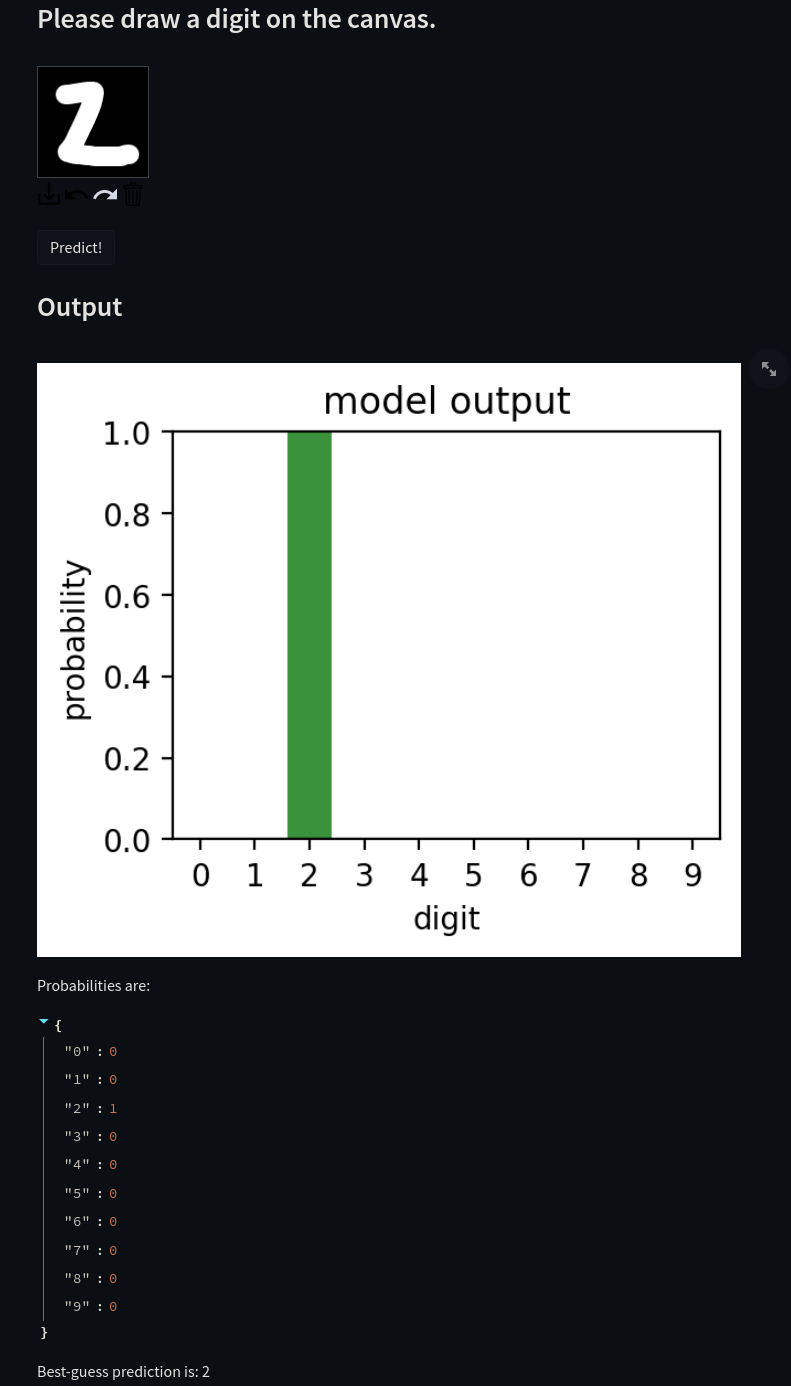

The model takes a 784-dimensional input (each 28 × 28 image flattened into a vector) and passes it through two fully connected hidden layers of 512 and 128 units. A 10-neuron output layer follows, with one neuron per digit class. ReLU activation and dropout are applied after each hidden layer, and a softmax converts the output logits into class probabilities.
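The architecture can be sketched as a NumPy forward pass. This is a hypothetical illustration: the helper names (`init_params`, `forward`, etc.), the He-style random initialization, and the dropout rate `p_drop=0.2` are assumptions and do not come from the repo, which may well use a deep learning framework instead.

```python
import numpy as np

# Layer sizes from the description: 784 -> 512 -> 128 -> 10.
LAYER_SIZES = [784, 512, 128, 10]

def init_params(sizes, rng):
    """Random weights for illustration only (He-style scaling is an assumption)."""
    params = []
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        W = rng.standard_normal((fan_in, fan_out)) * np.sqrt(2.0 / fan_in)
        b = np.zeros(fan_out)
        params.append((W, b))
    return params

def relu(z):
    return np.maximum(z, 0.0)

def dropout(a, p, rng, training):
    """Inverted dropout: zero units with probability p, rescale survivors by 1/(1-p)."""
    if not training or p == 0.0:
        return a
    mask = rng.random(a.shape) >= p
    return a * mask / (1.0 - p)

def softmax(logits):
    # Subtracting the row max avoids overflow and leaves the result unchanged.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def forward(x, params, p_drop=0.2, rng=None, training=False):
    """x has shape (batch, 784); returns class probabilities of shape (batch, 10)."""
    rng = rng if rng is not None else np.random.default_rng()
    a = x
    for W, b in params[:-1]:
        # Hidden layers: affine -> ReLU -> dropout.
        a = dropout(relu(a @ W + b), p_drop, rng, training)
    W_out, b_out = params[-1]
    # Output layer: affine -> softmax over the 10 digit classes.
    return softmax(a @ W_out + b_out)

rng = np.random.default_rng(0)
params = init_params(LAYER_SIZES, rng)
n_params = sum(W.size + b.size for W, b in params)
x = rng.random((4, 784))
probs = forward(x, params)
print(n_params)           # 468874
print(probs.shape)        # (4, 10)
print(probs.sum(axis=1))  # each row sums to 1 (up to float rounding)
```

Because this uses inverted dropout, inference (`training=False`) simply skips the dropout step with no extra rescaling. The parameter count follows from 784·512 + 512 + 512·128 + 128 + 128·10 + 10 = 468,874.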

A Streamlit app provides an interactive demo for testing the model on new user input.