Made in Vancouver, Canada by Picovoice

This is a minimalist and extensible framework for benchmarking different speech-to-text engines. It has been developed and tested on Ubuntu 18.04 (x86_64) using Python 3.6.

This framework has been developed by Picovoice as part of the Cheetah project. Cheetah is Picovoice's streaming speech-to-text engine, specifically designed to run efficiently on the edge (offline). Deep learning has been the main driver in recent improvements in speech recognition but due to stringent compute/storage limitations of IoT platforms, it is mostly beneficial to cloud-based engines. Picovoice's proprietary deep learning technology enables transferring these improvements to IoT platforms with significantly lower CPU/memory footprint.

The LibriSpeech dataset is used for benchmarking. We use its test-clean portion.

This benchmark considers three metrics: word error rate, real-time factor, and model size.

Word error rate (WER) is defined as the ratio of the Levenshtein distance between the words in a reference transcript and the words in the output of the speech-to-text engine to the number of words in the reference transcript.
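The definition above can be sketched in a few lines of Python. This is a minimal illustration, not the code used by this framework: the function names are hypothetical, and the normalization (lowercasing and whitespace splitting) is an assumption.

```python
def edit_distance(ref_words, hyp_words):
    """Word-level Levenshtein distance, keeping only one row of the table."""
    previous = list(range(len(hyp_words) + 1))
    for i, ref_word in enumerate(ref_words, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp_words, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1]


def word_error_rate(reference, hypothesis):
    """WER = word-level edit distance / number of reference words."""
    # Assumption: transcripts are compared case-insensitively, split on whitespace.
    ref_words = reference.lower().split()
    hyp_words = hypothesis.lower().split()
    if not ref_words:
        raise ValueError("reference transcript must contain at least one word")
    return edit_distance(ref_words, hyp_words) / len(ref_words)
```

For a whole dataset, one common choice is to sum the edit distances over all utterances and divide by the total number of reference words, rather than averaging per-utterance rates.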

Real time factor (RTF) is measured as the ratio of CPU (processing) time to the length of the input speech file. A speech-to-text engine with lower RTF is more computationally efficient. We omit this metric for cloud-based engines.

Model size is the aggregate size of the models (acoustic and language), in MB. We omit this metric for cloud-based engines.
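As a rough sketch, the aggregate size can be computed by summing the on-disk sizes of the model files. The helper name `total_size_mb` is hypothetical, and treating 1 MB as 1024 * 1024 bytes is an assumption; the source does not say which convention the reported sizes use.

```python
import os


def total_size_mb(paths):
    """Sum the on-disk sizes of the given files and return the total in MB."""
    # Assumption: 1 MB = 1024 * 1024 bytes.
    total_bytes = sum(os.path.getsize(path) for path in paths)
    return total_bytes / (1024 * 1024)
```

For Cheetah, for example, the function could be called with `resources/cheetah/acoustic_model.pv` and `resources/cheetah/language_model.pv`.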

Amazon Transcribe is a cloud-based speech recognition engine offered by AWS. Find more information here.

PocketSphinx works offline and can run on embedded platforms such as Raspberry Pi.

Google Speech-to-Text is a cloud-based speech recognition engine offered by Google Cloud Platform. Find more information here.

Mozilla DeepSpeech is an open-source implementation of Baidu's DeepSpeech by Mozilla.

Cheetah is a streaming speech-to-text engine developed using Picovoice's proprietary deep learning technology. It works offline and is supported on a growing number of platforms including Android, iOS, and Raspberry Pi.

Leopard is a speech-to-text engine developed using Picovoice's proprietary deep learning technology. It works offline and is supported on a growing number of platforms including Android, iOS, and Raspberry Pi.

Below is information on how to use this framework to benchmark the speech-to-text engines.

- Make sure that you have installed DeepSpeech and PocketSphinx on your machine by following the instructions on their official pages.

- Unpack DeepSpeech's models under resources/deepspeech.

- Download the test-clean portion of LibriSpeech and unpack it under resources/data.

- To run Google Speech-to-Text and Amazon Transcribe, you need to sign up with the respective cloud provider and set up permissions and credentials according to their documentation. Running these services may incur fees.

Word Error Rate can be measured by running the following command from the root of the repository:

    python benchmark.py --engine_type AN_ENGINE_TYPE

The valid options for the engine_type parameter are: AMAZON_TRANSCRIBE, CMU_POCKET_SPHINX, GOOGLE_SPEECH_TO_TEXT, MOZILLA_DEEP_SPEECH, PICOVOICE_CHEETAH, PICOVOICE_CHEETAH_LIBRISPEECH_LM, PICOVOICE_LEOPARD, and PICOVOICE_LEOPARD_LIBRISPEECH_LM.

PICOVOICE_CHEETAH_LIBRISPEECH_LM is the same as PICOVOICE_CHEETAH, except that the language model is trained on LibriSpeech training text, similar to Mozilla DeepSpeech. The same applies to Leopard.
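To benchmark several engines in one go, the command can be assembled programmatically. The sketch below only builds the argument list; the helper name `benchmark_command` is hypothetical, and the list of engine types is copied from the options above.

```python
import sys

# Engine types accepted by the --engine_type parameter, as listed above.
ENGINE_TYPES = [
    "AMAZON_TRANSCRIBE",
    "CMU_POCKET_SPHINX",
    "GOOGLE_SPEECH_TO_TEXT",
    "MOZILLA_DEEP_SPEECH",
    "PICOVOICE_CHEETAH",
    "PICOVOICE_CHEETAH_LIBRISPEECH_LM",
    "PICOVOICE_LEOPARD",
    "PICOVOICE_LEOPARD_LIBRISPEECH_LM",
]


def benchmark_command(engine_type, script="benchmark.py"):
    """Return the argument list that runs the benchmark for one engine type."""
    if engine_type not in ENGINE_TYPES:
        raise ValueError("unknown engine type: " + engine_type)
    # sys.executable reuses the interpreter that is running this script.
    return [sys.executable, script, "--engine_type", engine_type]
```

Each returned list can be passed to `subprocess.run(..., check=True)` from the root of the repository. Looping over only the offline engines avoids cloud fees.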

The time command is used to measure the execution time of each engine on a given audio file. The real time factor is then the CPU time divided by the length of the audio. To measure the execution time for Cheetah, run:

    time resources/cheetah/cheetah_demo \
    resources/cheetah/libpv_cheetah.so \
    resources/cheetah/acoustic_model.pv \
    resources/cheetah/language_model.pv \
    resources/cheetah/cheetah_eval_linux.lic \
    PATH_TO_WAV_FILE

The output should have the following format (values may be different):

    real 0m4.961s
    user 0m4.936s
    sys 0m0.024s

Then, divide the user value by the length of the audio file, in seconds. The user value is the actual CPU time spent in the program.
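The division can be scripted. The sketch below parses the user line from output in the format shown above and reads the audio length from the WAV header using Python's standard `wave` module. The function names are hypothetical, and the parser assumes the `XmY.YYYs` format printed by the shell's `time` command.

```python
import re
import wave


def parse_user_seconds(time_output):
    """Extract the user CPU time, in seconds, from the output of `time`."""
    match = re.search(r"^user\s+(\d+)m([\d.]+)s", time_output, flags=re.MULTILINE)
    if match is None:
        raise ValueError("no 'user' line found in time output")
    minutes, seconds = match.groups()
    return int(minutes) * 60 + float(seconds)


def wav_duration_seconds(path):
    """Return the length of a WAV file in seconds (frames / sample rate)."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getnframes() / wav_file.getframerate()


def real_time_factor(time_output, audio_seconds):
    """RTF = user CPU time / audio length."""
    return parse_user_seconds(time_output) / audio_seconds
```

As an illustration with a hypothetical audio length: if the file were 100 seconds long, the sample output above (user 0m4.936s) would give an RTF of roughly 0.049.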

To measure the execution time for Leopard, run:

    time resources/leopard/leopard_demo \
    resources/leopard/libpv_leopard.so \
    resources/leopard/acoustic_model.pv \
    resources/leopard/language_model.pv \
    resources/leopard/leopard_eval_linux.lic \
    PATH_TO_WAV_FILE

For DeepSpeech:

    time deepspeech \
    --model resources/deepspeech/output_graph.pbmm \
    --lm resources/deepspeech/lm.binary \
    --trie resources/deepspeech/trie \
    --audio PATH_TO_WAV_FILE

Finally, for PocketSphinx:

    time pocketsphinx_continuous -infile PATH_TO_WAV_FILE

The results below were obtained by following the previous steps. Benchmarking was performed on a Linux machine running Ubuntu 18.04 with 64 GB of RAM and an Intel i5-6500 CPU running at 3.2 GHz. WER refers to word error rate and RTF refers to real time factor.

| Engine | WER | RTF (Desktop) | RTF (Raspberry Pi 3) | RTF (Raspberry Pi Zero) | Model Size (Acoustic and Language) |

|---|---|---|---|---|---|

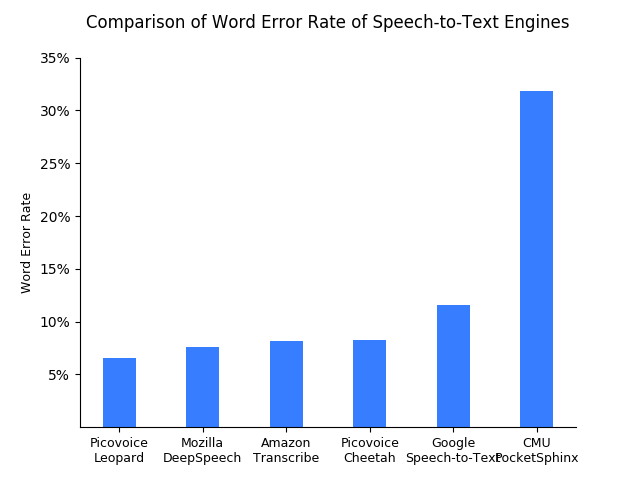

| Amazon Transcribe | 8.21% | N/A | N/A | N/A | N/A |

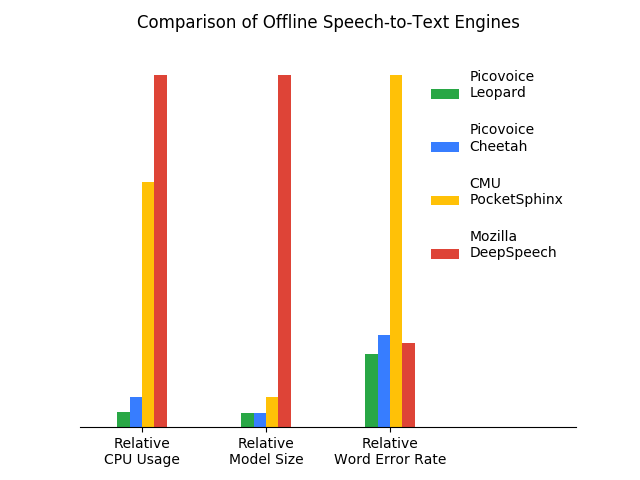

| CMU PocketSphinx (0.1.15) | 31.82% | 0.32 | 1.87 | 2.04 | 97.8 MB |

| Google Speech-to-Text | 11.98% | N/A | N/A | N/A | N/A |

| Mozilla DeepSpeech (0.6.1) | 14.48% | 0.46 | N/A | N/A | 1146.8 MB |

| Picovoice Cheetah (v1.2.0) | 10.49% | 0.04 | 0.62 | 3.11 | 47.9 MB |

| Picovoice Cheetah LibriSpeech LM (v1.2.0) | 8.25% | 0.04 | 0.62 | 3.11 | 45.0 MB |

| Picovoice Leopard (v1.0.0) | 8.34% | 0.02 | 0.55 | 2.55 | 47.9 MB |

| Picovoice Leopard LibriSpeech LM (v1.0.0) | 6.58% | 0.02 | 0.55 | 2.55 | 45.0 MB |

The figure below compares the word error rate of speech-to-text engines. For Picovoice, we included the engine that was trained on LibriSpeech training data similar to Mozilla DeepSpeech.

The figure below compares accuracy and runtime metrics of offline speech-to-text engines. For Picovoice we included the engines that were trained on LibriSpeech training data similar to Mozilla DeepSpeech. Leopard achieves the highest accuracy while being 23X faster and 27X smaller in size compared to second most accurate engine (Mozilla DeepSpeech).

The benchmarking framework is freely available and can be used under the Apache 2.0 license. The provided Cheetah and Leopard resources (binary, model, and license file) are the property of Picovoice. They are only to be used for evaluation purposes and their use in any commercial product is strictly prohibited.

For commercial enquiries contact us via this form.