WildPPS dataset on Google Drive

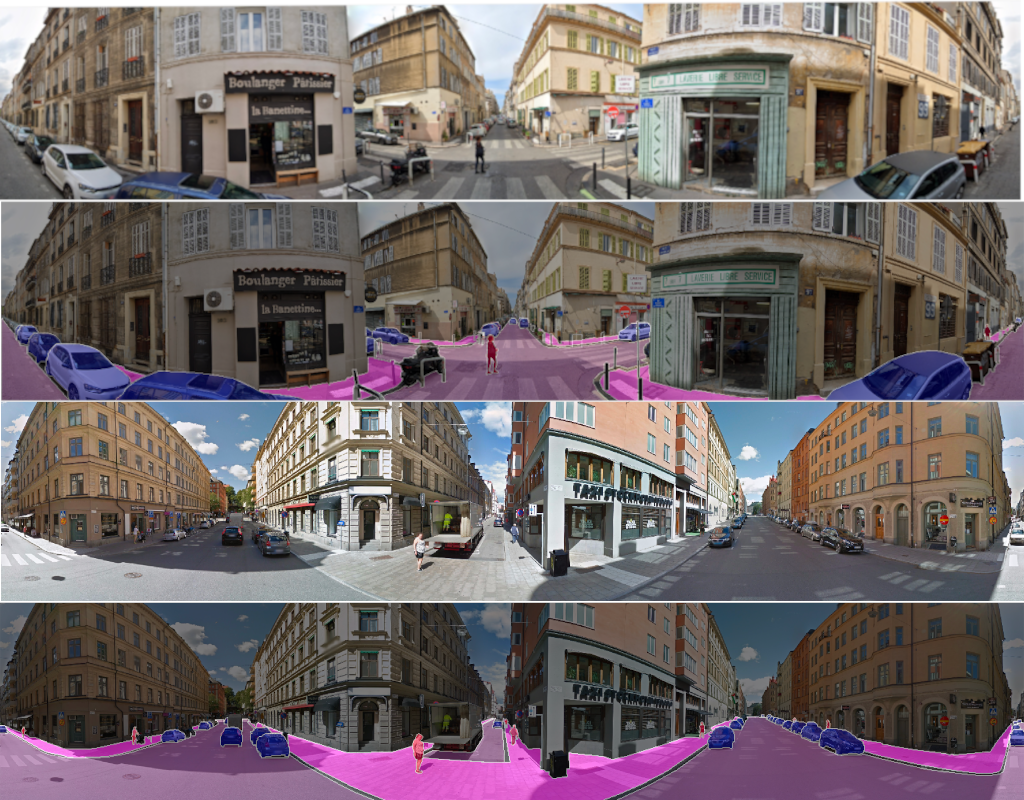

We provide a diverse dataset of 80 panoramic images from 40 different cities, taken from WildPASS, along with panoptic annotations for the most essential street scene classes (Stuff: Road, Sidewalk; Thing: Person, Car) in Cityscapes annotation format.
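As a rough illustration of what the Cityscapes annotation format implies for these four classes, the sketch below decodes a single pixel value from a Cityscapes-style `instanceIds` image. It assumes the WildPPS annotations follow the standard Cityscapes convention (label IDs 7, 8, 24, 26 for road, sidewalk, person, car, with thing instances stored as `label_id * 1000 + instance_index`). The helper name `decode_instance_id` is hypothetical and not part of this repository.

```python
# Standard Cityscapes label IDs for the four WildPPS classes.
# Assumption: the WildPPS annotations reuse these IDs unchanged.
STUFF_IDS = {7: "road", 8: "sidewalk"}
THING_IDS = {24: "person", 26: "car"}


def decode_instance_id(pixel_value):
    """Split a Cityscapes instanceIds pixel value into (class name, instance index).

    The instance index is None for stuff pixels and for thing pixels
    that are not assigned to an individual instance. Classes outside
    the four listed above are reported as "other".
    """
    if pixel_value >= 1000:
        # Thing instance: label_id * 1000 + instance_index
        class_id, instance = divmod(pixel_value, 1000)
        return THING_IDS.get(class_id, "other"), instance
    # Plain label ID without instance information
    name = STUFF_IDS.get(pixel_value) or THING_IDS.get(pixel_value, "other")
    return name, None


print(decode_instance_id(7))      # a road pixel
print(decode_instance_id(26003))  # a pixel of one individual car
```

If your annotation files use a different encoding, adjust the two dictionaries and the factor 1000 accordingly.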

We also provide our proposed robust seamless segmentation model. The weights can be downloaded from Google Drive: Robust Seamless Segmentation Model.

- Follow the installation steps for the seamless segmentation model as described in the official repository

- Download the provided weights

- Download the WildPPS dataset. If you are using a custom dataset, make sure it is in the official Cityscapes data format. If it is not, use cityscapesScripts to convert it to the required format.

- Panoptic predictions can be computed as described in the official seamseg repository. Make sure to use the `--raw` flag to compute quantitative results.

- Use the provided `robust_seamseg.ini` config file and update the path to the weights in line 13.

- Convert the provided WildPPS dataset into the format required by the seamless segmentation model with the help of this script, or download the precomputed WildPPS_seamsegformat dataset. This is only necessary if you use the seamless segmentation models. Other popular libraries such as Detectron2 can work directly with the normal format.

- Use the provided `measure_panoptic.py` script to compute the panoptic score of the predictions. Make sure to have the seamless segmentation model installed. Run: `python measure_panoptic.py --gt <Location of converted WildPPS dataset> --target <Location of seamseg model predictions> --result <Location to write the results to>`
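If you prefer to launch the evaluation from Python, for example as the last step of a larger experiment script, the call to `measure_panoptic.py` can be wrapped as in the minimal sketch below. Only the three flags `--gt`, `--target` and `--result` come from this README. The helper names and the example paths are hypothetical placeholders.

```python
import subprocess
import sys


def build_measure_command(gt_dir, target_dir, result_dir, script="measure_panoptic.py"):
    """Return the argument list for the measure_panoptic.py call from this README."""
    return [
        sys.executable, script,
        "--gt", str(gt_dir),          # converted WildPPS dataset
        "--target", str(target_dir),  # seamseg model predictions
        "--result", str(result_dir),  # where the results are written
    ]


def run_measure(gt_dir, target_dir, result_dir):
    """Run the script; raises CalledProcessError if it exits with an error."""
    subprocess.run(build_measure_command(gt_dir, target_dir, result_dir), check=True)


# Placeholder paths (assumptions); replace them with your own locations.
cmd = build_measure_command("data/WildPPS_seamseg", "out/predictions", "out/scores")
print(" ".join(cmd[1:]))
```

Calling `run_measure(...)` with real paths executes the script using the same Python interpreter, so it should be run from an environment in which the seamless segmentation model is installed.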

If you use our dataset, model, or other code, please consider citing one of the following papers:

Jaus, Alexander, Kailun Yang, and Rainer Stiefelhagen. "Panoramic panoptic segmentation: Towards complete surrounding understanding via unsupervised contrastive learning." 2021 IEEE Intelligent Vehicles Symposium (IV). IEEE, 2021. [PDF]

Jaus, Alexander, Kailun Yang, and Rainer Stiefelhagen. "Panoramic panoptic segmentation: Insights into surrounding parsing for mobile agents via unsupervised contrastive learning." IEEE Transactions on Intelligent Transportation Systems (2023). [PDF]