A scheduler based on SQLAlchemy for Celery.

NOTE: I originally developed this project so that Flask applications using Celery could change the schedule from a database, as django-celery-beat does for Django. I may not have time to develop new features, and none are planned; I will only fix bugs. If you find a bug, issues and PRs are welcome. Thank you for your attention.

- Python 3

- celery >= 4.2.0

- sqlalchemy

First you must install celery and sqlalchemy, and celery should be >=4.2.0.

$ pip install celery

$ pip install sqlalchemy

Install from PyPI:

$ pip install celery-sqlalchemy-scheduler

Install from source by cloning this repository:

$ git clone git@github.com:AngelLiang/celery-sqlalchemy-scheduler.git

$ cd celery-sqlalchemy-scheduler

$ python setup.py install

After you have installed celery_sqlalchemy_scheduler, you can get started with the following steps. This is a demo; you can check the code in the examples directory.

- Start the celery worker:

$ celery -A tasks worker -l info

- Start celery beat with DatabaseScheduler as the scheduler:

$ celery -A tasks beat -S celery_sqlalchemy_scheduler.schedulers:DatabaseScheduler -l info

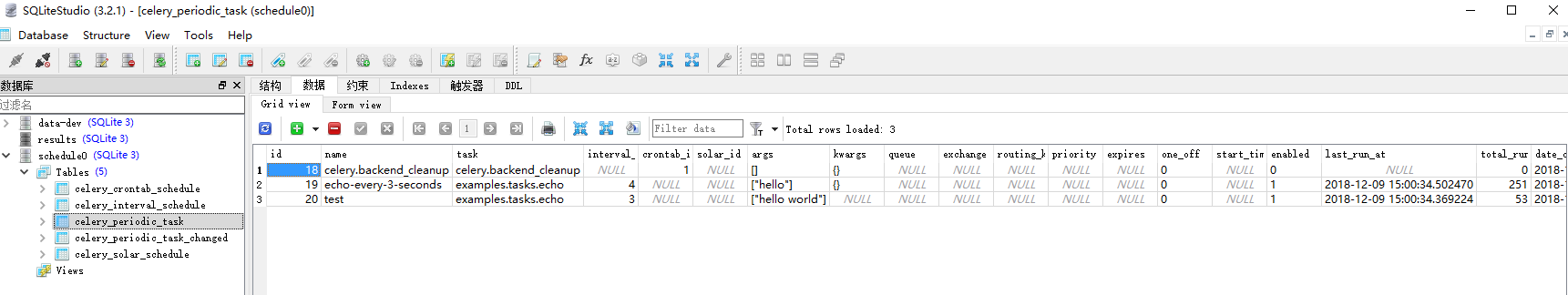

After celery beat starts, it creates a SQLite database (schedule.db) in the current folder by default. You can use SQLiteStudio.exe to inspect it.

To update the schedule, edit the data in schedule.db. celery_sqlalchemy_scheduler does not pick up the change immediately, however: you must also set the last_update field of the first row in the celery_periodic_task_changed table to the current datetime. Celery beat will then reload the schedule at its next wake-up time.
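The manual step of setting last_update can also be scripted. The following is a minimal sketch using only Python's standard sqlite3 module. It assumes the default schedule.db file and a last_update column in celery_periodic_task_changed, as described above. The function name touch_last_update, the use of local time, and the timestamp text format are illustrative assumptions, not part of celery_sqlalchemy_scheduler.

```python
import sqlite3
from datetime import datetime


def touch_last_update(conn):
    """Set last_update in celery_periodic_task_changed to the current time.

    Returns the number of rows that were updated.
    """
    # Assumption: timestamps are stored as 'YYYY-MM-DD HH:MM:SS.ffffff' text,
    # the format SQLAlchemy uses for DateTime columns on SQLite.
    now = datetime.now().strftime('%Y-%m-%d %H:%M:%S.%f')
    cur = conn.execute(
        'UPDATE celery_periodic_task_changed SET last_update = ?', (now,)
    )
    conn.commit()
    return cur.rowcount


if __name__ == '__main__':
    # Stand-in table for demonstration only; against a real deployment you
    # would connect to 'schedule.db' instead of an in-memory database.
    conn = sqlite3.connect(':memory:')
    conn.execute(
        'CREATE TABLE celery_periodic_task_changed '
        '(id INTEGER PRIMARY KEY, last_update TEXT)'
    )
    conn.execute(
        "INSERT INTO celery_periodic_task_changed (id, last_update) "
        "VALUES (1, '2000-01-01 00:00:00.000000')"
    )
    print(touch_last_update(conn))
```

If the function returns 0, the table had no row to update, so beat will not see a change signal.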

You can set the SQLAlchemy database URI when you configure Celery, for example:

from celery import Celery

celery = Celery('tasks')

beat_dburi = 'sqlite:///schedule.db'

celery.conf.update(

    {'beat_dburi': beat_dburi}

)

Also, you can use MySQL or PostgreSQL.

# MySQL: `pip install mysql-connector`

beat_dburi = 'mysql+mysqlconnector://root:root@127.0.0.1:3306/celery-schedule'

# PostgreSQL: `pip install psycopg2`

beat_dburi = 'postgresql+psycopg2://postgres:postgres@127.0.0.1:5432/celery-schedule'

View examples/base/tasks.py for details.
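When the database password contains characters such as `@` or `/`, a hand-written URI like the ones above can be parsed incorrectly. The sketch below shows one way to assemble the URI with percent-encoded credentials using only the standard library. The helper name build_dburi and the sample password are hypothetical and chosen for illustration.

```python
from urllib.parse import quote


def build_dburi(driver, user, password, host, port, database):
    """Assemble a SQLAlchemy-style database URI from its parts."""
    # Percent-encode the credentials so that characters such as '@' or '/'
    # in a password are not mistaken for URI delimiters.
    return '{}://{}:{}@{}:{}/{}'.format(
        driver,
        quote(user, safe=''),
        quote(password, safe=''),
        host,
        port,
        database,
    )


# Hypothetical credentials, for illustration only.
beat_dburi = build_dburi(
    'postgresql+psycopg2', 'postgres', 'p@ss/word', '127.0.0.1', 5432,
    'celery-schedule',
)
print(beat_dburi)
```

The resulting string can be passed as beat_dburi in the same way as the literal URIs.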

For a quickstart walkthrough, see AngelLiang#15 (comment).

To create a periodic task executing at an interval you must first create the interval object:

>>> from celery_sqlalchemy_scheduler.models import PeriodicTask, IntervalSchedule

>>> from celery_sqlalchemy_scheduler.session import SessionManager

>>> from celeryconfig import beat_dburi

>>> session_manager = SessionManager()

>>> engine, Session = session_manager.create_session(beat_dburi)

>>> session = Session()

# executes every 10 seconds.

>>> schedule = session.query(IntervalSchedule).filter_by(every=10, period=IntervalSchedule.SECONDS).first()

>>> if not schedule:

...     schedule = IntervalSchedule(every=10, period=IntervalSchedule.SECONDS)

...     session.add(schedule)

...     session.commit()

That's all the fields you need: a period type and the frequency.

You can choose between a specific set of periods:

- IntervalSchedule.DAYS

- IntervalSchedule.HOURS

- IntervalSchedule.MINUTES

- IntervalSchedule.SECONDS

- IntervalSchedule.MICROSECONDS

Note: If you have multiple periodic tasks executing every 10 seconds, then they should all point to the same schedule object.
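To make the pairing of a frequency and a period type concrete, here is a small standalone sketch that converts such a pair into a datetime.timedelta. It assumes the period constants correspond to the lowercase strings 'days', 'hours', 'minutes', 'seconds' and 'microseconds'. That mapping and the helper name interval_to_timedelta are assumptions for illustration, not the library's documented API.

```python
from datetime import timedelta

# Assumed string values of the IntervalSchedule period constants.
PERIODS = ('days', 'hours', 'minutes', 'seconds', 'microseconds')


def interval_to_timedelta(every, period):
    """Return the time between two runs for an (every, period) pair."""
    if period not in PERIODS:
        raise ValueError('unknown period: {!r}'.format(period))
    # Each period name is also a valid timedelta keyword argument.
    return timedelta(**{period: every})


print(interval_to_timedelta(10, 'seconds').total_seconds())
```

Two tasks with the same (every, period) pair produce the same interval, which is why they can share one schedule object.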

Now that we have defined the schedule object, we can create the periodic task entry:

>>> task = PeriodicTask(

... interval=schedule, # we created this above.

... name='Importing contacts', # simply describes this periodic task.

... task='proj.tasks.import_contacts', # name of task.

... )

>>> session.add(task)

>>> session.commit()

Note that this is a very basic example. You can also specify the arguments and keyword arguments used to execute the task, the queue to send it to, and an expiry time.

Here's an example specifying the arguments; note that JSON serialization is required:

>>> import json

>>> from datetime import datetime, timedelta

>>> periodic_task = PeriodicTask(

... interval=schedule, # we created this above.

... name='Importing contacts', # simply describes this periodic task.

... task='proj.tasks.import_contacts', # name of task.

... args=json.dumps(['arg1', 'arg2']),

... kwargs=json.dumps({

... 'be_careful': True,

... }),

... expires=datetime.utcnow() + timedelta(seconds=30)

... )

>>> session.add(periodic_task)

>>> session.commit()
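The JSON requirement means that args and kwargs are stored as strings, so every value must be JSON-serializable. The following standalone sketch illustrates this with the standard json module. The helper name encode_task_arguments is hypothetical and only wraps the json.dumps calls shown in the example above.

```python
import json
from datetime import datetime


def encode_task_arguments(args=(), kwargs=None):
    """Return (args_json, kwargs_json) strings for a periodic task entry."""
    return json.dumps(list(args)), json.dumps(kwargs or {})


args_json, kwargs_json = encode_task_arguments(
    ['arg1', 'arg2'], {'be_careful': True}
)
print(args_json)    # ["arg1", "arg2"]
print(kwargs_json)  # {"be_careful": true}

# Values that JSON cannot represent, such as datetime objects, are rejected.
try:
    encode_task_arguments([datetime(2020, 1, 1)])
except TypeError as exc:
    print('not serializable:', exc)
```

Values such as dates therefore need to be converted to strings or numbers before they are passed as task arguments.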

A crontab schedule has the fields: minute, hour, day_of_week, day_of_month and month_of_year, so if you want the equivalent of a 30 * * * * (execute at minute 30 of every hour) crontab entry you specify:

>>> from celery_sqlalchemy_scheduler.models import PeriodicTask, CrontabSchedule

>>> schedule = CrontabSchedule(

... minute='30',

... hour='*',

... day_of_week='*',

... day_of_month='*',

... month_of_year='*',

... timezone='UTC',

... )

The crontab schedule is linked to a specific timezone using the 'timezone' input parameter.
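A standard five-field crontab line lists its fields in the order minute, hour, day of month, month, day of week, which differs from the order in which the field names are listed above. The sketch below maps such a line onto the keyword names used by CrontabSchedule. The helper name crontab_fields is hypothetical, and the function only splits the string without validating the individual fields.

```python
def crontab_fields(expression):
    """Split a five-field crontab expression into named fields."""
    parts = expression.split()
    if len(parts) != 5:
        raise ValueError(
            'expected 5 fields, got {}: {!r}'.format(len(parts), expression)
        )
    # Standard crontab order: minute, hour, day of month, month, day of week.
    minute, hour, day_of_month, month_of_year, day_of_week = parts
    return {
        'minute': minute,
        'hour': hour,
        'day_of_week': day_of_week,
        'day_of_month': day_of_month,
        'month_of_year': month_of_year,
    }


print(crontab_fields('30 * * * *'))
```

The returned dictionary could then be unpacked into the constructor, for example CrontabSchedule(timezone='UTC', **crontab_fields('30 * * * *')).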

Then to create a periodic task using this schedule, use the same approach as the interval-based periodic task earlier in this document, but instead of interval=schedule, specify crontab=schedule:

>>> periodic_task = PeriodicTask(

... crontab=schedule,

... name='Importing contacts',

... task='proj.tasks.import_contacts',

... )

>>> session.add(periodic_task)

>>> session.commit()

You can use the enabled flag to temporarily disable a periodic task:

>>> periodic_task.enabled = False

>>> session.add(periodic_task)

>>> session.commit()

The periodic tasks still need 'workers' to execute them. So make sure the default Celery package is installed. (If not installed, please follow the installation instructions here: https://github.com/celery/celery)

Both the worker and beat services need to be running at the same time.

- Start a Celery worker service (specify your project name):

$ celery -A [project-name] worker --loglevel=info

- As a separate process, start the beat service (specify the scheduler):

$ celery -A [project-name] beat -l info --scheduler celery_sqlalchemy_scheduler.schedulers:DatabaseScheduler