Official JAX implementation of the CVPR 2022 paper "MaskGIT: Masked Generative Image Transformer".

[Paper] [Project Page] [Demo Colab]

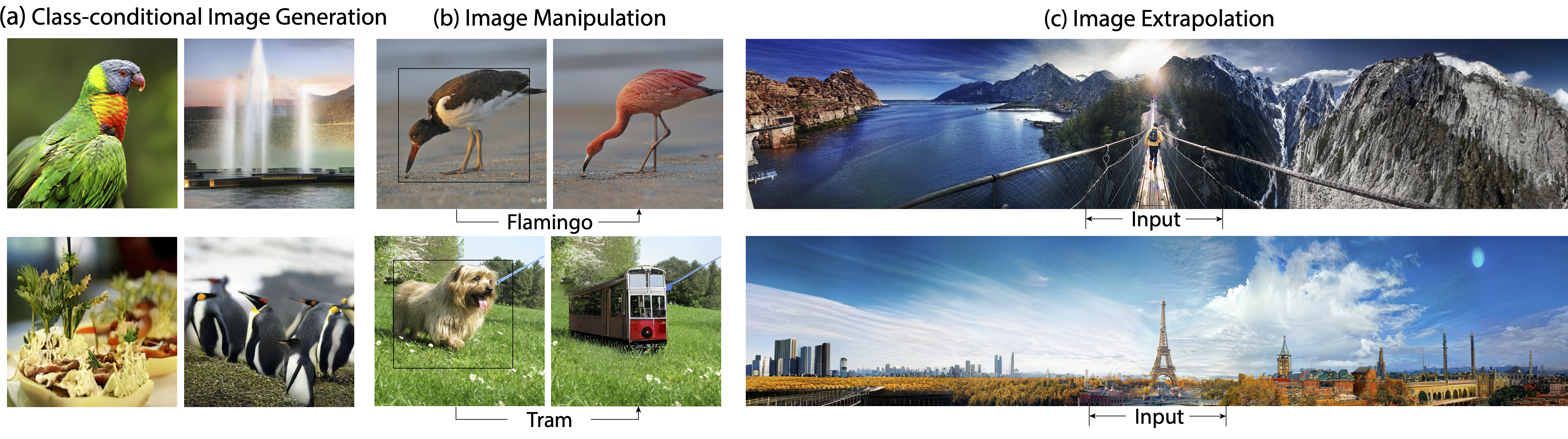

MaskGIT is a novel image synthesis paradigm that uses a bidirectional transformer decoder. During training, MaskGIT learns to predict randomly masked tokens by attending to tokens in all directions. At inference time, the model starts by generating all tokens of an image simultaneously and then iteratively refines the image, conditioning each step on the previous generation.
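The training objective and the iterative decoding loop described above can be sketched in a few lines of JAX. This is a minimal, non-authoritative illustration, not this repository's code: `MASK_ID`, `random_mask`, `masked_ce_loss`, `decode`, `logits_fn` and `num_iter` are hypothetical names, the token sequence is a flat 1-D array, and a cosine masking schedule is assumed. Class conditioning and any noise added to the confidence scores are omitted.

```python
import math

import jax
import jax.numpy as jnp

MASK_ID = -1  # hypothetical sentinel id marking a masked token


def cosine_schedule(ratio):
    """Assumed schedule: fraction of tokens masked at progress `ratio` in [0, 1]."""
    return jnp.cos(ratio * math.pi / 2)


def random_mask(key, tokens):
    """Training-time masking: hide a randomly sized, random subset of tokens."""
    key_ratio, key_noise = jax.random.split(key)
    n = tokens.shape[-1]
    ratio = cosine_schedule(jax.random.uniform(key_ratio))
    num_masked = jnp.maximum(1, jnp.floor(ratio * n)).astype(jnp.int32)
    noise = jax.random.uniform(key_noise, (n,))
    # Mask the `num_masked` positions with the smallest noise values.
    ranks = jnp.argsort(jnp.argsort(noise))
    is_masked = ranks < num_masked
    return jnp.where(is_masked, MASK_ID, tokens), is_masked


def masked_ce_loss(logits, targets, is_masked):
    """Cross-entropy computed only on the masked positions."""
    logp = jax.nn.log_softmax(logits, axis=-1)
    nll = -jnp.take_along_axis(logp, targets[:, None], axis=-1)[:, 0]
    return jnp.sum(nll * is_masked) / jnp.maximum(jnp.sum(is_masked), 1)


def decode(key, logits_fn, seq_len, num_iter=8):
    """Iterative parallel decoding: predict all tokens, keep the confident ones."""
    tokens = jnp.full((seq_len,), MASK_ID, dtype=jnp.int32)
    for t in range(num_iter):
        key, sub = jax.random.split(key)
        logits = logits_fn(tokens)  # shape (seq_len, vocab_size)
        sampled = jax.random.categorical(sub, logits, axis=-1)
        probs = jax.nn.softmax(logits, axis=-1)
        conf = jnp.take_along_axis(probs, sampled[:, None], axis=-1)[:, 0]
        unknown = tokens == MASK_ID
        # Already-decoded tokens are kept and never re-masked.
        sampled = jnp.where(unknown, sampled, tokens)
        conf = jnp.where(unknown, conf, jnp.inf)
        # Number of tokens left masked after this step (clamped at zero).
        num_masked = max(0, int(jnp.floor(cosine_schedule((t + 1) / num_iter) * seq_len)))
        # Re-mask the `num_masked` lowest-confidence predictions.
        ranks = jnp.argsort(jnp.argsort(conf))
        tokens = jnp.where(ranks < num_masked, MASK_ID, sampled)
    return tokens
```

Because the schedule reaches zero at the last step, every position is filled after `num_iter` iterations, typically far fewer model calls than one per token.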

Class-conditional image generation models:

| Dataset | Resolution | Model | Link | FID |
|---|---|---|---|---|
| ImageNet | 256 x 256 | Tokenizer | checkpoint | 2.28 (reconstruction) |
| ImageNet | 512 x 512 | Tokenizer | checkpoint | 1.97 (reconstruction) |
| ImageNet | 256 x 256 | MaskGIT Transformer | checkpoint | 6.06 (generation) |
| ImageNet | 512 x 512 | MaskGIT Transformer | checkpoint | 7.32 (generation) |

You can run these models for class-conditional image generation and editing in the demo Colab.

[Coming Soon]

    @InProceedings{chang2022maskgit,
      title     = {MaskGIT: Masked Generative Image Transformer},
      author    = {Huiwen Chang and Han Zhang and Lu Jiang and Ce Liu and William T. Freeman},
      booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
      month     = {June},
      year      = {2022}
    }

This is not an officially supported Google product.