Our submission to the Helsinki Deblur Challenge 2021 (HDC2021).

Install the package using:

pip install -e .

The neural network weights can be downloaded from: https://seafile.zfn.uni-bremen.de/d/c19be19f1f134c0d8ad2/. Afterwards, move all weights to deblurrer/, so that the folder structure is deblurrer/hdc2021_weights/step_i for each step i = 0, ..., 19.
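To check the folder layout before running the prediction, the following minimal sketch lists which of the expected step_0, ..., step_19 folders are missing. The helper name missing_weight_dirs is hypothetical and not part of the package. It only checks that the folders exist, not their contents, and it assumes it is run from the repository root.

```python
from pathlib import Path
from typing import List


def missing_weight_dirs(root: str = "deblurrer/hdc2021_weights", n_steps: int = 20) -> List[str]:
    """Return the names of the expected step_i folders (i = 0, ..., n_steps - 1) missing under root."""
    base = Path(root)
    return [f"step_{i}" for i in range(n_steps) if not (base / f"step_{i}").is_dir()]


missing = missing_weight_dirs()
print("all weight folders found" if not missing else f"missing: {', '.join(missing)}")
```

An empty list means that all 20 step folders are in place.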

Prediction on images in a folder can be done using:

python deblurrer/main.py path-to-input-files path-to-output-files step

where step is the blurring step (0, ..., 19) of the images in the input folder.

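We want to reconstruct the original image $x^\dagger$ from blurred and noisy data $y^\delta$. The forward operator $A$ maps a sharp image to its blurred observation. This corresponds to an inverse problem:

$$ y^\delta = A x^\dagger + \eta, $$

where $\eta$ denotes the measurement noise. Our goal is to define a reconstructor $R_\theta$ which produces an unblurred image from the blurred measurement $y^\delta$:

$$ R_\theta(y^\delta) \approx x^\dagger. $$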

Our approach is inspired by the recent trend of combining physical forward models with learned iterative schemes (Adler et al.). There is also experimental evidence that this approach can work well even with an approximate forward model (Hauptmann et al.). The approach has two steps:

- First, we define an approximate forward model for the blurring process.

- This approximate model is integrated into a learned gradient descent scheme.

This procedure is repeated for every blurring step: the same training setup and neural network architecture were used for all steps, and the learned gradient scheme was retrained for each step.

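For a fixed blurring level, the out-of-focus blur can be modeled as a linear, position-invariant convolution with a circular point-spread function:

$$ A x = k_r * x, \qquad k_r(u) = \begin{cases} \dfrac{1}{\pi r^2}, & \|u\|_2 \le r, \\ 0, & \text{otherwise}, \end{cases} $$

where the radius $r$ of the point-spread function depends on the blurring level.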

This model works well for small blurring levels. For higher blurring levels, the average error between the approximate model and the real measurements increases.
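A minimal NumPy sketch of this approximate forward model follows. It assumes a normalized disk kernel with a radius given in pixels and zero padding at the image boundary. The function names disk_psf and blur are illustrative and not taken from the package. The radius belonging to each blurring step would still have to be estimated from the challenge data.

```python
import numpy as np


def disk_psf(radius: float) -> np.ndarray:
    """Normalized circular point-spread function (disk of the given radius in pixels)."""
    r = int(np.ceil(radius))
    yy, xx = np.mgrid[-r:r + 1, -r:r + 1]
    kernel = (xx ** 2 + yy ** 2 <= radius ** 2).astype(np.float64)
    return kernel / kernel.sum()


def blur(image: np.ndarray, radius: float) -> np.ndarray:
    """Approximate forward model A x = k_r * x with zero padding ('same' output size)."""
    k = disk_psf(radius)
    kh, kw = k.shape
    h, w = image.shape
    # Size of the full linear convolution; padding to it avoids FFT wrap-around
    fh, fw = h + kh - 1, w + kw - 1
    spectrum = np.fft.rfft2(image, s=(fh, fw)) * np.fft.rfft2(k, s=(fh, fw))
    full = np.fft.irfft2(spectrum, s=(fh, fw))
    # Crop the centered part so that the output is aligned with the input
    top, left = kh // 2, kw // 2
    return full[top:top + h, left:left + w]


sharp = np.zeros((64, 96))
sharp[20:44, 30:66] = 1.0  # a bright rectangle as a stand-in for text
blurred = blur(sharp, radius=5.0)
```

Because the disk kernel is symmetric, this zero-padded operator is self-adjoint, so the same function can also be used to evaluate $A^\top$ in gradient computations.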

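Variational methods try to recover the original image as the minimizer of an objective function

$$ \hat{x} = \arg\min_{x} \; \mathcal{D}(A x, y^\delta) + \alpha \mathcal{R}(x), $$

where $\mathcal{D}$ is a data fidelity term and $\mathcal{R}$ is a regularizer weighted by $\alpha > 0$. In many examples, the regularizer is convex but not differentiable (e.g. TV or the $\ell_1$ norm). In these cases, proximal gradient descent can be used to obtain the minimizer:

$$ x_{k+1} = \operatorname{prox}_{\gamma \alpha \mathcal{R}}\big(x_k - \gamma \nabla_x \mathcal{D}(A x_k, y^\delta)\big), $$

with a step size $\gamma > 0$. The idea of learned iterative methods is to unroll this iteration for a fixed number of steps $K$ and replace the proximal mapping with a convolutional neural network:

$$ x_{k+1} = \Lambda_{\theta_k}\big(x_k - \gamma \nabla_x \mathcal{D}(A x_k, y^\delta)\big), \qquad k = 0, \dots, K-1. $$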

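We use the $K$-th iterate as the final reconstruction, $R_\theta(y^\delta) = x_K$. The mappings $\Lambda_{\theta_k}$ are implemented as convolutional UNets. The parameter $\theta$ includes the parameters of all subnetworks, i.e. $\theta = (\theta_0, \dots, \theta_{K-1})$. We split the provided data into training, validation and test sets. We train our learned iterative method by minimizing the mean squared error between the reconstruction and the ground truth on the training set:

$$ \min_\theta \; \frac{1}{N} \sum_{i=1}^{N} \big\| R_\theta(y_i^\delta) - x_i^\dagger \big\|_2^2, $$

where $(y_i^\delta, x_i^\dagger)$, $i = 1, \dots, N$, are the blurred measurements and sharp images of the training set.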

Here, the mean squared error serves as a proxy for the actual goal: high character recognition (OCR) accuracy.
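The unrolled scheme and its training objective can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions, not the submitted implementation. Each $\Lambda_{\theta_k}$ is a tiny three-layer CNN with a residual connection instead of a UNet. The data term is assumed to be $\mathcal{D}(Ax, y^\delta) = \tfrac12 \|Ax - y^\delta\|_2^2$, so its gradient is $A^\top(Ax - y^\delta)$. The iteration is assumed to start at $x_0 = y^\delta$. All class and function names are hypothetical.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F


def disk_kernel(radius: float) -> torch.Tensor:
    """Normalized circular PSF as a conv2d weight of shape (1, 1, 2r+1, 2r+1)."""
    r = math.ceil(radius)
    coords = torch.arange(-r, r + 1, dtype=torch.float32)
    mask = (coords[:, None] ** 2 + coords[None, :] ** 2 <= radius ** 2).float()
    return (mask / mask.sum())[None, None]


def blur(x: torch.Tensor, kernel: torch.Tensor) -> torch.Tensor:
    """A x: zero-padded convolution; the symmetric kernel makes A self-adjoint."""
    return F.conv2d(x, kernel, padding=kernel.shape[-1] // 2)


class LearnedProximalGradient(nn.Module):
    """Unrolled proximal gradient descent with one small CNN per iteration (hypothetical)."""

    def __init__(self, kernel: torch.Tensor, n_iter: int = 5, step_size: float = 1.0, width: int = 16):
        super().__init__()
        self.register_buffer("kernel", kernel)
        self.step_size = step_size
        # theta = (theta_0, ..., theta_{K-1}): separate weights for every iteration
        self.prox_nets = nn.ModuleList([
            nn.Sequential(
                nn.Conv2d(1, width, 3, padding=1), nn.ReLU(),
                nn.Conv2d(width, width, 3, padding=1), nn.ReLU(),
                nn.Conv2d(width, 1, 3, padding=1),
            )
            for _ in range(n_iter)
        ])

    def forward(self, y: torch.Tensor) -> torch.Tensor:
        x = y  # assumed initialization x_0 = y
        for net in self.prox_nets:
            # Gradient of 0.5 * ||Ax - y||^2 is A^T (Ax - y), and A^T = A here
            grad = blur(blur(x, self.kernel) - y, self.kernel)
            z = x - self.step_size * grad
            x = z + net(z)  # learned replacement for the proximal mapping
        return x  # x_K is the reconstruction R_theta(y)


def train_step(model: nn.Module, optimizer: torch.optim.Optimizer, y: torch.Tensor, x_true: torch.Tensor) -> float:
    """One optimizer step on the mean squared error between R_theta(y) and x_true."""
    optimizer.zero_grad()
    loss = F.mse_loss(model(y), x_true)
    loss.backward()
    optimizer.step()
    return loss.item()


torch.manual_seed(0)
kernel = disk_kernel(3.0)
model = LearnedProximalGradient(kernel, n_iter=3)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
x_true = torch.rand(4, 1, 32, 48)  # stand-in for sharp training images
y = blur(x_true, kernel)           # simulated blurred measurements
loss = train_step(model, optimizer, y, x_true)
```

Since the disk kernel is non-negative and sums to one, $\|A\| \le 1$, so the step size $\gamma = 1$ used here satisfies the usual condition $\gamma \le 1/\|A\|^2$ of the classical iteration.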

We also ran our own sanity check on images from the STL10 dataset. STL10 is an image recognition dataset consisting of natural images from 10 different classes. We used our approximate forward model to simulate a blurred version of STL10 and evaluated our learned iterative model on these blurred natural images.

Figure: The sanity check fails for the initial model.

It was clear that our initial model would not pass the sanity check.

Because of the sanity check, we face a kind of constrained optimization problem: maximize the OCR accuracy under the constraint that the method has a slight deblurring effect on natural images. To tackle this problem, we trained on a combination of the provided challenge data and STL10 images. At every training step, we checked whether the PSNR between the reconstruction $R_\theta(y^\delta)$ and the unblurred image $x^\dagger$ was lower than the PSNR between the blurred image $y^\delta$ and the unblurred image $x^\dagger$. If the PSNR of our reconstruction was more than 2 dB lower than that of the blurred image, we added one STL10 image to the current training batch.
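The 2 dB rule can be written as a small predicate. This sketch assumes images scaled to [0, 1], so the PSNR peak value is 1. The function names are hypothetical, and how the STL10 image is then appended to the batch is left out.

```python
import numpy as np


def psnr(estimate: np.ndarray, reference: np.ndarray, data_range: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB."""
    mse = float(np.mean((estimate - reference) ** 2))
    if mse == 0.0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))


def needs_stl10_image(reconstruction: np.ndarray, blurred: np.ndarray, target: np.ndarray,
                      margin_db: float = 2.0, data_range: float = 1.0) -> bool:
    """True if the reconstruction's PSNR is more than margin_db below that of the blurred input."""
    psnr_rec = psnr(reconstruction, target, data_range)
    psnr_blur = psnr(blurred, target, data_range)
    return bool(psnr_rec < psnr_blur - margin_db)
```

For example, if the blurred image reaches 20 dB, an STL10 image is added whenever the reconstruction falls below 18 dB.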

Due to the large image size of 1460x2360, the use of large deep learning models is limited by memory. There are ways around this, e.g. invertible neural networks or gradient checkpointing. We tackle this problem by simply downsampling the original images to 181x294 (roughly a factor of 8). This was motivated by the fact that the target area on the e-ink display has a resolution of 200x300. Downsampling was performed using an average filter. All learned iterative networks were trained on these downsampled images. The final upsampling is performed by a small convolutional neural network consisting of bilinear upsampling layers and a few convolutional layers.
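A minimal PyTorch sketch of this down- and upsampling path, assuming single-channel images of size 1460x2360. Adaptive average pooling is used here as one way to realize an average filter with exactly 181x294 outputs; the exact filter of the submission may differ. The layer layout and widths of the hypothetical Upsampler class are illustrative only, and the network is untrained in this example.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def downsample(image: torch.Tensor, size=(181, 294)) -> torch.Tensor:
    """Average-filter downsampling: each output pixel is the mean of an (about 8x8) input region."""
    return F.adaptive_avg_pool2d(image, size)


class Upsampler(nn.Module):
    """Small CNN: bilinear upsampling layers interleaved with a few convolutions (hypothetical layout)."""

    def __init__(self, out_size=(1460, 2360), width: int = 8):
        super().__init__()
        self.out_size = out_size
        self.conv1 = nn.Conv2d(1, width, 3, padding=1)
        self.conv2 = nn.Conv2d(width, width, 3, padding=1)
        self.conv3 = nn.Conv2d(width, 1, 3, padding=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        h, w = x.shape[-2:]
        # First bilinear stage: 2x upsampling
        x = F.interpolate(x, size=(2 * h, 2 * w), mode="bilinear", align_corners=False)
        x = F.relu(self.conv1(x))
        # Second bilinear stage: straight to the full resolution (roughly another 4x)
        x = F.interpolate(x, size=self.out_size, mode="bilinear", align_corners=False)
        x = F.relu(self.conv2(x))
        return self.conv3(x)


with torch.no_grad():
    full_res = torch.rand(1, 1, 1460, 2360)  # stand-in for a blurred photograph
    low_res = downsample(full_res)           # input size for the learned iterative network
    restored = Upsampler()(low_res)          # back to 1460x2360
```

In this sketch, the expensive learned iterations only ever see 181x294 images, and full-resolution convolutions appear only in the last two layers of the upsampler.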

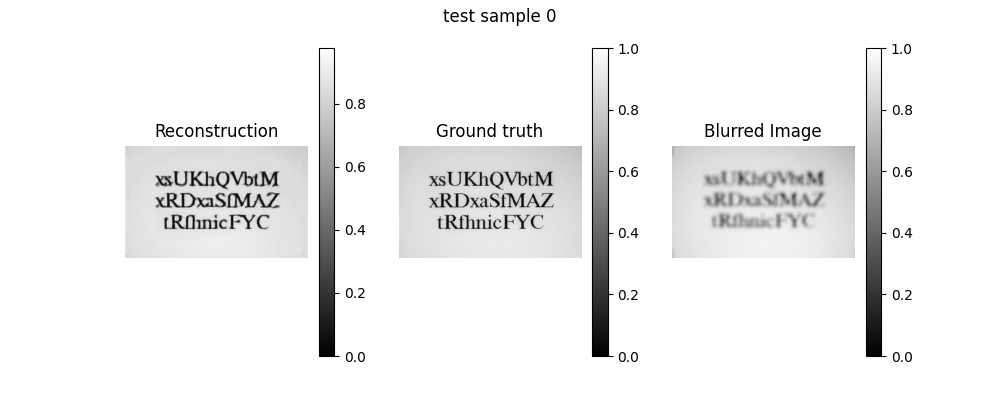

Figure: Example for step 5 (test data).

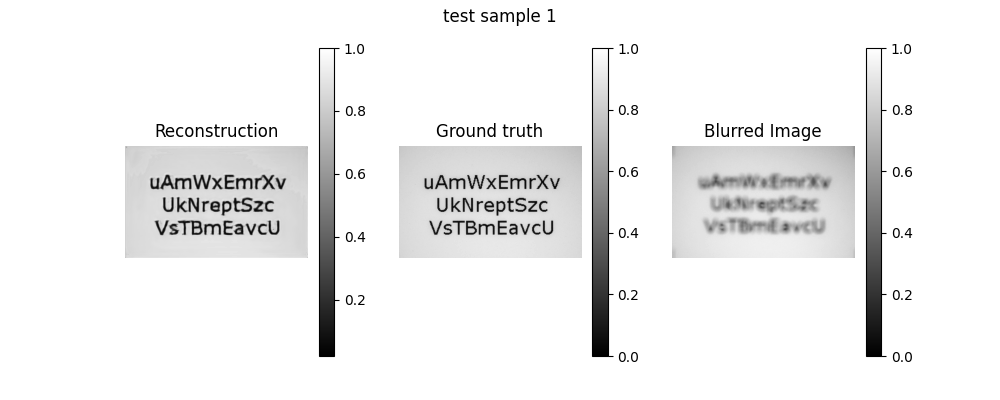

Figure: Example for step 6 (test data).

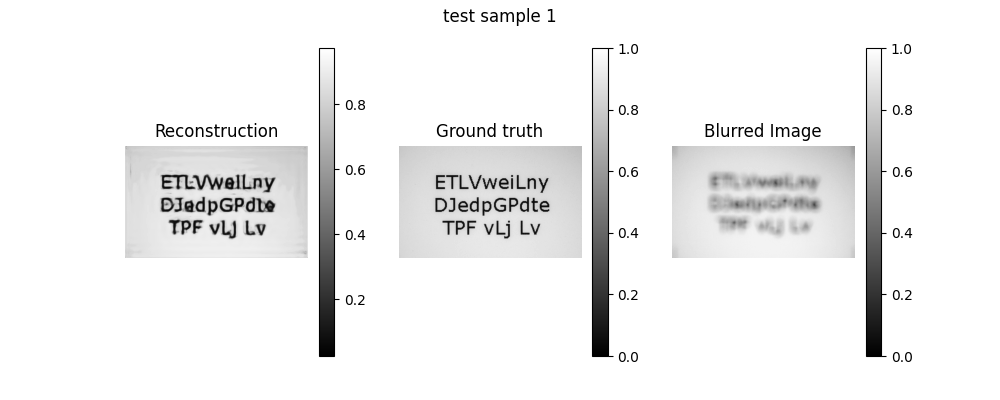

Figure: Example for step 8 (test data).

Dependencies:

- numpy = 1.20.3

- pytorch = 1.9.0

- pytorch-lightning = 1.3.8

- torchvision = 0.10.0

Team University of Bremen, Center of Industrial Mathematics (ZeTeM):

- Alexander Denker, Maximilian Schmidt, Johannes Leuschner, Sören Dittmer, Judith Nickel, Clemens Arndt, Gael Rigaud, Richard Schmähl