PyCameraServer is a Flask web editor for video, images, YouTube and IP camera frames, with a live streaming preview. It supports object recognition, extraction, segmentation, resolution upscaling, styling, colorization and frame interpolation, using OpenCV with neural network models: YOLO, Mask R-CNN, Caffe, DAIN, EDSR, LapSRN, FSRCNN, ESRGAN.

[Compiling OpenCV with Nvidia GPU support]

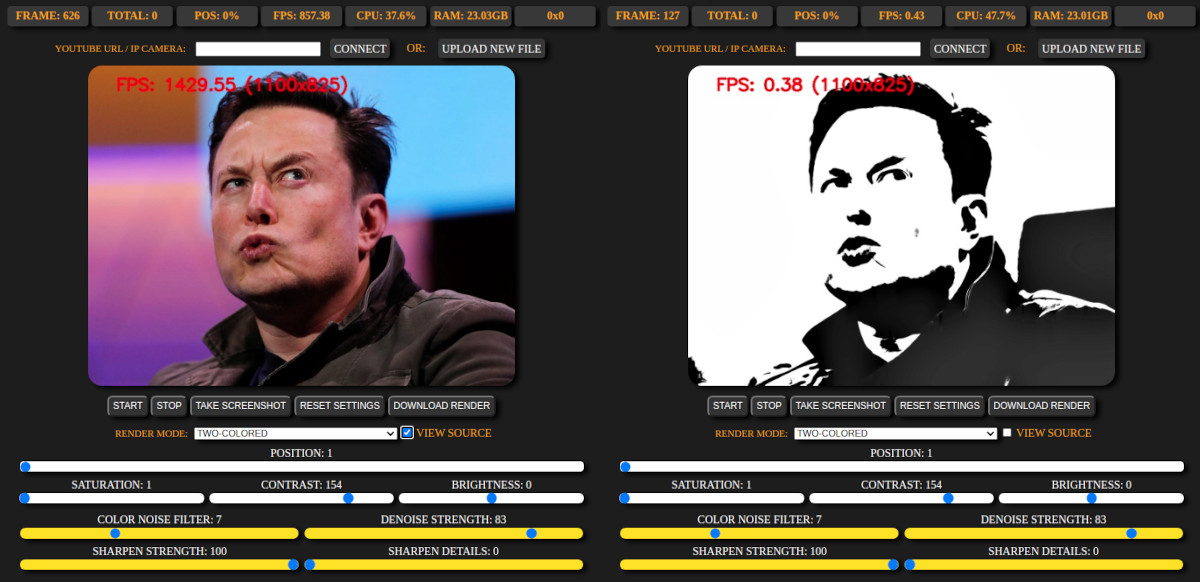

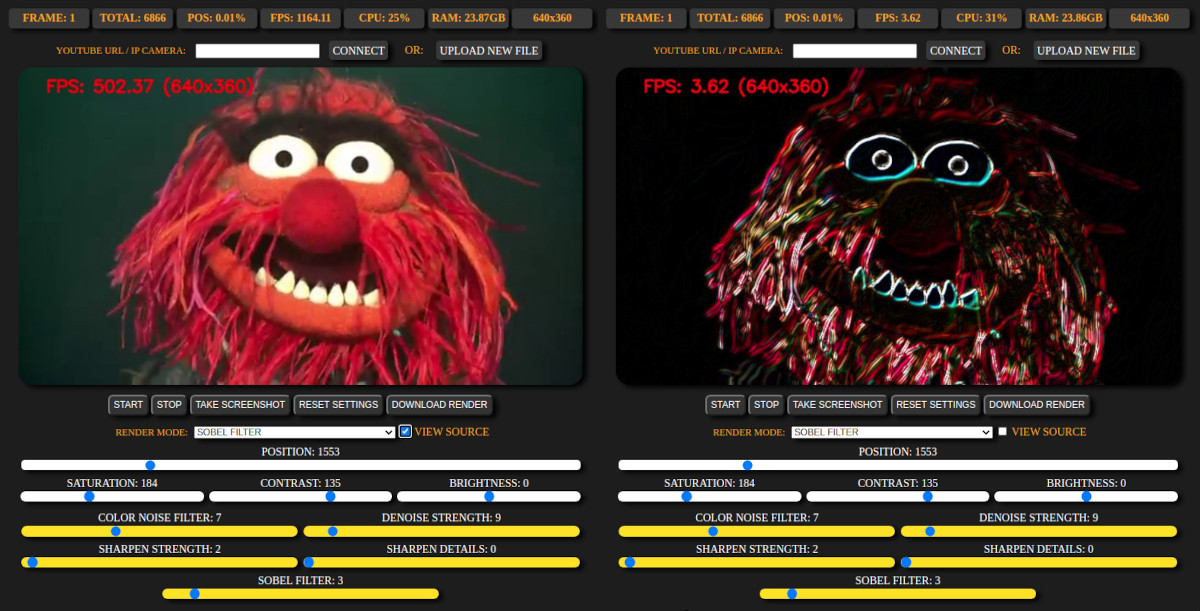

Editor Demo #1 (other modes)

YOLO v3 object detector: Website | GitHub | Paper

Mask R-CNN segmentation: GitHub | Paper

Colorization with Caffe: GitHub | Paper

Enhanced SRGAN (ESRGAN): GitHub | Paper | Pre-trained models

Super-resolution models: EDSR - Paper | LapSRN - Paper | FSRCNN - Paper

Depth-Aware Video Frame Interpolation (DAIN): GitHub | Paper

- Image files: png, jpg, gif

- Video files: mp4, avi, m4v, webm, mkv

- YouTube: URL (video, stream)

- IP Cameras: URL for MJPEG camera without login
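The four source types above map onto the source modes accepted by the editor ("image", "video", "youtube", "ipcam"). The sketch below shows one way such a mapping could be derived from a source string. The function name `guess_source_mode` and the host list are hypothetical and not taken from the project; the file extensions come from the list above.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse

# Extensions taken from the supported-sources list above.
IMAGE_EXTENSIONS = {".png", ".jpg", ".gif"}
VIDEO_EXTENSIONS = {".mp4", ".avi", ".m4v", ".webm", ".mkv"}
# Assumption: these hosts cover the YouTube URLs users are likely to paste.
YOUTUBE_HOSTS = {"youtu.be", "youtube.com", "www.youtube.com", "m.youtube.com"}


def guess_source_mode(source: str) -> str:
    """Return 'image', 'video', 'youtube' or 'ipcam' for a source string."""
    parsed = urlparse(source)
    if parsed.scheme in ("http", "https"):
        host = (parsed.hostname or "").lower()
        # Assumption: any non-YouTube HTTP(S) URL is an MJPEG camera stream.
        return "youtube" if host in YOUTUBE_HOSTS else "ipcam"
    suffix = PurePosixPath(source).suffix.lower()
    if suffix in IMAGE_EXTENSIONS:
        return "image"
    if suffix in VIDEO_EXTENSIONS:
        return "video"
    raise ValueError(f"Unsupported source: {source!r}")
```

For example, `guess_source_mode("my_video.avi")` yields `"video"`, while a `https://youtu.be/...` link yields `"youtube"`.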

Rendering modes [View gallery]

Using neural networks:

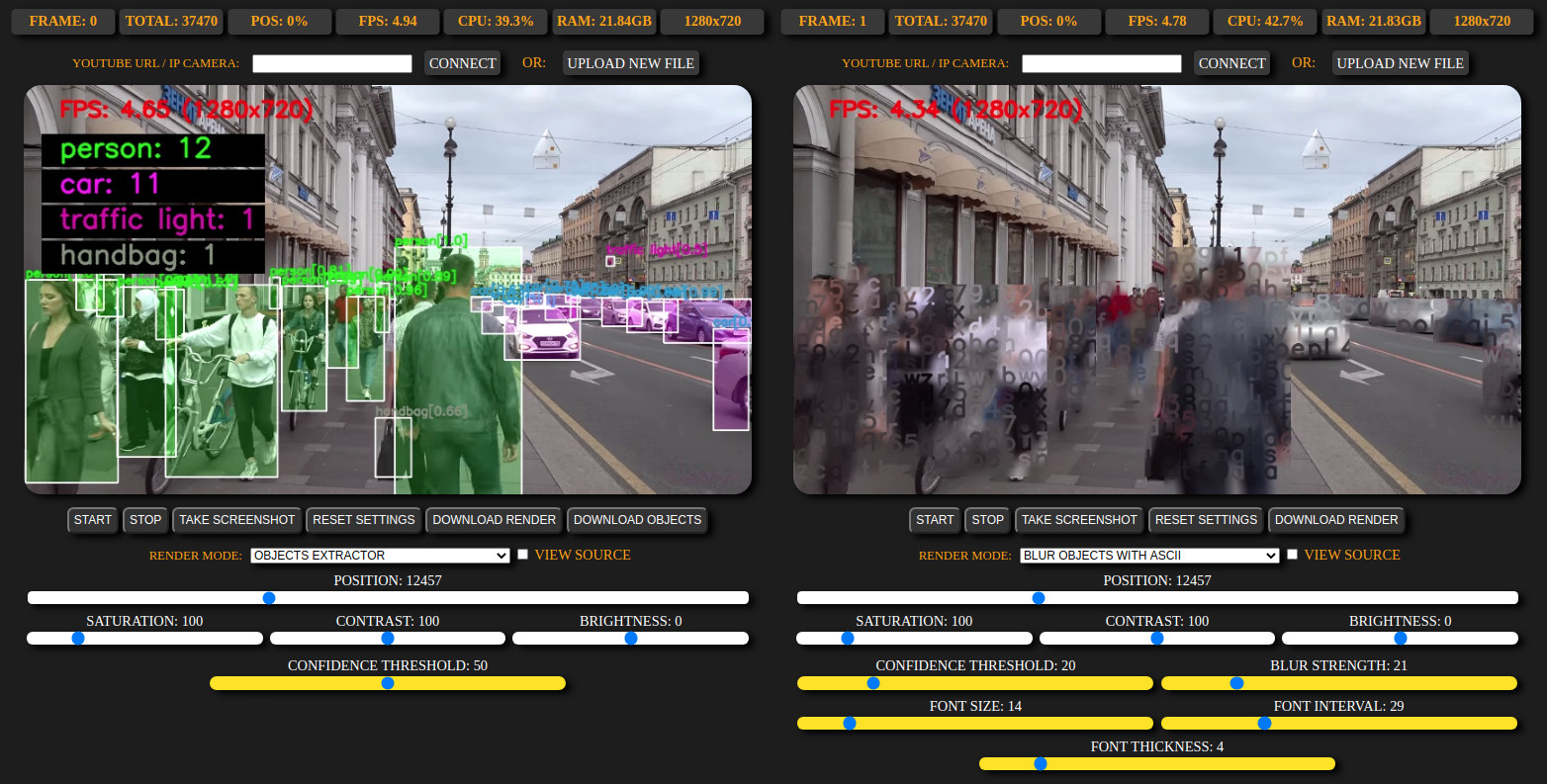

- (GPU/CPU) YOLO: Extract objects in frames (download zip with images), draw detected boxes and labels

- (GPU/CPU) YOLO: Blur objects with ASCII chars

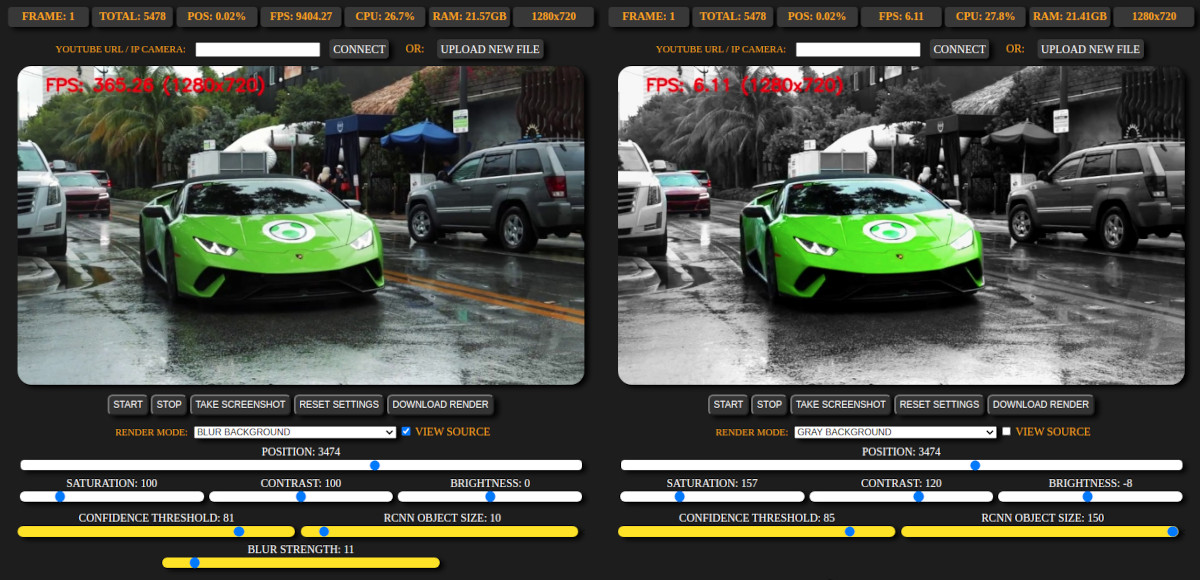

- (GPU/CPU) Mask R-CNN: Convert background to gray

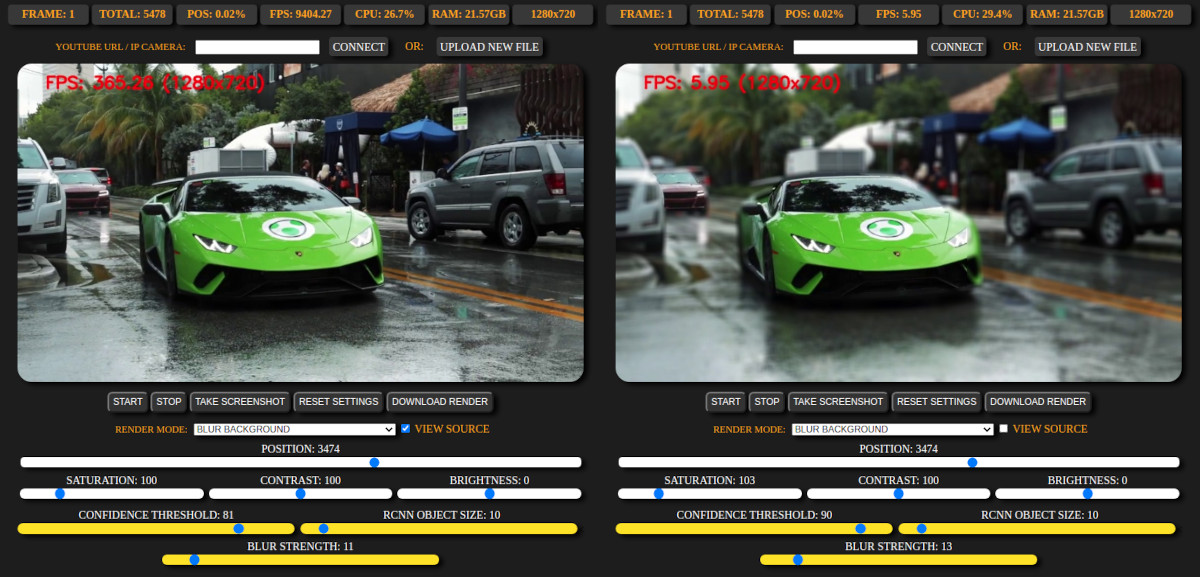

- (GPU/CPU) Mask R-CNN: Blur background

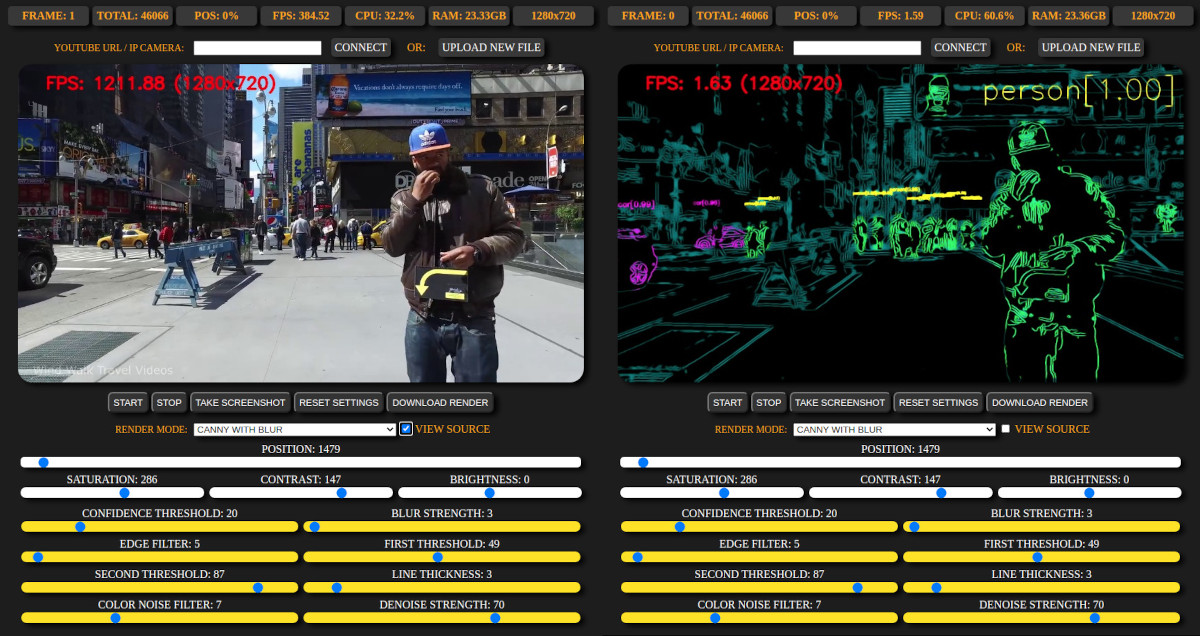

- (GPU/CPU) Mask R-CNN: Canny edge detection with masks

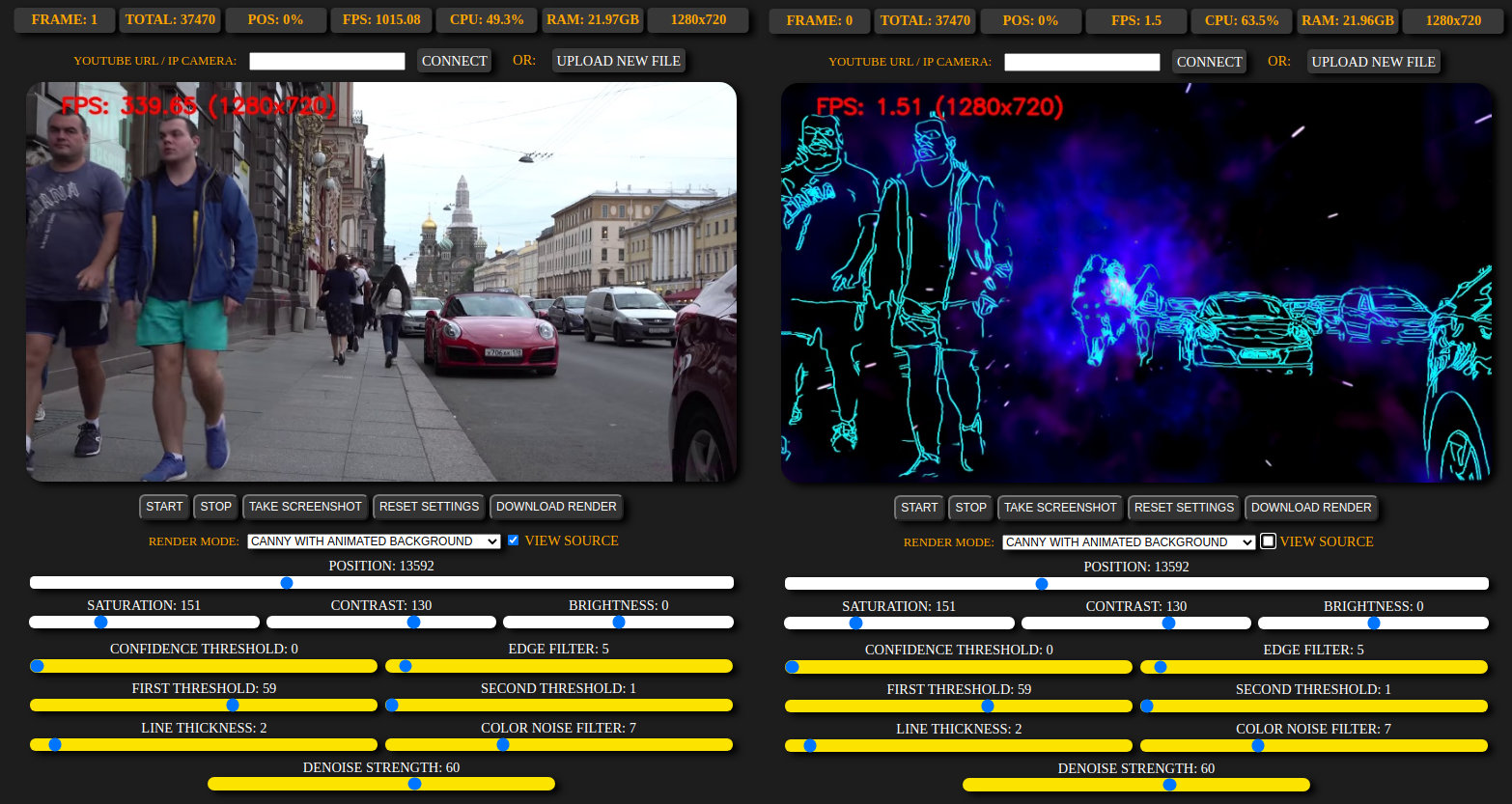

- (GPU/CPU) Mask R-CNN: Replace background with video animation

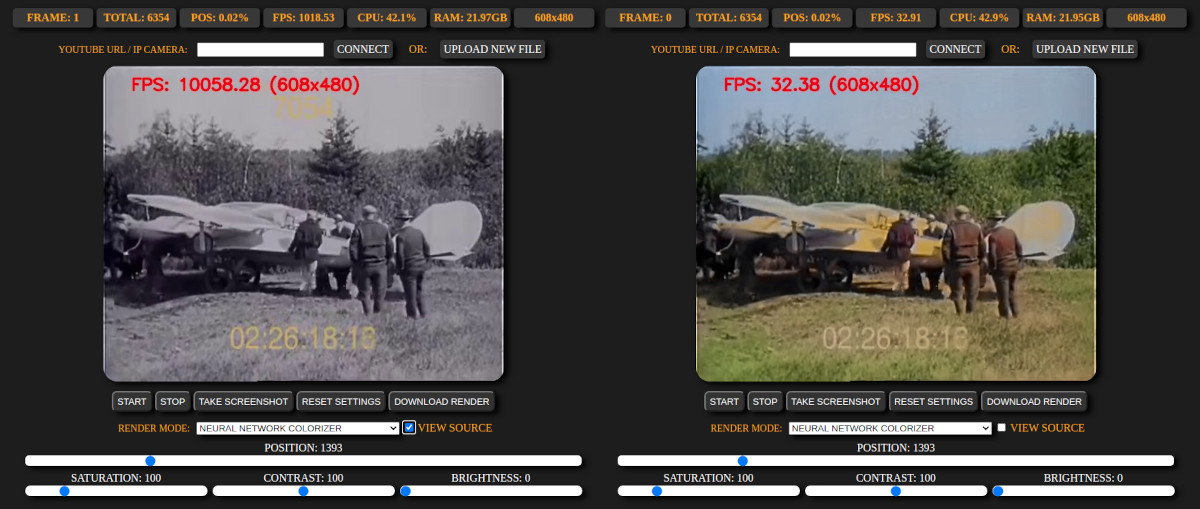

- (GPU) Caffe: colorize grayscale with neural network

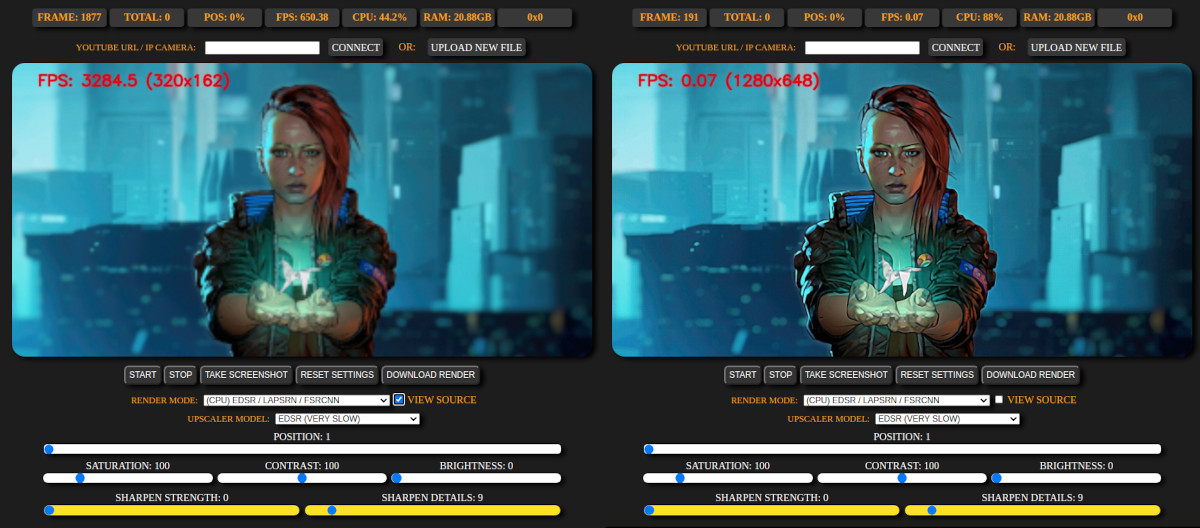

- (CPU) EDSR / LapSRN / FSRCNN: x4 resolution upscale

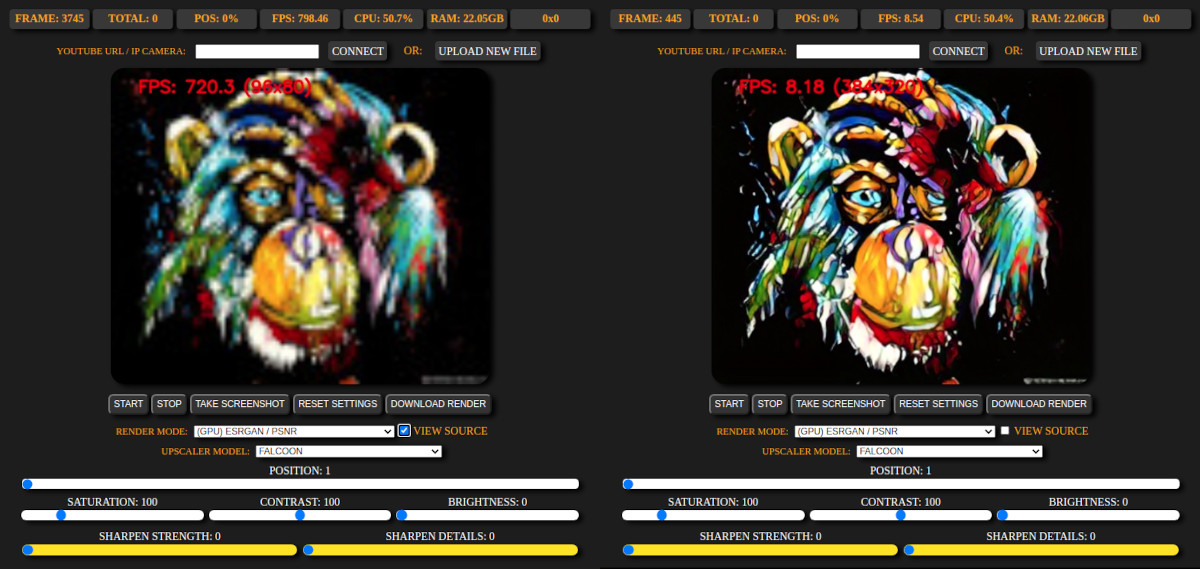

- (GPU) ESRGAN / RRDB_PSNR: x4 resolution upscale (models included: FALCOON, MANGA109, ESRGAN/RRDB_PSNR interpolation 0.2, 0.4, 0.6, 0.8)

- (GPU) Depth-Aware Video Frame Interpolation: create smooth video by creating new frames (boost x2, x4, x8 fps)
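To make the x2 / x4 / x8 boost concrete, the sketch below works out how many frames an interpolated clip would contain and at which fractional positions the new frames sit between each original pair. It assumes evenly spaced intermediate frames and that the last original frame is kept; the helper name `interpolation_plan` is hypothetical, and the project's own handling may differ.

```python
from typing import List, Tuple


def interpolation_plan(frame_count: int, factor: int) -> Tuple[int, List[float]]:
    """Return (output_frame_count, time_steps) for an fps boost of `factor`."""
    if frame_count < 2:
        raise ValueError("need at least two frames to interpolate between")
    if factor < 2:
        raise ValueError("factor must be 2 or greater")
    # Fractional positions of the new frames between two original frames.
    time_steps = [k / factor for k in range(1, factor)]
    # Each of the (frame_count - 1) gaps gains (factor - 1) new frames.
    output_frames = (frame_count - 1) * factor + 1
    return output_frames, time_steps


frames, steps = interpolation_plan(frame_count=100, factor=4)
print(frames, steps)
```

Under these assumptions, a 100-frame clip boosted x4 ends up with 397 frames, with new frames at 0.25, 0.5 and 0.75 of the way between each original pair.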

Without neural networks:

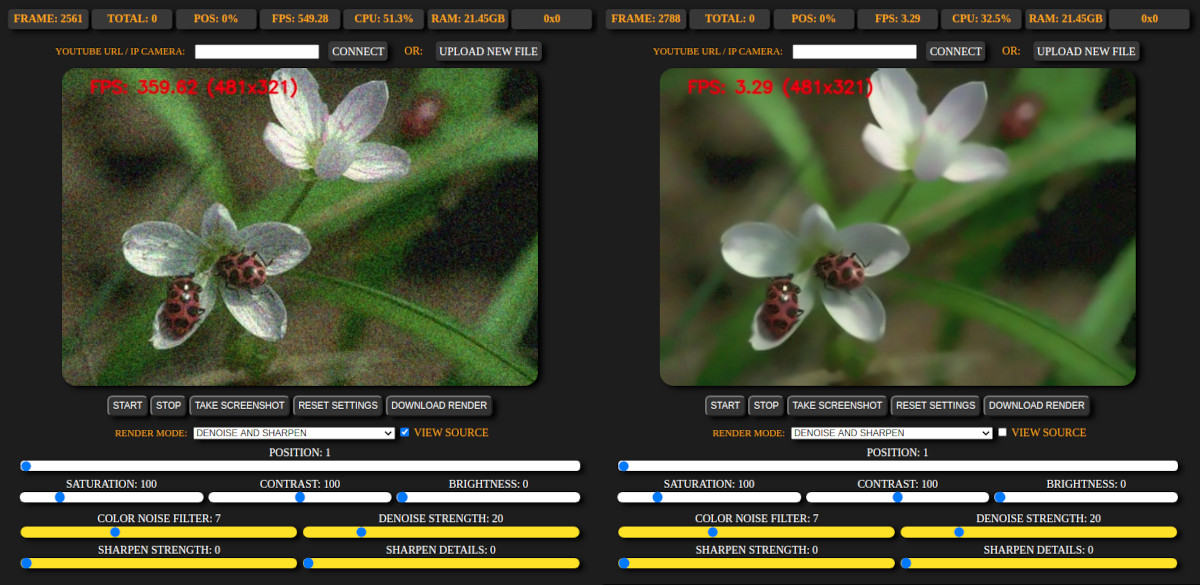

- (CPU) Denoiser with two sharpening methods

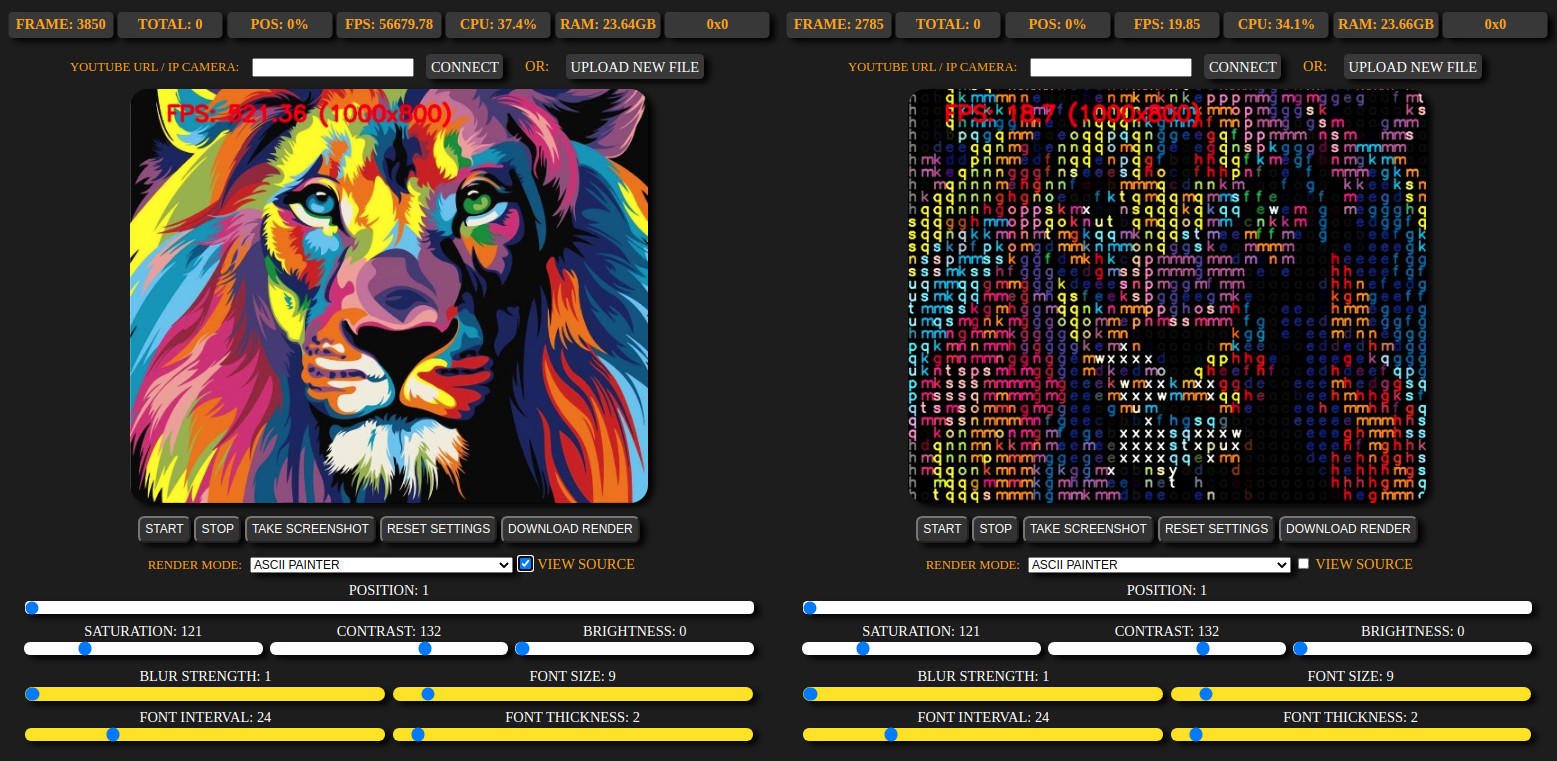

- (CPU) ASCII painter

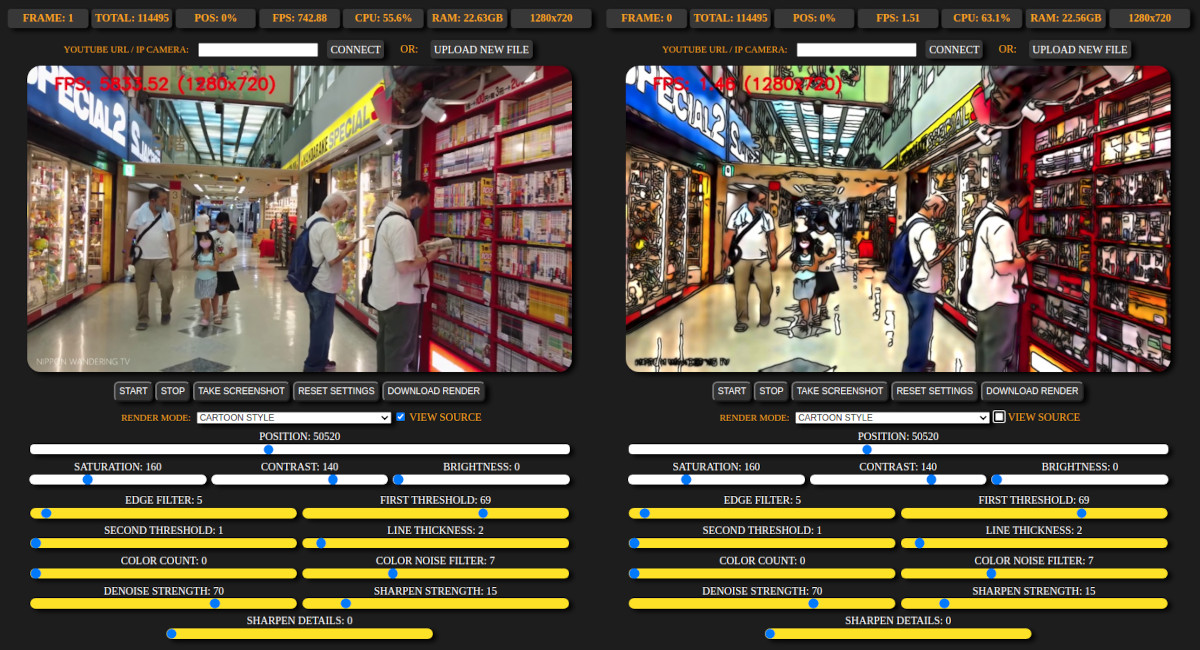

- (CPU) Cartoon style

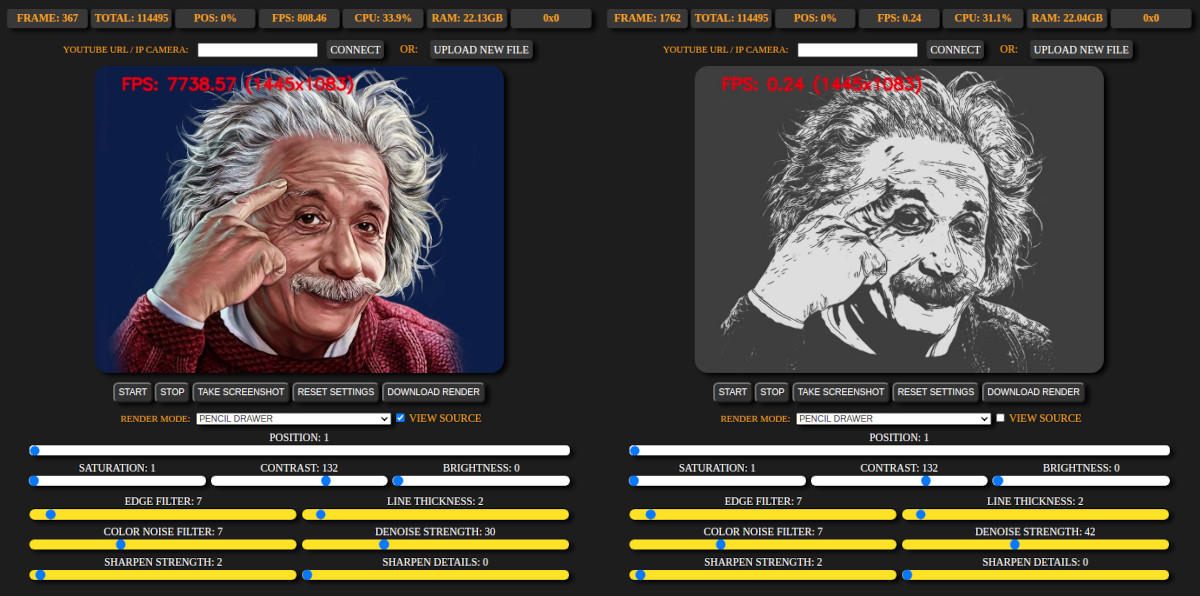

- (CPU) Pencil drawer

- (CPU) Sobel filter

- (CPU) Two-colored

- Animated demo for rendering modes

- Launches separate editor process with selected mode, source and unique port for multiple user connections

- Live Flask streaming preview

- Processing frames with selected mode/settings and downloading video/objects

- Taking a screenshot

- Rewinding with slider

- Original / rendered view switching

- Render mode, model and settings changing without page reload (AJAX)

- Viewing source info, progress and server stats in real time (RAM, CPU load, FPS, frame size)
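A live preview of this kind is commonly served as an MJPEG stream, where each JPEG frame is sent as one part of a `multipart/x-mixed-replace` response. The sketch below only builds the byte chunks and uses no web framework; how PyCameraServer implements its preview is not shown in this README, so treat the boundary name `frame` and the function `mjpeg_chunks` as assumptions.

```python
from typing import Iterable, Iterator

BOUNDARY = b"frame"  # hypothetical boundary name


def mjpeg_chunks(jpeg_frames: Iterable[bytes]) -> Iterator[bytes]:
    """Wrap already-encoded JPEG frames as multipart/x-mixed-replace parts."""
    for jpeg in jpeg_frames:
        yield (
            b"--" + BOUNDARY + b"\r\n"
            b"Content-Type: image/jpeg\r\n\r\n" + jpeg + b"\r\n"
        )
```

In Flask, a generator like this is typically passed to `Response(mjpeg_chunks(frames), mimetype="multipart/x-mixed-replace; boundary=frame")`, and the browser replaces the displayed image as each part arrives.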

Note: the rendering process stops a few seconds after the user closes the browser tab.

Simultaneous work on different devices or browser tabs is supported by reserving a unique user port, generated from the main page.
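One common way to obtain a unique port per user is to ask the operating system for a free one by binding to port 0. The sketch below illustrates that idea only; it is an assumption, not the project's actual port-generation logic, and `reserve_free_port` is a hypothetical name.

```python
import socket


def reserve_free_port(host: str = "127.0.0.1") -> int:
    """Ask the OS for a currently unused TCP port and return its number."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind((host, 0))  # port 0 lets the OS pick a free port
        return sock.getsockname()[1]


port = reserve_free_port()
print(f"editor could be started on port {port}")
```

The socket is closed before the port is used, so another process could in principle claim it in between; a production setup would need to handle a failed bind and retry.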

- OS: Ubuntu 20.04 LTS

- GPU: NVIDIA RTX 2060

- NVIDIA driver: 440

- OpenCV 4.3

- CUDA 10.0

- cuDNN 7.6.4

- Python 3.8

- Flask 1.1.2

- PyTorch 1.4.0

Main page example (redirects user to editor with specific port and mode):

python main.py -i 0.0.0.0 -o 8000

Manual editor page launch:

python processing.py -i 0.0.0.0 -o 8001 -s my_source -c a -m video

Args:

- -i: server IP

- -o: user port

- -s: video / image file, YouTube or IP Camera URL. File should be placed in folder "user_uploads"

- -c: rendering mode (letters 'a-z')

- -m: source mode ("ipcam", "youtube", "video", "image")
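The arguments above could be parsed with `argparse` roughly as follows. This is a sketch under stated assumptions: the destination names (`ip`, `port`, `source`, `render_mode`, `source_mode`), the defaults and the validation are illustrative, and the real parser in processing.py may differ.

```python
import argparse
import string


def render_mode_letter(value: str) -> str:
    """Accept a single lowercase letter 'a'-'z' as the rendering mode."""
    if len(value) != 1 or value not in string.ascii_lowercase:
        raise argparse.ArgumentTypeError("rendering mode must be one letter a-z")
    return value


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Editor process (sketch)")
    parser.add_argument("-i", dest="ip", default="0.0.0.0", help="server ip")
    parser.add_argument("-o", dest="port", type=int, required=True, help="user port")
    parser.add_argument("-s", dest="source", required=True,
                        help="file in user_uploads, Youtube URL or IP camera URL")
    parser.add_argument("-c", dest="render_mode", type=render_mode_letter,
                        required=True, help="rendering mode (letter a-z)")
    parser.add_argument("-m", dest="source_mode", required=True,
                        choices=["ipcam", "youtube", "video", "image"],
                        help="source mode")
    return parser


# Parse the manual launch example shown above.
args = build_parser().parse_args(
    ["-i", "0.0.0.0", "-o", "8001", "-s", "my_source", "-c", "a", "-m", "video"]
)
print(args.ip, args.port, args.source, args.render_mode, args.source_mode)
```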

Ipcam with YOLO detector:

python processing.py -i 0.0.0.0 -o 8002 -s http://192.82.150.11:8083/mjpg/video.mjpg -c a -m ipcam

YouTube with ASCII mode:

python processing.py -i 0.0.0.0 -o 8002 -s https://youtu.be/5JJu-CTDLoc -c q -m youtube

Video with frame interpolation:

python processing.py -i 0.0.0.0 -o 8002 -s my_video.avi -c z -m video

Image with ESRGAN upscaler:

python processing.py -i 0.0.0.0 -o 8002 -s my_image.jpg -c t -m image

Check processing.py for other modes
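Since the main page launches a separate editor process per user, the same command lines can also be assembled programmatically. The helper below is a hypothetical sketch that only uses the flags documented above; it builds the argument list without starting anything.

```python
import sys
from typing import List


def build_editor_command(port: int, source: str, render_mode: str,
                         source_mode: str, ip: str = "0.0.0.0") -> List[str]:
    """Build the argv list for launching processing.py with documented flags."""
    return [
        sys.executable, "processing.py",
        "-i", ip,
        "-o", str(port),
        "-s", source,
        "-c", render_mode,
        "-m", source_mode,
    ]


command = build_editor_command(8002, "my_image.jpg", "t", "image")
print(" ".join(command))
```

Passing the resulting list to `subprocess.Popen(command)` from the project directory would start the editor as a separate process.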

- Follow this manual to compile OpenCV with GPU acceleration (YOLO, Mask R-CNN) and create a Python virtual environment in Linux: https://github.com/alexfcoding/OpenCV-cuDNN-manual

- Unpack files to folder "models" [Google Drive]

- Install libraries:

$ workon opencv_gpu

$ pip install flask

$ pip install psutil

$ pip install scikit-learn

$ pip install torch==1.4.0+cu100 torchvision==0.5.0+cu100 -f https://download.pytorch.org/whl/torch_stable.html

$ pip install pafy

$ pip install youtube-dl

- Generate PyTorch extensions and correlation package required by PWCNet for DAIN as described here:

$ workon opencv_gpu

$ cd DAIN

$ cd my_package

$ ./build.sh

$ cd ../PWCNet/correlation_package_pytorch1_0

$ ./build.sh

- Downloading zip with detected YOLO image objects

- Rendering and classes counting

- Blurring with ASCII

- Drawing R-CNN masks with classes and edge detection

- Animating background with secondary video and applying colored edge detection to masks

- Drawing R-CNN masks with grayscale background

- Drawing R-CNN masks with blurred background