MariaDB offers a synchronous multi-master cluster called Galera Cluster.

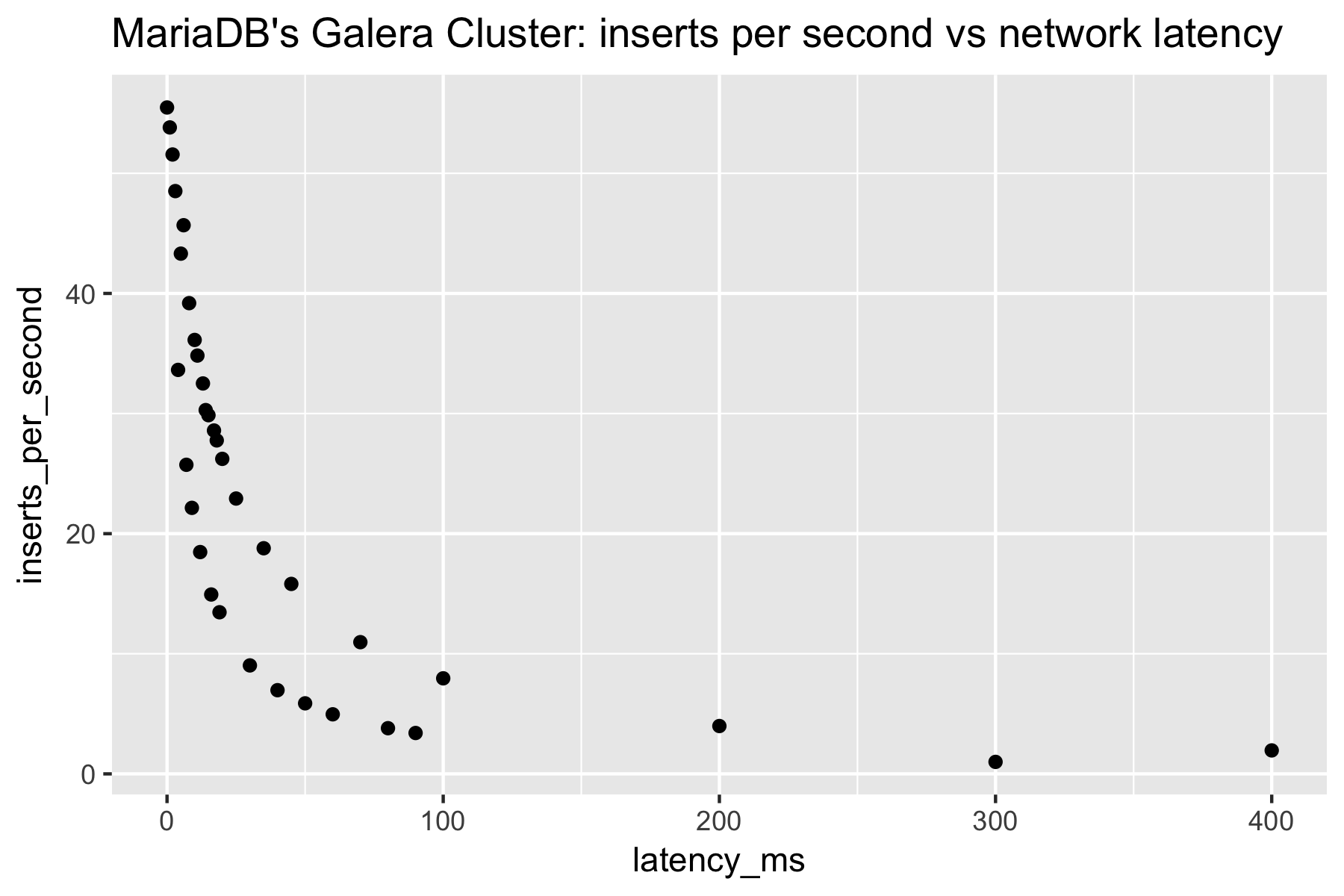

For our use case, the nodes of the cluster will be in different datacenters. When a record is written to MariaDB's Galera Cluster, it is replicated to all the nodes before an acknowledgement is sent to the client. This is great for consistency, but not so great for throughput, because every write has to wait on the network between datacenters.

It was important for us to understand the relationship between network latency and throughput. If the network latency were to spike in one of the datacenters, how many writes could we still perform from a single connection?
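As a rough back-of-the-envelope model (an assumption for illustration, not a description of Galera's internals), suppose a single connection issues writes one at a time and each commit waits for about one network round trip to the slowest node. The function name `max_writes_per_second` and the `local_commit_ms` parameter are hypothetical.

```python
def max_writes_per_second(round_trip_ms: float, local_commit_ms: float = 0.0) -> float:
    """Upper bound on sequential commits per second from one connection.

    Simplified model: each write costs one round trip plus local commit time,
    and the next write cannot start until the previous one is acknowledged.
    """
    per_write_ms = round_trip_ms + local_commit_ms
    if per_write_ms <= 0:
        raise ValueError("per-write time must be positive")
    return 1000.0 / per_write_ms


# Print the bound for a few example round-trip times (values chosen arbitrarily).
for rtt_ms in (1, 10, 50, 100):
    bound = max_writes_per_second(rtt_ms)
    print(f"{rtt_ms:>4} ms round trip -> at most {bound:.0f} writes/s")
```

Under this model, throughput from one connection falls in inverse proportion to latency, which is the relationship the measurements are meant to check against a real cluster.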

Benjamin Cane wrote a great blog post that explains how to simulate network latency using the Linux command `tc` (Traffic Control).
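For reference, here is a minimal sketch of how the `tc` invocations for a fixed delay might be assembled, using the `netem` queueing discipline. The helper names and the interface name `eth0` are assumptions; the real interface depends on the host, and actually running these commands requires root.

```python
import shlex
from typing import List


def netem_delay_command(interface: str, delay_ms: int, action: str = "add") -> List[str]:
    """Build a tc command that adds (or changes) a fixed delay on an interface."""
    if action not in ("add", "change"):
        raise ValueError("action must be 'add' or 'change'")
    if delay_ms < 0:
        raise ValueError("delay_ms must be non-negative")
    return ["tc", "qdisc", action, "dev", interface, "root",
            "netem", "delay", f"{delay_ms}ms"]


def netem_clear_command(interface: str) -> List[str]:
    """Build a tc command that removes the netem qdisc again."""
    return ["tc", "qdisc", "del", "dev", interface, "root", "netem"]


# Only print the commands here; pass the lists to subprocess.run(...) as root to apply them.
print(shlex.join(netem_delay_command("eth0", 100)))
print(shlex.join(netem_clear_command("eth0")))
```

Building the commands as argument lists keeps them easy to log and avoids shell quoting issues if they are later executed with `subprocess.run`.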

This project iteratively inserts records at various simulated network latencies to measure the effect on throughput.
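The measurement loop could look roughly like the sketch below. It is not the project's actual code: `measure_throughput` and `simulated_insert` are hypothetical names, and the insert is a stand-in that sleeps instead of talking to a database. In a real run, `insert_one` would execute an `INSERT` and commit over a single connection.

```python
import time
from typing import Callable


def measure_throughput(insert_one: Callable[[int], None], num_records: int) -> float:
    """Insert records one at a time and return the observed writes per second."""
    start = time.perf_counter()
    for i in range(num_records):
        insert_one(i)
    elapsed = time.perf_counter() - start
    # Guard against a zero reading on very coarse clocks.
    return num_records / elapsed if elapsed > 0 else float("inf")


def simulated_insert(delay_s: float) -> Callable[[int], None]:
    """Stand-in for a real insert: just waits, as if blocked on the network."""
    def insert_one(_record_id: int) -> None:
        time.sleep(delay_s)
    return insert_one


# Sweep a few example delays (arbitrary values) and report the measured rate.
for delay_ms in (1, 5):
    rate = measure_throughput(simulated_insert(delay_ms / 1000.0), 20)
    print(f"{delay_ms} ms per write -> {rate:.0f} writes/s")
```

Passing the insert as a callable keeps the timing logic separate from the database driver, so the same loop can be pointed at a real connection once the latency has been set.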

Here's a video walk-through: