- Python

- pip

- Docker

- Docker Compose

- VS Code

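- Clone the repository, then create and activate a virtual environment and install the Python dependencies:

```bash
git clone https://github.com/alfianhid/technical-tests.git
cd technical-tests
python3 -m venv venv
source venv/bin/activate
pip3 install -r requirements.txt
```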
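To confirm that the virtual environment is actually active before `pip3 install` runs, a quick check like the one below can be saved to a file and run with `python3`. This is a small sketch and not part of the repository. `in_virtualenv` is a hypothetical helper. It relies on the documented `venv` behaviour: inside an environment, `sys.prefix` points at the environment directory, while `sys.base_prefix` still points at the base Python installation.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a venv."""
    # In a venv, sys.prefix is the venv directory; sys.base_prefix is the base install.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    if in_virtualenv():
        print(f"OK: using virtual environment at {sys.prefix}")
    else:
        print("Not in a virtual environment; run `source venv/bin/activate` first")
```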

- Find the `.env-example` file, rename it to `.env`, and edit it with your credentials.
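Before starting the containers, it can help to confirm that no variable in `.env` was left blank. The following is a minimal sketch, assuming `.env` is a plain list of `KEY=VALUE` lines. The variable names the project actually uses are not listed here, so the script makes no assumption about them. `parse_env` and `empty_keys` are hypothetical helpers. The parser is deliberately simplified and ignores `export` prefixes, inline comments, and multi-line values.

```python
from pathlib import Path


def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    env = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes, e.g. PASSWORD="secret"
        env[key.strip()] = value.strip().strip('"').strip("'")
    return env


def empty_keys(env: dict) -> list:
    """Return the keys that still have no value."""
    return [key for key, value in env.items() if not value]


if __name__ == "__main__":
    env_path = Path(".env")
    if not env_path.exists():
        print(".env not found; rename .env-example to .env first")
    else:
        env = parse_env(env_path.read_text())
        missing = empty_keys(env)
        if missing:
            print("Fill in these variables in .env:", ", ".join(missing))
        else:
            print(f".env looks complete ({len(env)} variables)")
```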

- Run the following commands to start the DB and Airflow containers with the `.env` file:

```bash
docker compose --env-file <path_to_.env_file> -f <path_to_docker-compose-db.yml> up --build -d
docker compose --env-file <path_to_.env_file> -f <path_to_docker-compose-airflow.yml> up --build -d
```
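Instead of refreshing the browser until the UI responds, you can script the wait for the Airflow services. The following is a minimal sketch, assuming an Airflow 2.x webserver. That version serves an unauthenticated `/health` endpoint returning JSON with `metadatabase` and `scheduler` statuses. Newer Airflow releases moved this endpoint, so the URL may need adjusting. `is_healthy` and `wait_for_airflow` are hypothetical helpers, not part of the repository.

```python
import json
import time
import urllib.request

# Assumption: Airflow 2.x webserver on port 8080 exposing /health.
HEALTH_URL = "http://localhost:8080/health"


def is_healthy(payload: dict) -> bool:
    """Return True when both the metadata DB and the scheduler report 'healthy'."""
    return all(
        (payload.get(component) or {}).get("status") == "healthy"
        for component in ("metadatabase", "scheduler")
    )


def wait_for_airflow(url: str = HEALTH_URL, timeout_s: float = 300,
                     interval_s: float = 5) -> bool:
    """Poll the health endpoint until it reports healthy or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=10) as resp:
                if is_healthy(json.load(resp)):
                    return True
        except (OSError, ValueError):
            # OSError covers refused connections, timeouts and HTTP errors;
            # ValueError covers a response that is not valid JSON yet.
            pass
        time.sleep(interval_s)
    return False
```

`wait_for_airflow()` returns `True` as soon as both components report healthy, or `False` after the five-minute default timeout. It only checks Airflow, not the containers started from the DB compose file.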

- Once all the services are up and running, go to http://localhost:8080 (use `airflow` for both the username and the password).

- Before going further, we need to grant our MySQL user privileges on the database so that it can write to it. Execute the following commands:

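```bash
docker exec -it <mysql_container_name> mysql -u root -p
```

Then, at the `mysql>` prompt (after entering the root password):

```sql
GRANT ALL PRIVILEGES ON *.* TO 'your_username'@'%';
FLUSH PRIVILEGES;
exit
```

- Before running the DAGs, we need to create the schema and tables in the database. Execute the following commands from the repository root: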

```bash
python3 dags/initialize_databases.py
python3 dags/initialize_reference_table.py
```

- Now, go back to http://localhost:8080, then unpause all DAGs and run them for the first time.

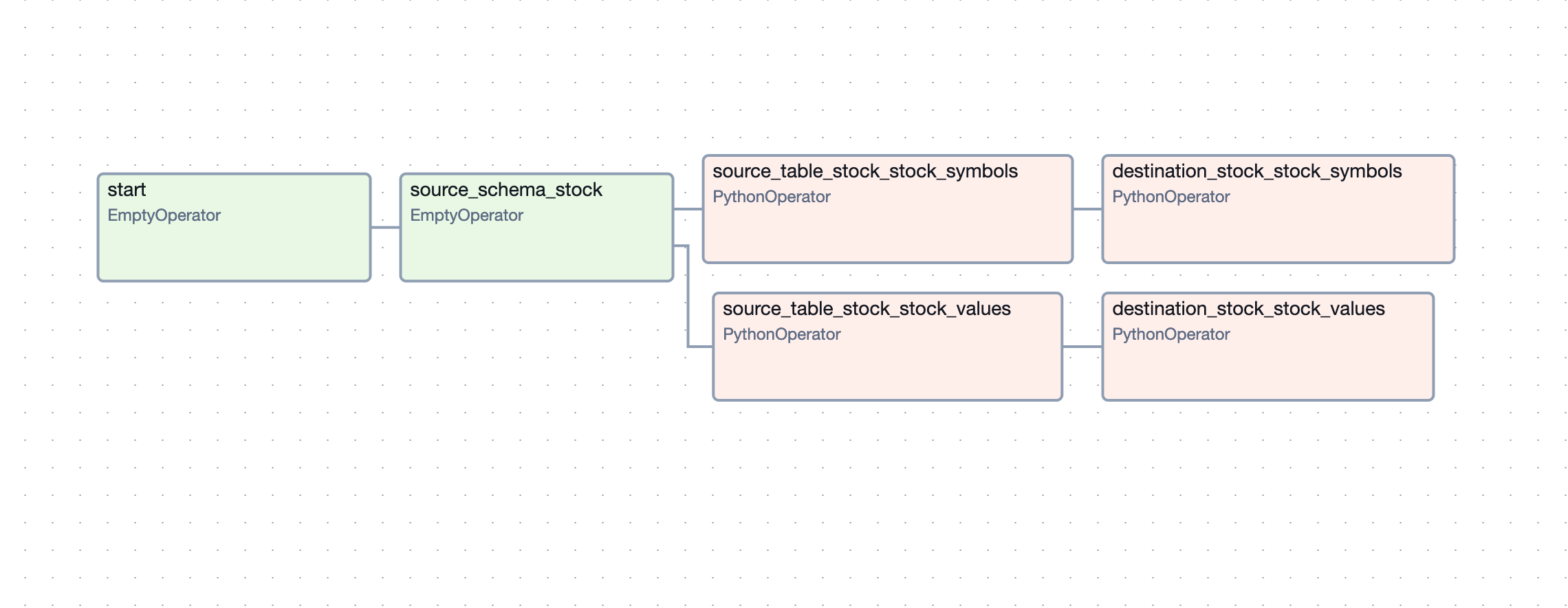
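You can also script unpausing and triggering instead of clicking through the UI. The following is a minimal sketch, assuming an Airflow 2.x webserver whose stable REST API (`/api/v1`) accepts HTTP basic auth with the `airflow`/`airflow` account. Basic auth only works if `airflow.api.auth.backend.basic_auth` is listed in `AIRFLOW__API__AUTH_BACKENDS`. The official Airflow docker-compose file sets this, but this project's `docker-compose-airflow.yml` may not. `build_request`, `unpause_request`, `trigger_request` and `unpause_and_trigger_all` are hypothetical helpers.

```python
import base64
import json
import urllib.request
from typing import Optional

# Assumption: Airflow 2.x stable REST API served by the webserver on port 8080.
BASE_URL = "http://localhost:8080/api/v1"


def build_request(method: str, path: str, body: Optional[dict] = None,
                  user: str = "airflow", password: str = "airflow") -> urllib.request.Request:
    """Build a JSON API request authenticated with HTTP basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    data = json.dumps(body).encode() if body is not None else None
    req = urllib.request.Request(f"{BASE_URL}{path}", data=data, method=method)
    req.add_header("Authorization", f"Basic {token}")
    req.add_header("Content-Type", "application/json")
    return req


def unpause_request(dag_id: str) -> urllib.request.Request:
    # PATCH /dags/{dag_id} with is_paused=false unpauses the DAG.
    return build_request("PATCH", f"/dags/{dag_id}", {"is_paused": False})


def trigger_request(dag_id: str) -> urllib.request.Request:
    # POST /dags/{dag_id}/dagRuns queues a new manual DAG run.
    return build_request("POST", f"/dags/{dag_id}/dagRuns", {"conf": {}})


def unpause_and_trigger_all() -> None:
    """Unpause and trigger every DAG returned by GET /dags (first page only)."""
    with urllib.request.urlopen(build_request("GET", "/dags")) as resp:
        dag_ids = [dag["dag_id"] for dag in json.load(resp)["dags"]]
    for dag_id in dag_ids:
        for req in (unpause_request(dag_id), trigger_request(dag_id)):
            with urllib.request.urlopen(req):
                pass  # urlopen raises HTTPError on 4xx/5xx, so reaching here means success
        print(f"Unpaused and triggered {dag_id}")
```

Calling `unpause_and_trigger_all()` once the containers are healthy unpauses each DAG on the first page of `GET /api/v1/dags` (100 by default) and queues one run of each. The Airflow CLI offers the same operations (`airflow dags unpause <dag_id>`, `airflow dags trigger <dag_id>`) when run inside one of the Airflow containers with `docker exec`.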

This is the expected result for Airflow:

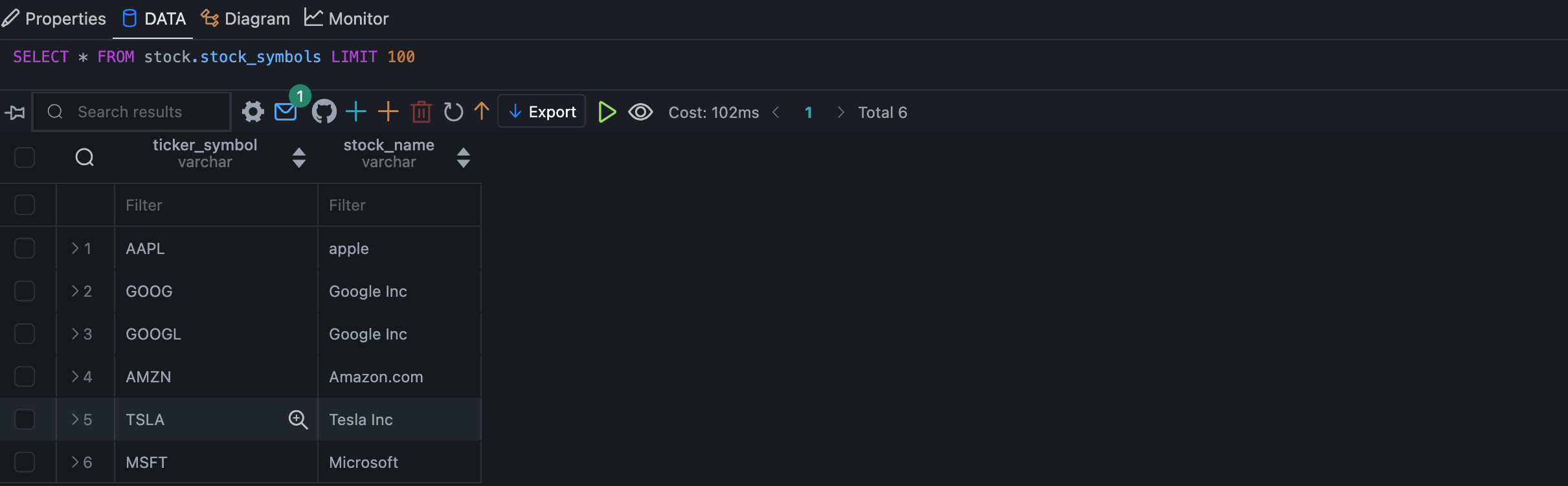

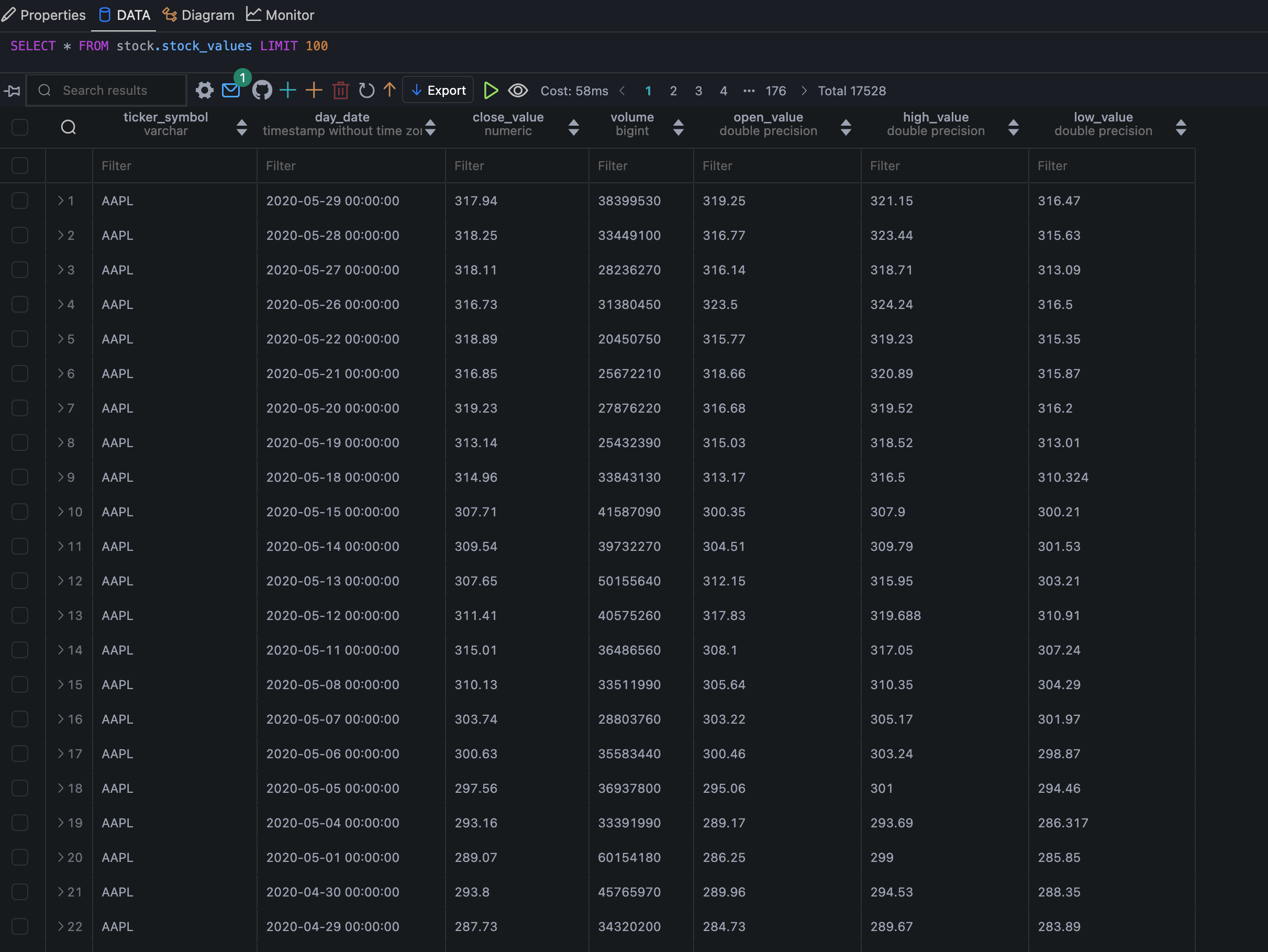

And this is the expected result in the local Postgres DB (after triggering the DAGs):