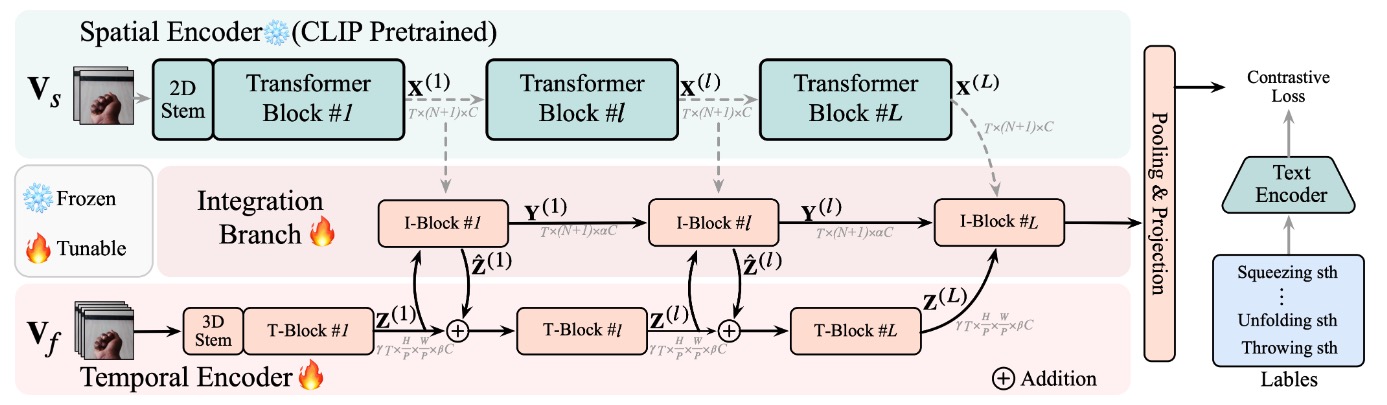

Disentangling Spatial and Temporal Learning for Efficient Image-to-Video Transfer Learning

Zhiwu Qing, Shiwei Zhang, Ziyuan Huang, Yingya Zhang, Changxin Gao, Deli Zhao, Nong Sang

In ICCV, 2023. [Paper].

Latest

[2023-09] Code and models are available!

This repo is a modification of the TAdaConv repo.

Installation

Requirements:

- Python>=3.6

- torch>=1.5

- torchvision (version corresponding with torch)

- simplejson==3.11.1

- decord>=0.6.0

- pyyaml

- einops

- oss2

- psutil

- tqdm

- pandas

Optional requirements:

- fvcore (for FLOPs calculation)
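To see which of the packages listed above are already present in an environment, a small check like the following may help. It is only a sketch: it assumes Python 3.8+ (for `importlib.metadata`, which is newer than the minimum Python version listed above) and that each distribution name matches the name used in the list. The helper name `installed_versions` is made up for this example and is not part of the repo. It does not compare versions against the minimums.

```python
from importlib import metadata

# Names copied from the requirements list above; matching them to
# installed distribution names is an assumption of this sketch.
REQUIRED = [
    "torch", "torchvision", "simplejson", "decord", "pyyaml",
    "einops", "oss2", "psutil", "tqdm", "pandas",
]
OPTIONAL = ["fvcore"]


def installed_versions(names):
    """Map each package name to its installed version, or None if missing."""
    found = {}
    for name in names:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, version in installed_versions(REQUIRED + OPTIONAL).items():
        status = version if version is not None else "NOT INSTALLED"
        print(f"{name:12s} {status}")
```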

Model Zoo

| Dataset | Architecture | Pre-training | #Frames | Acc@1 | Acc@5 | Checkpoint | Config |
|---|---|---|---|---|---|---|---|
| SSV2 | ViT-B/16 | CLIP | 8 | 68.7 | 91.1 | [google drive] | vit-b16-8+16f |
| SSV2 | ViT-B/16 | CLIP | 16 | 70.2 | 92.0 | [google drive] | vit-b16-16+32f |
| SSV2 | ViT-B/16 | CLIP | 32 | 70.9 | 92.1 | [google drive] | vit-b16-32+64f |
| SSV2 | ViT-L/14 | CLIP | 32 | 73.1 | 93.2 | [google drive] | vit-l14-32+64f |
| K400 | ViT-B/16 | CLIP | 8 | 83.6 | 96.3 | [google drive] | vit-b16-8+16f |
| K400 | ViT-B/16 | CLIP | 16 | 84.4 | 96.7 | [google drive] | vit-b16-16+32f |
| K400 | ViT-B/16 | CLIP | 32 | 85.0 | 97.0 | [google drive] | vit-b16-32+64f |
| K400 | ViT-L/14 | CLIP | 32 | 88.0 | 97.9 | [google drive] | vit-l14-32+64f |
| K400 | ViT-L/14 | CLIP + K710 | 32 | 89.6 | 98.4 | [google drive] | vit-l14-32+64f |

Running instructions

You can find some pre-trained models in the Model Zoo.

For a detailed explanation of the approach itself, please refer to the paper.

For an example run, set DATA_ROOT_DIR and ANNO_DIR in configs/projects/dist/vit_base_16_ssv2.yaml and OUTPUT_DIR in configs/projects/dist/ssv2-cn/vit-b16-8+16f_e001.yaml, then run the following command to fine-tune:

python runs/run.py --cfg configs/projects/dist/ssv2-cn/vit-b16-8+16f_e001.yaml
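The config edits described above can also be scripted instead of done by hand. The sketch below is not part of the repo: `set_key_everywhere` and `update_yaml_file` are hypothetical helpers, and because the nesting of DATA_ROOT_DIR, ANNO_DIR, and OUTPUT_DIR inside the YAML files is not stated here, the code searches for each key wherever it already appears. It assumes the files load with PyYAML's `safe_load`; rewriting a file this way drops any comments it contained, so editing by hand may be preferable.

```python
def set_key_everywhere(node, key, value):
    """Overwrite `key` wherever it already exists in a nested dict/list.

    Returns the number of occurrences that were replaced.
    """
    count = 0
    if isinstance(node, dict):
        for k in list(node):
            if k == key:
                node[k] = value
                count += 1
            else:
                count += set_key_everywhere(node[k], key, value)
    elif isinstance(node, list):
        for item in node:
            count += set_key_everywhere(item, key, value)
    return count


def update_yaml_file(path, overrides):
    """Load a YAML config, apply `overrides` to existing keys, write it back."""
    import yaml  # PyYAML, listed in the requirements

    with open(path) as f:
        cfg = yaml.safe_load(f)
    for key, value in overrides.items():
        if set_key_everywhere(cfg, key, value) == 0:
            raise KeyError(f"{key} not found in {path}")
    with open(path, "w") as f:
        yaml.safe_dump(cfg, f, sort_keys=False)


# Example usage (the directory values are placeholders, not real paths):
# update_yaml_file(
#     "configs/projects/dist/vit_base_16_ssv2.yaml",
#     {"DATA_ROOT_DIR": "/path/to/videos", "ANNO_DIR": "/path/to/annotations"},
# )
# update_yaml_file(
#     "configs/projects/dist/ssv2-cn/vit-b16-8+16f_e001.yaml",
#     {"OUTPUT_DIR": "/path/to/output"},
# )
```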

We fine-tune on 8 NVIDIA V100 GPUs, with a batch of 32 video clips per GPU.
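The arithmetic behind this setup is simple: 8 GPUs with 32 clips each give 256 clips per optimization step. The sketch below works that out and, for a smaller machine, computes how many gradient-accumulation steps would reach the same total. Both function names are made up for this example, and whether the training script supports gradient accumulation is not stated here, so treat the second function as arithmetic only.

```python
def global_batch_size(num_gpus, clips_per_gpu, accumulation_steps=1):
    """Total clips contributing to one optimizer update."""
    return num_gpus * clips_per_gpu * accumulation_steps


def accumulation_steps_to_match(target_global_batch, num_gpus, clips_per_gpu):
    """Accumulation steps needed to reach `target_global_batch` exactly."""
    per_step = num_gpus * clips_per_gpu
    if target_global_batch % per_step != 0:
        raise ValueError(
            f"{target_global_batch} is not a multiple of {per_step} clips per step"
        )
    return target_global_batch // per_step


reference = global_batch_size(num_gpus=8, clips_per_gpu=32)
print(reference)  # 256

# Hypothetical smaller setup: 2 GPUs holding 16 clips each.
print(accumulation_steps_to_match(reference, num_gpus=2, clips_per_gpu=16))  # 8
```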

Citing DiST

If you find DiST useful for your research, please consider citing the paper as follows:

@inproceedings{qing2023dist,
  title={Disentangling Spatial and Temporal Learning for Efficient Image-to-Video Transfer Learning},
  author={Qing, Zhiwu and Zhang, Shiwei and Huang, Ziyuan and Zhang, Yingya and Gao, Changxin and Zhao, Deli and Sang, Nong},
  booktitle={ICCV},
  year={2023}
}