A TensorFlow implementation of all the compared models for our SIGIR 2019 paper:

Lifelong Sequential Modeling with Personalized Memorization for User Response Prediction

The experiments are supported by Alimama Rank Group from Alibaba Inc.

A preprint version of the paper is available on arXiv.

If you have any problems, please open an issue or contact the authors: Kan Ren, Jiarui Qin and Yuchen Fang.

User response prediction, which models the user preference w.r.t. the presented items, plays a key role in online services. With two-decade rapid development, nowadays the cumulated user behavior sequences on mature Internet service platforms have become extremely long since the user's first registration. Each user not only has intrinsic tastes, but also keeps changing her personal interests during lifetime. Hence, it is challenging to handle such lifelong sequential modeling for each individual user. Existing methodologies for sequential modeling are only capable of dealing with relatively recent user behaviors, which leaves huge space for modeling long-term especially lifelong sequential patterns to facilitate user modeling. Moreover, one user's behavior may be accounted for various previous behaviors within her whole online activity history, i.e., long-term dependency with multi-scale sequential patterns. In order to tackle these challenges, in this paper, we propose a Hierarchical Periodic Memory Network for lifelong sequential modeling with personalized memorization of sequential patterns for each user. The model also adopts a hierarchical and periodical updating mechanism to capture multi-scale sequential patterns of user interests while supporting the evolving user behavior logs. The experimental results over three large-scale real-world datasets have demonstrated the advantages of our proposed model with significant improvement in user response prediction performance against the state-of-the-arts.

- TensorFlow 1.4

- Python 2.7

- numpy

- sklearn

- In the `data/amazon` folder, we provide three small preprocessed sample datasets, and the sample code runs on them. `dataset_crop.pkl` is for the baseline `SHAN` (each sequence is cut into a short-term and a long-term sequence), `dataset_hpmn.pkl` (padded at the front) is for our `HPMN` model, and all the other baselines use `dataset.pkl`.

- For the full datasets, the raw datasets are Amazon, Taobao and XLong.

- For `Amazon` and `Taobao`, you should extract the files into `data/raw_data/amazon` and `data/raw_data/taobao`, then do the following to preprocess the dataset:

python preprocess_amazon.py

python preprocess_taobao.py

- For `XLong`, you should download the files into `data/xlong`, then do the following:

python preprocess_xlong.py

- To run the code of the models:

python hpmn.py [DATASET] # To run HPMN, [DATASET] can be amazon or taobao

python train.py [MODEL] [DATASET] [GPU] # To run DNN/SVD++/GRU4Rec/DIEN/Caser/LSTM, [GPU] is the GPU env

python shan.py [DATASET] [GPU] # To run SHAN

python rum.py [DATASET] [GPU] # To run RUM
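The two sequence layouts mentioned for the sample data (front padding for `dataset_hpmn.pkl`, a short-term/long-term cut for `dataset_crop.pkl`) can be pictured with the minimal sketch below. It is an illustration only, not the repository's preprocessing code: the function names `pad_front` and `crop_long_short`, the padding value `0`, and the rule that the last `short_len` behaviors form the short-term part are all assumptions.

```python
def pad_front(sequence, max_len, pad_value=0):
    """Return a list of exactly max_len items, padded at the front.

    If the sequence is longer than max_len, only the most recent
    max_len items are kept (an assumption made for this sketch).
    """
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    recent = list(sequence[-max_len:])
    return [pad_value] * (max_len - len(recent)) + recent


def crop_long_short(sequence, short_len):
    """Cut a sequence into (long_term, short_term) parts.

    Hypothetical rule: the last short_len behaviors form the short-term
    part and everything before them forms the long-term part.
    """
    if short_len <= 0:
        raise ValueError("short_len must be positive")
    sequence = list(sequence)
    return sequence[:-short_len], sequence[-short_len:]


# Example with made-up item ids.
padded = pad_front([3, 4, 5], max_len=5)
long_term, short_term = crop_long_short([1, 2, 3, 4, 5], short_len=2)
```

Padding at the front keeps the most recent behaviors aligned at the end of every fixed-length sequence, which is convenient when the prediction target follows the last behavior.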

You are more than welcome to cite our paper:

@inproceedings{ren2019lifelong,

title={Lifelong Sequential Modeling with Personalized Memorization for User Response Prediction},

author={Ren, Kan and Qin, Jiarui and Fang, Yuchen and Zhang, Weinan and Zheng, Lei and Bian, Weijie and Zhou, Guorui and Xu, Jian and Yu, Yong and Zhu, Xiaoqiang and Gai, Kun},

booktitle={Proceedings of 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval},

year={2019},

organization={ACM}

}

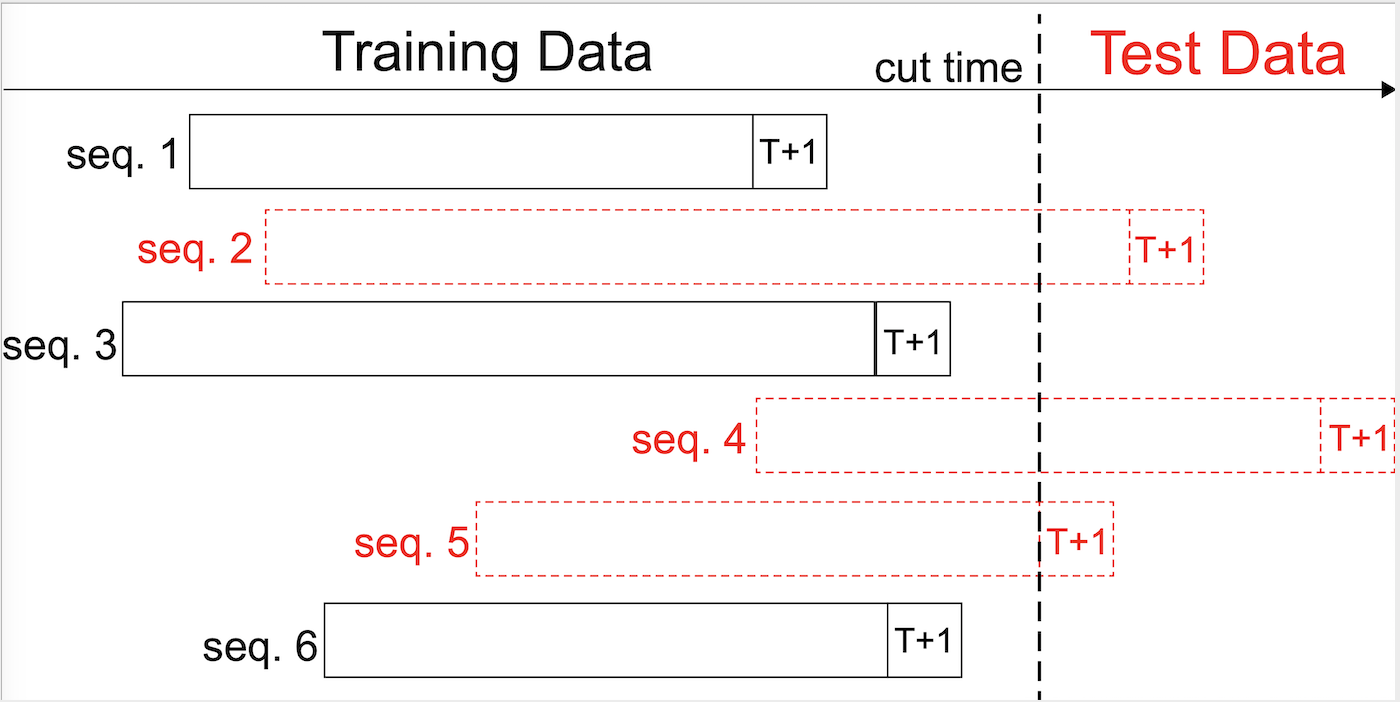

- As shown in the figure, we split the dataset into training and test sets according to the timestamp of the prediction behavior. We set a cut time within the time range covered by the full dataset. If the last behavior of a sequence took place before the cut time, the sequence is put into the training set; otherwise, it is put into the test set. In this way, the training set is about 70% of the whole dataset and the test set is about 30%.
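
The split rule above can be sketched as follows. This is a minimal illustration rather than the repository's code: the function name `split_by_cut_time` and the data layout (each sequence as a list of `(item_id, timestamp)` pairs sorted by time) are assumptions.

```python
def split_by_cut_time(sequences, cut_time):
    """Split sequences into (train, test) by the time of their last behavior.

    A sequence whose last behavior happened before cut_time goes to the
    training set; every other sequence goes to the test set.
    """
    train, test = [], []
    for seq in sequences:
        last_timestamp = seq[-1][1]  # timestamp of the last behavior
        if last_timestamp < cut_time:
            train.append(seq)
        else:
            test.append(seq)
    return train, test


# Example with made-up item ids and integer timestamps.
sequences = [
    [("a", 1), ("b", 5)],
    [("c", 2), ("d", 9)],
    [("e", 3), ("f", 12)],
]
train_set, test_set = split_by_cut_time(sequences, cut_time=10)
```

In practice the cut time would be chosen so that roughly 70% of the sequences end before it, which matches the proportions stated above.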