This repository implements a probabilistic and volumetric 3D reconstruction algorithm. The algorithm takes as input images (with known camera pose and intrinsics) and generates a dense probabilistic 3D model that exposes the uncertainty in the reconstruction. Please see the video below for a short explanation and results.

If you use this software please cite the following publication:

```bibtex
@inproceedings{3dv2015,
  title = {Towards Probabilistic Volumetric Reconstruction using Ray Potentials},
  author = {Ulusoy, Ali Osman and Geiger, Andreas and Black, Michael J.},
  booktitle = {3D Vision (3DV), 2015 3rd International Conference on},
  pages = {10-18},
  address = {Lyon},
  month = oct,
  year = {2015}
}
```

Note: The code implements a modified version of the algorithm described in the paper. The belief update for the appearance variables is replaced with the online-EM approach originally proposed by Pollard and Mundy [CVPR2007]. In our experiments, this approximation is much faster than the original approach proposed in our paper and produces comparable results.

If you run into any issues or have any suggestions, please do not hesitate to contact Ali Osman Ulusoy at osman.ulusoy@tuebingen.mpg.de

- cmake

- OpenCL

- OpenGL

- GLUT

- GLEW

- NVIDIA GPU with compute capability 2.0 or higher (see https://en.wikipedia.org/wiki/CUDA#Supported_GPU)

- Python >= 2.7

- Libtiff

- Libpng

To compile, run:

```bash
cd /path/to/my/build/folder
cmake /path/to/vxl/source/folder -DCMAKE_BUILD_TYPE=Release
make -j -k
```

If everything compiled correctly, you should see the executable `/path/to/my/build/folder/bin/boxm2_ocl_render_view` as well as the library `/path/to/my/build/folder/lib/boxm2_batch.so`.

Add the Python scripts to the PYTHONPATH as follows:

```bash
export PYTHONPATH=$PYTHONPATH:/path/to/my/build/folder/lib/:/path/to/vxl/source/folder/contrib/brl/bseg/boxm2/pyscripts/
```
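If you prefer to set the paths from inside a Python session instead of the shell, the following is a minimal sketch of the equivalent of the `export` line above. It assumes the same placeholder paths, which you must replace with your own. The change affects only the running interpreter, and the check for `boxm2_batch.so` simply reports whether the build step produced the library where this README says it should be:

```python
import os
import sys

# Placeholder paths copied from the export line; replace them with your own.
BUILD_LIB = "/path/to/my/build/folder/lib"
PYSCRIPTS = "/path/to/vxl/source/folder/contrib/brl/bseg/boxm2/pyscripts"

for path in (BUILD_LIB, PYSCRIPTS):
    # Append only once so that re-running this code does not grow sys.path.
    if path not in sys.path:
        sys.path.append(path)

# Warn early if the build did not produce the batch library in the expected place.
if not os.path.exists(os.path.join(BUILD_LIB, "boxm2_batch.so")):
    sys.stderr.write("warning: boxm2_batch.so not found in %s\n" % BUILD_LIB)
```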

- Images: The current implementation works with intensity (grayscale) images. RGB images are automatically converted to intensity.

- Cameras: Our algorithm expects the camera intrinsics ($K$, a $3\times3$ matrix) and extrinsics ($[R\,|\,t]$, a $3\times4$ matrix) for each image. The projection matrix is $P = K\,[R\,|\,t]$. Cameras are specified in a separate text file for each image. Each camera text file is formatted as follows:

```
f_x  s    c_x
0    f_y  c_y
0    0    1

R_11 R_12 R_13
R_21 R_22 R_23
R_31 R_32 R_33

t_1  t_2  t_3
```

where $f_x$ and $f_y$ are the focal lengths, $(c_x, c_y)$ is the principal point, and $s$ is the skew parameter. Together, these parameters make up the intrinsics matrix $K$. The second block is the rotation matrix $R$, and the final row is the translation vector $t$.
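Assuming the file layout above, with $t$ being the translation in $P = K\,[R\,|\,t]$, a camera file can be read and used to project a world point with plain Python. `read_camera`, `projection_matrix` and `project` are hypothetical helpers written for illustration. They are not functions from the repository's scripts, and the camera values in the usage part are made up:

```python
def read_camera(text):
    """Parse camera-file text: K (3x3), then R (3x3), then t (3 values).

    Blank lines are ignored; the 21 numbers are read in row-major order.
    """
    values = [float(v) for v in text.split()]
    if len(values) != 21:
        raise ValueError("expected 21 numbers, got %d" % len(values))
    K = [values[0:3], values[3:6], values[6:9]]
    R = [values[9:12], values[12:15], values[15:18]]
    t = values[18:21]
    return K, R, t


def projection_matrix(K, R, t):
    """Return the 3x4 matrix P = K [R | t] as nested lists."""
    Rt = [R[i] + [t[i]] for i in range(3)]  # append t as a fourth column
    return [[sum(K[i][k] * Rt[k][j] for k in range(3)) for j in range(4)]
            for i in range(3)]


def project(P, X):
    """Project a world point X = (x, y, z) to pixel coordinates (u, v)."""
    Xh = list(X) + [1.0]  # homogeneous coordinates
    u, v, w = [sum(P[i][j] * Xh[j] for j in range(4)) for i in range(3)]
    return u / w, v / w


# Usage with made-up values: focal length 500, principal point (320, 240),
# identity rotation, world origin 5 units in front of the camera.
example = """
500 0 320
0 500 240
0 0 1

1 0 0
0 1 0
0 0 1

0 0 5
"""
K, R, t = read_camera(example)
P = projection_matrix(K, R, t)
print(project(P, (0.0, 0.0, 0.0)))  # (320.0, 240.0)
```

The world origin lies on the optical axis here, so it projects exactly onto the principal point.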

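Important note: The reconstruction scripts assume that, once the lists of image files and camera files are each sorted alphabetically, the i-th image corresponds to the i-th camera:

```python
from glob import glob

# img_folder, cam_folder and index come from the surrounding reconstruction script
img_files = glob(img_folder + "/*.png")
cam_files = glob(cam_folder + "/*.txt")
img_files.sort()
cam_files.sort()

# The image and camera at the same position in the sorted lists are paired
img = img_files[index]
cam = cam_files[index]
```

One way to ensure this correspondence is to give each camera file the same base name as its image, e.g., img_00001.png and img_00001.txt.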
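Index-based pairing silently mismatches files if one image or camera is missing. The following minimal sketch catches this before reconstruction. It assumes the naming convention suggested above, where an image and its camera share a base name. `pair_by_basename` and `collect_pairs` are hypothetical helpers, not part of the provided scripts:

```python
import os
from glob import glob


def _stem(path):
    """Base name without folder or extension, e.g. 'img_00001'."""
    return os.path.splitext(os.path.basename(path))[0]


def pair_by_basename(img_files, cam_files):
    """Return (image, camera) pairs sorted by base name.

    Raises ValueError if any image has no camera with the same base name,
    or vice versa, instead of silently pairing the wrong files.
    """
    imgs = {_stem(p): p for p in img_files}
    cams = {_stem(p): p for p in cam_files}
    unmatched = sorted(set(imgs) ^ set(cams))
    if unmatched:
        raise ValueError("unmatched files: %s" % ", ".join(unmatched))
    return [(imgs[k], cams[k]) for k in sorted(imgs)]


def collect_pairs(img_folder, cam_folder):
    # Same glob patterns as the reconstruction snippet above.
    return pair_by_basename(glob(img_folder + "/*.png"),
                            glob(cam_folder + "/*.txt"))
```

Running this check once on your data and comparing its output with the sorted lists is a cheap way to confirm that the alphabetical-order assumption holds.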

You can specify the dimensions of the volume of interest (as a bounding box), the minimum allowed voxel size in the octree (in world coordinates), and the prior occupancy probability (see the paper referenced above) in an XML file, scene_info.xml, as follows:

```xml
<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<bwm_info_for_boxm2>
  <bbox maxx="1" maxy="1" maxz="1" minx="0" miny="0" minz="0" >
  </bbox>
  <min_octree_cell_length val="0.001">
  </min_octree_cell_length>
  <prior_probability val="0.01">
  </prior_probability>
</bwm_info_for_boxm2>
```

Then run the following Python code:

```python
import boxm2_create_scene_scripts

boxm2_create_scene_scripts.create_scene('/path/to/scene_info.xml', '/path/to/scene/')
```

This should create the folder `/path/to/scene/` containing an XML file called `scene.xml`.
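When preparing several scenes, it may be convenient to write scene_info.xml from Python before calling `create_scene`. The following minimal sketch reproduces the element and attribute names from the example file. `write_scene_info` and `finest_cells_per_axis` are hypothetical helpers. One assumption: the repository's XML reader should accept the declaration written by ElementTree, which omits `standalone="yes"`.

`finest_cells_per_axis` gives a rough sense of scale. It returns the number of cells per axis if every octree were fully subdivided down to `min_octree_cell_length`, which is an upper bound on the resolution:

```python
import xml.etree.ElementTree as ET


def write_scene_info(path, bbox_min, bbox_max, min_cell_length, prior):
    """Write a scene_info.xml with the same elements/attributes as the example."""
    root = ET.Element("bwm_info_for_boxm2")
    ET.SubElement(root, "bbox", {
        "minx": str(bbox_min[0]), "miny": str(bbox_min[1]), "minz": str(bbox_min[2]),
        "maxx": str(bbox_max[0]), "maxy": str(bbox_max[1]), "maxz": str(bbox_max[2]),
    })
    ET.SubElement(root, "min_octree_cell_length", {"val": str(min_cell_length)})
    ET.SubElement(root, "prior_probability", {"val": str(prior)})
    ET.ElementTree(root).write(path, encoding="UTF-8", xml_declaration=True)


def finest_cells_per_axis(bbox_min, bbox_max, min_cell_length):
    """Cells per axis if the volume were fully subdivided to min_cell_length."""
    return [int(round((hi - lo) / min_cell_length))
            for lo, hi in zip(bbox_min, bbox_max)]


# The values from the example file: a unit cube with 0.001 minimum cell length
# allows up to 1000 cells along each axis at the finest level.
write_scene_info("scene_info.xml", (0, 0, 0), (1, 1, 1), 0.001, 0.01)
print(finest_cells_per_axis((0, 0, 0), (1, 1, 1), 0.001))  # [1000, 1000, 1000]
```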

We provide the following python script to reconstruct the scene from the input images and cameras:

```
/path/to/vxl/source/folder/contrib/brl/bseg/boxm2/pyscripts/reconstruct.py
```

Please follow the instructions inside the script.

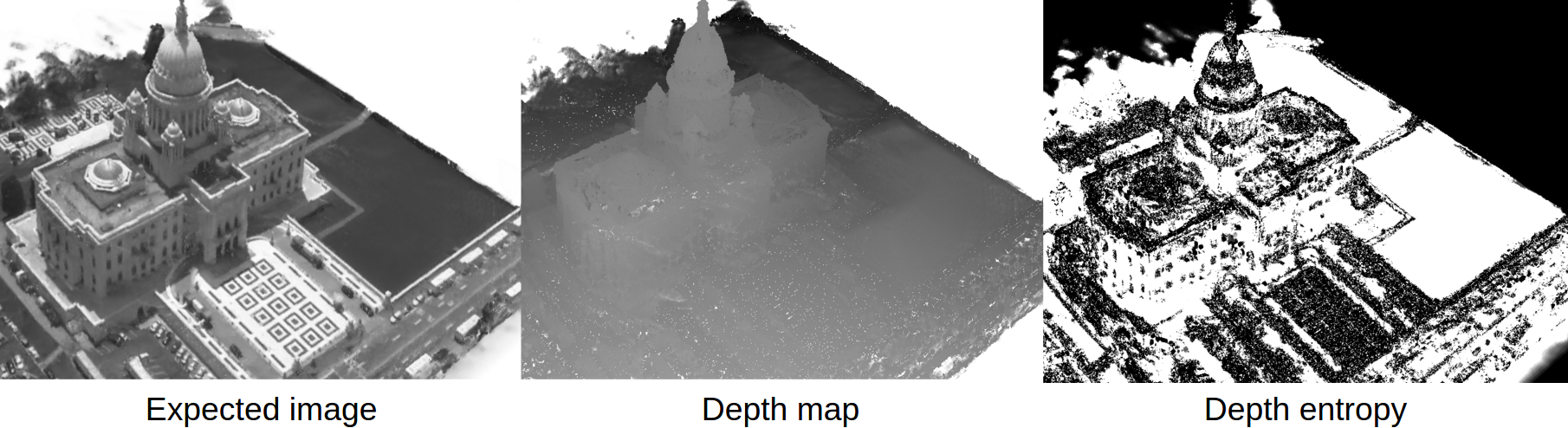

You can visualize the volumetric models using the renderer boxm2_ocl_render_view.

```bash
/path/to/my/build/folder/bin/boxm2_ocl_render_view -scene /path/to/scene/scene.xml
```

The renderer computes expected pixel intensities by ray-tracing the probabilistic 3D volume. Press d to render depth maps and e to render the entropy of the depth distributions. Please see the paper for details.
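The expected intensity and the depth entropy both follow from a per-ray depth distribution. The following minimal sketch assumes a simplified model in which each cell along a ray has an occupancy probability $o_i$ and a single expected intensity. The probability that cell $i$ is the first occupied cell along the ray is $p_i = o_i \prod_{j<i} (1 - o_j)$. The expected intensity and expected depth are then $p$-weighted sums, and the entropy is $-\sum_i p_i \log p_i$. `render_ray` is a hypothetical helper, not the OpenCL kernel used by boxm2_ocl_render_view. It does not renormalize: it returns the leftover probability that the ray passes every cell, which a full renderer would assign to the background.

```python
import math


def render_ray(occupancy, intensity, depth):
    """Expected intensity, expected depth and depth entropy along one ray.

    occupancy[i]: occupancy probability of the i-th cell hit by the ray (front to back)
    intensity[i]: expected intensity of that cell
    depth[i]:     distance of that cell from the camera
    Returns (expected_intensity, expected_depth, entropy, leftover_visibility).
    """
    vis = 1.0  # probability that the ray reaches the current cell unoccluded
    weights = []
    for o in occupancy:
        weights.append(vis * o)  # P(this is the first occupied cell)
        vis *= 1.0 - o
    exp_intensity = sum(w * a for w, a in zip(weights, intensity))
    exp_depth = sum(w * d for w, d in zip(weights, depth))
    entropy = -sum(w * math.log(w) for w in weights if w > 0.0)
    return exp_intensity, exp_depth, entropy, vis


# Two cells: the first is half occupied, the second certainly occupied.
print(render_ray([0.5, 1.0], [0.2, 0.8], [1.0, 2.0]))
```

In this example the depth is split evenly between the two cells, so the entropy is $\log 2$, the largest possible value for two outcomes. A sharply peaked depth distribution would give an entropy near zero.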

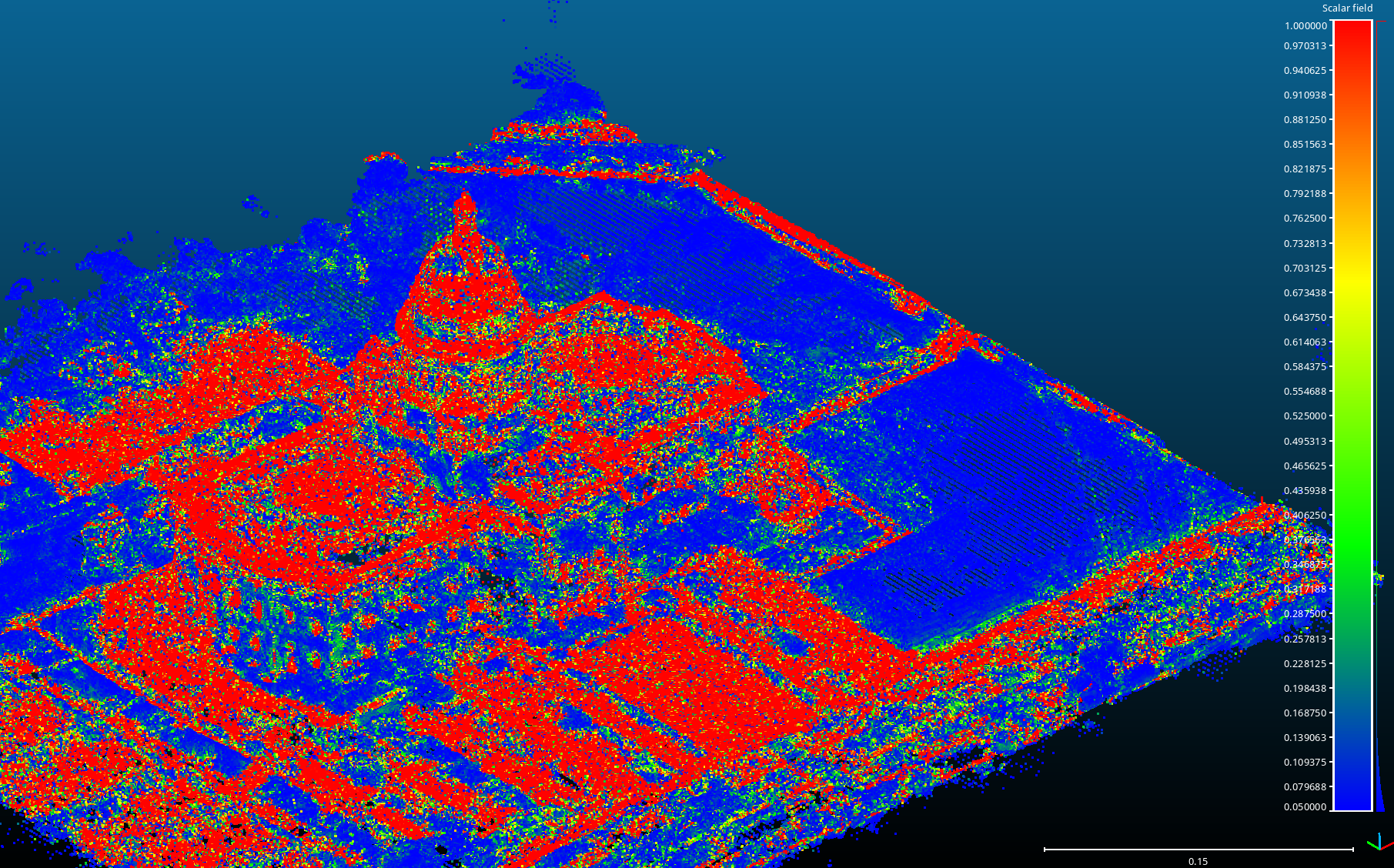

The probabilistic volumetric 3D model can also be visualized as a point cloud. We provide the following script, which extracts a point cloud from the 3D model and exports it in XYZ format for viewing in CloudCompare:

```
/path/to/vxl/source/folder/contrib/brl/bseg/boxm2/pyscripts/export_point_cloud.py
```

This script outputs points that correspond to voxel centers. Points with very small probability are filtered out for better visualization. The script also outputs the marginal occupancy belief for each point, and CloudCompare can be used to visualize these probabilities.
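The filtering and export step can be sketched as follows, assuming each voxel center comes with its occupancy belief as a fourth column. `write_xyz` and its default threshold `min_prob=0.1` are hypothetical: the actual export script may use a different threshold and column layout. A trailing value column like this can typically be loaded as a scalar field by CloudCompare's ASCII import.

```python
import io


def write_xyz(points, out, min_prob=0.1):
    """Write points as 'x y z p' lines, skipping low-probability voxels.

    points:   iterable of (x, y, z, p), p being the voxel's occupancy belief
    out:      a writable text file object
    min_prob: hypothetical cut-off; the real export script may differ
    Returns the number of points written.
    """
    n = 0
    for x, y, z, p in points:
        if p < min_prob:
            continue  # drop nearly empty voxels to declutter the view
        out.write("%f %f %f %f\n" % (x, y, z, p))
        n += 1
    return n


buf = io.StringIO()
count = write_xyz([(0, 0, 0, 0.05), (1, 2, 3, 0.9)], buf)
print(count)           # 1
print(buf.getvalue())  # 1.000000 2.000000 3.000000 0.900000
```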

As example data, we provide the three aerial datasets used in the publication: link. The folder contains one subfolder per scene. Each subfolder contains the images, the cameras, and the final octree structure used to produce the results in the paper. For convenience, a Python script to reconstruct the scene is also included; run `python reconstruct.py` from inside the subfolder.