Implementation of the Rainbow paper: Combining Improvements in Deep Reinforcement Learning. After Deep Q-Networks (DQN) were introduced in 2015, five methods were proposed to improve the performance of the original DQN algorithm:

- Double Q-Learning

- Dueling architecture

- Prioritized experience replay

- Distributional reinforcement learning

- Noisy Nets

Rainbow combines all of these methods with multi-step learning and shows that the combination performs much better than any of the individual methods alone.
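Multi-step learning replaces the single-step reward in the learning target with a truncated n-step return. The following is a minimal sketch of that return in its scalar form, not the code used in this repository; the function name `n_step_return` and its arguments are hypothetical. In the distributional setting the same discounting is applied to the support of the value distribution rather than to a single bootstrap value.

```python
from typing import Sequence


def n_step_return(rewards: Sequence[float], bootstrap_value: float, gamma: float = 0.99) -> float:
    """Discounted sum of n rewards plus a discounted bootstrap value.

    `rewards` holds r_t, ..., r_{t+n-1}; `bootstrap_value` is the value
    estimate at step t+n. Both names are illustrative assumptions.
    """
    n = len(rewards)
    discounted_rewards = sum((gamma ** k) * r for k, r in enumerate(rewards))
    return discounted_rewards + (gamma ** n) * bootstrap_value


if __name__ == "__main__":
    # Three rewards (n = 3) followed by a bootstrap estimate of 2.0.
    print(n_step_return([1.0, 0.0, 1.0], bootstrap_value=2.0))
```

With n = 1 this reduces to the usual one-step target `r + gamma * V(s')`.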

| Pong | Boxing |
|---|---|

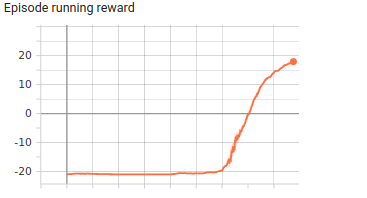

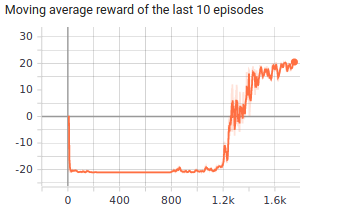

x-axis: episode number.

environment: Pong

| Running reward | Mean reward of the last ten episodes |
|---|---|

- The learning phase clearly starts around episode 1200, and the agent reaches its best performance around episode 1600.

- PongNoFrameskip-v4

- BoxingNoFrameskip-v4

- MsPacmanNoFrameskip-v4

All values (except `final_annealing_beta_steps`, which was chosen by trial and error, and `initial_mem_size_to_train`, which was chosen because of limited computational resources) are based on the Rainbow paper. Instead of hard target-network updates, the soft-update technique from the DDPG paper was applied.

| Parameter | Value |
|---|---|
| lr | 6.25e-5 |
| n_step | 3 |
| batch_size | 32 |
| gamma | 0.99 |
| tau (based on the DDPG paper) | 0.001 |
| train_period (number of steps between each optimization) | 4 |
| v_min | -10 |
| v_max | 10 |
| n_atoms | 51 |
| adam epsilon | 1.5e-4 |
| alpha | 0.5 |
| beta | 0.4 |
| clip_grad_norm | 10 |
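The soft update mentioned above moves the target network a small step toward the online network after each optimization step, instead of copying the weights every fixed number of steps. The following is a minimal sketch of that rule using plain Python lists; the `soft_update` function and the `Params` layout are hypothetical and are not taken from this repository, where the same rule would be applied to each parameter tensor of the model.

```python
from typing import Dict, List

# Hypothetical parameter container: layer name -> flat list of weights.
Params = Dict[str, List[float]]


def soft_update(online: Params, target: Params, tau: float = 0.001) -> None:
    """Update target in place: target = tau * online + (1 - tau) * target."""
    for name, online_values in online.items():
        target_values = target[name]
        for i, w in enumerate(online_values):
            target_values[i] = tau * w + (1.0 - tau) * target_values[i]


if __name__ == "__main__":
    online_params = {"fc.weight": [1.0, 2.0]}
    target_params = {"fc.weight": [0.0, 0.0]}
    soft_update(online_params, target_params, tau=0.001)
    print(target_params)
```

Setting `tau = 1` recovers a hard update (a full copy), while a small value such as the 0.001 in the table makes the target network change slowly.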

    ├── Brain
    │   ├── agent.py
    │   └── model.py
    ├── Common
    │   ├── config.py
    │   ├── logger.py
    │   ├── play.py
    │   └── utils.py
    ├── main.py
    ├── Memory
    │   ├── replay_memory.py
    │   └── segment_tree.py
    ├── README.md
    ├── requirements.txt
    └── Results
        ├── 10_last_mean_reward.png
        ├── rainbow.gif
        └── running_reward.png

- `Brain` contains the neural network structure and the agent's decision-making core.

- `Common` contains small utilities shared by most RL projects that handle auxiliary tasks such as logging and wrapping Atari environments.

- `main.py` is the core module that manages all other parts and makes the agent interact with the environment.

- `Memory` contains the agent's replay memory with the prioritized experience replay extension.
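Prioritized experience replay samples transitions in proportion to their priority raised to `alpha` and corrects the resulting bias with importance-sampling weights controlled by `beta`. The following is a minimal sketch of those two quantities over a plain list; it is not the repository's implementation (which, judging by `segment_tree.py`, uses a tree structure for efficient sampling), and the function name is hypothetical. The defaults mirror the `alpha` and `beta` values in the hyperparameter table; the parameter name `final_annealing_beta_steps` suggests that `beta` is annealed during training, which this sketch does not model.

```python
from typing import List, Tuple


def per_probabilities_and_weights(
    priorities: List[float], alpha: float = 0.5, beta: float = 0.4
) -> Tuple[List[float], List[float]]:
    """Return sampling probabilities and normalized importance-sampling weights.

    Assumes every priority is strictly positive.
    """
    # P(i) = p_i^alpha / sum_k p_k^alpha
    scaled = [p ** alpha for p in priorities]
    total = sum(scaled)
    probs = [s / total for s in scaled]

    # w_i = (N * P(i))^(-beta), divided by the largest weight so that w_i <= 1.
    n = len(priorities)
    raw_weights = [(n * p) ** (-beta) for p in probs]
    max_weight = max(raw_weights)
    weights = [w / max_weight for w in raw_weights]
    return probs, weights


if __name__ == "__main__":
    probs, weights = per_probabilities_and_weights([1.0, 4.0])
    print(probs, weights)
```

With `alpha = 0` every transition is sampled uniformly, and with `beta = 1` the weights fully compensate for the non-uniform sampling.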

- gym == 0.17.2

- numpy == 1.19.1

- opencv_contrib_python == 3.4.0.12

- psutil == 5.4.2

- torch == 1.4.0

Install the dependencies with:

    pip3 install -r requirements.txt

The script accepts the following arguments:

    main.py [-h] [--algo ALGO] [--mem_size MEM_SIZE] [--env_name ENV_NAME]
            [--interval INTERVAL] [--do_train] [--train_from_scratch]
            [--do_intro_env]

    Variable parameters based on the configuration of the machine or user's choice

    optional arguments:
      -h, --help            show this help message and exit
      --algo ALGO           The algorithm which is used to train the agent.
      --mem_size MEM_SIZE   The memory size.
      --env_name ENV_NAME   Name of the environment.
      --interval INTERVAL   The interval specifies how often different parameters
                            should be saved and printed, counted by episodes.
      --do_train            The flag determines whether to train the agent or play
                            with it.
      --train_from_scratch  The flag determines whether to train from scratch or [default=True]
                            continue previous tries.
      --do_intro_env        Only introduce the environment then close the program.

- To train the agent with default arguments, run the following command with the `--do_train` flag (you may change the memory capacity and the environment as desired):

      python3 main.py --do_train --algo="rainbow" --mem_size=150000 --env_name="BreakoutNoFrameskip-v4" --interval=100 --train_from_scratch

- To continue training from a previous run, run the following:

      python3 main.py --do_train --algo="rainbow" --mem_size=150000 --env_name="PongNoFrameskip-v4" --interval=100

- The whole training procedure was run on Google Colab and took less than 15 hours, so a machine with a similar configuration should be sufficient. If you need a more powerful free online GPU provider, take a look at paperspace.com.

- Human-level control through deep reinforcement learning, Mnih et al., 2015

- Deep Reinforcement Learning with Double Q-learning, Van Hasselt et al., 2015

- Dueling Network Architectures for Deep Reinforcement Learning, Wang et al., 2015

- Prioritized Experience Replay, Schaul et al., 2015

- A Distributional Perspective on Reinforcement Learning, Bellemare et al., 2017

- Noisy Networks for Exploration, Fortunato et al., 2017

- Rainbow: Combining Improvements in Deep Reinforcement Learning, Hessel et al., 2017

- @Curt-Park for rainbow is all you need.

- @higgsfield for RL-Adventure.

- @wenh123 for NoisyNet-DQN.

- @qfettes for DeepRL-Tutorials.

- @AdrianHsu for breakout-Deep-Q-Network.

- @Kaixhin for Rainbow.

- @Kchu for DeepRL_PyTorch.