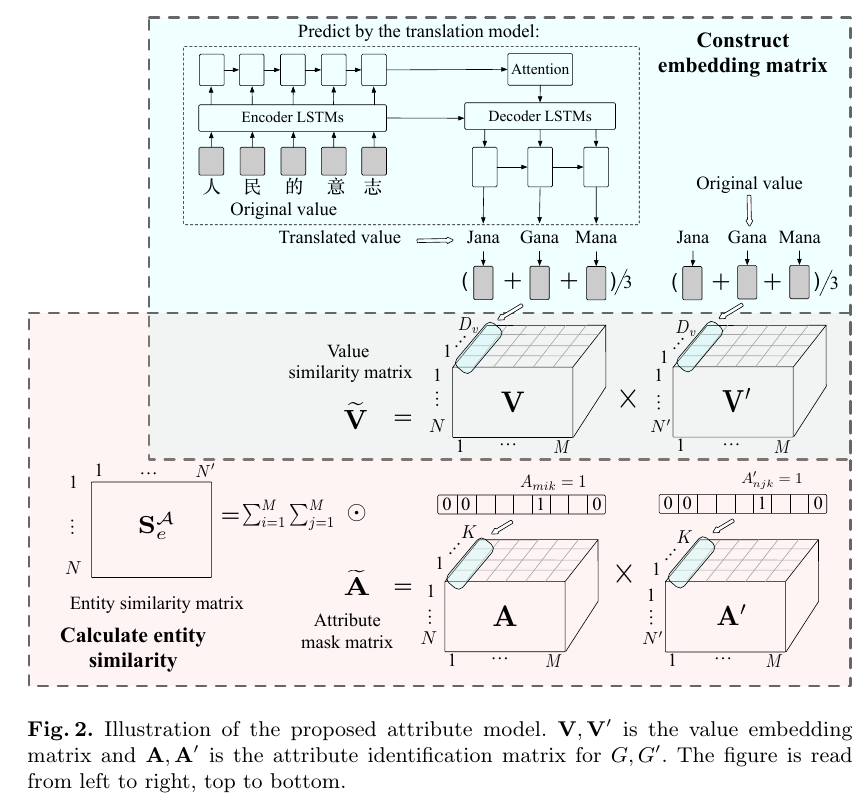

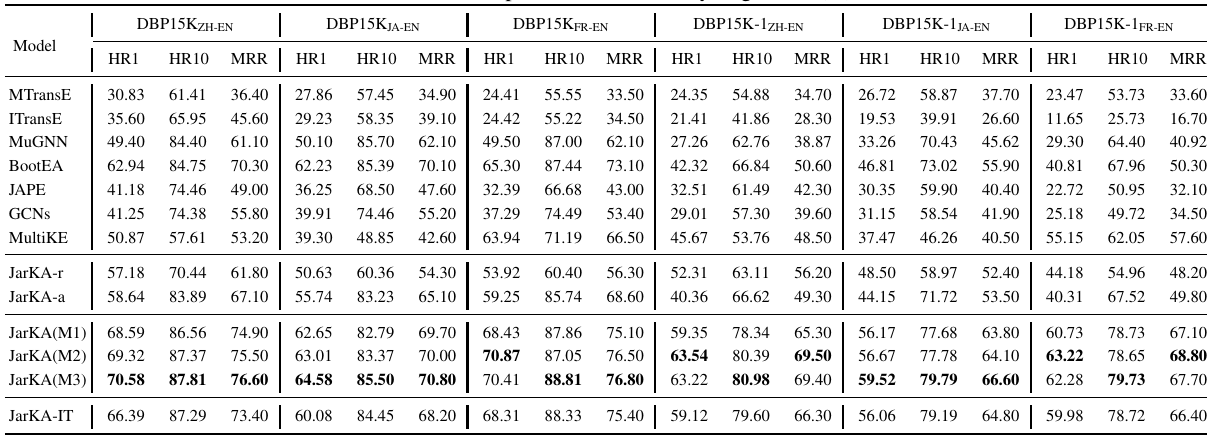

JarKA tackles the attribute heterogeneity problem in cross-lingual knowledge graph alignment with an interaction-based attribute matching model, and further incorporates structure information through a jointly trained framework. To alleviate conflicts between the alignments produced by the two modules, three merge strategies are adopted so that the most confident seeds can be selected.

- Python 3.6+

- PyTorch 1.1.0+

- CPU/GPU

- Jieba

- MeCab

- ...

- Directory dbp_15k: stores three datasets, zh_en, fr_en, and ja_en. You can download them from here (password: r9a7);

- Directory model: contains the JarKA code.

Taking the zh_en dataset as an example, we demonstrate how to run the JarKA model:

- First download the DBP15K dataset, then go to the directory ./dbp15k/zh_en/ and run the following scripts in order to generate the required data files:

- build_vocab_dict.py: builds the vocabulary dict (whole_vocab_split) and the corpus for pretraining the NMT model (zh_en_des);

- filter_att.py: filters the popular attributes, i.e. those that appear at least 50 times across all attribute triplets, and maps each attribute to an id (att2id_fre.p);

- generate_popular_attrs.py: filters and sorts the attributes by popularity and selects the top-20 attribute triplets for each entity (filter_sort_zh/en_lan_att_filter.p).
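The filtering done by filter_att.py and generate_popular_attrs.py can be sketched roughly as follows. This is a minimal illustration, not the repository's code: it assumes the attribute triplets are already in memory as hypothetical (entity, attribute, value) string tuples, and the function names build_att2id and top_k_attrs_per_entity are made up for this example. The thresholds mirror the description above (at least 50 occurrences, top 20 per entity).

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# Hypothetical triplet format (assumption): (entity, attribute, value)
Triplet = Tuple[str, str, str]


def build_att2id(triplets: List[Triplet], min_freq: int = 50) -> Dict[str, int]:
    """Keep attributes seen at least `min_freq` times and give each an id.

    Ids follow decreasing frequency (ties broken alphabetically), so a
    smaller id means a more popular attribute.
    """
    counts = Counter(att for _, att, _ in triplets)
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    popular = [att for att, freq in ranked if freq >= min_freq]
    return {att: idx for idx, att in enumerate(popular)}


def top_k_attrs_per_entity(
    triplets: List[Triplet], att2id: Dict[str, int], k: int = 20
) -> Dict[str, List[Triplet]]:
    """For each entity, keep its k triplets with the most popular attributes."""
    by_entity: Dict[str, List[Triplet]] = defaultdict(list)
    for ent, att, val in triplets:
        if att in att2id:  # drop triplets whose attribute is not popular
            by_entity[ent].append((ent, att, val))
    # Smaller id == more popular, so sorting by id ranks by popularity
    return {
        ent: sorted(ts, key=lambda t: att2id[t[1]])[:k]
        for ent, ts in by_entity.items()
    }
```

Because ids are assigned in order of decreasing frequency, the per-entity ranking can simply sort by id. Ties are broken alphabetically here to keep the ids deterministic; the actual scripts may order ties differently.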

Go to the directory model/ and:

- Copy all the files in ./dbp15k/zh_en/ to ./data/;

- Run translate.py and save the checkpoints;

- Run JarKA:

python jarka_main.py --merge {merge_strategy}

where {merge_strategy} is one of 'multi_view', 'score_based', or 'rank_based'.
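To compare the three merge strategies in one go, a small wrapper like the one below can loop over them. It is a hypothetical convenience script, not part of the repository; it assumes it is run from model/ after the data files and NMT checkpoints above are in place, and it relies only on the --merge flag documented here.

```python
import subprocess
import sys
from typing import List

# The three values accepted by --merge, as listed above
MERGE_STRATEGIES = ["multi_view", "score_based", "rank_based"]


def build_commands(script: str = "jarka_main.py") -> List[List[str]]:
    """One command per merge strategy, using the current Python interpreter."""
    return [[sys.executable, script, "--merge", m] for m in MERGE_STRATEGIES]


def run_all(dry_run: bool = True) -> List[List[str]]:
    """Print each command and, unless dry_run is set, execute it in turn."""
    cmds = build_commands()
    for cmd in cmds:
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError on the first failing run
            subprocess.run(cmd, check=True)
    return cmds
```

Calling run_all() only prints the commands; run_all(dry_run=False) launches them one after another and stops at the first run that exits with an error.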

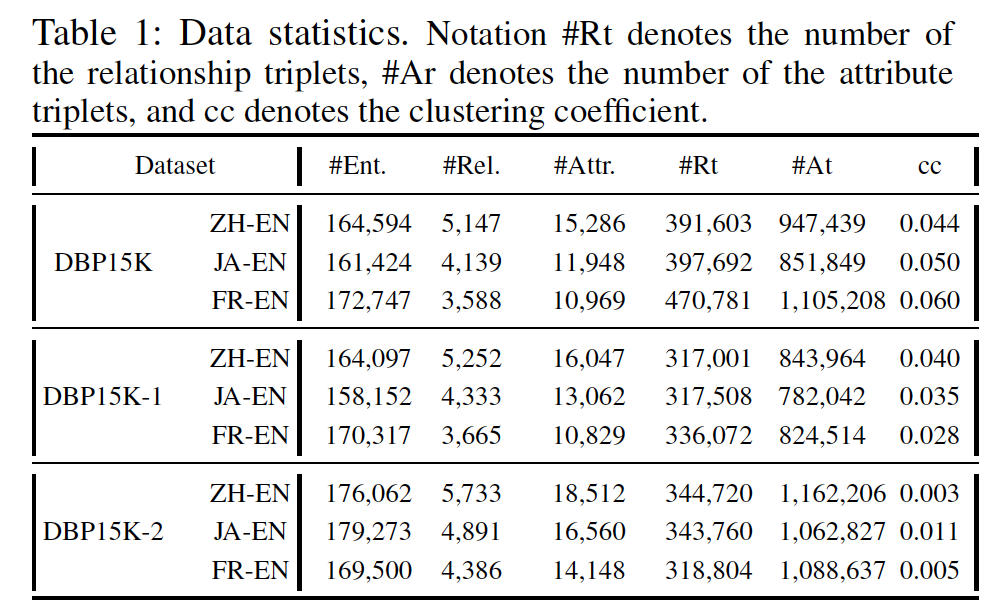

We evaluate the proposed model on three datasets. One is the widely adopted public dataset DBP15K; the other two, DBP15K-1 and DBP15K-2, are modified versions of it. DBP15K-1 loosens the constraint to 2 self-contained relationship triplets, and DBP15K-2 removes the self-contained constraint altogether. As a result, the three datasets differ in how clustered their graphs are. Table 1 shows the statistics of the datasets.

The DBP15K-1 dataset is also available online (password: aclg).

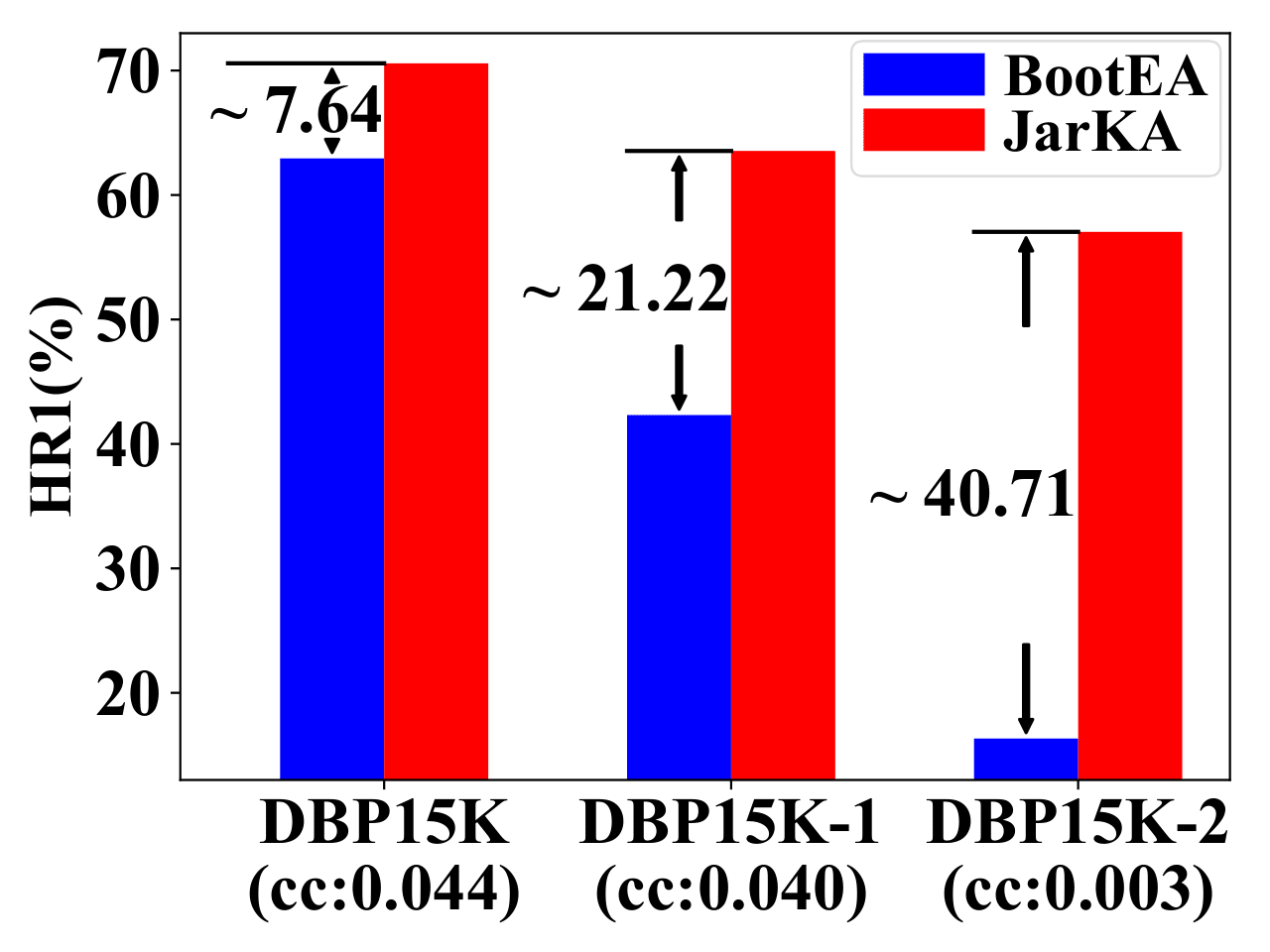

We compare JarKA and BootEA on the three ZH-EN datasets. Both models perform worse as the clustering coefficient (cc) of the dataset decreases, but the performance gap between them widens as cc decreases. This indicates that BootEA is more sensitive than JarKA to the clustering characteristics of the graph, since BootEA models only the structure.
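For reference, a clustering coefficient can be computed from relationship triplets with the sketch below. It assumes cc means the average local clustering coefficient of the entity graph, with relation labels and edge directions ignored; the exact definition used in the paper may differ. The (head, relation, tail) tuple format is likewise an assumption.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, Iterable, Set, Tuple


def average_clustering(triplets: Iterable[Tuple[str, str, str]]) -> float:
    """Average local clustering coefficient of the undirected entity graph.

    Relation labels and edge directions are ignored and self-loops dropped.
    Nodes with fewer than two neighbours contribute 0 to the average.
    """
    adj: Dict[str, Set[str]] = defaultdict(set)
    for head, _rel, tail in triplets:
        if head != tail:
            adj[head].add(tail)
            adj[tail].add(head)
    if not adj:
        return 0.0
    total = 0.0
    for node, nbrs in adj.items():
        d = len(nbrs)
        if d < 2:
            continue
        # Count edges that actually exist among the node's neighbours
        links = sum(1 for u, w in combinations(nbrs, 2) if w in adj[u])
        total += 2.0 * links / (d * (d - 1))
    return total / len(adj)
```

A triangle gives 1.0, while a chain a-b-c gives 0.0: the sparser and less clustered the triplets, the lower the value, which is the property along which the three ZH-EN datasets differ.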

If you find JarKA useful in your research, please cite it as follows:

@inproceedings{chen2020jarka,

title={JarKA: Modeling Attribute Interactions for Cross-lingual Knowledge Alignment},

author={Chen, Bo and Zhang, Jing and Tang, Xiaobin and Chen, Hong and Li, Cuiping},

booktitle={Pacific-Asia Conference on Knowledge Discovery and Data Mining},

pages={845--856},

year={2020},

organization={Springer}

}