Trillian is an implementation of the concepts described in the Verifiable Data Structures white paper, which in turn is an extension and generalisation of the ideas which underpin Certificate Transparency.

Trillian implements a Merkle tree whose contents are served from a data storage layer, to allow scalability to extremely large trees. On top of this Merkle tree, Trillian provides two modes:

- An append-only Log mode, analogous to the original Certificate Transparency logs. In this mode, the Merkle tree is effectively filled up from the left, giving a dense Merkle tree.

- An experimental Map mode that allows transparent storage of arbitrary key:value pairs derived from the contents of a source Log; this is also known as a log-backed map. In this mode, the key's hash is used to designate a particular leaf of a deep Merkle tree – sufficiently deep that filled leaves are vastly outnumbered by unfilled leaves, giving a sparse Merkle tree. (A Trillian Map is an unordered map; it does not allow enumeration of the Map's keys.)
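To make the dense, left-filled tree of Log mode more concrete, here is a minimal sketch of the Merkle Tree Hash as defined for Certificate Transparency logs in RFC 6962. It is an illustration only, not Trillian's implementation, and it holds every leaf in memory rather than reading from a storage layer. The function names `leafHash`, `nodeHash` and `merkleRoot` are made up for this example.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

// leafHash returns SHA-256(0x00 || data), the RFC 6962 leaf hash.
func leafHash(data []byte) [32]byte {
	return sha256.Sum256(append([]byte{0x00}, data...))
}

// nodeHash returns SHA-256(0x01 || left || right), the RFC 6962 interior node hash.
func nodeHash(left, right [32]byte) [32]byte {
	buf := make([]byte, 0, 65)
	buf = append(buf, 0x01)
	buf = append(buf, left[:]...)
	buf = append(buf, right[:]...)
	return sha256.Sum256(buf)
}

// merkleRoot computes the RFC 6962 Merkle Tree Hash over the leaves in order.
func merkleRoot(leaves [][]byte) [32]byte {
	switch n := len(leaves); n {
	case 0:
		return sha256.Sum256(nil) // hash of the empty string
	case 1:
		return leafHash(leaves[0])
	default:
		// k is the largest power of two strictly less than n, so the
		// left subtree is always full: the tree fills up from the left.
		k := 1
		for k*2 < n {
			k *= 2
		}
		return nodeHash(merkleRoot(leaves[:k]), merkleRoot(leaves[k:]))
	}
}

func main() {
	leaves := [][]byte{[]byte("a"), []byte("b"), []byte("c")}
	fmt.Printf("root of 3 leaves: %x\n", merkleRoot(leaves))
}
```

The distinct 0x00 and 0x01 prefixes keep leaf hashes and interior node hashes in separate domains, so a leaf cannot be passed off as an interior node.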

Note that Trillian requires particular applications to provide their own personalities on top of the core transparent data store functionality.

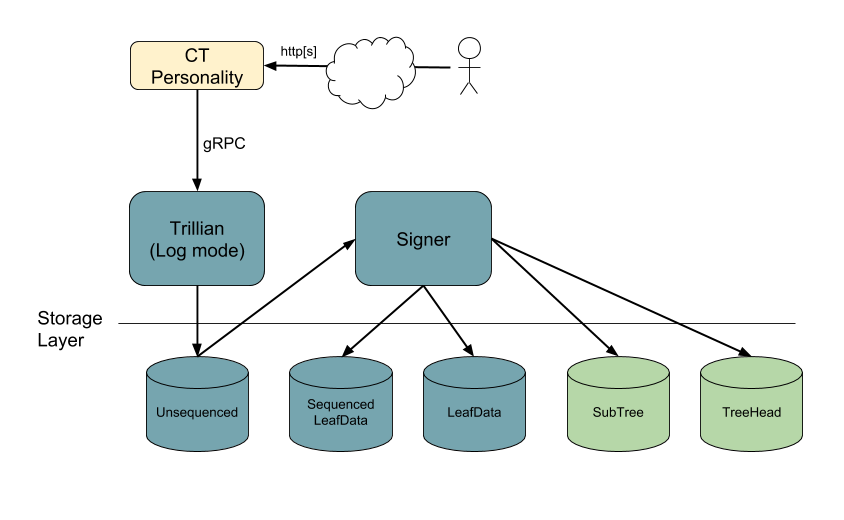

Certificate Transparency (CT) is the best-known and most widely deployed transparency application, and an implementation of CT as a Trillian personality is available in the certificate-transparency-go repo.

Other examples of Trillian personalities are available in the trillian-examples repo.

- Mailing list: https://groups.google.com/forum/#!forum/trillian-transparency

- Slack: https://gtrillian.slack.com/ (invitation)

WARNING: The Trillian codebase is still under development, but the Log mode is now being used in production by several organizations. We will try to avoid any further incompatible code and schema changes but cannot guarantee that they will never be necessary.

The current state of feature implementation is recorded in the Feature implementation matrix.

To build and test Trillian you need:

- Go 1.11 or later.

To run many of the tests (and production deployment) you need:

- MySQL or MariaDB to provide the data storage layer; see the MySQL Setup section.

Note that this repository uses Go modules to manage dependencies; Go will fetch and install them automatically upon build/test.

To fetch the code, dependencies, and build Trillian, run the following:

export GO111MODULE=auto

git clone https://github.com/google/trillian.git

cd trillian

go build ./...

To build and run tests, use:

go test ./...

The repository also includes multi-process integration tests, described in the Integration Tests section below.

To run Trillian's integration tests you need to have an instance of MySQL running and configured to:

- listen on the standard MySQL port 3306 (so mysql --host=127.0.0.1 --port=3306 connects OK)

- not require a password for the root user

You can then set up the expected tables in a test database like so:

./scripts/resetdb.sh

Warning: about to destroy and reset database 'test'

Are you sure? y

> Resetting DB...

> Reset Complete

If you are working with the Trillian Map, you will probably need to increase the MySQL maximum connection count:

% mysql -u root

MySQL> SET GLOBAL max_connections = 1000;

Trillian includes an integration test suite to confirm basic end-to-end functionality, which can be run with:

./integration/integration_test.sh

This runs two multi-process tests:

- A test that starts a Trillian server in Log mode, together with a signer, logs many leaves, and checks they are integrated correctly.

- A test that starts a Trillian server in Map mode, sets various key:value pairs and checks they can be retrieved.

Developers who want to make changes to the Trillian codebase need some additional dependencies and tools, described in the following sections. The Travis configuration for the codebase is also a useful reference for the required tools and scripts, as it may be more up-to-date than this document.

Some of the Trillian Go code is autogenerated from other files:

- gRPC message structures are originally provided as protocol buffer message definitions.

- Some unit tests use mock implementations of interfaces; these are created from the real implementations by GoMock.

- Some enums have string-conversion methods (satisfying the fmt.Stringer interface) created using the stringer tool (go get golang.org/x/tools/cmd/stringer).
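As an illustration of the last item, the sketch below shows by hand the kind of string-conversion method that the stringer tool produces automatically. The `TreeMode` enum and its constants are hypothetical names invented for this example; they are not taken from the Trillian codebase.

```go
package main

import "fmt"

// TreeMode is a hypothetical enum, defined here only for illustration.
type TreeMode int

const (
	ModeLog TreeMode = iota
	ModePreorderedLog
	ModeMap
)

// String makes TreeMode satisfy fmt.Stringer, so the fmt package prints
// the constant's name rather than its underlying integer.
func (m TreeMode) String() string {
	switch m {
	case ModeLog:
		return "ModeLog"
	case ModePreorderedLog:
		return "ModePreorderedLog"
	case ModeMap:
		return "ModeMap"
	default:
		// Unknown values fall back to a "Type(N)" form.
		return fmt.Sprintf("TreeMode(%d)", int(m))
	}
}

func main() {
	fmt.Println(ModeMap)     // calls String() implicitly
	fmt.Println(TreeMode(7)) // takes the default branch
}
```

Generating such methods instead of writing them by hand keeps the names in step with the constants whenever the enum changes.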

Re-generating mock or protobuffer files is only needed if you're changing the original files; if you do, you'll need to install the prerequisites:

- mockgen tool from https://github.com/golang/mock

- stringer tool from https://golang.org/x/tools/cmd/stringer

- protoc, Go support for protoc, grpc-gateway and protoc-gen-doc.

- protocol buffer definitions for standard Google APIs:

git clone https://github.com/googleapis/googleapis.git $GOPATH/src/github.com/googleapis/googleapis

and run the following:

go generate -x ./... # hunts for //go:generate comments and runs them

The Trillian codebase uses go.mod to declare fixed versions of its dependencies.

With Go modules, updating a dependency simply involves running go get:

export GO111MODULE=on

go get package/path # Fetch the latest published version

go get package/path@X.Y.Z # Fetch a specific published version

go get package/path@HEAD # Fetch the latest commit

To update ALL dependencies to the latest version, run go get -u.

Be warned, however, that this may undo any selected versions that resolve issues in other non-module repos.

While running go build and go test, Go will add any ambiguous transitive dependencies to go.mod. To clean these up, run:

go mod tidy

The scripts/presubmit.sh script runs various tools and tests over the codebase.

Install golangci-lint:

go install github.com/golangci/golangci-lint/cmd/golangci-lint

Install prototool:

go install github.com/uber/prototool/cmd/prototool

Run the presubmit script:

./scripts/presubmit.sh

Or run the linters on their own:

golangci-lint run

prototool lint

Trillian is primarily implemented as a gRPC service; this service receives get/set requests over gRPC and retrieves the corresponding Merkle tree data from a separate storage layer (currently using MySQL), ensuring that the cryptographic properties of the tree are preserved along the way.

The Trillian service is multi-tenanted – a single Trillian installation can support multiple Merkle trees in parallel, distinguished by their TreeId – and each tree operates in one of two modes:

- Log mode: an append-only collection of items; this has two sub-modes:

- normal Log mode, where the Trillian service assigns sequence numbers to new tree entries as they arrive

- 'preordered' Log mode, where the unique sequence number for entries in the Merkle tree is externally specified

- Map mode: a collection of key:value pairs.

In either case, Trillian's key transparency property is that cryptographic proofs of inclusion/consistency are available for data items added to the service.
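To show what checking a proof of inclusion involves on the client side, the following is a minimal sketch of audit-path verification for a Log, following the algorithm given in the Certificate Transparency specifications (RFC 6962 hashing, with the verification steps as written out in RFC 9162). It is a sketch under those assumptions rather than Trillian's own client code, and `verifyInclusion` is a name chosen for this example.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

// leafHash returns SHA-256(0x00 || data), the RFC 6962 leaf hash.
func leafHash(data []byte) [32]byte {
	return sha256.Sum256(append([]byte{0x00}, data...))
}

// nodeHash returns SHA-256(0x01 || left || right).
func nodeHash(left, right [32]byte) [32]byte {
	buf := append([]byte{0x01}, left[:]...)
	return sha256.Sum256(append(buf, right[:]...))
}

// verifyInclusion reports whether proof links the given leaf hash, at position
// index in a tree of size leaves, to the expected root hash.
func verifyInclusion(index, size uint64, leaf [32]byte, proof [][32]byte, root [32]byte) bool {
	if index >= size {
		return false
	}
	fn, sn := index, size-1
	r := leaf
	for _, p := range proof {
		if sn == 0 {
			return false // proof is longer than the path to the root
		}
		if fn&1 == 1 || fn == sn {
			// The sibling is on the left.
			r = nodeHash(p, r)
			if fn&1 == 0 {
				// Skip levels where this node has no right sibling.
				for fn&1 == 0 && fn != 0 {
					fn >>= 1
					sn >>= 1
				}
			}
		} else {
			// The sibling is on the right.
			r = nodeHash(r, p)
		}
		fn >>= 1
		sn >>= 1
	}
	return sn == 0 && r == root
}

func main() {
	la, lb, lc := leafHash([]byte("a")), leafHash([]byte("b")), leafHash([]byte("c"))
	root := nodeHash(nodeHash(la, lb), lc)
	// The audit path for leaf 1 ("b") in a 3-leaf tree is [la, lc].
	fmt.Println(verifyInclusion(1, 3, lb, [][32]byte{la, lc}, root))
}
```

The verifier needs only the leaf hash, the proof and a trusted root, which is why a client can check inclusion without downloading the whole log.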

To build a complete transparent application, the Trillian core service needs to be paired with additional code, known as a personality, that provides functionality that is specific to the particular application.

In particular, the personality is responsible for:

- Admission Criteria – ensuring that submissions comply with the overall purpose of the application.

- Canonicalization – ensuring that equivalent versions of the same data get the same canonical identifier, so they can be de-duplicated by the Trillian core service.

- External Interface – providing an API for external users, including any practical constraints (ACLs, load-balancing, DoS protection, etc.)
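The first two responsibilities can be sketched in a few lines. The example below assumes a toy personality whose submissions are short text records; the size limit, the canonical form (lower-cased, whitespace-collapsed) and the function names `admit`, `canonicalize` and `identityHash` are all assumptions made for illustration, not part of Trillian.

```go
package main

import (
	"crypto/sha256"
	"errors"
	"fmt"
	"strings"
)

// maxEntryBytes is a hypothetical size limit for this toy personality.
const maxEntryBytes = 1024

// admit applies the admission criteria: reject empty or oversized submissions.
func admit(raw string) error {
	if strings.TrimSpace(raw) == "" {
		return errors.New("empty submission")
	}
	if len(raw) > maxEntryBytes {
		return errors.New("submission too large")
	}
	return nil
}

// canonicalize maps equivalent submissions to a single form by collapsing
// whitespace and lower-casing.
func canonicalize(raw string) string {
	return strings.ToLower(strings.Join(strings.Fields(raw), " "))
}

// identityHash derives the identifier used for de-duplication from the
// canonical form, so equivalent submissions share one identifier.
func identityHash(raw string) [32]byte {
	return sha256.Sum256([]byte(canonicalize(raw)))
}

func main() {
	for _, s := range []string{"Example.COM", "  example.com ", ""} {
		if err := admit(s); err != nil {
			fmt.Printf("%q rejected: %v\n", s, err)
			continue
		}
		fmt.Printf("%q -> %x\n", s, identityHash(s))
	}
}
```

Here the first two submissions produce the same identifier, so a store that de-duplicates on that identifier would keep only one of them.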

This is described in more detail in a separate document. General design considerations for transparent Log applications are also discussed separately.

When running in Log mode, Trillian provides a gRPC API whose operations are similar to those available for Certificate Transparency logs (cf. RFC 6962). These include:

- GetLatestSignedLogRoot returns information about the current root of the Merkle tree for the log, including the tree size, hash value, timestamp and signature.

- GetLeavesByHash, GetLeavesByIndex and GetLeavesByRange return leaf information for particular leaves, specified either by their hash value or index in the log.

- QueueLeaves requests inclusion of specified items into the log.

  - For a pre-ordered log, AddSequencedLeaves requests the inclusion of specified items into the log at specified places in the tree.

- GetInclusionProof, GetInclusionProofByHash and GetConsistencyProof return inclusion and consistency proof data.
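A consistency proof lets a client that has seen two tree heads confirm that the later tree is an append-only extension of the earlier one. The sketch below follows the verification steps written out in RFC 9162 for RFC 6962-style trees. It is illustrative only: `verifyConsistency` is a name chosen for this example, it is not a Trillian API, and for simplicity it rejects an empty first tree.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

func leafHash(data []byte) [32]byte {
	return sha256.Sum256(append([]byte{0x00}, data...))
}

func nodeHash(left, right [32]byte) [32]byte {
	buf := append([]byte{0x01}, left[:]...)
	return sha256.Sum256(append(buf, right[:]...))
}

// verifyConsistency reports whether proof shows that the tree with size2
// leaves and root2 extends the tree with size1 leaves and root1.
func verifyConsistency(size1, size2 uint64, root1, root2 [32]byte, proof [][32]byte) bool {
	switch {
	case size1 == 0 || size1 > size2:
		return false // simplification: an empty first tree is not handled
	case size1 == size2:
		return len(proof) == 0 && root1 == root2
	}
	path := proof
	if size1&(size1-1) == 0 {
		// size1 is a power of two, so root1 is itself a node of the
		// larger tree and is omitted from the proof; put it back.
		path = append([][32]byte{root1}, proof...)
	}
	if len(path) == 0 {
		return false
	}
	fn, sn := size1-1, size2-1
	for fn&1 == 1 {
		fn >>= 1
		sn >>= 1
	}
	fr, sr := path[0], path[0] // running hashes for the old and new roots
	for _, c := range path[1:] {
		if sn == 0 {
			return false
		}
		if fn&1 == 1 || fn == sn {
			fr = nodeHash(c, fr)
			sr = nodeHash(c, sr)
			if fn&1 == 0 {
				for fn&1 == 0 && fn != 0 {
					fn >>= 1
					sn >>= 1
				}
			}
		} else {
			sr = nodeHash(sr, c)
		}
		fn >>= 1
		sn >>= 1
	}
	return fr == root1 && sr == root2 && sn == 0
}

func main() {
	la, lb, lc := leafHash([]byte("a")), leafHash([]byte("b")), leafHash([]byte("c"))
	root2 := nodeHash(la, lb)
	root3 := nodeHash(root2, lc)
	// Growing from 2 to 3 leaves: the proof is just the hash of the new leaf.
	fmt.Println(verifyConsistency(2, 3, root2, root3, [][32]byte{lc}))
}
```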

In Log mode (whether normal or pre-ordered), Trillian includes an additional Signer component; this component periodically processes pending items and adds them to the Merkle tree, creating a new signed tree head as a result.
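The split between queuing and signing can be pictured with a small in-memory model. The sketch below is an assumption-laden illustration, not how Trillian is implemented: `logSketch`, `queueLeaf`, `integrate` and `treeHead` are hypothetical names, storage is a pair of slices, and the tree head carries no signature.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

// merkleRoot computes the RFC 6962 Merkle Tree Hash over the leaves in order.
func merkleRoot(leaves [][]byte) [32]byte {
	if len(leaves) == 0 {
		return sha256.Sum256(nil)
	}
	if len(leaves) == 1 {
		return sha256.Sum256(append([]byte{0x00}, leaves[0]...))
	}
	k := 1
	for k*2 < len(leaves) {
		k *= 2
	}
	l, r := merkleRoot(leaves[:k]), merkleRoot(leaves[k:])
	return sha256.Sum256(append(append([]byte{0x01}, l[:]...), r[:]...))
}

// treeHead is an unsigned stand-in for a signed tree head.
type treeHead struct {
	Size uint64
	Root [32]byte
}

// logSketch models a normal-mode log: items wait in pending until the
// signer step assigns them sequence numbers.
type logSketch struct {
	pending [][]byte // queued, not yet part of the tree
	leaves  [][]byte // integrated; the slice index is the sequence number
}

// queueLeaf accepts an item for later integration.
func (l *logSketch) queueLeaf(data []byte) {
	l.pending = append(l.pending, data)
}

// integrate plays the Signer's role: it sequences all pending items,
// appends them to the tree and returns the resulting tree head.
func (l *logSketch) integrate() treeHead {
	l.leaves = append(l.leaves, l.pending...)
	l.pending = nil
	return treeHead{Size: uint64(len(l.leaves)), Root: merkleRoot(l.leaves)}
}

func main() {
	l := &logSketch{}
	l.queueLeaf([]byte("a"))
	l.queueLeaf([]byte("b"))
	head := l.integrate()
	fmt.Printf("size=%d root=%x\n", head.Size, head.Root)
}
```

Batching integration this way means a queued item is not provable until the next tree head is produced, which is why callers see a delay between submission and inclusion.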

(Note that each of the components in this diagram can be distributed, for scalability and resilience.)

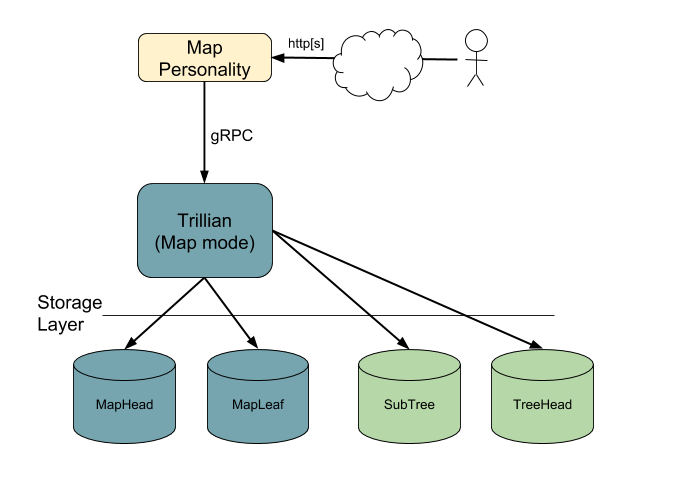

WARNING: Trillian Map mode is experimental and under development; it should not be relied on for a production service (yet).

Trillian in Map mode can be thought of as providing a key:value store for values derived from a data source (normally a Trillian Log), together with cryptographic transparency guarantees for that data.
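As noted in the overview, a Map entry's position in the sparse Merkle tree is determined by the hash of its key. The sketch below illustrates the idea under two assumptions that are not stated in this document: that the hash is SHA-256, giving a 256-level tree, and that bits are consumed most-significant first when walking down from the root. The names `leafIndex`, `goesRight` and `pathPrefix` are invented for the example.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

// leafIndex maps a key to a 256-bit leaf position (assumes SHA-256).
func leafIndex(key []byte) [32]byte {
	return sha256.Sum256(key)
}

// goesRight reports whether the path to the leaf turns right at the given
// depth, where depth 0 is the step directly below the root.
// Assumes most-significant-bit-first ordering.
func goesRight(index [32]byte, depth int) bool {
	return (index[depth/8]>>(7-uint(depth%8)))&1 == 1
}

// pathPrefix renders the first n turns of the path as a string of L and R.
func pathPrefix(index [32]byte, n int) string {
	turns := make([]byte, n)
	for d := 0; d < n; d++ {
		turns[d] = 'L'
		if goesRight(index, d) {
			turns[d] = 'R'
		}
	}
	return string(turns)
}

func main() {
	idx := leafIndex([]byte("example-key"))
	fmt.Printf("leaf index: %x\n", idx)
	fmt.Printf("first 16 turns from the root: %s\n", pathPrefix(idx, 16))
}
```

With 2^256 possible positions and comparatively few keys, almost every leaf is empty, which is what makes the tree sparse. Because positions come from a hash, they also reveal nothing about key order, consistent with the Map being unordered.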

When running in Map mode, Trillian provides a straightforward gRPC API with the following available operations:

- SetLeaves requests inclusion of specified key:value pairs into the Map; these will appear as the next revision of the Map, with a new tree head for that revision.

- GetSignedMapRoot returns information about the current root of the Merkle tree representing the Map, including a revision, hash value, timestamp and signature.

  - A variant allows queries of the tree root at a specified historical revision.

- GetLeaves returns leaf information for a specified set of key values, optionally as of a particular revision. The returned leaf information also includes inclusion proof data.
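The revision semantics of these operations can be modelled with a toy in-memory store. The sketch below is not the Trillian API: `mapSketch`, `setLeaves` and `getLeaves` are hypothetical names, each revision is kept as a full copy of the state, and the Merkle tree, signed roots and inclusion proofs are left out entirely.

```go
package main

import (
	"errors"
	"fmt"
)

// mapSketch keeps one full snapshot of the key:value state per revision.
type mapSketch struct {
	revisions []map[string]string // revisions[i] is the state at revision i
}

// newMapSketch starts with an empty revision 0.
func newMapSketch() *mapSketch {
	return &mapSketch{revisions: []map[string]string{{}}}
}

// setLeaves applies updates on top of the latest state and publishes the
// result as the next revision, returning that revision number.
func (m *mapSketch) setLeaves(updates map[string]string) int {
	latest := m.revisions[len(m.revisions)-1]
	next := make(map[string]string, len(latest)+len(updates))
	for k, v := range latest {
		next[k] = v
	}
	for k, v := range updates {
		next[k] = v
	}
	m.revisions = append(m.revisions, next)
	return len(m.revisions) - 1
}

// getLeaves returns the values held for keys at revision rev.
// A negative rev means the latest revision.
func (m *mapSketch) getLeaves(keys []string, rev int) (map[string]string, error) {
	if rev < 0 {
		rev = len(m.revisions) - 1
	}
	if rev >= len(m.revisions) {
		return nil, errors.New("unknown revision")
	}
	out := make(map[string]string)
	for _, k := range keys {
		if v, ok := m.revisions[rev][k]; ok {
			out[k] = v
		}
	}
	return out, nil
}

func main() {
	m := newMapSketch()
	r1 := m.setLeaves(map[string]string{"alice": "key-1"})
	m.setLeaves(map[string]string{"alice": "key-2"})
	old, _ := m.getLeaves([]string{"alice"}, r1)
	cur, _ := m.getLeaves([]string{"alice"}, -1)
	fmt.Println(old["alice"], cur["alice"])
}
```

Earlier revisions stay readable after later writes, which is what makes it possible to ask for a value "as of" a particular revision.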

As a stand-alone component, it is not possible to reliably monitor or audit a Trillian Map instance; key:value pairs can be modified and subsequently reset without anyone noticing.

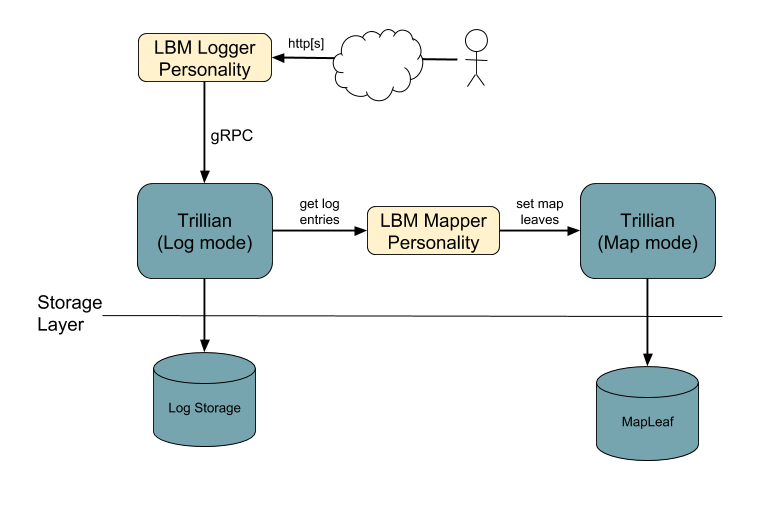

A future plan to deal with this is to create a Logged Map, which combines a Trillian Map with a Trillian Log so that all published revisions of the Map have their signed tree head data appended to the corresponding Log.

The mapping between the source Log data and the key:value data stored in the Map is application-specific, and so is implemented as a Trillian personality. This allows for wide flexibility in the mapping function:

- The simplest example is a Log that holds a journal of pending mutations to the key:value data; the mapping function here simply applies a batch of mutations.

- A more sophisticated example might log entries that are independently of interest (e.g. Web PKI certificates) and apply a more complex mapping function (e.g. map from domain name to public key for the domains covered by a certificate).
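The simpler of the two examples above, a journal of pending mutations, can be sketched as a pure function from Log entries to Map state. The `mutation` type and `applyBatch` function are hypothetical names for illustration; a real personality would read entries from a Log and write the results to a Map rather than use in-memory values.

```go
package main

import "fmt"

// mutation is a hypothetical journal entry: set Key to Value, or delete Key.
type mutation struct {
	Key    string
	Value  string
	Delete bool
}

// applyBatch applies a batch of journal entries, in log order, to a copy of
// state and returns the new state. The input map is left unmodified.
func applyBatch(state map[string]string, batch []mutation) map[string]string {
	next := make(map[string]string, len(state))
	for k, v := range state {
		next[k] = v
	}
	for _, m := range batch {
		if m.Delete {
			delete(next, m.Key)
		} else {
			next[m.Key] = m.Value
		}
	}
	return next
}

func main() {
	journal := []mutation{
		{Key: "a", Value: "1"},
		{Key: "b", Value: "2"},
		{Key: "a", Value: "3"},
		{Key: "b", Delete: true},
	}
	// Applying the journal in two batches gives the same result as one.
	state := applyBatch(map[string]string{}, journal[:2])
	state = applyBatch(state, journal[2:])
	fmt.Println(state) // prints map[a:3]
}
```

Because the function is deterministic and depends only on the journal, anyone replaying the same Log entries arrives at the same key:value data, which is the property an auditor of the mapping relies on.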

The most obvious application for Trillian in Log mode is to provide a Certificate Transparency (RFC 6962) Log. To do this, the CT Log personality needs to include all of the certificate-specific processing – in particular, checking that an item that has been suggested for inclusion is indeed a valid certificate that chains to an accepted root.

One useful application for Trillian in Map mode is to provide a verifiable log-backed map, as described in the Verifiable Data Structures white paper. To do this, a mapper personality would monitor the additions of entries to a Log, potentially external, and would write some kind of corresponding key:value data to a Trillian Map.

Clients of the log-backed map are then able to verify that the entries in the Map they are shown are also seen by anyone auditing the Log for correct operation, which in turn allows the client to trust the key/value pairs returned by the Map.

A concrete example of this might be a log-backed map that monitors a Certificate Transparency Log and builds a corresponding Map from domain names to the set of certificates associated with that domain.

The following table summarizes properties of the data structures laid out in the Verifiable Data Structures white paper. "Efficiently" means that a client can and should perform this validation themselves. "Full audit" means that to validate correctly, a client would need to download the entire dataset; in practice we expect a small number of dedicated auditors to do this, rather than each client.

| | Verifiable Log | Verifiable Map | Verifiable Log-Backed Map |
|---|---|---|---|
| Prove inclusion of value | Yes, efficiently | Yes, efficiently | Yes, efficiently |
| Prove non-inclusion of value | Impractical | Yes, efficiently | Yes, efficiently |
| Retrieve provable value for key | Impractical | Yes, efficiently | Yes, efficiently |
| Retrieve provable current value for key | Impractical | No | Yes, efficiently |
| Prove append-only | Yes, efficiently | No | Yes, efficiently [1] |
| Enumerate all entries | Yes, by full audit | Yes, by full audit | Yes, by full audit |
| Prove correct operation | Yes, efficiently | No | Yes, by full audit |
| Enable detection of split-view | Yes, efficiently | Yes, efficiently | Yes, efficiently |

- [1] -- although full audit is required to verify complete correct operation